
6-Support Vector Regression

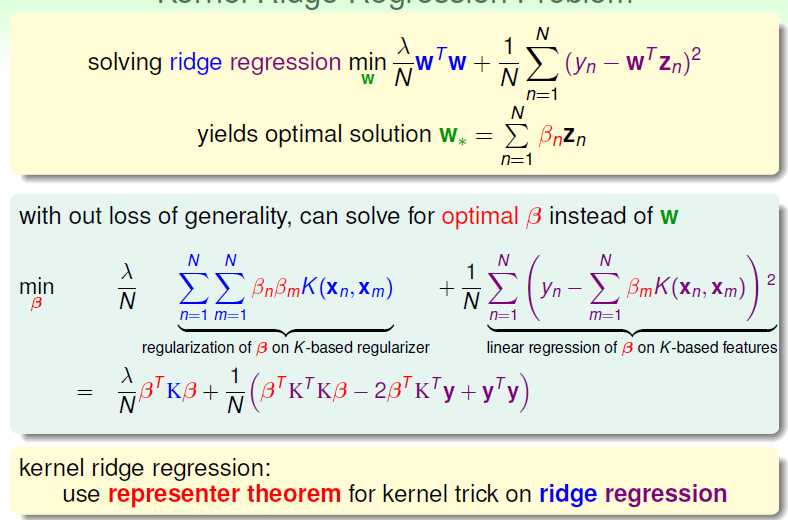

For regression with squared error, we discuss kernel ridge regression.

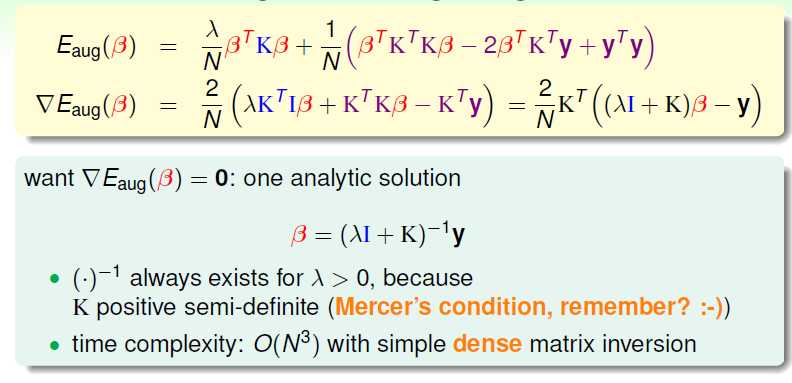

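Now that we know the kernel trick, can we find an analytic solution for kernel ridge regression?

Since we want to find the best βn, we set the gradient of the regularized squared error with respect to β to zero and solve for β.

However, compared to the linear case, this form of βn is expensive when the dataset is large: it requires solving a dense N × N system, which takes O(N^3) time.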
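The closed form can be worked out in a few lines. This is a sketch assuming the usual regularized objective in terms of the kernel matrix K (N × N, symmetric and positive semi-definite), the regularization parameter λ > 0, and the target vector y; these symbols are assumptions based on the standard formulation, since the figures are not reproduced here.

```latex
\begin{aligned}
E_{\text{aug}}(\boldsymbol\beta)
  &= \frac{\lambda}{N}\,\boldsymbol\beta^{T} K \boldsymbol\beta
   + \frac{1}{N}\,\lVert \mathbf{y} - K\boldsymbol\beta \rVert^{2} \\
\nabla E_{\text{aug}}(\boldsymbol\beta)
  &= \frac{2}{N}\, K \bigl( (\lambda I + K)\,\boldsymbol\beta - \mathbf{y} \bigr) \\
\nabla E_{\text{aug}}(\boldsymbol\beta) = 0
  \;&\Longleftarrow\;
  \boldsymbol\beta = (\lambda I + K)^{-1}\,\mathbf{y}
\end{aligned}
```

The inverse exists for any λ > 0 because K is positive semi-definite, so λI + K is positive definite.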

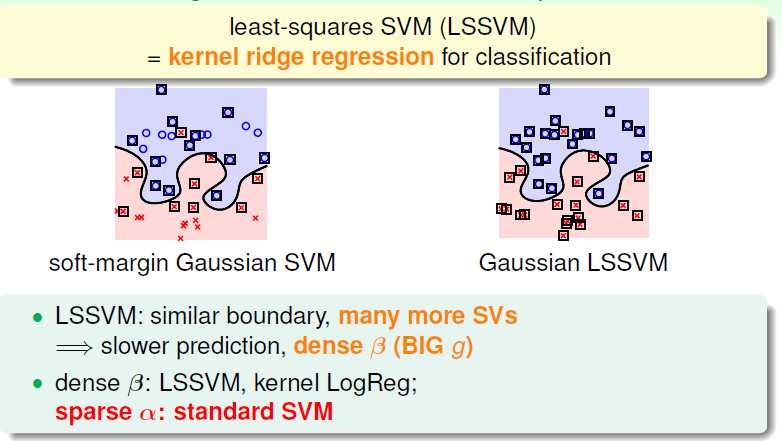

Compared to soft-margin Gaussian SVM, kernel ridge regression has dense βn: almost all of the N coefficients are nonzero.

That means many more SVs and slower prediction, so what we want now is a sparse βn.
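To make the density of βn concrete, here is a minimal NumPy sketch of kernel ridge regression with a Gaussian kernel. The function names, the toy sine data, and the values of `lam` and `gamma` are illustrative assumptions, not taken from the course material.

```python
import numpy as np


def gaussian_kernel(A, B, gamma=1.0):
    """Return K with K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq_dists = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def fit_kernel_ridge(X, y, lam=0.1, gamma=1.0):
    """Solve (lam * I + K) beta = y, the analytic solution for beta."""
    K = gaussian_kernel(X, X, gamma)
    return np.linalg.solve(lam * np.eye(len(X)) + K, y)


def predict_kernel_ridge(X_train, beta, X_new, gamma=1.0):
    """Prediction is a kernel-weighted sum over ALL training points."""
    return gaussian_kernel(X_new, X_train, gamma) @ beta


# Hypothetical toy data: a noisy sine curve.
rng = np.random.default_rng(0)
X = np.linspace(-3.0, 3.0, 40).reshape(-1, 1)
y = np.sin(X[:, 0]) + 0.1 * rng.standard_normal(40)

beta = fit_kernel_ridge(X, y)
n_nonzero = int(np.sum(np.abs(beta) > 1e-8))
print(f"{n_nonzero} of {len(beta)} coefficients are nonzero")
```

Because the residual of each training point equals `lam * beta[n]`, a coefficient is zero only when the fit passes exactly through that point, which almost never happens on noisy data. Every training point therefore takes part in every prediction.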

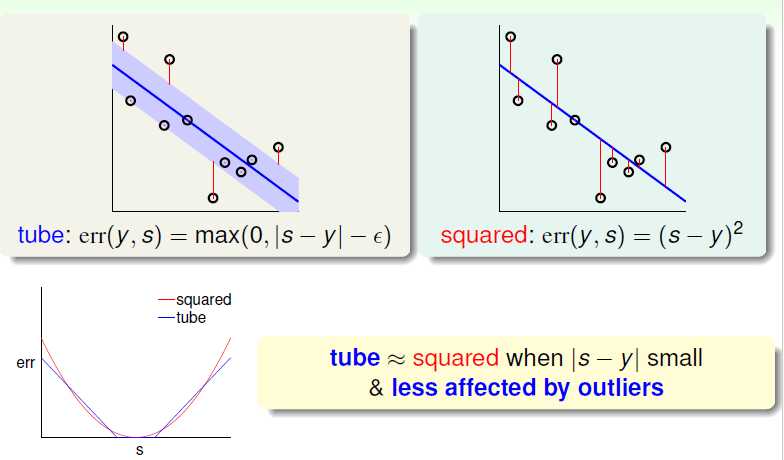

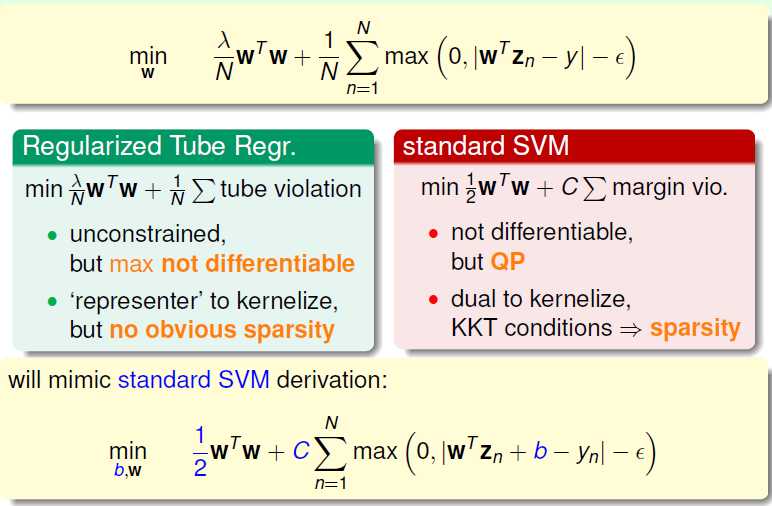

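Thus we add a tube of width ε around the prediction: using the familiar max function, the error becomes max(0, |s - y| - ε), so points with a small |s - y| (inside the tube) contribute no error at all.

The max function is not differentiable at some points, so we need some further transformations as well.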
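As a small illustration of the tube, the sketch below compares the tube error max(0, |s - y| - ε) with the squared error on a few scores. The value ε = 0.5 and the sample scores are arbitrary choices for the example.

```python
def squared_error(y, s):
    """Squared error used by (kernel) ridge regression."""
    return (s - y) ** 2


def tube_error(y, s, epsilon=0.5):
    """Tube error: zero inside the tube, linear outside it."""
    return max(0.0, abs(s - y) - epsilon)


y = 0.0
scores = [-2.0, -0.5, -0.2, 0.0, 0.3, 0.5, 1.5]
for s in scores:
    print(f"s={s:+.1f}  tube={tube_error(y, s):.2f}  squared={squared_error(y, s):.2f}")
```

Scores within ε of the target are not penalized, and outside the tube the penalty grows linearly rather than quadratically, so distant points influence the fit less than under squared error.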

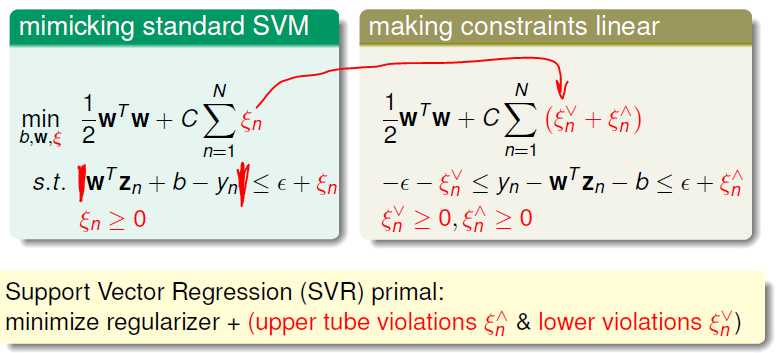

These transformations make the problem look more like standard SVM, so that it can be solved with a QP solver.

w^T z_n + b is the same as w^T z_n + w_0: the bias term w_0 is separated out of w and written as the constant b.

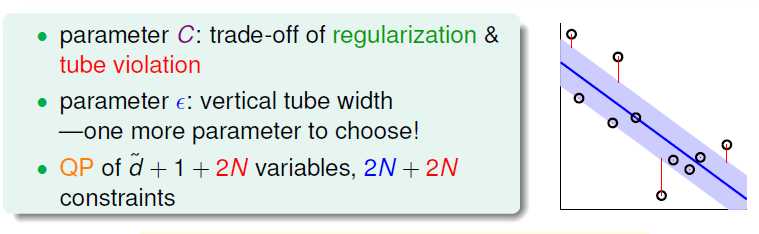

We add slack variables to describe how far each point violates the tube, and use separate slacks for the upper and lower bounds so that the constraints stay linear.

Our next task: SVR primal -> dual
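The transformation can be written out step by step. This is a sketch of the standard SVR primal, assuming the common notation: C for the trade-off parameter, ε for the tube width, and ξ∨ and ξ∧ for the lower and upper violations. The figures may use slightly different symbols.

```latex
% Step 1: regularized tube regression, written with max
\min_{b,\mathbf{w}} \;\; \frac{1}{2}\mathbf{w}^{T}\mathbf{w}
  + C \sum_{n=1}^{N} \max\bigl(0,\; \lvert \mathbf{w}^{T}\mathbf{z}_n + b - y_n \rvert - \epsilon \bigr)

% Step 2: replace max with a slack variable (the constraint is not yet linear)
\min_{b,\mathbf{w},\boldsymbol\xi} \;\; \frac{1}{2}\mathbf{w}^{T}\mathbf{w} + C \sum_{n=1}^{N} \xi_n
\quad \text{s.t.} \quad
\lvert \mathbf{w}^{T}\mathbf{z}_n + b - y_n \rvert \le \epsilon + \xi_n, \;\; \xi_n \ge 0

% Step 3: split the absolute value into a lower and an upper bound (linear constraints)
\min_{b,\mathbf{w},\boldsymbol\xi^{\vee},\boldsymbol\xi^{\wedge}} \;\;
  \frac{1}{2}\mathbf{w}^{T}\mathbf{w} + C \sum_{n=1}^{N} \bigl( \xi_n^{\vee} + \xi_n^{\wedge} \bigr)
\quad \text{s.t.} \quad
-\epsilon - \xi_n^{\vee} \;\le\; y_n - \mathbf{w}^{T}\mathbf{z}_n - b \;\le\; \epsilon + \xi_n^{\wedge},
\;\; \xi_n^{\vee} \ge 0, \;\; \xi_n^{\wedge} \ge 0
```

The last form has a quadratic objective and only linear constraints, which is what a QP solver expects.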

Machine Learning Techniques -6-Support Vector Regression


Original article: http://www.cnblogs.com/windniu/p/4762749.html