标签:

一、AdaBoost的损失函数

AdaBoost优化的是指数损失,即\begin{align*} \mathbb{E}_{\boldsymbol{x} \sim \mathfrak{D}, y}[e^{-y H(\boldsymbol{x})}] = \int_{\boldsymbol{x}} \sum_y e^{-y H(\boldsymbol{x})} p(y|\boldsymbol{x}) p(\boldsymbol{x}) \mbox{d} \boldsymbol{x} \end{align*}记$F(\boldsymbol{x}, H, H‘) = \sum_y e^{-y H(\boldsymbol{x})} p(y|\boldsymbol{x}) p(\boldsymbol{x})$,于是\begin{align*} F_H & = \sum_y e^{-y H(\boldsymbol{x})} (-y) p(y|\boldsymbol{x}) p(\boldsymbol{x}) = - e^{- H(\boldsymbol{x})} p(y=1|\boldsymbol{x}) p(\boldsymbol{x}) + e^{H(\boldsymbol{x})} p(y=-1|\boldsymbol{x}) p(\boldsymbol{x}) \\ F_H‘ & = 0 \end{align*}由Euler-Lagrange方程知该泛函的最优解应满足$e^{- H(\boldsymbol{x})} p(y=1|\boldsymbol{x}) p(\boldsymbol{x}) = e^{H(\boldsymbol{x})} p(y=-1|\boldsymbol{x}) p(\boldsymbol{x})$,即\begin{align*} H(\boldsymbol{x}) = \frac{1}{2} \ln \frac{p(y=1|\boldsymbol{x})}{p(y=-1|\boldsymbol{x})} \end{align*}于是\begin{align*} sign(H(\boldsymbol{x})) = \begin{cases} 1 & p(y=1|\boldsymbol{x}) > p(y=-1|\boldsymbol{x}) \\ -1 & p(y=1|\boldsymbol{x}) < p(y=-1|\boldsymbol{x}) \end{cases} \end{align*}这表明若$H(\boldsymbol{x})$是指数损失的最优解,则取最终分类器为$sign(H(\boldsymbol{x}))$可达到Bayes最优错误率,故取指数损失作为优化目标是合理的。

二、AdaBoost的算法原理

设前$t-1$轮的分类器组合为$H_{t-1}$,那么第$t$轮有\begin{align*} E = \sum_{i=1}^m e^{- y_i H_t (\boldsymbol{x}_i)} = \sum_{i=1}^m e^{- y_i H_{t-1}(\boldsymbol{x}_i) - y_i \alpha_t h_t(\boldsymbol{x}_i)} = \sum_{i=1}^m \mathcal{D}_{t-1}(i) e^{- y_i \alpha_t h_t(\boldsymbol{x}_i)} \end{align*}其中$\mathcal{D}_{t-1}(i) = e^{- y_i H_{t-1}(\boldsymbol{x}_i)}$可看作第$t$轮样本$(\boldsymbol{x}_i, y_i)$的权重,进一步化简有\begin{align*} E & = e^{- \alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i = h_t(\boldsymbol{x}_i)} + e^{\alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)} \\ & = e^{- \alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) - e^{- \alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)} + e^{\alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)} \\ & = (e^{\alpha_t} - e^{- \alpha_t}) \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)} + e^{- \alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) \end{align*}注意此时$E$是关于$\alpha_t$和$h_t$的函数,其中第二项与$h_t$无关,故\begin{align*} h_t = \mathop{argmin}_{h} \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h(\boldsymbol{x}_i)} \end{align*}即第$t$轮选取的基分类器应该最小化加权错误率。此外易知有\begin{align*} \frac{\partial E}{\partial \alpha_t} = (e^{\alpha_t} + e^{- \alpha_t}) \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)} - e^{- \alpha_t} \sum_{i=1}^m \mathcal{D}_{t-1}(i) \end{align*}令其为零可得\begin{align*} e^{2 \alpha_t} = \frac{\sum_{i=1}^m \mathcal{D}_{t-1}(i)}{\sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)}} - 1 = \frac{1 - \sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)}/\sum_{i=1}^m \mathcal{D}_{t-1}(i)}{\sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)}/\sum_{i=1}^m \mathcal{D}_{t-1}(i)} \Longrightarrow \alpha_t = \frac{1}{2} \ln \frac{1 - \epsilon_t}{\epsilon_t} \end{align*}其中\begin{align} \label{eq: epsilon} \epsilon_t = \frac{\sum_{i=1}^m \mathcal{D}_{t-1}(i) I_{y_i \neq h_t(\boldsymbol{x}_i)}}{\sum_{i=1}^m \mathcal{D}_{t-1}(i)} \end{align}

有了$\alpha_t$和$h_t$就可以计算下一轮所有样本的权重\begin{align*} \mathcal{D}_{t}(i) = e^{- y_i H_t(\boldsymbol{x}_i)} = \mathcal{D}_{t-1}(i) e^{- y_i \alpha_t h_t(\boldsymbol{x}_i)} \end{align*}注意将权重进行线性拉升不会影响$\epsilon_t$的值,故令\begin{align*} \mathcal{D}‘_{t}(i) = \frac{\mathcal{D}_{t}(i)}{\sum_{i=1}^m \mathcal{D}_{t}(i)} \end{align*}即每轮结束后将权重归一化为一个概率分布,这样(\ref{eq: epsilon})式中的分母为1,$\epsilon_t$就是加权错误率。

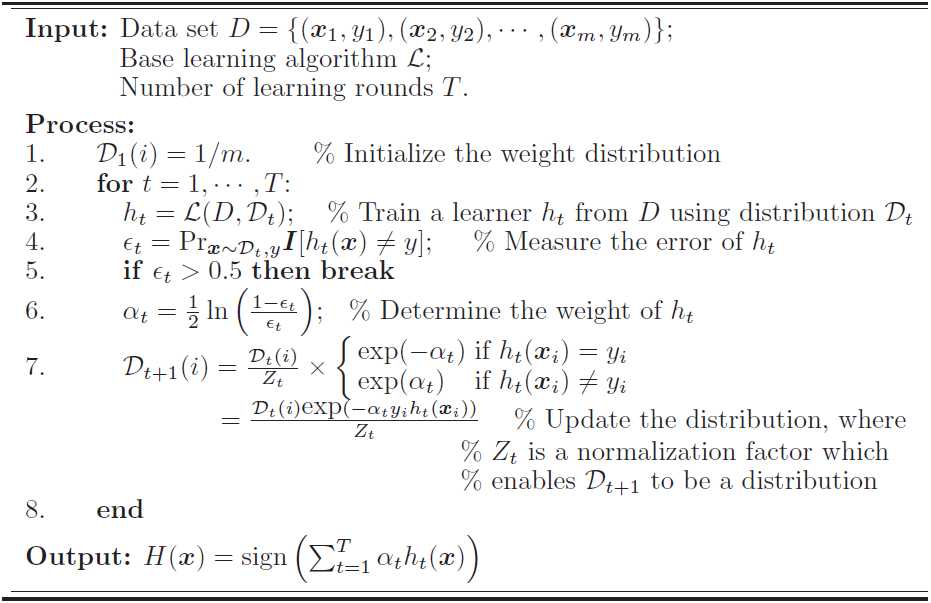

算法如下图所示:

标签:

原文地址:http://www.cnblogs.com/murongxixi/p/4766155.html