
The goal is to analyze audio and display the result graphically.

Approach:

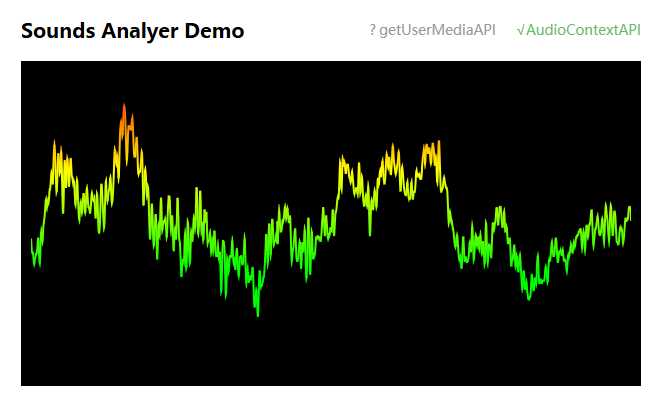

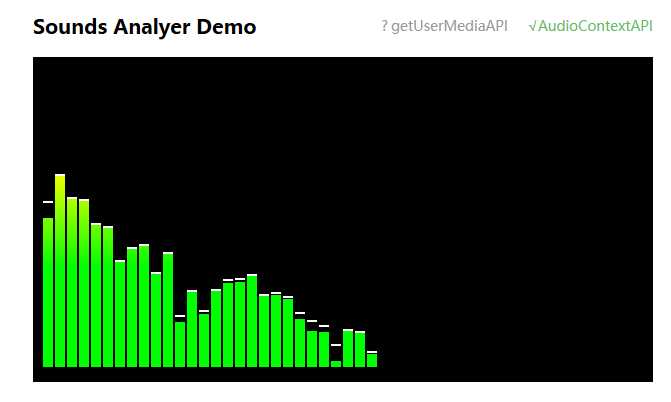

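1. Think back to the music players of years past, such as XX静听. They generally offered two kinds of visualization: bars and a waveform.

2. Turning analyzed data into an image is a routine job for canvas. I have used canvas before to analyze image data, and it handles filters and distortion well, so the visualization here goes to canvas too.

3. Since we are analyzing audio, the audio first has to be converted into data. On the HTML side, the relevant audio APIs are the audio tag and, for microphone access, getUserMedia. How do I know about these APIs? All I can say is: look them up on sites such as MDN and W3C...

First we need the audio data. We obtain it in two ways here: from an audio file stream and from the microphone.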

1. API compatibility

    // Normalize the vendor-prefixed names onto the standard ones.
    window.AudioContext = window.AudioContext || window.webkitAudioContext || window.mozAudioContext;
    window.requestAnimationFrame = window.requestAnimationFrame || window.webkitRequestAnimationFrame;

    var audioCtx;
    try {
        audioCtx = new AudioContext();
        console.log('Browser supports AudioContext');
    } catch (e) {
        console.log('Browser does not support AudioContext', e);
    }

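2. Getting microphone data

    // navigator.getUserMedia is the legacy callback form; older browsers exposed it under a prefix.
    navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

    // Start listening
    if (navigator.getUserMedia) {
        console.log('Browser supports getUserMedia');
        apiMedia.className = "checked";
        navigator.getUserMedia(
            // We only request microphone data; set video to true to capture the camera as well
            { audio: true },
            onSccess,
            onErr
        );
    } else {
        console.log('Browser does not support getUserMedia');
        apiMedia.className = "false";
    }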
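Current browsers expose microphone access through the promise-based navigator.mediaDevices.getUserMedia instead of the callback form used above. Here is a minimal sketch of the same request in that style. requestMicrophone, onSuccess and onError are hypothetical names chosen for illustration and are not part of the original code.

```javascript
// Sketch only: request the microphone through the promise-based API.
// onSuccess receives the MediaStream, onError receives the failure reason.
function requestMicrophone(onSuccess, onError) {
  var hasModernApi = typeof navigator !== 'undefined' &&
    navigator.mediaDevices &&
    typeof navigator.mediaDevices.getUserMedia === 'function';

  if (!hasModernApi) {
    // No promise-based API here (old browser or a non-browser runtime).
    onError(new Error('navigator.mediaDevices.getUserMedia is not available'));
    return false;
  }

  // Audio only; add video: true to request the camera as well.
  navigator.mediaDevices.getUserMedia({ audio: true }).then(onSuccess, onError);
  return true;
}
```

In the page above, the call would look something like requestMicrophone(onSccess, onErr), keeping the original callbacks unchanged.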

The onSccess callback receives the stream and hooks it into the analysis chain:

    // Success callback
    function onSccess(stream) {
        // Feed the microphone stream into the audio graph
        source = audioCtx.createMediaStreamSource(stream);
        source.connect(analyser);
        analyser.connect(distortion);
        distortion.connect(biquadFilter);
        biquadFilter.connect(convolver);
        convolver.connect(gainNode);
        gainNode.connect(audioCtx.destination);
        visualize(); // analyze the audio
    }
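The snippets in this post use analyser, distortion, biquadFilter, convolver and gainNode without showing where they come from. Here is a rough sketch of how they might be created. It assumes that distortion is a WaveShaperNode and that the other names match the node types they suggest, which is a guess based on the variable names. createNodes is a hypothetical helper.

```javascript
// Sketch only: build the nodes that the connect() chain expects.
// The mapping from variable name to node type is an assumption.
function createNodes(audioCtx) {
  return {
    analyser: audioCtx.createAnalyser(),         // exposes time/frequency data
    distortion: audioCtx.createWaveShaper(),     // assumed: wave-shaping distortion
    biquadFilter: audioCtx.createBiquadFilter(), // simple tone filter
    convolver: audioCtx.createConvolver(),       // reverb-style effect
    gainNode: audioCtx.createGain()              // output volume
  };
}
```

With something like var nodes = createNodes(audioCtx), the chain above would read nodes.analyser, nodes.distortion, and so on. Only the analyser is strictly needed for the visualization.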

The file source works the same way:

    if (mediaSetting == "file") {
        loadFile();
        // Get the data source
        source = audioCtx.createMediaElementSource(audio);
        // Connect the nodes
        source.connect(analyser);
        analyser.connect(distortion);
        distortion.connect(gainNode);
        gainNode.connect(audioCtx.destination);
    }

    visualize(); // analyze the audio

Now that we have the data, the rest is up to canvas.

    // Waveform 1
    if (visualSetting == "wave1") {
        analyser.fftSize = 2048;
        var bufferLength = analyser.fftSize;
        console.log(bufferLength);
        var dataArray = new Uint8Array(bufferLength);
        canvasCtx.clearRect(0, 0, WIDTH, HEIGHT);
        draw = function() {
            drawVisual = requestAnimationFrame(draw);
            // Time-domain samples as bytes; 128 is the zero line
            analyser.getByteTimeDomainData(dataArray);
            canvasCtx.fillStyle = '#000';
            canvasCtx.fillRect(0, 0, WIDTH, HEIGHT);
            canvasCtx.lineWidth = 2;
            canvasCtx.strokeStyle = '#4aeb46';
            canvasCtx.beginPath();
            var sliceWidth = WIDTH * 1.0 / bufferLength;
            var x = 0;
            for (var i = 0; i < bufferLength; i++) {
                var v = dataArray[i] / 128.0;
                var y = v * HEIGHT / 2;
                if (i === 0) {
                    canvasCtx.moveTo(x, y);
                } else {
                    canvasCtx.lineTo(x, y);
                }
                x += sliceWidth;
            }
            canvasCtx.lineTo(canvas.width, canvas.height / 2);
            canvasCtx.stroke();
        };
        draw();
    }

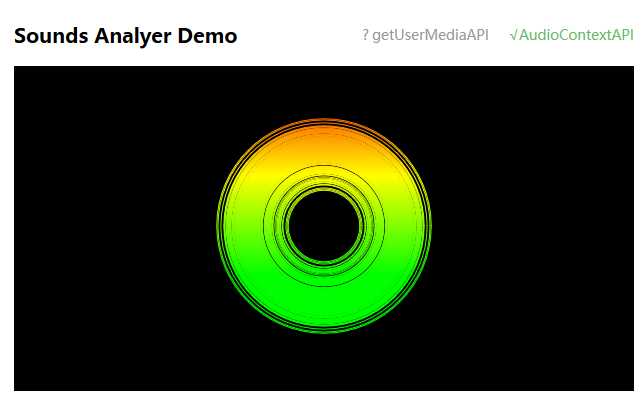
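The waveform branch comes down to one mapping: each byte from getByteTimeDomainData (0 to 255, with 128 as silence) becomes a y coordinate, and the samples are spread evenly across the canvas width. Here is a small sketch of that mapping pulled out as a pure function so it can be checked without a canvas. wavePoints is a hypothetical helper name, not something from the original code.

```javascript
// Sketch only: convert time-domain bytes into canvas points.
// A sample of 128 (silence) lands on the vertical middle of the canvas.
function wavePoints(dataArray, width, height) {
  var sliceWidth = width / dataArray.length;
  var points = [];
  for (var i = 0; i < dataArray.length; i++) {
    var v = dataArray[i] / 128.0; // 0..~2, with 1 meaning silence
    points.push({ x: i * sliceWidth, y: v * height / 2 });
  }
  return points;
}

// Example: four samples on a 4x200 canvas.
var demoPoints = wavePoints(new Uint8Array([128, 0, 255, 128]), 4, 200);
console.log(demoPoints[0].y); // silence maps to the middle row
```

The draw loop would then call moveTo for the first point and lineTo for the rest, as in the original.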

    // Circles
    else if (visualSetting == "circle") {
        // analyser.fftSize = 1024;
        // var bufferLength = analyser.fftSize;
        // var dataArray = new Uint8Array(bufferLength);
        analyser.fftSize = 128;
        var frequencyData = new Uint8Array(analyser.frequencyBinCount);
        var count = analyser.frequencyBinCount;
        var circles = [];
        var circleMaxWidth = (HEIGHT * 0.66) >> 0;
        canvasCtx.clearRect(0, 0, WIDTH, HEIGHT);
        canvasCtx.lineWidth = 1;
        for (var i = 0; i < count; i++) {
            circles.push(i / count * circleMaxWidth);
        }
        draw = function() {
            canvasCtx.clearRect(0, 0, WIDTH, HEIGHT);
            analyser.getByteFrequencyData(frequencyData);
            drawVisual = requestAnimationFrame(draw);
            for (var i = 0; i < circles.length; i++) {
                var v = frequencyData[i] / 128.0;
                var y = v * HEIGHT / 2;
                canvasCtx.beginPath();
                // arc(x, y, radius, startAngle, endAngle, counterclockwise):
                // sweep from 0 to 2π so a full circle is drawn
                canvasCtx.arc(WIDTH / 2, HEIGHT / 2, y / 2, 0, Math.PI * 2, false);
                canvasCtx.stroke();
            }
        };
        draw();
    }
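For reference, getByteFrequencyData fills one byte per frequency bin, and frequencyBinCount is half of fftSize, so fftSize = 128 gives 64 values. The bins are spaced linearly from 0 up to half the sample rate. Here is a small sketch of that relationship. binFrequency is a hypothetical helper, and 44100 is only an example sample rate (the real one is audioCtx.sampleRate).

```javascript
// Sketch only: the frequency (in Hz) at the lower edge of bin `index`.
function binFrequency(index, sampleRate, fftSize) {
  return index * sampleRate / fftSize;
}

// Example: at 44100 Hz with fftSize = 128, each bin is about 344.5 Hz wide.
var binWidthHz = binFrequency(1, 44100, 128);
console.log(binWidthHz);
```

A small fftSize such as 128 therefore gives coarse bins, which is fine for a handful of circles but too rough for reading individual notes.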

    // Bars
    else if (visualSetting == "bar") {
        analyser.fftSize = 256;
        var bufferLength = analyser.frequencyBinCount;
        console.log(bufferLength);
        var dataArray = new Uint8Array(bufferLength);
        canvasCtx.clearRect(0, 0, WIDTH, HEIGHT);
        var gradient = canvasCtx.createLinearGradient(0, 0, 0, 200);
        gradient.addColorStop(1, '#0f0');
        gradient.addColorStop(0.5, '#ff0');
        gradient.addColorStop(0, '#f00');
        var barWidth = 10;
        var gap = 2; // spacing between bars
        var capHeight = 2; // height of the cap
        var capStyle = '#fff';
        var barNum = Math.floor(WIDTH / (barWidth + gap)); // number of bars
        var capYPositionArray = [];
        // Sample every step-th bin; at least 1 so the bars do not all read bin 0
        var step = Math.max(1, Math.floor(dataArray.length / barNum));
        draw = function() {
            drawVisual = requestAnimationFrame(draw);
            analyser.getByteFrequencyData(dataArray);
            canvasCtx.clearRect(0, 0, WIDTH, HEIGHT);
            for (var i = 0; i < barNum; i++) {
                var value = dataArray[i * step] || 0; // 0 if the index runs past the last bin
                var x = i * (barWidth + gap);
                if (capYPositionArray.length < barNum) {
                    capYPositionArray.push(value);
                }
                canvasCtx.fillStyle = capStyle;
                // Cap on top of the bar
                if (value < capYPositionArray[i]) {
                    canvasCtx.fillRect(x, HEIGHT - (--capYPositionArray[i]), barWidth, capHeight);
                } else {
                    canvasCtx.fillRect(x, HEIGHT - value, barWidth, capHeight);
                    capYPositionArray[i] = value;
                }
                canvasCtx.fillStyle = gradient; // gradient fill
                canvasCtx.fillRect(x, HEIGHT - value + capHeight, barWidth, HEIGHT - 2); // draw the bar
            }
        };
        draw();
    }
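The little white cap above each bar follows a simple rule: when the bar rises to or above the cap, the cap snaps to the bar's height, and otherwise the cap falls by one pixel per frame. Here is a sketch of that rule as a standalone function. nextCapHeight is a hypothetical name used only for illustration.

```javascript
// Sketch only: compute the cap height for the next frame.
// previousCap and value are both heights in pixels (0..255).
function nextCapHeight(previousCap, value) {
  if (value < previousCap) {
    return previousCap - 1; // bar dropped: let the cap fall slowly
  }
  return value;             // bar caught up: the cap rides on top of it
}

// Example: a bar that jumps to 100 and then goes silent.
var cap = nextCapHeight(0, 100); // snaps up to 100
cap = nextCapHeight(cap, 0);     // falls to 99
cap = nextCapHeight(cap, 0);     // falls to 98
console.log(cap);
```

This is what gives the caps their "floating down" look: the bars follow the audio instantly, while the caps lag behind.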

Full code: GitHub

HTML5 getUserMedia/AudioContext: building an audio spectrum visualization


Original article: http://www.cnblogs.com/cuoreqzt/p/4819140.html