标签:

1)首先创建WordCount1023文件夹,然后在此目录下使用编辑器,例如vim编写WordCount源文件,并保存为WordCount.java文件

1 /** 2 * Licensed under the Apache License, Version 2.0 (the "License"); 3 * you may not use this file except in compliance with the License. 4 * You may obtain a copy of the License at 5 * 6 * http://www.apache.org/licenses/LICENSE-2.0 7 * 8 * Unless required by applicable law or agreed to in writing, software 9 * distributed under the License is distributed on an "AS IS" BASIS, 10 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 11 * See the License for the specific language governing permissions and 12 * limitations under the License. 13 */ 14 15 16 import java.io.IOException; 17 import java.util.StringTokenizer; 18 19 import org.apache.hadoop.conf.Configuration; 20 import org.apache.hadoop.fs.Path; 21 import org.apache.hadoop.io.IntWritable; 22 import org.apache.hadoop.io.Text; 23 import org.apache.hadoop.fs.FileSystem; 24 import org.apache.hadoop.mapred.JobConf; 25 import org.apache.hadoop.mapreduce.Job; 26 import org.apache.hadoop.mapreduce.Mapper; 27 import org.apache.hadoop.mapreduce.Reducer; 28 import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; 29 import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; 30 import org.apache.hadoop.util.GenericOptionsParser; 31 32 public class WordCount { 33 34 public static class TokenizerMapper 35 extends Mapper<Object, Text, Text, IntWritable>{ 36 37 private final static IntWritable one = new IntWritable(1); 38 private Text word = new Text(); 39 40 public void map(Object key, Text value, Context context 41 ) throws IOException, InterruptedException { 42 StringTokenizer itr = new StringTokenizer(value.toString()); 43 while (itr.hasMoreTokens()) { 44 word.set(itr.nextToken()); 45 context.write(word, one); 46 } 47 } 48 } 49 50 public static class IntSumReducer 51 extends Reducer<Text,IntWritable,Text,IntWritable> { 52 private IntWritable result = new IntWritable(); 53 54 public void reduce(Text key, Iterable<IntWritable> values, 55 Context context 56 ) throws IOException, InterruptedException { 57 int sum = 0; 58 for (IntWritable val : values) { 59 sum += val.get(); 60 } 61 result.set(sum); 62 context.write(key, result); 63 } 64 } 65 66 public static void main(String[] args) throws Exception { 67 Configuration conf = new Configuration(); 68 //JobConf conf=new JobConf(); 69 // 70 //conf.setJar("org.apache.hadoop.examples.WordCount.jar"); 71 // conf.set("fs.default.name", "hdfs://Master:9000/"); 72 //conf.set("hadoop.job.user","hadoop"); 73 //指定jobtracker的ip和端口号,master在/etc/hosts中可以配置 74 // conf.set("mapred.job.tracker","Master:9001"); 75 String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs(); 76 if (otherArgs.length != 2) { 77 System.err.println("Usage: wordcount <in> <out>"); 78 System.exit(2); 79 } 80 81 FileSystem hdfs =FileSystem.get(conf); 82 Path findf=new Path(otherArgs[1]); 83 boolean isExists=hdfs.exists(findf); 84 System.out.println("exit?"+isExists); 85 if(isExists) 86 { 87 hdfs.delete(findf, true); 88 System.out.println("delete output"); 89 90 } 91 92 93 Job job = new Job(conf, "word count"); 94 95 job.setJarByClass(WordCount.class); 96 job.setMapperClass(TokenizerMapper.class); 97 job.setCombinerClass(IntSumReducer.class); 98 job.setReducerClass(IntSumReducer.class); 99 job.setOutputKeyClass(Text.class); 100 job.setOutputValueClass(IntWritable.class); 101 FileInputFormat.addInputPath(job, new Path(otherArgs[0])); 102 FileOutputFormat.setOutputPath(job, new Path(otherArgs[1])); 103 System.exit(job.waitForCompletion(true) ? 0 : 1); 104 } 105 }

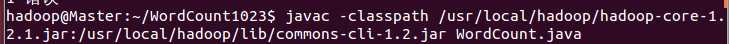

2)然后在WordCount1023目录下使用javac编译java源文件。

使用classpath添加源程序编译所需要的hadoop的两个jar包,然后是待编译的源程序的文件名。

编译成功之后产生三个class文件:

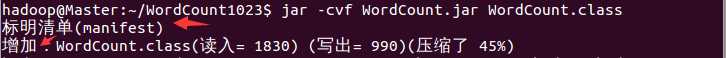

jar文件是一种压缩文件,可以将若干java的class文件压缩到一个jar文件中,如下只是将WordCount.class文件压缩到一个jar文件中。

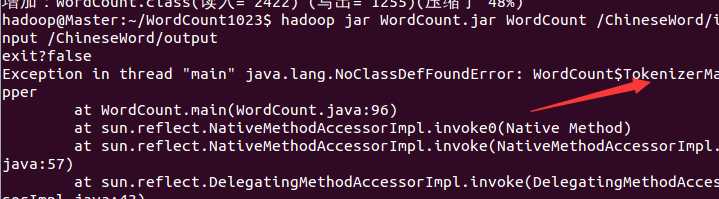

然后将这个jar包提交到hadoop集群,运行出错:

错误提示:每天发现已经定义的类:即是WordCount的内部类TokenizerMapper。因为没有把这个类打到jar包内呀~~

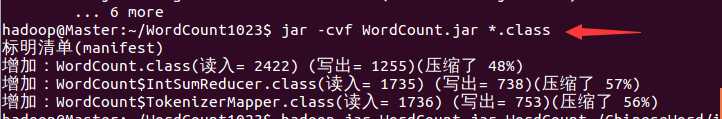

重新打jar包:

使用*.class表示把所有以.class为后缀的打成一个jar包(其实也就是那三个class文件)。

可以通过表明清单(manifest)看到打入jar包的class文件。

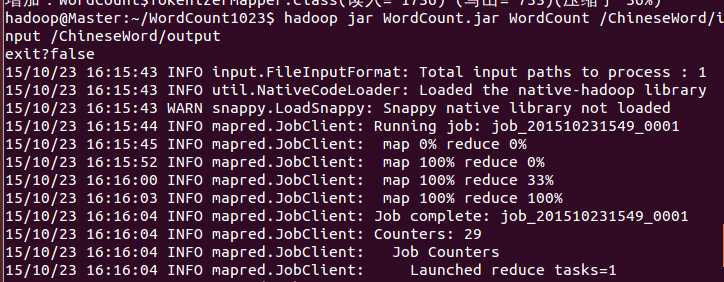

再次运行就成功了:

、

、

3)

hadoop程序当输出文件存在的时候会报错,所以本程序在内部检测输出文件是否存在,存在的话就删除。有三行代码需要详细解释。

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

从命令行读取参数,命令行就是像hadoop提交作业使用的命令行。args读取的就是命令行末尾的数据记得输入路径和存放结果的输出路径,然后将其存放在字符串数组otherArgs中。

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

otherArgs[0]就是表示数据集输入路径的字符换。

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

otherArgs[1]就是表示结果输出路径的字符串。

联想到了eclipse中配置参数一项中配置输入输出路径那里,就明白了为什么eclipse不使用命令行也可以直接运行hadoop程序了。

使用命令行编译、打包、运行WordCount--不用eclipse

标签:

原文地址:http://www.cnblogs.com/lz3018/p/4904902.html