
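When I used to talk with people about my work at Sungard, I always got the same pushback: "You're still using EJB? That's way too old." "It's been the Spring era for ages." I always shot back: do you really understand EJB? Do you know how much easier clustered deployment of a distributed application is with EJB than with Spring? Stop tossing around IoC and AOP as shallow buzzwords; the EJB2 days are long gone. EJB3 dependency injection is easy too, and its only limitation is in resources not managed by the Java EE container (there you need Java EE 6 CDI). AOP? EJB has interceptors. Transaction management? EJB CMT is simpler. OpenSessionInViewFilter? Hmm, is that really a good practice? And it isn't hard to write one yourself. EJB 3.1 has a timer service that is no weaker than Quartz, singleton beans, asynchronous beans and more; do you know all of these features? OK, enough ranting. I'm not putting Spring down, and I admit that Spring's ecosystem is more widely accepted and evolves faster. I just think that before you dismiss a technology, you should ask yourself: do I really understand every aspect of it?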

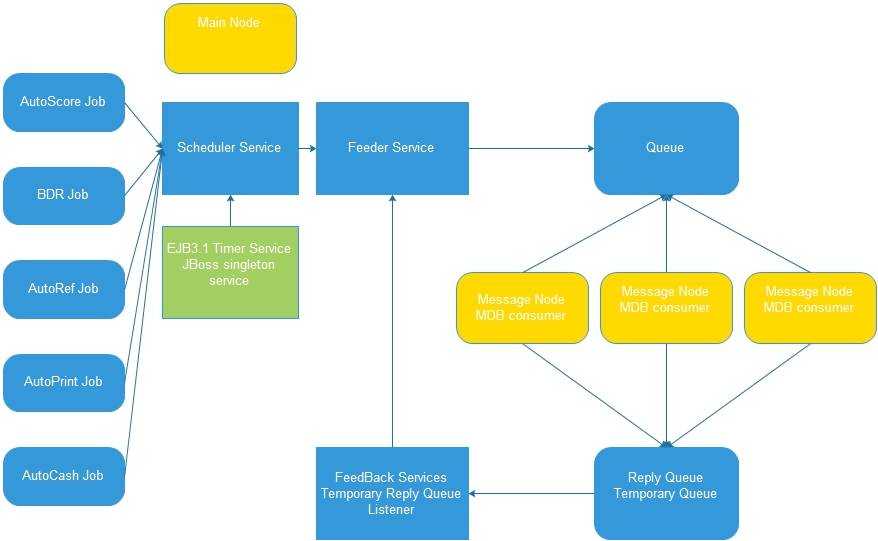

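Consider the following scenario: our distributed system has 8 nodes. One of them acts as the primary node: it starts a scheduled task and then publishes the data to be processed. All 8 nodes must pull data from the data pool concurrently, and an EJB timer plus an MDB cluster makes this easy. The architecture looks roughly like this (the diagram is borrowed from one of my presentations: think of the left half as the primary node, and the right half as the MDB cluster pulling data from the data pool and consuming it concurrently).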

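On the left side, we create a timer service that sends the data to a queue. At the same time, we create a temporary reply queue, set it as the replyTo destination, and register a listener on it. The MDB cluster consumes the messages from the queue and sends the results back to the reply queue.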
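To make the request/reply flow concrete without a JMS provider, here is an in-process analogy, not JMS code: `LinkedBlockingQueue`s stand in for the work queue and the temporary reply queue, and pool threads stand in for MDB instances on the cluster nodes. The class name `FanOutSketch` and the squaring "processing step" are hypothetical placeholders.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;

// In-process analogy only: LinkedBlockingQueues stand in for the JMS work queue and the
// temporary reply queue, and pool threads stand in for MDB instances on the cluster nodes.
final class FanOutSketch {

    // Puts every item on the work queue, lets `workers` consumers drain it in parallel,
    // and collects exactly one reply per item, like the primary node's reply listener.
    static List<Integer> run(List<Integer> items, int workers) {
        BlockingQueue<Integer> workQueue = new LinkedBlockingQueue<>(items);
        BlockingQueue<Integer> replyQueue = new LinkedBlockingQueue<>();
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        for (int w = 0; w < workers; w++) {
            pool.execute(() -> {
                Integer item;
                // Each "MDB" keeps taking work until the queue is empty.
                while ((item = workQueue.poll()) != null) {
                    replyQueue.add(item * item); // hypothetical processing step
                }
            });
        }
        List<Integer> replies = new ArrayList<>();
        try {
            for (int i = 0; i < items.size(); i++) {
                replies.add(replyQueue.take()); // blocks until some worker replies
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for replies", e);
        } finally {
            pool.shutdown();
        }
        Collections.sort(replies); // replies arrive in no particular order
        return replies;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of(1, 2, 3, 4), 3)); // prints [1, 4, 9, 16]
    }
}
```

The primary node only needs to know how many messages it sent; it waits for that many replies, whichever node produced them.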

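The hard part is the EJB timer. The problems to solve are:

1) There must be exactly one timer instance; if every node in the cluster starts its own timer, the job fires once per node.

2) The timer must be highly available (HA).

3) Going one step further, we need exactly one timer instance across multiple clusters.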

Timer service clustering in JBoss AS 6 is far from perfect. We can deploy the timer as an HASingleton bean to get HA, failover and a single instance. Create jboss.xml under META-INF:

    <?xml version="1.0" encoding="UTF-8"?>
    <jboss xmlns="http://www.jboss.com/xml/ns/javaee"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xsi:schemaLocation="http://www.jboss.com/xml/ns/javaee http://www.jboss.org/j2ee/schema/jboss_5_1.xsd"
           version="5.1">
        <enterprise-beans>
            <session>
                <ejb-name>SchedulerManagementServiceBean</ejb-name>
                <depends>jboss.ha:service=HASingletonDeployer,type=Barrier</depends>
            </session>
        </enterprise-beans>
    </jboss>
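The idea behind the HASingleton dependency can be sketched as a pure function of the cluster view. This sketch assumes the election policy picks the oldest member (the first entry in the view) as master, which is our understanding of the default rather than JBoss code; `SingletonElection` and the node names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Illustration only: assumes the singleton master is the first (oldest) member of the
// current cluster view. This is not the JBoss HASingleton implementation.
final class SingletonElection {

    // The member that should deploy the timer bean for this cluster view.
    static String master(List<String> view) {
        if (view.isEmpty()) {
            throw new IllegalStateException("empty cluster view");
        }
        return view.get(0);
    }

    // Every node evaluates this on each view change; exactly one node gets true.
    static boolean shouldRunTimer(String self, List<String> view) {
        return self.equals(master(view));
    }

    public static void main(String[] args) {
        List<String> view = new ArrayList<>(List.of("node1", "node2", "node3"));
        System.out.println(master(view)); // node1
        view.remove("node1");            // node1 crashes, the view shrinks: failover
        System.out.println(master(view)); // node2
    }
}
```

Because every node computes the same answer from the same view, requirement 1 (one instance) and requirement 2 (failover when the master leaves) both fall out of the view change.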

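Now start JBoss AS 6: the HASingleton deployer elects one node as the primary node and deploys the bean there. Here's the catch: when we look up the remote interface of SchedulerManagementServiceBean from a node that is not the primary, an exception is thrown.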

This is caused by the following configuration:

ejb3-interceptors-aop.xml:

    <stack name="ClusteredStatelessSessionClientInterceptors">
        <interceptor-ref name="org.jboss.ejb3.async.impl.interceptor.AsynchronousInterceptorFactory" />
        <interceptor-ref name="org.jboss.ejb3.remoting.ClusteredIsLocalInterceptor"/>
        <interceptor-ref name="org.jboss.ejb3.security.client.SecurityClientInterceptor"/>
        <interceptor-ref name="org.jboss.aspects.tx.ClientTxPropagationInterceptor"/>
        <interceptor-ref name="org.jboss.aspects.remoting.ClusterChooserInterceptor"/>
        <interceptor-ref name="org.jboss.aspects.remoting.InvokeRemoteInterceptor"/>
    </stack>

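The `<interceptor-ref name="org.jboss.ejb3.remoting.ClusteredIsLocalInterceptor"/>` entry tells the container that, when looking up a remote bean in a clustered environment, it should still prefer the current node, which fails on a node where the singleton bean is not deployed!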

So comment out that line, and the problem is solved. Oh, and don't forget to use the HA-JNDI port for the lookup (by default the HA-JNDI port is 1100, and the port offset is 100).

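Finally, supporting a single timer instance across multiple clusters. One customer asked us to deploy two JBoss clusters, one active and one standby, both connected to the same Oracle RAC. Another problem: in that one environment we would still have two EJB timers running!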

The fix is simple too: disable the timer in one of the JBoss clusters, and when that cluster calls the timer bean, point the JNDI lookup at the other cluster's JNDI:

For example, cluster1 is 10.110.173.150 (8180, 8280, 8380, 8480) / 10.110.173.151 (8180, 8280, 8380, 8480), and cluster2 is 10.110.173.152 and 10.110.173.153.

We can then disable the timer in cluster2, and every time it calls SchedulerManagementServiceBean, set the JNDI provider to 10.110.173.150:1200;10.110.173.150:1300;10.110.173.150:1400; and that's it.
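A sketch of how a cluster2 client might build that JNDI environment from the host and the per-node port offsets. Assumptions: the HA-JNDI base port is 1100 as stated above; the factory class `org.jnp.interfaces.NamingContextFactory` and the package prefixes are the usual JBoss AS 4 to 6 client settings; entries are joined with commas, the form the JBoss HA-JNDI documentation shows, whereas the post writes them with semicolons, so check which form your client accepts. `RemoteTimerJndi` is a hypothetical helper.

```java
import java.util.Hashtable;
import java.util.List;
import java.util.StringJoiner;
import javax.naming.Context;

// Sketch: JNDI environment that a cluster2 client could use to reach cluster1's HA-JNDI.
// Hosts, offsets and the comma separator are assumptions, not values from a real deployment.
final class RemoteTimerJndi {

    static final int HA_JNDI_BASE_PORT = 1100; // JBoss default HA-JNDI port

    // One host:port entry per (host, port offset) pair, e.g. offset 100 gives port 1200.
    static String providerUrl(List<String> hosts, List<Integer> offsets) {
        StringJoiner url = new StringJoiner(",");
        for (String host : hosts) {
            for (int offset : offsets) {
                url.add(host + ":" + (HA_JNDI_BASE_PORT + offset));
            }
        }
        return url.toString();
    }

    static Hashtable<String, String> environment(String providerUrl) {
        Hashtable<String, String> env = new Hashtable<>();
        // JNP naming context factory used by JBoss AS 4-6 clients.
        env.put(Context.INITIAL_CONTEXT_FACTORY, "org.jnp.interfaces.NamingContextFactory");
        env.put(Context.URL_PKG_PREFIXES, "org.jboss.naming:org.jnp.interfaces");
        env.put(Context.PROVIDER_URL, providerUrl);
        return env;
    }

    public static void main(String[] args) {
        String url = providerUrl(List.of("10.110.173.150"), List.of(100, 200, 300));
        System.out.println(url); // 10.110.173.150:1200,10.110.173.150:1300,10.110.173.150:1400
        // new javax.naming.InitialContext(environment(url)) would then be used to look up
        // SchedulerManagementServiceBean; that step needs the JBoss client jars on the classpath.
    }
}
```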

For clustering the WebLogic timer, the link below is very detailed. HA support there is complete, and the default configuration is all we need.

https://blogs.oracle.com/muraliveligeti/entry/ejb_timer_ejb

MDB cluster:

The HornetQ bundled by default with JBoss AS 6 and JBoss AS 7 supports message-driven bean clustering well, with little configuration needed.

jboss-6.1.0.Final-GETPAID\server\node1\deploy\hornetq (hornetq-configuration.xml):

    <!--
      ~ Copyright 2009 Red Hat, Inc.
      ~ Red Hat licenses this file to you under the Apache License, version
      ~ 2.0 (the "License"); you may not use this file except in compliance
      ~ with the License. You may obtain a copy of the License at
      ~ http://www.apache.org/licenses/LICENSE-2.0
      ~ Unless required by applicable law or agreed to in writing, software
      ~ distributed under the License is distributed on an "AS IS" BASIS,
      ~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
      ~ implied. See the License for the specific language governing
      ~ permissions and limitations under the License.
      -->
    <configuration xmlns="urn:hornetq"
                   xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
                   xsi:schemaLocation="urn:hornetq /schema/hornetq-configuration.xsd">
       <!-- Don't change this name.
            This is used by the dependency framework on the deployers,
            to make sure this deployment is done before any other deployment -->
       <name>HornetQ.main.config</name>
       <clustered>true</clustered>
       <log-delegate-factory-class-name>org.hornetq.integration.logging.Log4jLogDelegateFactory</log-delegate-factory-class-name>
       <bindings-directory>${jboss.server.data.dir}/${hornetq.data.dir:hornetq}/bindings</bindings-directory>
       <journal-directory>${jboss.server.data.dir}/${hornetq.data.dir:hornetq}/journal</journal-directory>
       <!-- Default journal file size is set to 1Mb for faster first boot -->
       <journal-file-size>${hornetq.journal.file.size:1048576}</journal-file-size>
       <!-- Default journal min file is 2, increase for higher average msg rates -->
       <journal-min-files>${hornetq.journal.min.files:2}</journal-min-files>
       <large-messages-directory>${jboss.server.data.dir}/${hornetq.data.dir:hornetq}/largemessages</large-messages-directory>
       <paging-directory>${jboss.server.data.dir}/${hornetq.data.dir:hornetq}/paging</paging-directory>
       <connectors>
          <connector name="netty">
             <factory-class>org.hornetq.core.remoting.impl.netty.NettyConnectorFactory</factory-class>
             <param key="host" value="${jboss.bind.address:localhost}"/>
             <param key="port" value="${hornetq.remoting.netty.port:5445}"/>
          </connector>
          <connector name="netty-throughput">
             <factory-class>org.hornetq.core.remoting.impl.netty.NettyConnectorFactory</factory-class>
             <param key="host" value="${jboss.bind.address:localhost}"/>
             <param key="port" value="${hornetq.remoting.netty.batch.port:5455}"/>
             <param key="batch-delay" value="50"/>
          </connector>
          <connector name="in-vm">
             <factory-class>org.hornetq.core.remoting.impl.invm.InVMConnectorFactory</factory-class>
             <param key="server-id" value="${hornetq.server-id:0}"/>
          </connector>
       </connectors>
       <acceptors>
          <acceptor name="netty">
             <factory-class>org.hornetq.core.remoting.impl.netty.NettyAcceptorFactory</factory-class>
             <param key="host" value="${jboss.bind.address:localhost}"/>
             <param key="port" value="${hornetq.remoting.netty.port:5445}"/>
          </acceptor>
          <acceptor name="netty-throughput">
             <factory-class>org.hornetq.core.remoting.impl.netty.NettyAcceptorFactory</factory-class>
             <param key="host" value="${jboss.bind.address:localhost}"/>
             <param key="port" value="${hornetq.remoting.netty.batch.port:5455}"/>
             <param key="batch-delay" value="50"/>
             <param key="direct-deliver" value="false"/>
          </acceptor>
          <acceptor name="in-vm">
             <factory-class>org.hornetq.core.remoting.impl.invm.InVMAcceptorFactory</factory-class>
             <param key="server-id" value="0"/>
          </acceptor>
       </acceptors>
       <broadcast-groups>
          <broadcast-group name="bg-group1">
             <group-address>231.7.7.7</group-address>
             <group-port>9876</group-port>
             <broadcast-period>5000</broadcast-period>
             <connector-ref>netty</connector-ref>
          </broadcast-group>
       </broadcast-groups>
       <discovery-groups>
          <discovery-group name="dg-group1">
             <group-address>231.7.7.7</group-address>
             <group-port>9876</group-port>
             <refresh-timeout>10000</refresh-timeout>
          </discovery-group>
       </discovery-groups>
       <cluster-connections>
          <cluster-connection name="my-cluster">
             <address>jms</address>
             <connector-ref>netty</connector-ref>
             <discovery-group-ref discovery-group-name="dg-group1"/>
          </cluster-connection>
       </cluster-connections>
       <security-settings>
          <security-setting match="#">
             <permission type="createNonDurableQueue" roles="guest"/>
             <permission type="deleteNonDurableQueue" roles="guest"/>
             <permission type="consume" roles="guest"/>
             <permission type="send" roles="guest"/>
          </security-setting>
       </security-settings>
       <address-settings>
          <!--default for catch all-->
          <address-setting match="#">
             <dead-letter-address>jms.queue.DLQ</dead-letter-address>
             <expiry-address>jms.queue.ExpiryQueue</expiry-address>
             <redelivery-delay>0</redelivery-delay>
             <max-size-bytes>10485760</max-size-bytes>
             <message-counter-history-day-limit>10</message-counter-history-day-limit>
             <address-full-policy>BLOCK</address-full-policy>
          </address-setting>
       </address-settings>
    </configuration>

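The only thing to watch: if several JBoss clusters share the same subnet, give each cluster its own multicast group (group-address/group-port) in broadcast-groups and discovery-groups, otherwise the clusters interfere with each other, heh...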
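One way to catch this before deployment is a small check over each cluster's `<group-address>`/`<group-port>` values. This is a hypothetical helper, not part of HornetQ; it treats a distinct address:port pair as sufficient, and using a distinct address per cluster as well is the more conservative choice. It also flags addresses outside the multicast range using `InetAddress.isMulticastAddress()`.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical pre-deployment check (not part of HornetQ): every cluster sharing a subnet
// should use its own multicast group for its broadcast-group and discovery-group.
final class MulticastGroupCheck {

    // One cluster's <group-address>/<group-port> values from hornetq-configuration.xml.
    static final class ClusterGroup {
        final String cluster;
        final String groupAddress; // expected to be an IP literal, e.g. 231.7.7.7
        final int groupPort;

        ClusterGroup(String cluster, String groupAddress, int groupPort) {
            this.cluster = cluster;
            this.groupAddress = groupAddress;
            this.groupPort = groupPort;
        }
    }

    // Returns one message per problem; an empty list means no two clusters collide.
    static List<String> problems(List<ClusterGroup> groups) {
        List<String> problems = new ArrayList<>();
        Map<String, String> owners = new HashMap<>(); // "address:port" -> first cluster using it
        for (ClusterGroup g : groups) {
            if (!isMulticast(g.groupAddress)) {
                problems.add(g.cluster + ": " + g.groupAddress + " is not a multicast address");
            }
            String key = g.groupAddress + ":" + g.groupPort;
            String owner = owners.putIfAbsent(key, g.cluster);
            if (owner != null) {
                problems.add(g.cluster + " shares " + key + " with " + owner);
            }
        }
        return problems;
    }

    // For an IP literal, InetAddress.getByName only checks the format (no DNS lookup).
    private static boolean isMulticast(String address) {
        try {
            return InetAddress.getByName(address).isMulticastAddress();
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<ClusterGroup> groups = List.of(
                new ClusterGroup("cluster1", "231.7.7.7", 9876),
                new ClusterGroup("cluster2", "231.7.7.7", 9876)); // copied default config
        System.out.println(problems(groups)); // [cluster2 shares 231.7.7.7:9876 with cluster1]
    }
}
```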

WebLogic is different again. WebLogic recommends a UDD (Uniform Distributed Destination) configuration: we need to create several JMS servers and create a weblogic-jms-config-cluster.xml file under ear/META-INF.

    <uniform-distributed-queue name="SAMPLEPROB">
        <sub-deployment-name>SubModule-SAMPLE</sub-deployment-name>
        <jndi-name>java:global/queue/SAMPLEDPROB</jndi-name>
        <load-balancing-policy>Round-Robin</load-balancing-policy>
    </uniform-distributed-queue>
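The `Round-Robin` load-balancing policy means producers cycle through the UDD members in order. The sketch below only illustrates that policy; it is not WebLogic's implementation, and the member names in `main` are hypothetical.

```java
import java.util.List;

// Illustration of a round-robin policy over distributed-queue members.
// Not WebLogic code; member names used in main are hypothetical.
final class RoundRobinPicker<T> {
    private final List<T> members;
    private int next; // index of the member that receives the next message

    RoundRobinPicker(List<T> members) {
        if (members.isEmpty()) {
            throw new IllegalArgumentException("no members");
        }
        this.members = List.copyOf(members);
    }

    // Returns the member for the next message and advances the cursor, wrapping around.
    synchronized T pick() {
        T member = members.get(next);
        next = (next + 1) % members.size();
        return member;
    }

    public static void main(String[] args) {
        RoundRobinPicker<String> picker = new RoundRobinPicker<>(
                List.of("JMSServer1@SAMPLEPROB", "JMSServer2@SAMPLEPROB"));
        for (int i = 0; i < 4; i++) {
            System.out.println(picker.pick()); // alternates between the two members
        }
    }
}
```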

Then create weblogic-ejb-jar.xml under the EJB module's META-INF:

    <weblogic-enterprise-bean>
        <ejb-name>SampleMessageServiceBean</ejb-name>
        <message-driven-descriptor>
            <destination-jndi-name>java:global/queue/SAMPLEDPROB</destination-jndi-name>
            <distributed-destination-connection>EveryMember</distributed-destination-connection>
        </message-driven-descriptor>
    </weblogic-enterprise-bean>
    <timer-implementation>Clustered</timer-implementation>

This is the clustering setup with WebLogic server affinity disabled. I won't cover server affinity here; the link below has more detail:

http://docs.oracle.com/cd/E11035_01/wls100/jms_admin/advance_config.html#jms_distributed_destination_config

If server affinity is on, we don't need to additionally specify `<distributed-destination-connection>EveryMember</distributed-destination-connection>`.

Notes on my EJB 3.1 research while working at Sungard (2): EJB3 clustering (EJB timer/MDB)


Original post: http://www.cnblogs.com/steve0511/p/4929046.html