
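Python 3 Web Crawlers

1. Using plain Python 3

The simple pseudocode below uses two classic data structures, a set and a queue. The set records the pages that have already been visited; the queue drives the breadth-first search (BFS).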

    queue Q
    set S
    StartPoint = "http://jecvay.com"
    Q.push(StartPoint)                # classic BFS opening
    S.insert(StartPoint)              # mark a page as visited before visiting it
    while (Q.empty() == false)        # BFS loop body
        T = Q.top()                   # and pop it from the queue
        for point in PageUrl(T)       # PageUrl(T) is the set of all URLs on page T; point is one element of it
            if (point not in S)
                Q.push(point)
                S.insert(point)
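The pseudocode above can be sketched as runnable Python. This is only an illustration, not the original author's code: the `bfs_crawl` function and the in-memory `LINKS` graph are hypothetical stand-ins for real page fetching (the role of `PageUrl(T)`), chosen so the example runs without network access.

```python
from collections import deque

# Hypothetical link graph standing in for PageUrl(T): page -> URLs found on it.
LINKS = {
    "http://jecvay.com": ["http://jecvay.com/a", "http://jecvay.com/b"],
    "http://jecvay.com/a": ["http://jecvay.com/b", "http://jecvay.com"],
    "http://jecvay.com/b": ["http://jecvay.com/c"],
    "http://jecvay.com/c": [],
}


def bfs_crawl(start, page_urls):
    """Return pages in breadth-first order, visiting each page once."""
    queue = deque([start])   # Q: pages waiting to be visited
    seen = {start}           # S: pages already queued or visited
    order = []
    while queue:
        page = queue.popleft()       # take the oldest page (FIFO)
        order.append(page)
        for url in page_urls(page):
            if url not in seen:      # skip pages that were already marked
                seen.add(url)
                queue.append(url)
    return order


order = bfs_crawl("http://jecvay.com", lambda page: LINKS.get(page, []))
print(order)
```

`collections.deque` is used for the queue because removing from the front of a plain list costs time proportional to its length.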

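The set used here is implemented internally as a hash table. A conventional hash set takes too much memory for a crawler, so a data structure called a Bloom filter, which trades a small false-positive rate for a much smaller footprint, is a better fit here as a replacement for the hash-based set.

A simple WebSpider implementation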
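As a rough illustration of the idea, here is a minimal Bloom filter sketch. The `BloomFilter` class, its default sizes, and the use of salted SHA-256 as the hash family are all assumptions made for this example; a production crawler would size the bit array from the expected number of URLs and the acceptable false-positive rate.

```python
import hashlib


class BloomFilter:
    """A fixed-size bit array probed by several hash functions (illustrative)."""

    def __init__(self, num_bits=8192, num_hashes=4):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item):
        # Derive num_hashes bit positions by salting SHA-256 with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item):
        # True means "possibly present"; False means "definitely absent".
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))


seen = BloomFilter()
seen.add("http://jecvay.com")
print("http://jecvay.com" in seen)   # an added URL is always reported as present
```

The filter never forgets a URL that was added, but it may occasionally report an unseen URL as seen, in which case the crawler would skip a page it has not actually visited.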

    from html.parser import HTMLParser
    from urllib.request import urlopen
    from urllib import parse

    class LinkParser(HTMLParser):
        # Called by HTMLParser for every opening tag; collect <a href="..."> links.
        def handle_starttag(self, tag, attrs):
            if tag == 'a':
                for (key, value) in attrs:
                    if key == 'href':
                        # Resolve relative links against the page URL.
                        newUrl = parse.urljoin(self.baseUrl, value)
                        self.links = self.links + [newUrl]

        # Fetch url and return (page text, list of links found on it).
        def getLinks(self, url):
            self.links = []
            self.baseUrl = url
            response = urlopen(url)
            # The header is often "text/html; charset=...", so test only the prefix.
            contentType = response.getheader('Content-Type') or ''
            if contentType.startswith('text/html'):
                htmlBytes = response.read()
                htmlString = htmlBytes.decode("utf-8")
                self.feed(htmlString)
                return htmlString, self.links
            else:
                return "", []

    # Visit up to maxPages pages starting at url, stopping once word is found.
    def spider(url, word, maxPages):
        pagesToVisit = [url]
        numberVisited = 0
        foundWord = False
        while numberVisited < maxPages and pagesToVisit != [] and not foundWord:
            numberVisited = numberVisited + 1
            url = pagesToVisit[0]
            pagesToVisit = pagesToVisit[1:]
            try:
                print(numberVisited, "Visiting:", url)
                parser = LinkParser()
                data, links = parser.getLinks(url)
                if data.find(word) > -1:
                    foundWord = True
                # Append the newly found links to the end of the queue.
                pagesToVisit = pagesToVisit + links
                print("**Success!**")
            except Exception:
                print("**Failed!**")

        if foundWord:
            print("The word", word, "was found at", url)
            return
        else:
            print("Word never found")
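The link-extraction step can be tried without network access by feeding an HTML string straight to the parser. The sketch below is a simplified variant written for illustration: the `SimpleLinkParser` class and the sample HTML are hypothetical and are not part of the spider above.

```python
from html.parser import HTMLParser
from urllib import parse


class SimpleLinkParser(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags (hypothetical helper)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for key, value in attrs:
                if key == "href" and value is not None:
                    # Resolve relative links against the page URL.
                    self.links.append(parse.urljoin(self.base_url, value))


html = '<p><a href="/about">About</a> <a href="http://scrapy.org/">Scrapy</a></p>'
parser = SimpleLinkParser("http://jecvay.com/index.html")
parser.feed(html)
print(parser.links)
```

`urljoin` turns the relative link `/about` into an absolute URL on the same host and leaves the already absolute link unchanged.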

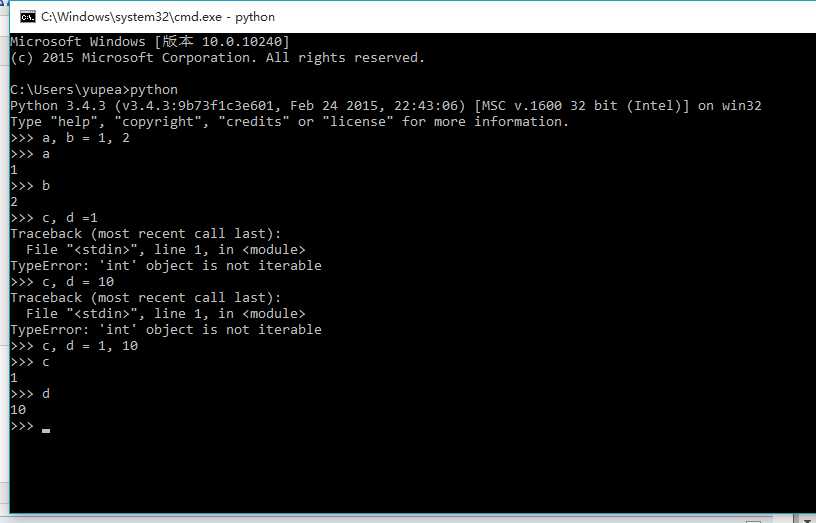

Appendix: Python assignment and module usage

    # Assign values directly
    a, b = 0, 1
    assert a == 0
    assert b == 1

    # Assign values from a list
    (r, g, b) = ["Red", "Green", "Blue"]
    assert r == "Red"
    assert g == "Green"
    assert b == "Blue"

    # Assign values from a tuple
    (x, y) = (1, 2)
    assert x == 1
    assert y == 2

Save the spider code as WebSpider.py, open Python in the same directory, and run the following statements:

    >>> import WebSpider
    >>> WebSpider.spider("http://baike.baidu.com", '羊城', 1000)

2. Using the Scrapy framework

Installation

Dependencies:

OpenSSL, libxml2

Install their Python bindings with: pip install pyOpenSSL lxml

    $ pip install scrapy

    cat > myspider.py <<EOF
    import scrapy

    class BlogSpider(scrapy.Spider):
        name = 'blogspider'
        start_urls = ['http://blog.scrapinghub.com']

        def parse(self, response):
            for url in response.css('ul li a::attr("href")').re(r'.*/\d\d\d\d/\d\d/$'):
                yield scrapy.Request(response.urljoin(url), self.parse_titles)

        def parse_titles(self, response):
            for post_title in response.css('div.entries > ul > li a::text').extract():
                yield {'title': post_title}
    EOF
    scrapy runspider myspider.py
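The pattern passed to `.re(...)` in `parse` keeps only links whose path ends in `/YYYY/MM/`, which look like monthly archive pages. The sketch below applies the same pattern with the standard `re` module to a few hypothetical URLs; it illustrates the pattern only and makes no claim about how Scrapy applies it internally.

```python
import re

# Same pattern as in the spider: any prefix, then /dddd/dd/ at the very end.
ARCHIVE_PATTERN = re.compile(r".*/\d\d\d\d/\d\d/$")

# Hypothetical URLs, for illustration only.
urls = [
    "http://blog.scrapinghub.com/2015/10/",
    "http://blog.scrapinghub.com/about/",
    "http://blog.scrapinghub.com/2015/10/some-post/",
]

archive_urls = [u for u in urls if ARCHIVE_PATTERN.match(u)]
print(archive_urls)
```

Only the first URL passes the filter, because the other two do not end with a four-digit and a two-digit path segment.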

References:

https://jecvay.com/2014/09/python3-web-bug-series1.html

http://www.netinstructions.com/how-to-make-a-web-crawler-in-under-50-lines-of-python-code/

http://www.jb51.net/article/65260.htm

http://scrapy.org/

https://docs.python.org/3/tutorial/modules.html

Original post: http://www.cnblogs.com/7explore-share/p/4943230.html