
The previous article described the design of MySQL HA on Azure. This article covers the actual deployment: how each component is installed and configured.

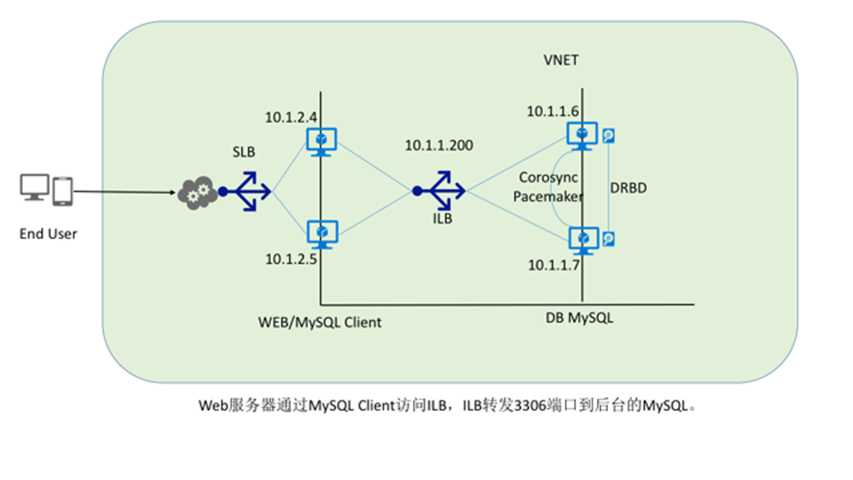

整体的设计架构如下:

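The installation and configuration of every component follows. All VMs run CentOS 6.5. Creating the Azure VMs and configuring the VNet are not covered here. If you need those steps, see Zhang Lei's blog: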

http://www.cnblogs.com/threestone

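This article uses the Xplat CLI to deploy an Internal Load Balancer (ILB) with the static address 10.1.1.200 and the sourceIP distribution mode. The commands below perform four steps:

- create the ILB;
- give each VM a load-balanced endpoint for port 3306, with a TCP probe on 3306;
- assign the static IPs 10.1.1.6 and 10.1.1.7;
- attach a new 20 GB data disk to each VM for DRBD.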

```shell
azure service internal-load-balancer add -a 10.1.1.200 -t Subnet-2 mysql-ha mysql-ha-ilb
# info: service internal-load-balancer add command OK

azure vm endpoint create -n mysql -o tcp -t 3306 -r tcp -b mysql-ha-lbs -i mysql-ha-ilb -a sourceIP mysql-ha1 3306 3306
# info: vm endpoint create command OK

azure vm endpoint create -n mysql -o tcp -t 3306 -r tcp -b mysql-ha-lbs -i mysql-ha-ilb -a sourceIP mysql-ha2 3306 3306
# info: vm endpoint create command OK

azure vm static-ip set mysql-ha1 10.1.1.6
azure vm static-ip set mysql-ha2 10.1.1.7

azure vm disk attach-new mysql-ha1 20 https://portalvhds68sv75c8hs05m.blob.core.chinacloudapi.cn/hwmysqltest/mysqlha1sdc.vhd
azure vm disk attach-new mysql-ha2 20 https://portalvhds68sv75c8hs05m.blob.core.chinacloudapi.cn/hwmysqltest/mysqlha2sdc.vhd
```
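The two endpoint commands above differ only in the VM name. The sketch below creates the same load-balanced endpoint on each VM in a loop, reusing exactly the options shown above. It does not create the ILB, static IPs or disks. The function name and the `AZURE` override (set it to `echo` for a dry run) are hypothetical helpers, not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helper: add the load-balanced "mysql" endpoint (ILB mysql-ha-ilb,
# set mysql-ha-lbs, TCP probe on 3306, sourceIP distribution) to each VM given.
add_mysql_endpoints() {
    local azure=${AZURE:-azure}   # AZURE=echo prints the commands instead of running them
    local vm
    for vm in "$@"; do
        # Same options as the manual commands: endpoint name, protocol,
        # probe port/protocol, load-balanced set, ILB name, distribution mode
        "$azure" vm endpoint create -n mysql -o tcp -t 3306 -r tcp \
            -b mysql-ha-lbs -i mysql-ha-ilb -a sourceIP "$vm" 3306 3306 || return 1
    done
}

# Usage: add_mysql_endpoints mysql-ha1 mysql-ha2
```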


Install DRBD on both VMs: first the build dependencies, then the DRBD 8.4 user-space tools and kernel module from ELRepo:

```shell
yum -y install gcc rpm-build kernel-devel kernel-headers flex ncurses-devel
wget http://elrepo.org/linux/elrepo/el5/x86_64/RPMS/drbd84-utils-8.4.3-1.el5.elrepo.x86_64.rpm
wget http://elrepo.org/linux/elrepo/el6/x86_64/RPMS/kmod-drbd84-8.4.3-1.el6_4.elrepo.x86_64.rpm
yum -y install drbd84-utils-8.4.3-1.el5.elrepo.x86_64.rpm
yum install -y kmod-drbd84-8.4.3-1.el6_4.elrepo.x86_64.rpm
```

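Disable SELinux. `setenforce 0` switches the running system to permissive mode immediately:

```shell
setenforce 0
```

Alternatively, set the following in /etc/sysconfig/selinux. This disables SELinux permanently and takes effect after a reboot:

```
SELINUX=disabled
```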
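The sketch below makes the file change non-interactively. On CentOS 6, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config, and a plain `sed -i` would replace that link with a regular file. GNU sed's `--follow-symlinks` edits the target instead. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: persistently set SELINUX=disabled (effective at the next boot).
# --follow-symlinks makes GNU sed edit the link target instead of replacing the link.
disable_selinux_config() {
    local conf=${1:-/etc/sysconfig/selinux}
    [ -e "$conf" ] || { echo "no such file: $conf" >&2; return 1; }
    # Only the SELINUX= line changes; SELINUXTYPE= does not match this pattern
    sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root): setenforce 0; disable_selinux_config
```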

Create the DRBD resource file /etc/drbd.d/mysql.res with the following content. It must be identical on both VMs:

```
resource mysql {
    protocol C;
    device /dev/drbd0;
    disk /dev/sdc1;
    meta-disk internal;

    on mysql-ha1 {
        address 10.1.1.6:7789;
    }

    on mysql-ha2 {
        address 10.1.1.7:7789;
    }
}
```
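DRBD matches each `on <host>` section against the node's own host name (`uname -n`). Each VM's host name must therefore be exactly mysql-ha1 or mysql-ha2. The sketch below is a small sanity check; the function name is hypothetical. Once it passes, `drbdadm dump mysql` shows how drbdadm itself parses the file.

```shell
#!/bin/bash
# Hypothetical check: succeed if NODE (default: this host) has an
# "on <host>" section in the DRBD resource file.
drbd_node_matches() {
    local res_file=${1:-/etc/drbd.d/mysql.res}
    local node=${2:-$(uname -n)}
    # Print the host name of every "on <host> {" line (tolerating "on host{"),
    # then look for an exact match
    awk '$1 == "on" { sub(/\{$/, "", $2); print $2 }' "$res_file" | grep -qxF -- "$node"
}

# Usage: drbd_node_matches || echo "uname -n does not match any 'on' section"
```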

For DRBD to work correctly, add every DRBD node to /etc/hosts:

```
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.1.1.6    mysql-ha1
10.1.1.7    mysql-ha2
```
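The hosts entries must exist on both VMs. Adding them by hand twice, or re-running a script, can leave duplicate lines. The sketch below appends an entry only when the name is not mapped yet; the function name is hypothetical.

```shell
#!/bin/bash
# Sketch: append "IP NAME" to a hosts file only if NAME is not mapped yet,
# so the step can be re-run safely on both nodes.
ensure_host_entry() {
    local ip=$1 name=$2 hosts=${3:-/etc/hosts}
    # Look for NAME among the host-name fields (2..NF) of non-comment lines
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$hosts"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
}

# Usage (as root on both VMs):
#   ensure_host_entry 10.1.1.6 mysql-ha1
#   ensure_host_entry 10.1.1.7 mysql-ha2
```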

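Create the partition /dev/sdc1 (the backing disk named in the resource file) on the newly attached data disk, but do not format it at this point. The partitioning steps themselves are not repeated here.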

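Run `drbdadm create-md mysql` on both VMs to initialize the DRBD metadata on each backing disk. Then bring the resource up on both, for example with `service drbd start`.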

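On mysql-ha1, run `drbdadm -- --overwrite-data-of-peer primary mysql` to make this VM the DRBD Primary. On DRBD 8.4 the equivalent newer form is `drbdadm primary --force mysql`. `drbd-overview` now shows the two disks synchronizing: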

```
  0:mysql/0  SyncSource Primary/Secondary UpToDate/Inconsistent C r-----
        [>....................] sync'ed:  0.6% (20372/20476)M
```
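The initial sync of a 20 GB disk takes a while. The sketch below polls `drbd-overview` until the resource reports `Connected` and `UpToDate/UpToDate`, as in the status output at the end of this article. The function names are hypothetical, and the parsing assumes the one-line-per-resource format shown above.

```shell
#!/bin/bash
# Sketch: decide from a drbd-overview line whether the initial sync is done,
# and poll until it is (works on either node).
drbd_in_sync() {
    # Finished: connection state "Connected" and both disk states "UpToDate"
    [[ $1 == *" Connected "* && $1 == *"UpToDate/UpToDate"* ]]
}

wait_for_drbd_sync() {
    local interval=${1:-10} line
    while :; do
        line=$(drbd-overview | grep -m1 'mysql/')
        drbd_in_sync "$line" && return 0
        echo "still syncing: $line"
        sleep "$interval"
    done
}

# Usage: wait_for_drbd_sync 30
```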

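Now format the DRBD device on the primary node only. The initial sync does not have to finish first:

```shell
mkfs.ext4 /dev/drbd0
```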
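Formatting must happen on the Primary, because the Secondary cannot open /dev/drbd0. The sketch below guards the one-time `mkfs` with a role check based on `drbdadm role`, which on DRBD 8.4 prints `<local>/<peer>`, e.g. `Primary/Secondary`. The function names are hypothetical.

```shell
#!/bin/bash
# Succeed only if the local node is currently Primary for the resource
is_drbd_primary() {
    local res=${1:-mysql}
    # "drbdadm role" prints e.g. "Primary/Secondary" (local role first)
    [[ $(drbdadm role "$res") == Primary* ]]
}

# Guard for the one-time format step: refuse to run mkfs on the Secondary
format_drbd_if_primary() {
    local res=${1:-mysql} dev=${2:-/dev/drbd0}
    if is_drbd_primary "$res"; then
        mkfs.ext4 "$dev"
    else
        echo "not Primary for $res; skipping mkfs" >&2
        return 1
    fi
}

# Usage (on mysql-ha1 only): format_drbd_if_primary mysql /dev/drbd0
```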

DRBD is now installed and configured. The DRBD device can only be mounted on the Primary VM. The Secondary VM can see the device but cannot use it.

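As described in the previous article, Corosync is the cluster's messaging layer, Pacemaker is the cluster's brain, and crm (crmsh) is the tool used to edit the cluster configuration. The cluster is installed as follows.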

Because of the GFW, the EPEL repository abroad cannot be reached from within China, so a domestic mirror (Sohu) is used instead. Create /etc/yum.repos.d/epel.repo:

```
[epel]
name=epel
baseurl=http://mirrors.sohu.com/fedora-epel/6/$basearch
enabled=1
gpgcheck=0
```
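The repo file can also be written from a script. The detail that matters is the quoted heredoc delimiter (`<<'EOF'`): it keeps `$basearch` literal, so yum expands it rather than the shell replacing it with an empty string. This is a sketch; the function name is hypothetical.

```shell
#!/bin/bash
# Sketch: write the Sohu EPEL mirror definition non-interactively.
# The quoted 'EOF' stops the shell from expanding $basearch.
write_epel_repo() {
    local repo=${1:-/etc/yum.repos.d/epel.repo}
    cat > "$repo" <<'EOF'
[epel]
name=epel
baseurl=http://mirrors.sohu.com/fedora-epel/6/$basearch
enabled=1
gpgcheck=0
EOF
}

# Usage (as root): write_epel_repo && yum clean all && yum makecache
```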

On both VMs, install Corosync, Pacemaker and cluster-glue. Then rebuild crmsh and pssh from the openSUSE ha-clustering source RPMs, together with their build dependencies, and install the resulting packages:

```shell
yum install -y corosync pacemaker
yum install cluster-glue -y

wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/src/crmsh-2.1-1.6.src.rpm
wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/src/pssh-2.3.1-4.2.src.rpm
yum install python-devel python-setuptools gcc make gcc-c++ rpm-build python-lxml cluster-glue-libs-devel pacemaker-libs-devel asciidoc autoconf automake redhat-rpm-config -y
rpmbuild --rebuild crmsh-2.1-1.6.src.rpm
rpmbuild --rebuild pssh-2.3.1-4.2.src.rpm
cd /root/rpmbuild/RPMS/x86_64/
yum install * -y
```

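On every node, configure /etc/corosync/corosync.conf with all cluster nodes listed as members (a full mesh). The member entries together with `transport: udpu` make Corosync use UDP unicast instead of multicast, which Azure virtual networks do not support: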

```
compatibility: whitetank

totem {
    version: 2
    secauth: off
    threads: 0
    interface {
        member {
            memberaddr: 10.1.1.6
        }
        member {
            memberaddr: 10.1.1.7
        }
        ringnumber: 0
        bindnetaddr: 10.1.1.0
        mcastport: 5405
        ttl: 1
    }
    transport: udpu
}

logging {
    fileline: off
    to_stderr: no
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: yes
    debug: off
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}

amf {
    mode: disabled
}

service {
    ver: 1
    name: pacemaker
    use_mgmtd: no
    use_logd: no
}

aisexec {
    user: root
    group: root
}
```

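Generate the cluster authentication key on mysql-ha1. corosync-keygen reads from /dev/random, which can block for a long time on a VM with little entropy, so /dev/random is first replaced by a link to /dev/urandom:

```shell
mv /dev/random /dev/random.bak
ln -s /dev/urandom /dev/random
corosync-keygen
```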
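Replacing /dev/random by hand leaves a symlink in place of the original device node until someone restores it. The sketch below performs the same trick but always puts the device back afterwards, even if key generation fails. `RANDOM_DEV` and `KEYGEN` are hypothetical overrides used only for dry runs.

```shell
#!/bin/bash
# Sketch: run corosync-keygen with /dev/random temporarily pointing at
# /dev/urandom, then restore the original /dev/random.
gen_corosync_key() {
    local dev=${RANDOM_DEV:-/dev/random}     # hypothetical override for testing
    local keygen=${KEYGEN:-corosync-keygen}  # hypothetical override for testing
    local rc
    mv "$dev" "$dev.bak" || return 1
    ln -s /dev/urandom "$dev"
    "$keygen"
    rc=$?
    # Restore the original device node whatever keygen returned
    rm -f "$dev"
    mv "$dev.bak" "$dev"
    return "$rc"
}

# Usage (as root on mysql-ha1): gen_corosync_key && ls -l /etc/corosync/authkey
```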

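Copy the key to mysql-ha2, then start Corosync and Pacemaker on both nodes. Corosync must start first: with `ver: 1`, Corosync does not start Pacemaker itself, and the Pacemaker service runs on top of it:

```shell
scp /etc/corosync/authkey mysql-ha2:/etc/corosync
service corosync start
ssh mysql-ha2 'service corosync start'
service pacemaker start
ssh mysql-ha2 'service pacemaker start'
```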
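Before configuring resources, it helps to confirm that both nodes have joined. The sketch below reads the `Online: [ ... ]` line from `crm_mon -1` (one-shot output) and retries until every expected node is listed. The function names and the `TRIES` override are hypothetical.

```shell
#!/bin/bash
# Sketch: list the nodes crm_mon reports as Online, and wait for a given set.
online_nodes() {
    crm_mon -1 | awk '/^ *Online:/ { for (i = 2; i <= NF; i++) if ($i != "[" && $i != "]") print $i }'
}

wait_for_nodes() {
    local tries=${TRIES:-30} node missing
    while :; do
        missing=
        for node in "$@"; do
            online_nodes | grep -qxF -- "$node" || missing="$missing $node"
        done
        [ -z "$missing" ] && return 0
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] || break
        sleep 2
    done
    echo "not online:$missing" >&2
    return 1
}

# Usage: wait_for_nodes mysql-ha1 mysql-ha2
```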

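Install the Azure CLI on both VMs, because the ILB script runs on whichever node is active. The CLI also has to be signed in to the subscription before it can change endpoints, for example by importing a publish settings file with `azure account import`:

```shell
yum install nodejs -y
yum install npm -y
npm install azure-cli -g
```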

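Create the file /etc/init.d/mdfilb and make it executable (`chmod +x /etc/init.d/mdfilb`) so that Pacemaker can use it as an lsb: resource:

```shell
#!/bin/sh
# /etc/init.d/mdfilb: init-style wrapper that Pacemaker calls to move the ILB endpoint

start()
{
    echo -n $"Starting Azure ILB Modify: "
    /root/mdfilb.sh
    echo
}

stop()
{
    # Nothing to undo on stop; the next start moves the endpoint again
    echo -n $"Shutting down Azure ILB Modify: "
    echo
}

MDFILB="/root/mdfilb.sh"
# Give up if the helper script is missing
[ -f "$MDFILB" ] || exit 1

# See how we were called.
case "$1" in
    start)
        start
        ;;
    stop)
        stop
        ;;
    restart)
        stop
        sleep 3
        start
        ;;
    *)
        echo $"Usage: $0 {start|stop|restart}"
        exit 1
esac

exit 0
```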
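Pacemaker drives lsb: resources through the init script's exit codes. Its documentation expects a compliant script to also implement `status` (0 when running, 3 when stopped) and to treat a repeated `start` or `stop` as success. The script above has no `status` action. The sketch below is a compliant variant. It assumes a lock file records whether this node has taken over the ILB endpoint; the path /var/lock/subsys/mdfilb and the function names are assumptions.

```shell
#!/bin/sh
# Sketch of an LSB-style /etc/init.d/mdfilb that also implements "status".
# Assumption: a lock file marks that this node has moved the ILB endpoint.
MDFILB=${MDFILB:-/root/mdfilb.sh}
LOCKFILE=${LOCKFILE:-/var/lock/subsys/mdfilb}

mdfilb_start() {
    # Already active here: LSB requires a repeated start to succeed
    [ -f "$LOCKFILE" ] && return 0
    "$MDFILB" || return 1
    touch "$LOCKFILE"
}

mdfilb_stop() {
    # Nothing to undo on the ILB; only clear the local state
    rm -f "$LOCKFILE"
    return 0
}

mdfilb_status() {
    # LSB status codes: 0 = running, 3 = not running
    if [ -f "$LOCKFILE" ]; then
        echo "mdfilb is active"
        return 0
    fi
    echo "mdfilb is stopped"
    return 3
}

mdfilb_main() {
    case "$1" in
        start)   mdfilb_start ;;
        stop)    mdfilb_stop ;;
        status)  mdfilb_status ;;
        restart) mdfilb_stop; mdfilb_start ;;
        *)       echo "Usage: $0 {start|stop|status|restart}"; return 2 ;;
    esac
}

# Dispatch only when called with an action (by Pacemaker or "service mdfilb ...")
if [ "$#" -gt 0 ]; then
    mdfilb_main "$@"
    exit $?
fi
```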

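Then create /root/mdfilb.sh and make it executable as well. As written, it removes the `mysql` endpoint from mysql-ha2 and re-creates it on mysql-ha1. A copy with the node names swapped would presumably be needed on mysql-ha2:

```shell
#!/bin/sh
# Move the load-balanced "mysql" endpoint from mysql-ha2 to mysql-ha1
azure vm endpoint delete mysql-ha2 mysql
azure vm endpoint create -i mysql-ha-ilb -o tcp -t 3306 -k 3306 -b mysql-ha-lbs -r tcp -a sourceIP mysql-ha1 3306 3306
```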
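Because the script above hard-codes the node names, each VM needs its own copy. The sketch below is a single version for both nodes that derives the peer from `uname -n`. The helper names and the `AZURE` override (set it to `echo` for a dry run) are hypothetical. The create command reuses the options from the initial endpoint setup rather than the `-k` form above. This sketch does not address the instability the author observed.

```shell
#!/bin/bash
# Sketch: move the ILB endpoint "mysql" to whichever node runs this script.
# Assumes exactly two nodes, mysql-ha1 and mysql-ha2, as in this article.
peer_of() {
    case "$1" in
        mysql-ha1) echo mysql-ha2 ;;
        mysql-ha2) echo mysql-ha1 ;;
        *) return 1 ;;
    esac
}

move_ilb_endpoint() {
    local azure=${AZURE:-azure}
    local self=${1:-$(uname -n)}
    local peer
    peer=$(peer_of "$self") || { echo "unknown node: $self" >&2; return 1; }
    # Remove the endpoint from the peer; ignore the error if it is already gone
    "$azure" vm endpoint delete "$peer" mysql || true
    # Re-create it on this node with the options used during the initial setup
    "$azure" vm endpoint create -n mysql -o tcp -t 3306 -r tcp \
        -b mysql-ha-lbs -i mysql-ha-ilb -a sourceIP "$self" 3306 3306
}

# Usage (body of /root/mdfilb.sh on both VMs): move_ilb_endpoint
```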

In practice, however, having the VMs modify the ILB this way proved unreliable.

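In `crm configure`, set the global cluster properties and commit them. STONITH is disabled because no fencing device is configured. Quorum loss is ignored because a two-node cluster loses quorum as soon as one node fails:

```
property stonith-enabled=false
property no-quorum-policy=ignore
```

Reboot both VMs before configuring the cluster resources.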
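The article does not show it, but services that Pacemaker manages are normally kept out of the boot sequence, so that init and the cluster do not both start them. Before rebooting the VMs, a sketch of that preparation might look like the following. The service names drbd and mysqld match the resources used here; enabling corosync and pacemaker at boot is an assumption that lets the node rejoin the cluster after the reboot. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch (not shown in the original): let Pacemaker, not init, start DRBD and
# MySQL, while the cluster stack itself comes up at boot.
prepare_boot_services() {
    local svc
    for svc in drbd mysqld; do
        chkconfig "$svc" off || return 1    # started/stopped only by Pacemaker
    done
    for svc in corosync pacemaker; do
        chkconfig "$svc" on || return 1     # rejoin the cluster after a reboot
    done
}

# Usage (as root on both VMs, before the reboot): prepare_boot_services
```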

Then run `crm configure` and define the resources. First add the DRBD master/slave set and the ext4 filesystem on /mydata:

```
primitive mysqldrbd ocf:linbit:drbd params drbd_resource="mysql" op start timeout=240 op stop timeout=240
ms mydrbd mysqldrbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"
verify
commit
primitive mystore ocf:heartbeat:Filesystem params device=/dev/drbd0 directory=/mydata fstype=ext4 op start timeout=60 op stop timeout=60
verify
commit
```

Keep the filesystem on the same node as the DRBD master, and mount it only after DRBD has been promoted there:

```
colocation mystore_with_mysqldrbd inf: mystore mydrbd:Master
order mystore_after_ms_mydrbd mandatory: mydrbd:promote mystore:start
verify
commit
```

Add the ILB-switching script as an lsb: resource. It must run on the same node as the filesystem and start after it:

```
primitive mdfilb lsb:mdfilb
colocation mdfilb_with_mystore inf: mdfilb mystore
order mdfilb_after_mystore mandatory: mystore mdfilb
verify
commit
```

Finally, add MySQL itself. It also runs on the node that holds the filesystem, and it starts after mdfilb, and therefore after the filesystem is mounted:

```
primitive mysqld lsb:mysqld
colocation mysqld_with_mystore inf: mysqld mystore
order mysqld_after_mdfilb mandatory: mdfilb mysqld
verify
commit
```
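The configuration above defines no monitor operations. Pacemaker therefore does not periodically check whether mysqld or the filesystem is still healthy on a running node. The sketch below defines the same resource layout with monitor operations added. The interval and timeout values are illustrative assumptions. The two DRBD monitors use different intervals because Pacemaker identifies operations by name and interval. mdfilb gets no monitor because the original script has no `status` action. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: the same resources, colocations and orders as above, plus monitors.
crm_resource_config() {
    cat <<'EOF'
primitive mysqldrbd ocf:linbit:drbd params drbd_resource="mysql" \
    op monitor interval="29s" role="Master" op monitor interval="31s" role="Slave" \
    op start timeout=240 op stop timeout=240
ms mydrbd mysqldrbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"
primitive mystore ocf:heartbeat:Filesystem params device=/dev/drbd0 directory=/mydata fstype=ext4 \
    op monitor interval=20s timeout=40s op start timeout=60 op stop timeout=60
primitive mdfilb lsb:mdfilb
primitive mysqld lsb:mysqld op monitor interval=15s timeout=15s
colocation mystore_with_mysqldrbd inf: mystore mydrbd:Master
order mystore_after_ms_mydrbd mandatory: mydrbd:promote mystore:start
colocation mdfilb_with_mystore inf: mdfilb mystore
order mdfilb_after_mystore mandatory: mystore mdfilb
colocation mysqld_with_mystore inf: mysqld mystore
order mysqld_after_mdfilb mandatory: mdfilb mysqld
verify
commit
EOF
}
```

On a cluster where these resources do not exist yet, the output could be fed to crmsh with `crm_resource_config | crm configure`. This assumes your crmsh version reads commands from standard input; otherwise paste the lines into an interactive `crm configure` session.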

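All configuration is now complete. In testing, the part where the VMs reconfigure the ILB was unreliable, so deploy it only if you need it. The ILB health probe on port 3306 already sends traffic only to the node where mysqld is listening.

Check the status: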

On mysql-ha1:

```
[root@mysql-ha1 /]# crm status
Last updated: Sun Dec 6 03:33:21 2015
Last change: Sun Dec 6 02:57:11 2015
Stack: classic openais (with plugin)
Current DC: mysql-ha2 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
5 Resources configured

Online: [ mysql-ha1 mysql-ha2 ]

 Master/Slave Set: mydrbd [mysqldrbd]
     Masters: [ mysql-ha2 ]
     Slaves: [ mysql-ha1 ]
 mystore    (ocf::heartbeat:Filesystem):    Started mysql-ha2
 mdfilb     (lsb:mdfilb):    Started mysql-ha2
 mysqld     (lsb:mysqld):    Started mysql-ha2

[root@mysql-ha1 /]# drbd-overview
  0:mysql/0  Connected Secondary/Primary UpToDate/UpToDate C r-----

[root@mysql-ha1 /]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/sda1        29G  3.3G   24G  13% /
tmpfs           1.7G   30M  1.7G   2% /dev/shm
/dev/sdb1        50G  180M   47G   1% /mnt/resource

[root@mysql-ha1 /]# service mysqld status
mysqld is stopped
```

On mysql-ha2:

```
[root@mysql-ha2 ~]# crm status
Last updated: Sun Dec 6 03:36:30 2015
Last change: Sun Dec 6 02:57:11 2015
Stack: classic openais (with plugin)
Current DC: mysql-ha2 - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
5 Resources configured

Online: [ mysql-ha1 mysql-ha2 ]

 Master/Slave Set: mydrbd [mysqldrbd]
     Masters: [ mysql-ha2 ]
     Slaves: [ mysql-ha1 ]
 mystore    (ocf::heartbeat:Filesystem):    Started mysql-ha2
 mdfilb     (lsb:mdfilb):    Started mysql-ha2
 mysqld     (lsb:mysqld):    Started mysql-ha2

[root@mysql-ha2 ~]# drbd-overview
  0:mysql/0  Connected Primary/Secondary UpToDate/UpToDate C r----- /mydata ext4 20G 193M 19G 2%

[root@mysql-ha2 ~]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/sda1        29G  3.2G   24G  12% /
tmpfs           1.7G   45M  1.7G   3% /dev/shm
/dev/sdb1        50G  180M   47G   1% /mnt/resource
/dev/drbd0       20G  193M   19G   2% /mydata

[root@mysql-ha2 ~]# service mysqld status
mysqld (pid 2367) is running...

[root@mysql-ha2 ~]# cd /mydata/
[root@mysql-ha2 mydata]# ls
hengwei  ibdata1  ib_logfile0  ib_logfile1  lost+found  mysql  test
```
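The `Masters:` line shows where MySQL is active. The sketch below extracts that node name from crm_mon's one-shot output, which is handy in scripts or monitoring. The function name is hypothetical, and the parsing assumes a single master/slave set, as in this cluster.

```shell
#!/bin/bash
# Sketch: print the node that holds the DRBD master role, parsed from the
# "Masters: [ ... ]" line of "crm_mon -1".
drbd_master_node() {
    crm_mon -1 | awk '/^ *Masters:/ { for (i = 2; i <= NF; i++) if ($i != "[" && $i != "]") print $i }'
}

# Usage: [ "$(drbd_master_node)" = "$(uname -n)" ] && echo "this node runs MySQL"
```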


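Front-end MySQL clients now connect to port 3306 on 10.1.1.200, the ILB address. When either of the two machines is shut down, the connection recovers quickly and the service stays available!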
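To watch the failover from the client side, a probe loop can run while one VM is shut down. This is a sketch that assumes the mysql client is installed on the client machine and that credentials come from ~/.my.cnf. `VIP` and `INTERVAL` are hypothetical overrides.

```shell
#!/bin/bash
# Sketch: probe MySQL through the ILB address and log OK/FAIL with a timestamp.
probe_mysql_vip() {
    local count=${1:-60} host=${VIP:-10.1.1.200} interval=${INTERVAL:-1} i
    for ((i = 0; i < count; i++)); do
        # A short connect timeout keeps each probe from hanging during failover
        if mysql -h "$host" -P 3306 --connect-timeout=2 -e 'SELECT 1' >/dev/null 2>&1; then
            echo "$(date +%T) OK"
        else
            echo "$(date +%T) FAIL"
        fi
        sleep "$interval"
    done
}

# Usage: probe_mysql_vip 300 | tee failover.log   (then shut down the active VM)
```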

MySQL on Azure High Availability Design: DRBD - Corosync - Pacemaker - CRM (Part 2)


Original article: http://www.cnblogs.com/hengwei/p/5023277.html