
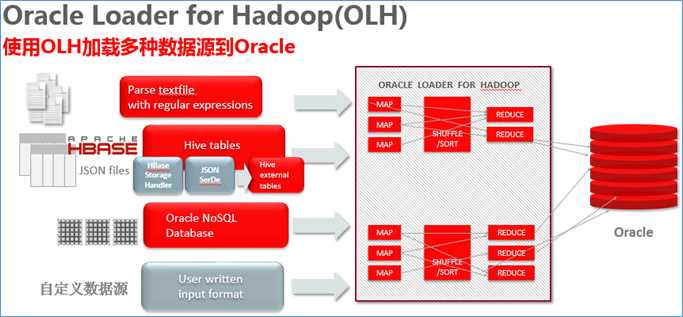

OLH is short for Oracle Loader for Hadoop, a component of Oracle's Big Data Connectors.

This article describes how to use OLH to load an HBase table into an Oracle database.

Two points need attention here:

The output is as follows:

Oracle Loader for Hadoop Release 3.4.0 - Production

Copyright (c) 2011, 2015, Oracle and/or its affiliates. All rights reserved.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.6.2/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hive-1.1.1/lib/hive-jdbc-1.1.1-standalone.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

15/12/08 04:53:51 INFO loader.OraLoader: Oracle Loader for Hadoop Release 3.4.0 - Production

Copyright (c) 2011, 2015, Oracle and/or its affiliates. All rights reserved.

15/12/08 04:53:51 INFO loader.OraLoader: Built-Against: hadoop-2.2.0 hive-0.13.0 avro-1.7.3 jackson-1.8.8

15/12/08 04:53:51 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class

15/12/08 04:53:51 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

15/12/08 04:54:23 INFO Configuration.deprecation: mapred.submit.replication is deprecated. Instead, use mapreduce.client.submit.file.replication

15/12/08 04:54:24 INFO loader.OraLoader: oracle.hadoop.loader.loadByPartition is disabled because table: CATALOG is not partitioned

15/12/08 04:54:24 INFO output.DBOutputFormat: Setting reduce tasks speculative execution to false for : oracle.hadoop.loader.lib.output.JDBCOutputFormat

15/12/08 04:54:24 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

15/12/08 04:54:26 WARN loader.OraLoader: Sampler is disabled because the number of reduce tasks is less than two. Job will continue without sampled information.

15/12/08 04:54:26 INFO loader.OraLoader: Submitting OraLoader job OraLoader

15/12/08 04:54:26 INFO client.RMProxy: Connecting to ResourceManager at server1/192.168.56.101:8032

15/12/08 04:54:28 INFO metastore.HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

15/12/08 04:54:28 INFO metastore.ObjectStore: ObjectStore, initialize called

15/12/08 04:54:29 INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

15/12/08 04:54:29 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

15/12/08 04:54:31 INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

15/12/08 04:54:33 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

15/12/08 04:54:33 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

15/12/08 04:54:34 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

15/12/08 04:54:34 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

15/12/08 04:54:34 INFO DataNucleus.Query: Reading in results for query "org.datanucleus.store.rdbms.query.SQLQuery@0" since the connection used is closing

15/12/08 04:54:34 INFO metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL

15/12/08 04:54:34 INFO metastore.ObjectStore: Initialized ObjectStore

15/12/08 04:54:34 INFO metastore.HiveMetaStore: Added admin role in metastore

15/12/08 04:54:34 INFO metastore.HiveMetaStore: Added public role in metastore

15/12/08 04:54:35 INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

15/12/08 04:54:35 INFO metastore.HiveMetaStore: 0: get_table : db=default tbl=catalog

15/12/08 04:54:35 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=get_table : db=default tbl=catalog

15/12/08 04:54:36 INFO mapred.FileInputFormat: Total input paths to process : 1

15/12/08 04:54:36 INFO metastore.HiveMetaStore: 0: Shutting down the object store...

15/12/08 04:54:36 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=Shutting down the object store...

15/12/08 04:54:36 INFO metastore.HiveMetaStore: 0: Metastore shutdown complete.

15/12/08 04:54:36 INFO HiveMetaStore.audit: ugi=hadoop ip=unknown-ip-addr cmd=Metastore shutdown complete.

15/12/08 04:54:37 INFO mapreduce.JobSubmitter: number of splits:2

15/12/08 04:54:37 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1449544601730_0015

15/12/08 04:54:38 INFO impl.YarnClientImpl: Submitted application application_1449544601730_0015

15/12/08 04:54:38 INFO mapreduce.Job: The url to track the job: http://server1:8088/proxy/application_1449544601730_0015/

15/12/08 04:54:49 INFO loader.OraLoader: map 0% reduce 0%

15/12/08 04:55:07 INFO loader.OraLoader: map 100% reduce 0%

15/12/08 04:55:22 INFO loader.OraLoader: map 100% reduce 67%

15/12/08 04:55:47 INFO loader.OraLoader: map 100% reduce 100%

15/12/08 04:55:47 INFO loader.OraLoader: Job complete: OraLoader (job_1449544601730_0015)

15/12/08 04:55:47 INFO loader.OraLoader: Counters: 49

File System Counters

FILE: Number of bytes read=395

FILE: Number of bytes written=370110

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=6005

HDFS: Number of bytes written=1861

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=5

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=29809

Total time spent by all reduces in occupied slots (ms)=36328

Total time spent by all map tasks (ms)=29809

Total time spent by all reduce tasks (ms)=36328

Total vcore-seconds taken by all map tasks=29809

Total vcore-seconds taken by all reduce tasks=36328

Total megabyte-seconds taken by all map tasks=30524416

Total megabyte-seconds taken by all reduce tasks=37199872

Map-Reduce Framework

Map input records=3

Map output records=3

Map output bytes=383

Map output materialized bytes=401

Input split bytes=5610

Combine input records=0

Combine output records=0

Reduce input groups=1

Reduce shuffle bytes=401

Reduce input records=3

Reduce output records=3

Spilled Records=6

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=1245

CPU time spent (ms)=14220

Physical memory (bytes) snapshot=757501952

Virtual memory (bytes) snapshot=6360301568

Total committed heap usage (bytes)=535298048

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=1620
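The counters above are the quickest way to sanity-check the load: here 3 records were read by the mappers and 3 were written by the reducer. As a minimal sketch (the helper names `parse_counters` and `all_records_written` are hypothetical and not part of OLH or Hadoop), the same check could be scripted against saved job output like this:

```python
def parse_counters(log_text):
    """Collect 'name=value' lines whose value is an integer into a dict."""
    counters = {}
    for line in log_text.splitlines():
        # Split on the last '=' so names containing ':' or '(ms)' stay intact.
        name, sep, value = line.rpartition("=")
        if sep and value.strip().isdigit():
            counters[name.strip()] = int(value)
    return counters


def all_records_written(counters):
    """True when every record read by the mappers was written by the reducers."""
    return counters["Map input records"] == counters["Reduce output records"]


# A few lines copied from the job output shown above.
sample = """\
Map input records=3
Map output records=3
Reduce input records=3
Reduce output records=3
Shuffled Maps =2
15/12/08 04:55:47 INFO loader.OraLoader: Counters: 49
"""

counters = parse_counters(sample)
print(all_records_written(counters))
```

Matching counters only show that the MapReduce job emitted every record. Querying the target table (CATALOG in this run) in Oracle is still the definitive check.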

Connecting Oracle and Hadoop (3): Loading HBase into Oracle with OLH


Original article: http://www.cnblogs.com/panwenyu/p/5058590.html