
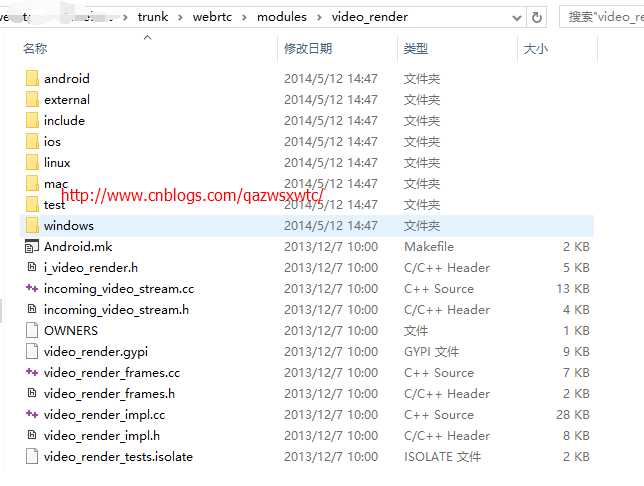

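In the previous post I briefly introduced the cross-platform video capture module that WebRTC provides. This post gives a similarly brief introduction to WebRTC's cross-platform video display module, video_render. The module's source tree is shown in the figure above.

As the figure shows, video_render supports Android, iOS, Linux, Mac and Windows. To use it, you only need to build this module on its own or extract it from the WebRTC source tree.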

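The video_render module's public header (`webrtc/modules/video_render/include/video_render.h`) declares the following interface: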

```cpp
// Class definitions
class VideoRender: public Module {
 public:
  /*
   * Create a video render module object
   *
   * id              - unique identifier of this video render module object
   * window          - pointer to the window to render to
   * fullscreen      - true if this is a fullscreen renderer
   * videoRenderType - type of renderer to create
   */
  static VideoRender* CreateVideoRender(
      const int32_t id,
      void* window,
      const bool fullscreen,
      const VideoRenderType videoRenderType = kRenderDefault);

  /*
   * Destroy a video render module object
   *
   * module - object to destroy
   */
  static void DestroyVideoRender(VideoRender* module);

  /*
   * Change the unique identifier of this object
   *
   * id - new unique identifier of this video render module object
   */
  virtual int32_t ChangeUniqueId(const int32_t id) = 0;

  virtual int32_t TimeUntilNextProcess() = 0;
  virtual int32_t Process() = 0;

  /**************************************************************************
   *
   *   Window functions
   *
   ***************************************************************************/

  /*
   * Get window for this renderer
   */
  virtual void* Window() = 0;

  /*
   * Change render window
   *
   * window - the new render window, assuming same type as originally created.
   */
  virtual int32_t ChangeWindow(void* window) = 0;

  /**************************************************************************
   *
   *   Incoming Streams
   *
   ***************************************************************************/

  /*
   * Add incoming render stream
   *
   * streamID - id of the stream to add
   * zOrder   - relative render order for the streams, 0 = on top
   * left     - position of the stream in the window, [0.0f, 1.0f]
   * top      - position of the stream in the window, [0.0f, 1.0f]
   * right    - position of the stream in the window, [0.0f, 1.0f]
   * bottom   - position of the stream in the window, [0.0f, 1.0f]
   *
   * Return   - callback class to use for delivering new frames to render.
   */
  virtual VideoRenderCallback* AddIncomingRenderStream(
      const uint32_t streamId, const uint32_t zOrder,
      const float left, const float top,
      const float right, const float bottom) = 0;

  /*
   * Delete incoming render stream
   *
   * streamID - id of the stream to delete
   */
  virtual int32_t DeleteIncomingRenderStream(const uint32_t streamId) = 0;

  /*
   * Add incoming render callback, used for external rendering
   *
   * streamID     - id of the stream the callback is used for
   * renderObject - the VideoRenderCallback to use for this stream, NULL to remove
   *
   * Return - callback class to use for delivering new frames to render.
   */
  virtual int32_t AddExternalRenderCallback(
      const uint32_t streamId, VideoRenderCallback* renderObject) = 0;

  /*
   * Get the properties for an incoming render stream
   *
   * streamID - [in] id of the stream to get properties for
   * zOrder   - [out] relative render order for the streams, 0 = on top
   * left     - [out] position of the stream in the window, [0.0f, 1.0f]
   * top      - [out] position of the stream in the window, [0.0f, 1.0f]
   * right    - [out] position of the stream in the window, [0.0f, 1.0f]
   * bottom   - [out] position of the stream in the window, [0.0f, 1.0f]
   */
  virtual int32_t GetIncomingRenderStreamProperties(
      const uint32_t streamId, uint32_t& zOrder,
      float& left, float& top, float& right, float& bottom) const = 0;

  /*
   * The incoming frame rate to the module, not the rate rendered in the window.
   */
  virtual uint32_t GetIncomingFrameRate(const uint32_t streamId) = 0;

  /*
   * Returns the number of incoming streams added to this render module
   */
  virtual uint32_t GetNumIncomingRenderStreams() const = 0;

  /*
   * Returns true if this render module has the streamId added, false otherwise.
   */
  virtual bool HasIncomingRenderStream(const uint32_t streamId) const = 0;

  /*
   * Registers a callback to get raw images in the same time as sent
   * to the renderer. To be used for external rendering.
   */
  virtual int32_t RegisterRawFrameCallback(
      const uint32_t streamId, VideoRenderCallback* callbackObj) = 0;

  /*
   * This method is useful to get last rendered frame for the stream specified
   */
  virtual int32_t GetLastRenderedFrame(
      const uint32_t streamId, I420VideoFrame& frame) const = 0;

  /**************************************************************************
   *
   *   Start/Stop
   *
   ***************************************************************************/

  /*
   * Starts rendering the specified stream
   */
  virtual int32_t StartRender(const uint32_t streamId) = 0;

  /*
   * Stops the renderer
   */
  virtual int32_t StopRender(const uint32_t streamId) = 0;

  /*
   * Resets the renderer
   * No streams are removed. The state should be as after AddStream was called.
   */
  virtual int32_t ResetRender() = 0;

  /**************************************************************************
   *
   *   Properties
   *
   ***************************************************************************/

  /*
   * Returns the preferred render video type
   */
  virtual RawVideoType PreferredVideoType() const = 0;

  /*
   * Returns true if the renderer is in fullscreen mode, otherwise false.
   */
  virtual bool IsFullScreen() = 0;

  /*
   * Gets screen resolution in pixels
   */
  virtual int32_t GetScreenResolution(uint32_t& screenWidth,
                                      uint32_t& screenHeight) const = 0;

  /*
   * Get the actual render rate for this stream. I.e rendered frame rate,
   * not frames delivered to the renderer.
   */
  virtual uint32_t RenderFrameRate(const uint32_t streamId) = 0;

  /*
   * Set cropping of incoming stream
   */
  virtual int32_t SetStreamCropping(
      const uint32_t streamId,
      const float left, const float top,
      const float right, const float bottom) = 0;

  /*
   * re-configure renderer
   */

  // Set the expected time needed by the graphics card or external renderer,
  // i.e. frames will be released for rendering |delay_ms| before set render
  // time in the video frame.
  virtual int32_t SetExpectedRenderDelay(uint32_t stream_id,
                                         int32_t delay_ms) = 0;

  virtual int32_t ConfigureRenderer(
      const uint32_t streamId, const unsigned int zOrder,
      const float left, const float top,
      const float right, const float bottom) = 0;

  virtual int32_t SetTransparentBackground(const bool enable) = 0;

  virtual int32_t FullScreenRender(void* window, const bool enable) = 0;

  virtual int32_t SetBitmap(
      const void* bitMap, const uint8_t pictureId, const void* colorKey,
      const float left, const float top,
      const float right, const float bottom) = 0;

  virtual int32_t SetText(
      const uint8_t textId, const uint8_t* text, const int32_t textLength,
      const uint32_t textColorRef, const uint32_t backgroundColorRef,
      const float left, const float top,
      const float right, const float bottom) = 0;

  /*
   * Set a start image. The image is rendered before the first image has been delivered
   */
  virtual int32_t SetStartImage(const uint32_t streamId,
                                const I420VideoFrame& videoFrame) = 0;

  /*
   * Set a timeout image. The image is rendered if no videoframe has been delivered
   */
  virtual int32_t SetTimeoutImage(const uint32_t streamId,
                                  const I420VideoFrame& videoFrame,
                                  const uint32_t timeout) = 0;

  virtual int32_t MirrorRenderStream(const int renderId,
                                     const bool enable,
                                     const bool mirrorXAxis,
                                     const bool mirrorYAxis) = 0;
};
```
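The header describes where each stream is placed as a rectangle of window fractions, each in [0.0f, 1.0f]. The sketch below shows one way such a normalized rectangle could map onto window pixels. It assumes plain linear scaling with each edge rounded separately. `PixelRect`, `Clamp01` and `ToPixelRect` are hypothetical helpers for illustration only. video_render performs this mapping internally, and its rounding may differ.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper types, not part of video_render.
struct PixelRect {
  int x;
  int y;
  int width;
  int height;
};

// Clamp a coordinate to the documented [0.0f, 1.0f] range.
inline float Clamp01(float v) { return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v); }

// Map a normalized (left, top, right, bottom) rectangle, as passed to
// AddIncomingRenderStream, onto a window of windowWidth x windowHeight pixels.
PixelRect ToPixelRect(float left, float top, float right, float bottom,
                      int windowWidth, int windowHeight) {
  assert(windowWidth > 0 && windowHeight > 0);
  // Round each edge separately, so two rectangles that share a boundary
  // value also share the same pixel edge.
  const int x0 = static_cast<int>(std::lround(Clamp01(left) * windowWidth));
  const int y0 = static_cast<int>(std::lround(Clamp01(top) * windowHeight));
  const int x1 = static_cast<int>(std::lround(Clamp01(right) * windowWidth));
  const int y1 = static_cast<int>(std::lround(Clamp01(bottom) * windowHeight));
  return PixelRect{x0, y0, x1 - x0, y1 - y0};
}
```

As an example, the test program listed later in this post places stream 1 of TestMultipleStreams at (0.55f, 0.0f, 1.0f, 0.45f). In a 352x288 window, this sketch puts that stream at x=194, y=0 with a size of 158x130 pixels. The 0.45/0.55 split leaves a 36-pixel gap between the left and right columns.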

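1. Before anything can be displayed, the module needs a pointer to the window it should draw into (for example, an `HWND` on Windows). This pointer is passed in through the following function: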

```cpp
static VideoRender* CreateVideoRender(
    const int32_t id,
    void* window,
    const bool fullscreen,
    const VideoRenderType videoRenderType = kRenderDefault);
```
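CreateVideoRender is paired with the static DestroyVideoRender. The window is passed as an untyped `void*`; the test program below casts an `HWND` or an X11 `Window` to it. Below is a minimal RAII sketch of the create/destroy pairing. It does not assume the real WebRTC headers are available, so `FakeRenderModule`, `RenderPtr` and `MakeRender` are hypothetical stand-ins that model only that pairing. With the real library, you would use `webrtc::VideoRender`, `VideoRender::CreateVideoRender` and `&VideoRender::DestroyVideoRender` instead.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>

// Hypothetical stand-in for webrtc::VideoRender so the sketch compiles on its
// own; only the static create/destroy pairing is modelled.
class FakeRenderModule {
 public:
  static int live_count;  // modules created but not yet destroyed

  static FakeRenderModule* Create(int32_t id, void* window, bool fullscreen) {
    if (window == nullptr) return nullptr;  // stand-in behaviour only
    ++live_count;
    return new FakeRenderModule(id, window, fullscreen);
  }

  static void Destroy(FakeRenderModule* module) {
    if (module == nullptr) return;
    --live_count;
    delete module;
  }

  int32_t id() const { return id_; }
  void* window() const { return window_; }
  bool fullscreen() const { return fullscreen_; }

 private:
  FakeRenderModule(int32_t id, void* window, bool fullscreen)
      : id_(id), window_(window), fullscreen_(fullscreen) {}

  int32_t id_;
  void* window_;
  bool fullscreen_;
};

int FakeRenderModule::live_count = 0;

// Owning pointer whose deleter is the factory's matching Destroy function.
using RenderPtr =
    std::unique_ptr<FakeRenderModule, void (*)(FakeRenderModule*)>;

RenderPtr MakeRender(int32_t id, void* window, bool fullscreen) {
  return RenderPtr(FakeRenderModule::Create(id, window, fullscreen),
                   &FakeRenderModule::Destroy);
}
```

The function-pointer deleter ensures the module is always released through its matching destroy function, including on early returns. This avoids a bare `delete` on an object that came from a factory.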

2. The following two functions start and stop rendering of a given stream:

```cpp
/*
 * Starts rendering the specified stream
 */
virtual int32_t StartRender(const uint32_t streamId) = 0;

/*
 * Stops the renderer
 */
virtual int32_t StopRender(const uint32_t streamId) = 0;
```
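Each test in the sample program below uses the same per-stream order: AddIncomingRenderStream, then StartRender, then frame delivery through the returned callback, then StopRender, and finally DeleteIncomingRenderStream. The sketch below encodes that order as a small state tracker so that out-of-order calls are caught. `StreamLifecycle` is hypothetical bookkeeping and not part of WebRTC. It is also deliberately strict; the real module may handle out-of-order calls differently.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical tracker (not part of WebRTC) for the per-stream call order:
//   Add -> Start -> Deliver... -> Stop -> Delete
// Methods return 0 on success and -1 on an out-of-order call, echoing the
// int32_t status codes of the VideoRender interface.
class StreamLifecycle {
 public:
  enum class State { kAdded, kRendering, kStopped };

  int32_t Add(uint32_t streamId) {
    if (streams_.count(streamId) != 0) return -1;  // already added
    streams_[streamId] = State::kAdded;
    return 0;
  }

  int32_t Start(uint32_t streamId) {
    auto it = streams_.find(streamId);
    if (it == streams_.end() || it->second == State::kRendering) return -1;
    it->second = State::kRendering;  // restarting a stopped stream is allowed
    return 0;
  }

  // Stricter than necessary: frames are accepted only while rendering.
  int32_t Deliver(uint32_t streamId) const {
    auto it = streams_.find(streamId);
    return (it != streams_.end() && it->second == State::kRendering) ? 0 : -1;
  }

  int32_t Stop(uint32_t streamId) {
    auto it = streams_.find(streamId);
    if (it == streams_.end() || it->second != State::kRendering) return -1;
    it->second = State::kStopped;
    return 0;
  }

  // A stream must not be deleted while it is still rendering.
  int32_t Delete(uint32_t streamId) {
    auto it = streams_.find(streamId);
    if (it == streams_.end() || it->second == State::kRendering) return -1;
    streams_.erase(it);
    return 0;
  }

  uint32_t NumStreams() const { return static_cast<uint32_t>(streams_.size()); }

 private:
  std::map<uint32_t, State> streams_;
};
```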

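In addition, WebRTC ships a sample program that exercises video_render, under `webrtc/modules/video_render/test/testAPI/`.

The sample covers direct C++ usage (mainly for Windows, with an X11 path for Linux as well), an Android version and iOS usage. The Android version contains no actual source, but you can write one by following the Windows calling pattern. Only the direct C++ program is listed below.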

The header file (`testAPI.h`):

```cpp
/*
 *  Copyright (c) 2011 The WebRTC project authors. All Rights Reserved.
 *
 *  Use of this source code is governed by a BSD-style license
 *  that can be found in the LICENSE file in the root of the source
 *  tree. An additional intellectual property rights grant can be found
 *  in the file PATENTS.  All contributing project authors may
 *  be found in the AUTHORS file in the root of the source tree.
 */

#ifndef WEBRTC_MODULES_VIDEO_RENDER_MAIN_TEST_TESTAPI_TESTAPI_H
#define WEBRTC_MODULES_VIDEO_RENDER_MAIN_TEST_TESTAPI_TESTAPI_H

#include "webrtc/modules/video_render/include/video_render_defines.h"

void RunVideoRenderTests(void* window, webrtc::VideoRenderType windowType);

#endif  // WEBRTC_MODULES_VIDEO_RENDER_MAIN_TEST_TESTAPI_TESTAPI_H
```

The source file:

```cpp
/*
 *  Copyright (c) 2012 The WebRTC project authors. All Rights Reserved.
 *
 *  Use of this source code is governed by a BSD-style license
 *  that can be found in the LICENSE file in the root of the source
 *  tree. An additional intellectual property rights grant can be found
 *  in the file PATENTS.  All contributing project authors may
 *  be found in the AUTHORS file in the root of the source tree.
 */

#include "webrtc/modules/video_render/test/testAPI/testAPI.h"

#include <assert.h>   // assert() is used on every platform
#include <stdio.h>
#include <string.h>   // memset()

#if defined(_WIN32)
#include <tchar.h>
#include <windows.h>
#include <fstream>
#include <iostream>
#include <string>
#include <ddraw.h>

#elif defined(WEBRTC_LINUX) && !defined(WEBRTC_ANDROID)

#include <X11/Xlib.h>
#include <X11/Xutil.h>
#include <iostream>
#include <sys/time.h>

#endif

#include "webrtc/common_types.h"
#include "webrtc/modules/interface/module_common_types.h"
#include "webrtc/modules/utility/interface/process_thread.h"
#include "webrtc/modules/video_render/include/video_render.h"
#include "webrtc/modules/video_render/include/video_render_defines.h"
#include "webrtc/system_wrappers/interface/sleep.h"
#include "webrtc/system_wrappers/interface/tick_util.h"
#include "webrtc/system_wrappers/interface/trace.h"

using namespace webrtc;

void GetTestVideoFrame(I420VideoFrame* frame, uint8_t startColor);
int TestSingleStream(VideoRender* renderModule);
int TestFullscreenStream(VideoRender* &renderModule,
                         void* window,
                         const VideoRenderType videoRenderType);
int TestBitmapText(VideoRender* renderModule);
int TestMultipleStreams(VideoRender* renderModule);
int TestExternalRender(VideoRender* renderModule);

#define TEST_FRAME_RATE 30
#define TEST_TIME_SECOND 5
#define TEST_FRAME_NUM (TEST_FRAME_RATE*TEST_TIME_SECOND)
#define TEST_STREAM0_START_COLOR 0
#define TEST_STREAM1_START_COLOR 64
#define TEST_STREAM2_START_COLOR 128
#define TEST_STREAM3_START_COLOR 192

#if defined(WEBRTC_LINUX)

#define GET_TIME_IN_MS timeGetTime()

unsigned long timeGetTime()
{
    struct timeval tv;
    struct timezone tz;
    unsigned long val;

    gettimeofday(&tv, &tz);
    val = tv.tv_sec * 1000 + tv.tv_usec / 1000;
    return (val);
}

#elif defined(WEBRTC_MAC)

#include <unistd.h>

#define GET_TIME_IN_MS timeGetTime()

unsigned long timeGetTime()
{
    return 0;
}

#else

#define GET_TIME_IN_MS ::timeGetTime()

#endif

using namespace std;

#if defined(_WIN32)

LRESULT CALLBACK WebRtcWinProc(HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam)
{
    switch (uMsg)
    {
        case WM_DESTROY:
            break;
        case WM_COMMAND:
            break;
    }
    return DefWindowProc(hWnd, uMsg, wParam, lParam);
}

int WebRtcCreateWindow(HWND &hwndMain, int winNum, int width, int height)
{
    HINSTANCE hinst = GetModuleHandle(0);
    WNDCLASSEX wcx;
    wcx.hInstance = hinst;
    wcx.lpszClassName = TEXT("VideoRenderTest");
    wcx.lpfnWndProc = (WNDPROC)WebRtcWinProc;
    wcx.style = CS_DBLCLKS;
    wcx.hIcon = LoadIcon(NULL, IDI_APPLICATION);
    wcx.hIconSm = LoadIcon(NULL, IDI_APPLICATION);
    wcx.hCursor = LoadCursor(NULL, IDC_ARROW);
    wcx.lpszMenuName = NULL;
    wcx.cbSize = sizeof(WNDCLASSEX);
    wcx.cbClsExtra = 0;
    wcx.cbWndExtra = 0;
    wcx.hbrBackground = GetSysColorBrush(COLOR_3DFACE);

    // Register our window class with the operating system.
    // If there is an error, report it and return a failure code.
    if (!RegisterClassEx(&wcx))
    {
        MessageBox(0, TEXT("Failed to register window class!"),
                   TEXT("Error!"), MB_OK | MB_ICONERROR);
        return -1;
    }

    // Create the main window.
    hwndMain = CreateWindowEx(
            0,                                // no extended styles
            TEXT("VideoRenderTest"),          // class name
            TEXT("VideoRenderTest Window"),   // window name
            WS_OVERLAPPED | WS_THICKFRAME,    // overlapped window
            800,                              // horizontal position
            0,                                // vertical position
            width,                            // width
            height,                           // height
            (HWND) NULL,                      // no parent or owner window
            (HMENU) NULL,                     // class menu used
            hinst,                            // instance handle
            NULL);                            // no window creation data

    if (!hwndMain)
        return -1;

    // Show the window using the flag specified by the program
    // that started the application, and send the application
    // a WM_PAINT message.
    ShowWindow(hwndMain, SW_SHOWDEFAULT);
    UpdateWindow(hwndMain);
    return 0;
}

#elif defined(WEBRTC_LINUX) && !defined(WEBRTC_ANDROID)

int WebRtcCreateWindow(Window *outWindow, Display **outDisplay, int winNum,
                       int width, int height)  // unsigned char* title, int titleLength)
{
    int screen, xpos = 10, ypos = 10;
    XEvent evnt;
    XSetWindowAttributes xswa;  // window attribute struct
    XVisualInfo vinfo;          // screen visual info struct
    unsigned long mask;         // attribute mask

    // get connection handle to xserver
    Display* _display = XOpenDisplay(NULL);

    // get screen number
    screen = DefaultScreen(_display);

    // put desired visual info for the screen in vinfo
    if (XMatchVisualInfo(_display, screen, 24, TrueColor, &vinfo) != 0)
    {
        //printf( "Screen visual info match!\n" );
    }

    // set window attributes
    xswa.colormap = XCreateColormap(_display, DefaultRootWindow(_display),
                                    vinfo.visual, AllocNone);
    xswa.event_mask = StructureNotifyMask | ExposureMask;
    xswa.background_pixel = 0;
    xswa.border_pixel = 0;

    // value mask for attributes
    mask = CWBackPixel | CWBorderPixel | CWColormap | CWEventMask;

    switch (winNum)
    {
        case 0:
            xpos = 200;
            ypos = 200;
            break;
        case 1:
            xpos = 300;
            ypos = 200;
            break;
        default:
            break;
    }

    // create a subwindow for parent (defroot)
    Window _window = XCreateWindow(_display, DefaultRootWindow(_display),
                                   xpos, ypos,
                                   width, height,
                                   0, vinfo.depth,
                                   InputOutput,
                                   vinfo.visual,
                                   mask, &xswa);

    // Set window name
    if (winNum == 0)
    {
        XStoreName(_display, _window, "VE MM Local Window");
        XSetIconName(_display, _window, "VE MM Local Window");
    }
    else if (winNum == 1)
    {
        XStoreName(_display, _window, "VE MM Remote Window");
        XSetIconName(_display, _window, "VE MM Remote Window");
    }

    // make x report events for mask
    XSelectInput(_display, _window, StructureNotifyMask);

    // map the window to the display
    XMapWindow(_display, _window);

    // wait for map event
    do
    {
        XNextEvent(_display, &evnt);
    }
    while (evnt.type != MapNotify || evnt.xmap.event != _window);

    *outWindow = _window;
    *outDisplay = _display;

    return 0;
}
#endif  // LINUX

// Note: Mac code is in testApi_mac.mm.

class MyRenderCallback: public VideoRenderCallback
{
public:
    MyRenderCallback() : _cnt(0) {}
    ~MyRenderCallback() {}

    // Counts every frame handed to the external renderer.
    virtual int32_t RenderFrame(const uint32_t streamId,
                                I420VideoFrame& videoFrame)
    {
        _cnt++;
        if (_cnt % 100 == 0)
        {
            printf("Render callback %d \n", _cnt);
        }
        return 0;
    }
    int32_t _cnt;
};

void GetTestVideoFrame(I420VideoFrame* frame, uint8_t startColor)
{
    // changing color
    // Note: |color| is static, so it is initialised from startColor only on
    // the first call and is then shared by every caller.
    static uint8_t color = startColor;

    memset(frame->buffer(kYPlane), color, frame->allocated_size(kYPlane));
    memset(frame->buffer(kUPlane), color, frame->allocated_size(kUPlane));
    memset(frame->buffer(kVPlane), color, frame->allocated_size(kVPlane));

    ++color;
}

int TestSingleStream(VideoRender* renderModule)
{
    int error = 0;
    // Add settings for a stream to render
    printf("Add stream 0 to entire window\n");
    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 =
        renderModule->AddIncomingRenderStream(streamId0, 0, 0.0f, 0.0f, 1.0f, 1.0f);
    assert(renderCallback0 != NULL);

#ifndef WEBRTC_INCLUDE_INTERNAL_VIDEO_RENDER
    MyRenderCallback externalRender;
    renderModule->AddExternalRenderCallback(streamId0, &externalRender);
#endif

    printf("Start render\n");
    error = renderModule->StartRender(streamId0);
    if (error != 0)
    {
        // TODO(phoglund): This test will not work if compiled in release mode.
        // This rather silly construct here is to avoid compilation errors when
        // compiling in release. Release => no asserts => unused 'error' variable.
        assert(false);
    }

    // Loop through an I420 file and render each frame
    const int width = 352;
    const int half_width = (width + 1) / 2;
    const int height = 288;

    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = 500;

    for (int i = 0; i < TEST_FRAME_NUM; i++)
    {
        GetTestVideoFrame(&videoFrame0, TEST_STREAM0_START_COLOR);
        // Render this frame with the specified delay
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);
        SleepMs(1000 / TEST_FRAME_RATE);
    }

    // Shut down
    printf("Closing...\n");
    error = renderModule->StopRender(streamId0);
    assert(error == 0);

    error = renderModule->DeleteIncomingRenderStream(streamId0);
    assert(error == 0);

    return 0;
}

int TestFullscreenStream(VideoRender* &renderModule,
                         void* window,
                         const VideoRenderType videoRenderType)
{
    VideoRender::DestroyVideoRender(renderModule);
    renderModule = VideoRender::CreateVideoRender(12345, window, true, videoRenderType);

    TestSingleStream(renderModule);

    VideoRender::DestroyVideoRender(renderModule);
    renderModule = VideoRender::CreateVideoRender(12345, window, false, videoRenderType);

    return 0;
}

int TestBitmapText(VideoRender* renderModule)
{
#if defined(_WIN32)
    int error = 0;
    // Add settings for a stream to render
    printf("Add stream 0 to entire window\n");
    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 =
        renderModule->AddIncomingRenderStream(streamId0, 0, 0.0f, 0.0f, 1.0f, 1.0f);
    assert(renderCallback0 != NULL);

    printf("Adding Bitmap\n");
    DDCOLORKEY ColorKey;  // black
    ColorKey.dwColorSpaceHighValue = RGB(0, 0, 0);
    ColorKey.dwColorSpaceLowValue = RGB(0, 0, 0);
    HBITMAP hbm = (HBITMAP)LoadImage(NULL,
                                     (LPCTSTR)_T("renderStartImage.bmp"),
                                     IMAGE_BITMAP, 0, 0, LR_LOADFROMFILE);
    renderModule->SetBitmap(hbm, 0, &ColorKey, 0.0f, 0.0f, 0.3f, 0.3f);

    printf("Adding Text\n");
    renderModule->SetText(1, (uint8_t*) "WebRtc Render Demo App", 20,
                          RGB(255, 0, 0), RGB(0, 0, 0),
                          0.25f, 0.1f, 1.0f, 1.0f);

    printf("Start render\n");
    error = renderModule->StartRender(streamId0);
    assert(error == 0);

    // Loop through an I420 file and render each frame
    const int width = 352;
    const int half_width = (width + 1) / 2;
    const int height = 288;

    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = 500;

    for (int i = 0; i < TEST_FRAME_NUM; i++)
    {
        GetTestVideoFrame(&videoFrame0, TEST_STREAM0_START_COLOR);
        // Render this frame with the specified delay
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);
        SleepMs(1000 / TEST_FRAME_RATE);
    }
    // Sleep and let all frames be rendered before closing
    SleepMs(renderDelayMs * 2);

    // Shut down
    printf("Closing...\n");
    ColorKey.dwColorSpaceHighValue = RGB(0, 0, 0);
    ColorKey.dwColorSpaceLowValue = RGB(0, 0, 0);
    renderModule->SetBitmap(NULL, 0, &ColorKey, 0.0f, 0.0f, 0.0f, 0.0f);
    renderModule->SetText(1, NULL, 20, RGB(255, 255, 255), RGB(0, 0, 0),
                          0.0f, 0.0f, 0.0f, 0.0f);

    error = renderModule->StopRender(streamId0);
    assert(error == 0);

    error = renderModule->DeleteIncomingRenderStream(streamId0);
    assert(error == 0);
#endif

    return 0;
}

int TestMultipleStreams(VideoRender* renderModule)
{
    // Add settings for a stream to render
    printf("Add stream 0\n");
    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 =
        renderModule->AddIncomingRenderStream(streamId0, 0, 0.0f, 0.0f, 0.45f, 0.45f);
    assert(renderCallback0 != NULL);
    printf("Add stream 1\n");
    const int streamId1 = 1;
    VideoRenderCallback* renderCallback1 =
        renderModule->AddIncomingRenderStream(streamId1, 0, 0.55f, 0.0f, 1.0f, 0.45f);
    assert(renderCallback1 != NULL);
    printf("Add stream 2\n");
    const int streamId2 = 2;
    VideoRenderCallback* renderCallback2 =
        renderModule->AddIncomingRenderStream(streamId2, 0, 0.0f, 0.55f, 0.45f, 1.0f);
    assert(renderCallback2 != NULL);
    printf("Add stream 3\n");
    const int streamId3 = 3;
    VideoRenderCallback* renderCallback3 =
        renderModule->AddIncomingRenderStream(streamId3, 0, 0.55f, 0.55f, 1.0f, 1.0f);
    assert(renderCallback3 != NULL);
    assert(renderModule->StartRender(streamId0) == 0);
    assert(renderModule->StartRender(streamId1) == 0);
    assert(renderModule->StartRender(streamId2) == 0);
    assert(renderModule->StartRender(streamId3) == 0);

    // Loop through an I420 file and render each frame
    const int width = 352;
    const int half_width = (width + 1) / 2;
    const int height = 288;

    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);
    I420VideoFrame videoFrame1;
    videoFrame1.CreateEmptyFrame(width, height, width, half_width, half_width);
    I420VideoFrame videoFrame2;
    videoFrame2.CreateEmptyFrame(width, height, width, half_width, half_width);
    I420VideoFrame videoFrame3;
    videoFrame3.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = 500;

    // Render frames with the specified delay.
    for (int i = 0; i < TEST_FRAME_NUM; i++)
    {
        GetTestVideoFrame(&videoFrame0, TEST_STREAM0_START_COLOR);
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);

        GetTestVideoFrame(&videoFrame1, TEST_STREAM1_START_COLOR);
        videoFrame1.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback1->RenderFrame(streamId1, videoFrame1);

        GetTestVideoFrame(&videoFrame2, TEST_STREAM2_START_COLOR);
        videoFrame2.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback2->RenderFrame(streamId2, videoFrame2);

        GetTestVideoFrame(&videoFrame3, TEST_STREAM3_START_COLOR);
        videoFrame3.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback3->RenderFrame(streamId3, videoFrame3);

        SleepMs(1000 / TEST_FRAME_RATE);
    }

    // Shut down
    printf("Closing...\n");
    assert(renderModule->StopRender(streamId0) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId0) == 0);
    assert(renderModule->StopRender(streamId1) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId1) == 0);
    assert(renderModule->StopRender(streamId2) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId2) == 0);
    assert(renderModule->StopRender(streamId3) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId3) == 0);

    return 0;
}

int TestExternalRender(VideoRender* renderModule)
{
    MyRenderCallback *externalRender = new MyRenderCallback();

    const int streamId0 = 0;
    VideoRenderCallback* renderCallback0 =
        renderModule->AddIncomingRenderStream(streamId0, 0, 0.0f, 0.0f, 1.0f, 1.0f);
    assert(renderCallback0 != NULL);
    assert(renderModule->AddExternalRenderCallback(streamId0, externalRender) == 0);

    assert(renderModule->StartRender(streamId0) == 0);

    const int width = 352;
    const int half_width = (width + 1) / 2;
    const int height = 288;
    I420VideoFrame videoFrame0;
    videoFrame0.CreateEmptyFrame(width, height, width, half_width, half_width);

    const uint32_t renderDelayMs = 500;
    int frameCount = TEST_FRAME_NUM;
    for (int i = 0; i < frameCount; i++)
    {
        videoFrame0.set_render_time_ms(TickTime::MillisecondTimestamp() + renderDelayMs);
        renderCallback0->RenderFrame(streamId0, videoFrame0);
        SleepMs(33);
    }

    // Sleep and let all frames be rendered before closing
    SleepMs(2 * renderDelayMs);

    assert(renderModule->StopRender(streamId0) == 0);
    assert(renderModule->DeleteIncomingRenderStream(streamId0) == 0);
    assert(frameCount == externalRender->_cnt);

    delete externalRender;
    externalRender = NULL;

    return 0;
}

void RunVideoRenderTests(void* window, VideoRenderType windowType)
{
#ifndef WEBRTC_INCLUDE_INTERNAL_VIDEO_RENDER
    windowType = kRenderExternal;
#endif

    int myId = 12345;

    // Create the render module
    printf("Create render module\n");
    VideoRender* renderModule = NULL;
    renderModule = VideoRender::CreateVideoRender(myId, window, false, windowType);
    assert(renderModule != NULL);

    // ##### Test single stream rendering ####
    printf("#### TestSingleStream ####\n");
    if (TestSingleStream(renderModule) != 0)
    {
        printf("TestSingleStream failed\n");
    }

    // ##### Test fullscreen rendering ####
    printf("#### TestFullscreenStream ####\n");
    if (TestFullscreenStream(renderModule, window, windowType) != 0)
    {
        printf("TestFullscreenStream failed\n");
    }

    // ##### Test bitmap and text ####
    printf("#### TestBitmapText ####\n");
    if (TestBitmapText(renderModule) != 0)
    {
        printf("TestBitmapText failed\n");
    }

    // ##### Test multiple streams ####
    printf("#### TestMultipleStreams ####\n");
    if (TestMultipleStreams(renderModule) != 0)
    {
        printf("TestMultipleStreams failed\n");
    }

    // ##### Test external render ####
    printf("#### TestExternalRender ####\n");
    if (TestExternalRender(renderModule) != 0)
    {
        printf("TestExternalRender failed\n");
    }

    // Release the module through the factory's matching destroy function.
    VideoRender::DestroyVideoRender(renderModule);
    renderModule = NULL;

    printf("VideoRender unit tests passed.\n");
}

// Note: The Mac main is implemented in testApi_mac.mm.
#if defined(_WIN32)
int _tmain(int argc, _TCHAR* argv[])
#elif defined(WEBRTC_LINUX) && !defined(WEBRTC_ANDROID)
int main(int argc, char* argv[])
#endif
#if !defined(WEBRTC_MAC) && !defined(WEBRTC_ANDROID)
{
    // Create a window for testing.
    void* window = NULL;
#if defined (_WIN32)
    HWND testHwnd;
    WebRtcCreateWindow(testHwnd, 0, 352, 288);
    window = (void*)testHwnd;
    VideoRenderType windowType = kRenderWindows;
#elif defined(WEBRTC_LINUX)
    Window testWindow;
    Display* display;
    WebRtcCreateWindow(&testWindow, &display, 0, 352, 288);
    VideoRenderType windowType = kRenderX11;
    window = (void*)testWindow;
#endif  // WEBRTC_LINUX

    RunVideoRenderTests(window, windowType);
    return 0;
}
#endif  // !WEBRTC_MAC
```
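The tests create 352x288 frames with `CreateEmptyFrame(width, height, width, half_width, half_width)`, where `half_width = (width + 1) / 2`. The sketch below computes the resulting plane sizes. It assumes tightly packed I420 planes (stride equal to plane width) with chroma subsampled 2x2 and rounded up. `I420Sizes` and `ComputeI420Sizes` are hypothetical names. The real `I420VideoFrame::allocated_size()` may report larger values if the buffers are padded.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: byte sizes of the Y, U and V planes of a tightly
// packed I420 frame (no row padding).
struct I420Sizes {
  std::size_t y;
  std::size_t u;
  std::size_t v;
  std::size_t total() const { return y + u + v; }
};

I420Sizes ComputeI420Sizes(int width, int height) {
  assert(width > 0 && height > 0);
  // Chroma is subsampled by 2 in both directions, rounding odd sizes up,
  // matching half_width = (width + 1) / 2 in the test program.
  const std::size_t half_width = static_cast<std::size_t>((width + 1) / 2);
  const std::size_t half_height = static_cast<std::size_t>((height + 1) / 2);
  const std::size_t luma =
      static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
  const std::size_t chroma = half_width * half_height;
  return I420Sizes{luma, chroma, chroma};
}
```

For the test's 352x288 frames, this gives 101376 bytes of Y and 25344 bytes each of U and V. That is 152064 bytes per frame, or 1.5 bytes per pixel.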


Original post: http://www.cnblogs.com/qazwsxwtc/p/5415419.html