
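Live video streaming is very popular right now, so the framework used to decode video matters a great deal when building a streaming app. There are quite a few such frameworks; this post covers how to integrate and use FFmpeg to build a video player.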

Original post: http://bbs.520it.com/forum.php?mod=viewthread&tid=707&page=1&extra=#pid3821

1. How this player works:

FFmpeg decodes the video into individual frame images, and each frame is then displayed at a fixed time interval.
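To make "display each frame at a fixed interval" concrete, here is a minimal C sketch of the timing arithmetic. The helper names `frame_interval` and `frame_time` are hypothetical and not part of FFmpeg; the 30 fps fallback mirrors what the implementation later in this post does when the stream reports no frame rate.

```c
/* Hypothetical helper: seconds between two consecutive frames.
 * Falls back to 30 fps when the frame rate is unknown (<= 0). */
static double frame_interval(double fps) {
    if (fps <= 0.0) {
        fps = 30.0;
    }
    return 1.0 / fps;
}

/* Hypothetical helper: time in seconds at which frame `index`
 * (counting from 0) should be shown. */
static double frame_time(long index, double fps) {
    return (double)index * frame_interval(fps);
}
```

For a 25 fps video, `frame_interval(25.0)` is about 0.04 seconds, which is the kind of value the player passes to its timer.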

2. How to integrate FFmpeg

Download the build script: FFmpeg script

Build FFmpeg by following the README at the link above.

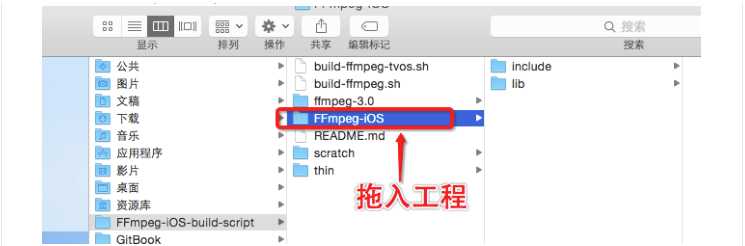

Integrate it into a project: create a new project, then import the compiled static libraries and header files into it.

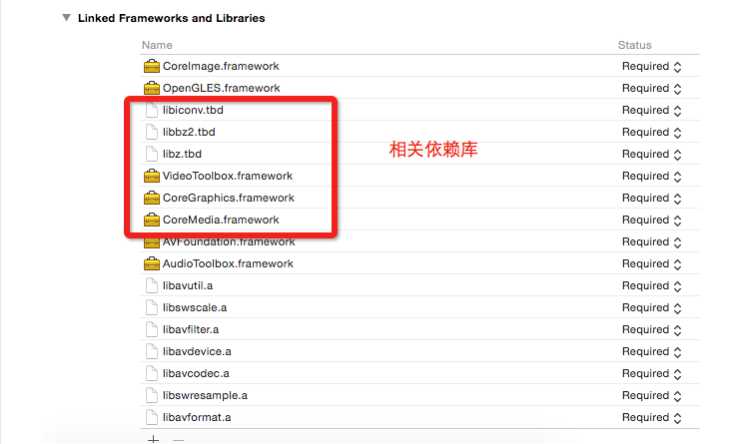

Import the dependent libraries

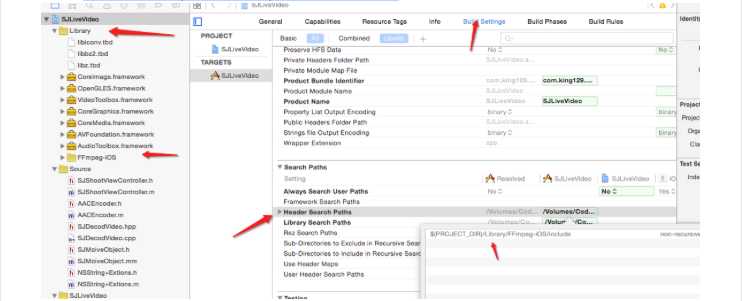

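Set the header search path. The path must be correct, otherwise the headers will not be found.

Press Command + B first to make sure the project builds successfully.

Create a new Objective-C file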

```objc
//
//  SJMoiveObject.h
//  SJLiveVideo
//
//  Created by king on 16/6/16.
//  Copyright © 2016 king. All rights reserved.
//

#import "Common.h"
#import <UIKit/UIKit.h>
#import "NSString+Extions.h"
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>

@interface SJMoiveObject : NSObject

/* The most recently decoded frame as a UIImage */
@property (nonatomic, strong, readonly) UIImage *currentImage;

/* Width and height of the source video frame */
@property (nonatomic, assign, readonly) int sourceWidth, sourceHeight;

/* Output image size. Defaults to the source size. */
@property (nonatomic, assign) int outputWidth, outputHeight;

/* Duration of the video, in seconds */
@property (nonatomic, assign, readonly) double duration;

/* Current playback position, in seconds */
@property (nonatomic, assign, readonly) double currentTime;

/* Frame rate of the video */
@property (nonatomic, assign, readonly) double fps;

/* Initialize with the path of the video. */
- (instancetype)initWithVideo:(NSString *)moviePath;

/* Switch to another video resource */
- (void)replaceTheResources:(NSString *)moviePath;

/* Replay the current video */
- (void)redialPaly;

/* Read the next frame from the video stream. Returns NO if no frame was read (end of video). */
- (BOOL)stepFrame;

/* Seek to the keyframe nearest to the given time */
- (void)seekTime:(double)seconds;

@end
```

Now implement the API

```objc
//
//  SJMoiveObject.m
//  SJLiveVideo
//
//  Created by king on 16/6/16.
//  Copyright © 2016 king. All rights reserved.
//

#import "SJMoiveObject.h"

@interface SJMoiveObject ()
@property (nonatomic, copy) NSString *cruutenPath;
@end

@implementation SJMoiveObject
{
    AVFormatContext *SJFormatCtx;
    AVCodecContext  *SJCodecCtx;
    AVFrame         *SJFrame;
    AVStream        *stream;
    AVPacket        packet;
    AVPicture       picture;
    int             videoStream;
    double          fps;
    BOOL            isReleaseResources;
}

#pragma mark ------------------------------------
#pragma mark  Initialization
- (instancetype)initWithVideo:(NSString *)moviePath {
    if (!(self = [super init])) return nil;
    if ([self initializeResources:[moviePath UTF8String]]) {
        self.cruutenPath = [moviePath copy];
        return self;
    } else {
        return nil;
    }
}

- (BOOL)initializeResources:(const char *)filePath {
    isReleaseResources = NO;
    AVCodec *pCodec;
    // Register all codecs and formats, and initialize networking
    avcodec_register_all();
    av_register_all();
    avformat_network_init();
    // Open the video file
    if (avformat_open_input(&SJFormatCtx, filePath, NULL, NULL) != 0) {
        SJLog(@"Failed to open the file");
        goto initError;
    }
    // Read the stream information
    if (avformat_find_stream_info(SJFormatCtx, NULL) < 0) {
        SJLog(@"Failed to read the stream information");
        goto initError;
    }
    // Find the best video stream
    if ((videoStream = av_find_best_stream(SJFormatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, &pCodec, 0)) < 0) {
        SJLog(@"No video stream found");
        goto initError;
    }
    // Get a pointer to the codec context of the video stream
    stream = SJFormatCtx->streams[videoStream];
    SJCodecCtx = stream->codec;
#if DEBUG
    // Print detailed information about the video stream
    av_dump_format(SJFormatCtx, videoStream, filePath, 0);
#endif
    // Use the stream's average frame rate, falling back to 30 fps
    if (stream->avg_frame_rate.den && stream->avg_frame_rate.num) {
        fps = av_q2d(stream->avg_frame_rate);
    } else {
        fps = 30;
    }
    // Find the decoder
    pCodec = avcodec_find_decoder(SJCodecCtx->codec_id);
    if (pCodec == NULL) {
        SJLog(@"No decoder found");
        goto initError;
    }
    // Open the decoder
    if (avcodec_open2(SJCodecCtx, pCodec, NULL) < 0) {
        SJLog(@"Failed to open the decoder");
        goto initError;
    }
    // Allocate the video frame
    SJFrame = av_frame_alloc();
    _outputWidth = SJCodecCtx->width;
    _outputHeight = SJCodecCtx->height;
    return YES;
initError:
    return NO;
}

- (void)seekTime:(double)seconds {
    // Convert seconds into a timestamp expressed in the stream's time_base units
    AVRational timeBase = SJFormatCtx->streams[videoStream]->time_base;
    int64_t targetFrame = (int64_t)((double)timeBase.den / timeBase.num * seconds);
    avformat_seek_file(SJFormatCtx, videoStream, 0, targetFrame, targetFrame, AVSEEK_FLAG_FRAME);
    avcodec_flush_buffers(SJCodecCtx);
}

- (BOOL)stepFrame {
    int frameFinished = 0;
    // Keep reading packets until one complete video frame has been decoded
    while (!frameFinished && av_read_frame(SJFormatCtx, &packet) >= 0) {
        if (packet.stream_index == videoStream) {
            avcodec_decode_video2(SJCodecCtx, SJFrame, &frameFinished, &packet);
        }
    }
    // No frame decoded means the end of the video: release the resources
    if (frameFinished == 0 && isReleaseResources == NO) {
        [self releaseResources];
    }
    return frameFinished != 0;
}

- (void)replaceTheResources:(NSString *)moviePath {
    if (!isReleaseResources) {
        [self releaseResources];
    }
    self.cruutenPath = [moviePath copy];
    [self initializeResources:[moviePath UTF8String]];
}

- (void)redialPaly {
    [self initializeResources:[self.cruutenPath UTF8String]];
}

#pragma mark ------------------------------------
#pragma mark  Property accessors
- (void)setOutputWidth:(int)newValue {
    if (_outputWidth == newValue) return;
    _outputWidth = newValue;
}

- (void)setOutputHeight:(int)newValue {
    if (_outputHeight == newValue) return;
    _outputHeight = newValue;
}

- (UIImage *)currentImage {
    if (!SJFrame->data[0]) return nil;
    return [self imageFromAVPicture];
}

- (double)duration {
    return (double)SJFormatCtx->duration / AV_TIME_BASE;
}

- (double)currentTime {
    // Convert the pts of the last packet read from time_base units into seconds
    AVRational timeBase = SJFormatCtx->streams[videoStream]->time_base;
    return packet.pts * (double)timeBase.num / timeBase.den;
}

- (int)sourceWidth {
    return SJCodecCtx->width;
}

- (int)sourceHeight {
    return SJCodecCtx->height;
}

- (double)fps {
    return fps;
}

#pragma mark --------------------------
#pragma mark - Internal methods
- (UIImage *)imageFromAVPicture {
    // Reallocate the RGB buffer for the current output size
    avpicture_free(&picture);
    avpicture_alloc(&picture, AV_PIX_FMT_RGB24, _outputWidth, _outputHeight);
    // Convert (and scale) the decoded frame to RGB24; the source is treated as YUV420P
    struct SwsContext *imgConvertCtx = sws_getContext(SJFrame->width,
                                                      SJFrame->height,
                                                      AV_PIX_FMT_YUV420P,
                                                      _outputWidth,
                                                      _outputHeight,
                                                      AV_PIX_FMT_RGB24,
                                                      SWS_FAST_BILINEAR,
                                                      NULL,
                                                      NULL,
                                                      NULL);
    if (imgConvertCtx == nil) return nil;
    sws_scale(imgConvertCtx,
              SJFrame->data,
              SJFrame->linesize,
              0,
              SJFrame->height,
              picture.data,
              picture.linesize);
    sws_freeContext(imgConvertCtx);

    // Wrap the RGB data in a CGImage, then in a UIImage
    CGBitmapInfo bitmapInfo = kCGBitmapByteOrderDefault;
    CFDataRef data = CFDataCreate(kCFAllocatorDefault,
                                  picture.data[0],
                                  picture.linesize[0] * _outputHeight);
    CGDataProviderRef provider = CGDataProviderCreateWithCFData(data);
    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
    CGImageRef cgImage = CGImageCreate(_outputWidth,
                                       _outputHeight,
                                       8,
                                       24,
                                       picture.linesize[0],
                                       colorSpace,
                                       bitmapInfo,
                                       provider,
                                       NULL,
                                       NO,
                                       kCGRenderingIntentDefault);
    UIImage *image = [UIImage imageWithCGImage:cgImage];
    CGImageRelease(cgImage);
    CGColorSpaceRelease(colorSpace);
    CGDataProviderRelease(provider);
    CFRelease(data);
    return image;
}

#pragma mark --------------------------
#pragma mark - Releasing resources
- (void)releaseResources {
    SJLog(@"Releasing resources");
    SJLogFunc
    isReleaseResources = YES;
    // Free the RGB picture
    avpicture_free(&picture);
    // Free the packet
    av_packet_unref(&packet);
    // Free the YUV frame
    av_free(SJFrame);
    // Close the decoder
    if (SJCodecCtx) avcodec_close(SJCodecCtx);
    // Close the file
    if (SJFormatCtx) avformat_close_input(&SJFormatCtx);
    avformat_network_deinit();
}

@end
```
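The two conversions in `seekTime:` and `currentTime` are easy to mix up, so here they are in isolation as a small C sketch. The `Rational` struct is a stand-in for FFmpeg's `AVRational` so the snippet compiles without FFmpeg, and the function names are hypothetical; only the arithmetic is taken from the code above.

```c
#include <stdint.h>

/* Stand-in for FFmpeg's AVRational (an assumption made so that the
 * sketch builds without the FFmpeg headers). */
typedef struct {
    int num;
    int den;
} Rational;

/* Seconds -> timestamp in time_base units, the same arithmetic
 * seekTime: uses to compute its seek target. */
static int64_t seconds_to_timestamp(double seconds, Rational time_base) {
    return (int64_t)((double)time_base.den / time_base.num * seconds);
}

/* Timestamp in time_base units -> seconds, the same arithmetic
 * currentTime applies to packet.pts. */
static double timestamp_to_seconds(int64_t pts, Rational time_base) {
    return pts * (double)time_base.num / time_base.den;
}
```

With a time base of 1/90000, for example, 2.5 seconds maps to the timestamp 225000, and converting 225000 back gives 2.5 seconds.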

For convenience, drag a UIImageView and the buttons onto the storyboard and connect their outlets and actions.

```objc
//
//  ViewController.m
//  SJLiveVideo
//
//  Created by king on 16/6/14.
//  Copyright © 2016 king. All rights reserved.
//

#import "ViewController.h"
#import "SJMoiveObject.h"
#import <AVFoundation/AVFoundation.h>
#import "SJAudioObject.h"
#import "SJAudioQueuPlay.h"

// Linear interpolation: weight C goes to B, (1 - C) goes to A
#define LERP(A,B,C) ((A)*(1.0-(C))+(B)*(C))

@interface ViewController ()
@property (weak, nonatomic) IBOutlet UIImageView *ImageView;
@property (weak, nonatomic) IBOutlet UILabel *fps;
@property (weak, nonatomic) IBOutlet UIButton *playBtn;
@property (weak, nonatomic) IBOutlet UIButton *TimerBtn;
@property (weak, nonatomic) IBOutlet UILabel *TimerLabel;
@property (nonatomic, strong) SJMoiveObject *video;
@property (nonatomic, strong) SJAudioObject *audio;
@property (nonatomic, strong) SJAudioQueuPlay *audioPlay;
@property (nonatomic, assign) float lastFrameTime;
@end

@implementation ViewController

@synthesize ImageView, fps, playBtn, video;

- (void)viewDidLoad {
    [super viewDidLoad];

    self.video = [[SJMoiveObject alloc] initWithVideo:[NSString bundlePath:@"Dalshabet.mp4"]];
//    self.video = [[SJMoiveObject alloc] initWithVideo:@"/Users/king/Desktop/Stellar.mp4"];
//    self.video = [[SJMoiveObject alloc] initWithVideo:@"/Users/king/Downloads/Worth it - Fifth Harmony ft.Kid Ink - May J Lee Choreography.mp4"];
//    self.video = [[SJMoiveObject alloc] initWithVideo:@"/Users/king/Downloads/4K.mp4"];
//    self.video = [[SJMoiveObject alloc] initWithVideo:@"http://wvideo.spriteapp.cn/video/2016/0328/56f8ec01d9bfe_wpd.mp4"];
//    video.outputWidth = 800;
//    video.outputHeight = 600;
    self.audio = [[SJAudioObject alloc] initWithVideo:@"/Users/king/Desktop/Stellar.mp4"];
    NSLog(@"video duration: %f", video.duration);
    NSLog(@"source video size: %d x %d", video.sourceWidth, video.sourceHeight);
    NSLog(@"output video size: %d x %d", video.outputWidth, video.outputHeight);
//
//    [self.audio seekTime:0.0];
//    SJLog(@"%f", [self.audio duration])
//    AVPacket *packet = [self.audio readPacket];
//    SJLog(@"%ld", [self.audio decode])
    int tns, thh, tmm, tss;
    tns = video.duration;
    thh = tns / 3600;
    tmm = (tns % 3600) / 60;
    tss = tns % 60;

//    NSLog(@"fps --> %.2f", video.fps);
////    [ImageView setTransform:CGAffineTransformMakeRotation(M_PI)];
//    NSLog(@"%02d:%02d:%02d",thh,tmm,tss);
}

- (IBAction)PlayClick:(UIButton *)sender {
    [playBtn setEnabled:NO];
    _lastFrameTime = -1;

    // seek to 0.0 seconds
    [video seekTime:0.0];

    // Fire once per frame interval and display the next decoded frame
    [NSTimer scheduledTimerWithTimeInterval:1 / video.fps
                                     target:self
                                   selector:@selector(displayNextFrame:)
                                   userInfo:nil
                                    repeats:YES];
}

- (IBAction)TimerCilick:(id)sender {
//    NSLog(@"current time: %f s",video.currentTime);
//    [video seekTime:150.0];
//    [video replaceTheResources:@"/Users/king/Desktop/Stellar.mp4"];
    if (playBtn.enabled) {
        [video redialPaly];
        [self PlayClick:playBtn];
    }
}

- (void)displayNextFrame:(NSTimer *)timer {
    NSTimeInterval startTime = [NSDate timeIntervalSinceReferenceDate];
//    self.TimerLabel.text = [NSString stringWithFormat:@"%f s",video.currentTime];
    self.TimerLabel.text = [self dealTime:video.currentTime];
    // Stop the timer when there are no more frames
    if (![video stepFrame]) {
        [timer invalidate];
        [playBtn setEnabled:YES];
        return;
    }
    ImageView.image = video.currentImage;
    // Estimate the achievable fps from the time spent on this frame, then smooth it
    float frameTime = 1.0 / ([NSDate timeIntervalSinceReferenceDate] - startTime);
    if (_lastFrameTime < 0) {
        _lastFrameTime = frameTime;
    } else {
        _lastFrameTime = LERP(frameTime, _lastFrameTime, 0.8);
    }
    [fps setText:[NSString stringWithFormat:@"fps %.0f", _lastFrameTime]];
}

// Format a time in seconds as HH:MM:SS
- (NSString *)dealTime:(double)time {
    int tns, thh, tmm, tss;
    tns = time;
    thh = tns / 3600;
    tmm = (tns % 3600) / 60;
    tss = tns % 60;

//    [ImageView setTransform:CGAffineTransformMakeRotation(M_PI)];
    return [NSString stringWithFormat:@"%02d:%02d:%02d", thh, tmm, tss];
}

@end
```
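The fps label and the time label both rely on a little arithmetic that is worth seeing on its own. The C sketch below restates the `LERP` smoothing from `displayNextFrame:` and the HH:MM:SS formatting from `dealTime:`; the function names `lerp`, `smooth_fps` and `format_time` are hypothetical stand-ins, not part of the project.

```c
#include <stdio.h>

/* Same blend as the LERP macro: weight c goes to b, (1 - c) goes to a. */
static double lerp(double a, double b, double c) {
    return a * (1.0 - c) + b * c;
}

/* Smoothing step for the fps label: the first sample is taken as is
 * (last < 0 marks "no previous value"); afterwards 80% of the previous
 * value is kept and 20% of the new sample is mixed in. */
static double smooth_fps(double last, double sample) {
    if (last < 0.0) {
        return sample;
    }
    return lerp(sample, last, 0.8);
}

/* Format a time in seconds as HH:MM:SS into buf, truncating the
 * fractional part the way dealTime: does. */
static void format_time(double seconds, char *buf, size_t size) {
    int total = (int)seconds;
    snprintf(buf, size, "%02d:%02d:%02d",
             total / 3600, (total % 3600) / 60, total % 60);
}
```

Because of the 0.8 weight, a single slow frame only nudges the displayed value, which keeps the label from flickering.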

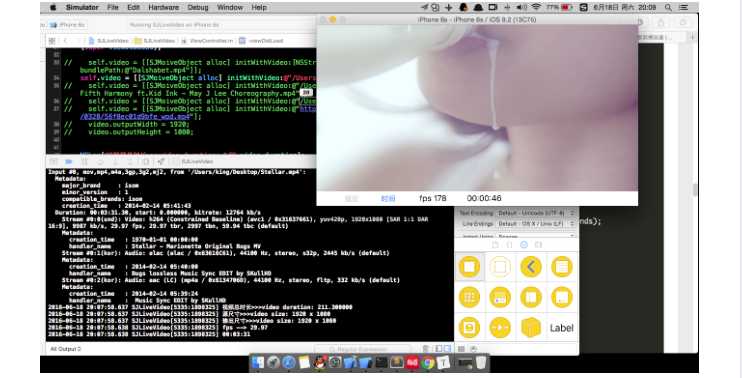

Run the app and tap Play.


Original article: http://www.cnblogs.com/XYQ-208910/p/5651166.html