1. struct task_struct

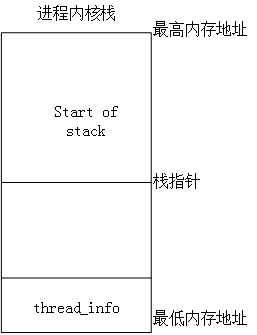

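For every process the operating system allocates a kernel stack, a memory region of 4 KB or 8 KB. The thread_info structure stored in this region points to the process's task_struct, and task_struct holds the complete description of a running program: the files it has opened, its address space, its pending signals, and its state.

Process descriptor: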

    struct thread_info {
        struct task_struct *task;           /* main task structure */
        unsigned long flags;
        struct exec_domain *exec_domain;    /* execution domain */
        int preempt_count;                  /* 0 => preemptable, <0 => BUG */
        __u32 cpu;                          /* should always be 0 on m68k */
        struct restart_block restart_block;
    };
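Because the kernel stack is a fixed-size, size-aligned region, the base of that region can be recovered from any address inside it by masking off the low bits. On architectures that place thread_info at that base (32-bit x86 in kernels of this era is one example), this is how the current thread_info is found from the stack pointer. The sketch below only illustrates the arithmetic in user space; `SKETCH_THREAD_SIZE` and `sketch_thread_info_base` are hypothetical names, and the 8 KB size is an assumption.

```c
#include <stdint.h>

/* Hypothetical stand-in for the kernel's THREAD_SIZE; 8 KB is assumed here. */
#define SKETCH_THREAD_SIZE 8192UL

/*
 * Round an address inside a kernel stack down to the base of the
 * SKETCH_THREAD_SIZE-aligned region that contains it. On architectures
 * that keep thread_info at the base of the stack, this is its address.
 */
uintptr_t sketch_thread_info_base(uintptr_t sp)
{
    return sp & ~((uintptr_t)SKETCH_THREAD_SIZE - 1);
}
```

Every address within the same 8 KB region maps to the same base, so no per-CPU lookup table is needed to find the running task.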

    struct task_struct {
        volatile long state;    /* -1 unrunnable, 0 runnable, >0 stopped */
        void *stack;
        atomic_t usage;
        unsigned int flags;     /* per process flags, defined below */
        unsigned int ptrace;

        int lock_depth;         /* BKL lock depth */

    #ifdef CONFIG_SMP
    #ifdef __ARCH_WANT_UNLOCKED_CTXSW
        int oncpu;
    #endif
    #endif
        int load_weight;        /* for niceness load balancing purposes */
        int prio, static_prio, normal_prio;
        struct list_head run_list;
        struct prio_array *array;

        unsigned short ioprio;
    #ifdef CONFIG_BLK_DEV_IO_TRACE
        unsigned int btrace_seq;
    #endif
        unsigned long sleep_avg;
        unsigned long long timestamp, last_ran;
        unsigned long long sched_time; /* sched_clock time spent running */
        enum sleep_type sleep_type;

        unsigned int policy;
        cpumask_t cpus_allowed;
        unsigned int time_slice, first_time_slice;

    #if defined(CONFIG_SCHEDSTATS) || defined(CONFIG_TASK_DELAY_ACCT)
        struct sched_info sched_info;
    #endif

        struct list_head tasks;
        /*
         * ptrace_list/ptrace_children forms the list of my children
         * that were stolen by a ptracer.
         */
        struct list_head ptrace_children;
        struct list_head ptrace_list;

        struct mm_struct *mm, *active_mm;

        /* task state */
        struct linux_binfmt *binfmt;
        int exit_state;
        int exit_code, exit_signal;
        int pdeath_signal;  /* The signal sent when the parent dies */
        /* ??? */
        unsigned int personality;
        unsigned did_exec:1;
        pid_t pid;
        pid_t tgid;

    #ifdef CONFIG_CC_STACKPROTECTOR
        /* Canary value for the -fstack-protector gcc feature */
        unsigned long stack_canary;
    #endif
        /*
         * pointers to (original) parent process, youngest child, younger sibling,
         * older sibling, respectively.  (p->father can be replaced with
         * p->parent->pid)
         */
        struct task_struct *real_parent; /* real parent process (when being debugged) */
        struct task_struct *parent;      /* parent process */
        /*
         * children/sibling forms the list of my children plus the
         * tasks I'm ptracing.
         */
        struct list_head children;       /* list of my children */
        struct list_head sibling;        /* linkage in my parent's children list */
        struct task_struct *group_leader; /* threadgroup leader */

        /* PID/PID hash table linkage. */
        struct pid_link pids[PIDTYPE_MAX];
        struct list_head thread_group;

        struct completion *vfork_done;   /* for vfork() */
        int __user *set_child_tid;       /* CLONE_CHILD_SETTID */
        int __user *clear_child_tid;     /* CLONE_CHILD_CLEARTID */

        unsigned int rt_priority;
        cputime_t utime, stime;
        unsigned long nvcsw, nivcsw;     /* context switch counts */
        struct timespec start_time;
        /* mm fault and swap info: this can arguably be seen as either mm-specific or thread-specific */
        unsigned long min_flt, maj_flt;

        cputime_t it_prof_expires, it_virt_expires;
        unsigned long long it_sched_expires;
        struct list_head cpu_timers[3];

        /* process credentials */
        uid_t uid,euid,suid,fsuid;
        gid_t gid,egid,sgid,fsgid;
        struct group_info *group_info;
        kernel_cap_t cap_effective, cap_inheritable, cap_permitted;
        unsigned keep_capabilities:1;
        struct user_struct *user;
    #ifdef CONFIG_KEYS
        struct key *request_key_auth;   /* assumed request_key authority */
        struct key *thread_keyring;     /* keyring private to this thread */
        unsigned char jit_keyring;      /* default keyring to attach requested keys to */
    #endif
        /*
         * fpu_counter contains the number of consecutive context switches
         * that the FPU is used. If this is over a threshold, the lazy fpu
         * saving becomes unlazy to save the trap. This is an unsigned char
         * so that after 256 times the counter wraps and the behavior turns
         * lazy again; this to deal with bursty apps that only use FPU for
         * a short time
         */
        unsigned char fpu_counter;
        int oomkilladj; /* OOM kill score adjustment (bit shift). */
        char comm[TASK_COMM_LEN]; /* executable name excluding path
                                     - access with [gs]et_task_comm (which lock
                                       it with task_lock())
                                     - initialized normally by flush_old_exec */
        /* file system info */
        int link_count, total_link_count;
    #ifdef CONFIG_SYSVIPC
        /* ipc stuff */
        struct sysv_sem sysvsem;
    #endif
        /* CPU-specific state of this task */
        struct thread_struct thread;
        /* filesystem information */
        struct fs_struct *fs;
        /* open file information */
        struct files_struct *files;
        /* namespaces */
        struct nsproxy *nsproxy;
        /* signal handlers */
        struct signal_struct *signal;
        struct sighand_struct *sighand;

        sigset_t blocked, real_blocked;
        sigset_t saved_sigmask;     /* To be restored with TIF_RESTORE_SIGMASK */
        struct sigpending pending;

        unsigned long sas_ss_sp;
        size_t sas_ss_size;
        int (*notifier)(void *priv);
        void *notifier_data;
        sigset_t *notifier_mask;

        void *security;
        struct audit_context *audit_context;
        seccomp_t seccomp;

        /* Thread group tracking */
        u32 parent_exec_id;
        u32 self_exec_id;
        /* Protection of (de-)allocation: mm, files, fs, tty, keyrings */
        spinlock_t alloc_lock;

        /* Protection of the PI data structures: */
        spinlock_t pi_lock;

    #ifdef CONFIG_RT_MUTEXES
        /* PI waiters blocked on a rt_mutex held by this task */
        struct plist_head pi_waiters;
        /* Deadlock detection and priority inheritance handling */
        struct rt_mutex_waiter *pi_blocked_on;
    #endif

    #ifdef CONFIG_DEBUG_MUTEXES
        /* mutex deadlock detection */
        struct mutex_waiter *blocked_on;
    #endif
    #ifdef CONFIG_TRACE_IRQFLAGS
        unsigned int irq_events;
        int hardirqs_enabled;
        unsigned long hardirq_enable_ip;
        unsigned int hardirq_enable_event;
        unsigned long hardirq_disable_ip;
        unsigned int hardirq_disable_event;
        int softirqs_enabled;
        unsigned long softirq_disable_ip;
        unsigned int softirq_disable_event;
        unsigned long softirq_enable_ip;
        unsigned int softirq_enable_event;
        int hardirq_context;
        int softirq_context;
    #endif
    #ifdef CONFIG_LOCKDEP
    # define MAX_LOCK_DEPTH 30UL
        u64 curr_chain_key;
        int lockdep_depth;
        struct held_lock held_locks[MAX_LOCK_DEPTH];
        unsigned int lockdep_recursion;
    #endif

        /* journalling filesystem info */
        void *journal_info;

        /* stacked block device info */
        struct bio *bio_list, **bio_tail;

        /* VM state */
        struct reclaim_state *reclaim_state;

        struct backing_dev_info *backing_dev_info;

        struct io_context *io_context;

        unsigned long ptrace_message;
        siginfo_t *last_siginfo; /* For ptrace use. */
        /*
         * current io wait handle: wait queue entry to use for io waits
         * If this thread is processing aio, this points at the waitqueue
         * inside the currently handled kiocb. It may be NULL (i.e. default
         * to a stack based synchronous wait) if its doing sync IO.
         */
        wait_queue_t *io_wait;
    #ifdef CONFIG_TASK_XACCT
        /* i/o counters(bytes read/written, #syscalls */
        u64 rchar, wchar, syscr, syscw;
    #endif
        struct task_io_accounting ioac;
    #if defined(CONFIG_TASK_XACCT)
        u64 acct_rss_mem1;       /* accumulated rss usage */
        u64 acct_vm_mem1;        /* accumulated virtual memory usage */
        cputime_t acct_stimexpd; /* stime since last update */
    #endif
    #ifdef CONFIG_NUMA
        struct mempolicy *mempolicy;
        short il_next;
    #endif
    #ifdef CONFIG_CPUSETS
        struct cpuset *cpuset;
        nodemask_t mems_allowed;
        int cpuset_mems_generation;
        int cpuset_mem_spread_rotor;
    #endif
        struct robust_list_head __user *robust_list;
    #ifdef CONFIG_COMPAT
        struct compat_robust_list_head __user *compat_robust_list;
    #endif
        struct list_head pi_state_list;
        struct futex_pi_state *pi_state_cache;

        atomic_t fs_excl;   /* holding fs exclusive resources */
        struct rcu_head rcu;

        /*
         * cache last used pipe for splice
         */
        struct pipe_inode_info *splice_pipe;
    #ifdef CONFIG_TASK_DELAY_ACCT
        struct task_delay_info *delays;
    #endif
    #ifdef CONFIG_FAULT_INJECTION
        int make_it_fail;
    #endif
    };
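The `children` and `sibling` members of task_struct are embedded list nodes: a parent's `children` head is linked to each child's `sibling` node, and the enclosing structure is recovered from a node by subtracting the member offset. The following is a minimal user-space sketch of that pattern, not kernel code. All `sketch_` names are hypothetical stand-ins for the kernel's `list_head` helpers and `container_of`, and `struct sketch_task` keeps only the three fields needed here.

```c
#include <stddef.h>

/* Simplified stand-in for the kernel's circular doubly linked list node. */
struct sketch_list_head {
    struct sketch_list_head *next, *prev;
};

/* Recover the enclosing structure from a pointer to one of its members. */
#define sketch_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical, heavily reduced version of task_struct. */
struct sketch_task {
    int pid;
    struct sketch_list_head children; /* head of this task's child list */
    struct sketch_list_head sibling;  /* node in the parent's child list */
};

void sketch_task_init(struct sketch_task *t, int pid)
{
    t->pid = pid;
    t->children.next = t->children.prev = &t->children; /* empty list */
    t->sibling.next = t->sibling.prev = &t->sibling;
}

/* Append child->sibling to the tail of parent->children. */
void sketch_add_child(struct sketch_task *parent, struct sketch_task *child)
{
    struct sketch_list_head *head = &parent->children;
    struct sketch_list_head *node = &child->sibling;

    node->prev = head->prev;
    node->next = head;
    head->prev->next = node;
    head->prev = node;
}

/* Walk the circular list until we are back at the head. */
int sketch_count_children(const struct sketch_task *parent)
{
    int n = 0;
    const struct sketch_list_head *pos;

    for (pos = parent->children.next; pos != &parent->children; pos = pos->next)
        n++;
    return n;
}

/* Return the pid of the first child, or -1 if there is none. */
int sketch_first_child_pid(struct sketch_task *parent)
{
    if (parent->children.next == &parent->children)
        return -1;
    return sketch_container_of(parent->children.next,
                               struct sketch_task, sibling)->pid;
}
```

Embedding the node in the structure, rather than storing a pointer to the structure in a separate node, lets one task sit on several lists at once (`tasks`, `children`, `sibling`, `thread_group`) without extra allocations.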

thread_info->task_struct->struct mm_struct
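The chain above is a sequence of pointer dereferences: thread_info holds `task`, and task_struct holds `mm`. As a rough illustration, the sketch below follows that chain with reduced stand-in structures to compute the current heap size. The `mini_` types and `mini_heap_size` are hypothetical; the NULL check on `mm` reflects the fact that kernel threads have no user address space of their own.

```c
#include <stddef.h>

/* Hypothetical reduced versions of the three structures in the chain. */
struct mini_mm {
    unsigned long start_brk; /* start of the heap */
    unsigned long brk;       /* current end of the heap */
};

struct mini_task {
    struct mini_mm *mm;      /* NULL for a task without a user address space */
};

struct mini_thread_info {
    struct mini_task *task;
};

/* Follow thread_info -> task -> mm and return the heap size in bytes. */
unsigned long mini_heap_size(const struct mini_thread_info *ti)
{
    if (ti == NULL || ti->task == NULL || ti->task->mm == NULL)
        return 0;
    return ti->task->mm->brk - ti->task->mm->start_brk;
}
```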

    struct mm_struct {
        struct vm_area_struct * mmap;       /* list of VMAs */
        struct rb_root mm_rb;
        struct vm_area_struct * mmap_cache; /* last find_vma result */
        unsigned long (*get_unmapped_area) (struct file *filp,
                    unsigned long addr, unsigned long len,
                    unsigned long pgoff, unsigned long flags);
        void (*unmap_area) (struct mm_struct *mm, unsigned long addr);
        unsigned long mmap_base;            /* base of mmap area */
        unsigned long task_size;            /* size of task vm space */
        unsigned long cached_hole_size;     /* if non-zero, the largest hole below free_area_cache */
        unsigned long free_area_cache;      /* first hole of size cached_hole_size or larger */
        pgd_t * pgd;
        atomic_t mm_users;                  /* How many users with user space? */
        atomic_t mm_count;                  /* How many references to "struct mm_struct" (users count as 1) */
        int map_count;                      /* number of VMAs */
        struct rw_semaphore mmap_sem;
        spinlock_t page_table_lock;         /* Protects page tables and some counters */

        // the list formed by all mm_struct instances
        struct list_head mmlist;            /* List of maybe swapped mm's.  These are globally strung
                                             * together off init_mm.mmlist, and are protected
                                             * by mmlist_lock
                                             */

        /* Special counters, in some configurations protected by the
         * page_table_lock, in other configurations by being atomic.
         */
        mm_counter_t _file_rss;
        mm_counter_t _anon_rss;

        unsigned long hiwater_rss;  /* High-watermark of RSS usage */
        unsigned long hiwater_vm;   /* High-water virtual memory usage */

        unsigned long total_vm, locked_vm, shared_vm, exec_vm;
        unsigned long stack_vm, reserved_vm, def_flags, nr_ptes;
        unsigned long start_code, end_code, start_data, end_data; // start and end addresses of the process's code and data segments
        unsigned long start_brk, brk, start_stack; // start of the heap, end of the heap, start of the process stack
        unsigned long arg_start, arg_end, env_start, env_end; // start and end of the command-line arguments, start and end of the environment variables

        unsigned long saved_auxv[AT_VECTOR_SIZE]; /* for /proc/PID/auxv */

        cpumask_t cpu_vm_mask;

        /* Architecture-specific MM context */
        mm_context_t context;

        /* Swap token stuff */
        /*
         * Last value of global fault stamp as seen by this process.
         * In other words, this value gives an indication of how long
         * it has been since this task got the token.
         * Look at mm/thrash.c
         */
        unsigned int faultstamp;
        unsigned int token_priority;
        unsigned int last_interval;

        unsigned char dumpable:2;

        /* coredumping support */
        int core_waiters;
        struct completion *core_startup_done, core_done;

        /* aio bits */
        rwlock_t ioctx_list_lock;
        struct kioctx *ioctx_list;
    };

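A process's memory regions can contain several kinds of memory objects. As the mm_struct structure above shows, the main ones are:

1. The memory mapping of the executable file's code, called the code segment

2. The memory mapping of the executable file's initialized global variables, called the data segment

3. The zero-page memory mapping that holds uninitialized global variables, that is, the bss segment

4. The zero-page memory mapping used for the process's user-space stack. Do not confuse this with the process's kernel stack: the kernel stack exists separately and is maintained by the kernel. The kernel manages every process, and the kernel stack described earlier holds the information used to manage its process, so the kernel manages the kernel stacks and reaches each process through its kernel stack.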
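The boundary fields in mm_struct (`start_code`, `end_code`, `start_data`, `end_data`, `start_brk`, `brk`) are enough to tell which of these regions an address falls in, at least for code, data, and heap. The sketch below shows that comparison in user space. `struct region_mm`, `enum region_kind`, and `region_of` are hypothetical names, and half-open ranges `[start, end)` are an assumption. The bss and stack regions are not classified because their exact bounds cannot be derived from these fields alone.

```c
/* Hypothetical subset of the mm_struct boundary fields. */
struct region_mm {
    unsigned long start_code, end_code; /* code segment */
    unsigned long start_data, end_data; /* initialized data segment */
    unsigned long start_brk, brk;       /* heap */
};

enum region_kind {
    REGION_CODE,
    REGION_DATA,
    REGION_HEAP,
    REGION_OTHER /* bss, stack, mmap area, or unmapped */
};

/* Classify addr by comparing it against each half-open [start, end) range. */
enum region_kind region_of(const struct region_mm *mm, unsigned long addr)
{
    if (addr >= mm->start_code && addr < mm->end_code)
        return REGION_CODE;
    if (addr >= mm->start_data && addr < mm->end_data)
        return REGION_DATA;
    if (addr >= mm->start_brk && addr < mm->brk)
        return REGION_HEAP;
    return REGION_OTHER;
}
```

A real lookup would consult the VMA list (`mmap`, `mm_rb`) instead, since these fields only record a few well-known boundaries.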

Original source: http://www.cnblogs.com/qiuheng/p/5745767.html