Tags: iSCSI service configuration + LVM logical volumes, Apache + iSCSI services deployed on a high-availability (HA) cluster

Host environment: Red Hat 6.5, 64-bit

Lab environment: server 1, IP 172.25.29.1, hostname server1.example.com: ricci, iscsi, apache

Server 2, IP 172.25.29.2, hostname server2.example.com: ricci, iscsi, apache

Management node 1, IP 172.25.29.3, hostname server3.example.com: luci, scsi

Management node 2, IP 172.25.29.250: fence_virtd

Firewall: disabled

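The previous blog post already covered setting up the high-availability cluster, so I won't repeat it here. This time I deploy Apache and iSCSI services on it as an example to test the cluster.

Before deploying the services, make sure httpd is installed (on server 1 and server 2).

1. Install and start the SCSI target (management node 1)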

[root@server3 ~]# yum install -y scsi-target-utils # install the SCSI target (tgtd)

[root@server3 ~]# vim /etc/tgt/targets.conf # edit the configuration file

<target iqn.2008-09.com.example:server.target1>

backing-store /dev/sda # the shared disk

initiator-address 172.25.29.1 # allowed initiator addresses

initiator-address 172.25.29.2

</target>
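If you prefer not to edit the file by hand, the stanza can be generated. This is a minimal sketch, not part of the original setup: `make_target_conf` is a hypothetical helper that prints a `<target>` block with one `initiator-address` line per allowed initiator.

```shell
# make_target_conf IQN BACKING_STORE INITIATOR_IP...
# Hypothetical helper: print a <target> stanza for /etc/tgt/targets.conf.
make_target_conf() {
    iqn=$1
    store=$2
    shift 2
    printf '<target %s>\n' "$iqn"
    printf '    backing-store %s\n' "$store"
    # targets.conf uses "initiator-address" (hyphen); one line per allowed client.
    for ip in "$@"; do
        printf '    initiator-address %s\n' "$ip"
    done
    printf '</target>\n'
}
```

For the target in this post: `make_target_conf iqn.2008-09.com.example:server.target1 /dev/sda 172.25.29.1 172.25.29.2`. Review the output before adding it to /etc/tgt/targets.conf and restarting tgtd.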

[root@server3 ~]# /etc/init.d/tgtd start #开启tgtd

Starting SCSI target daemon: [ OK ]

[root@server3 ~]# tgt-admin -s # show the target

Target 1: iqn.2008-09.com.example:server.target1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 4295 MB, Block size: 512

Online: Yes

Removable media: No

Prevent removal: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/sda # the shared disk

Backing store flags:

Account information:

ACL information:

172.25.29.1 # allowed initiator IPs

172.25.29.2
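The ACL section is what restricts the target to the two cluster nodes. To check it from a script rather than by eye, the output can be filtered with awk. A sketch, assuming the indented layout `tgt-admin -s` prints (addresses listed under `ACL information:` until the next `Target N:` line); `list_acl` is a hypothetical name.

```shell
# list_acl: read `tgt-admin -s` output on stdin, print one allowed initiator per line.
list_acl() {
    awk '
        /ACL information:/ { in_acl = 1; next }   # start of the ACL section
        /^Target [0-9]+:/  { in_acl = 0 }         # a new target ends it
        in_acl && NF > 0   { print $1 }           # first field is the address
    '
}
```

Usage on server3: `tgt-admin -s | list_acl` should print 172.25.29.1 and 172.25.29.2.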

2. Install and start the iSCSI initiator and turn the shared disk into logical volumes (server 1)

[root@server1 ~]# yum install -y iscsi-initiator-utils # install the iSCSI initiator

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.29.3 # discover targets on server3

Starting iscsid: [ OK ]

172.25.29.3:3260,1 iqn.2008-09.com.example:server.target1

[root@server1 ~]# iscsiadm -m node -l # log in to the discovered target

Logging in to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.29.3,3260] (multiple)

Login to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.29.3,3260] successful.
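The two initiator commands can be wrapped so they are easy to repeat on the second node. A sketch under these assumptions: `run` and `iscsi_attach` are hypothetical helpers, and `DRY_RUN=1` only prints the commands. Passing `-T` and `-p` limits the login to this one target, whereas the plain `iscsiadm -m node -l` above logs in to all discovered node records.

```shell
# run CMD...: echo the command, then execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = 1 ] || "$@"
}

# iscsi_attach PORTAL IQN: discover targets on PORTAL, then log in to IQN only.
iscsi_attach() {
    run iscsiadm -m discovery -t st -p "$1" || return 1
    run iscsiadm -m node -T "$2" -p "$1" -l
}
```

Usage on server1 and server2: `iscsi_attach 172.25.29.3 iqn.2008-09.com.example:server.target1`.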

[root@server1 ~]# pvcreate /dev/sda # create a physical volume on the iSCSI disk

Physical volume "/dev/sda" successfully created

[root@server1 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda lvm2 a-- 4.00g 4.00g

/dev/vda2 VolGroup lvm2 a-- 8.51g 0

/dev/vdb1 VolGroup lvm2 a-- 8.00g 0

[root@server1 ~]# vgcreate clustervg /dev/sda # create the volume group

Clustered volume group "clustervg" successfully created
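The volume group is reported as *Clustered* because cluster-wide LVM locking through clvmd is enabled on the nodes: `locking_type = 3` in /etc/lvm/lvm.conf (presumably set with `lvmconf --enable-cluster` during the cluster setup in the earlier post) and clvmd running. If vgcreate creates a plain volume group instead, that setting is the first thing to check. A minimal sketch; `lvm_locking_type` is a hypothetical helper that prints the last uncommented `locking_type` value in an lvm.conf-style file.

```shell
# lvm_locking_type FILE: print the last uncommented locking_type value in FILE.
lvm_locking_type() {
    # The ^ anchor skips lines where "#" comes before locking_type.
    sed -n 's/^[[:space:]]*locking_type[[:space:]]*=[[:space:]]*\([0-9][0-9]*\).*/\1/p' "$1" |
        tail -n 1
}
```

Usage: `[ "$(lvm_locking_type /etc/lvm/lvm.conf)" = 3 ] || echo "clustered locking is not enabled"`.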

[root@server1 ~]# lvcreate -l 1023 -n demo clustervg # create the logical volume (1023 extents)

Logical volume "demo" created

[root@server1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 15.61g

lv_swap VolGroup -wi-ao---- 920.00m

demo clustervg -wi-a----- 4.00g
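Where the 1023 comes from: the PV holds roughly 4096 MiB (the 4295 MB LUN), LVM's default extent size is 4 MiB, and the start of the PV is reserved for metadata, assumed here to be 1 MiB (`pvs -o +pe_start` shows the actual value). That leaves floor(4095 / 4) = 1023 whole extents. A small arithmetic sketch with hypothetical helper names:

```shell
# usable_extents DISK_MIB PE_MIB RESERVED_MIB: whole extents left after metadata.
usable_extents() {
    echo $(( ($1 - $3) / $2 ))
}

# fs_blocks EXTENTS PE_MIB BLOCK_KIB: file system blocks on an LV of EXTENTS extents.
fs_blocks() {
    echo $(( $1 * $2 * 1024 / $3 ))
}
```

`fs_blocks 1023 4 4` gives 1047552, the block count mkfs.ext4 reports for this LV. Instead of hard-coding the count, `vgdisplay clustervg` shows it as Free PE, and `lvcreate -l 100%FREE -n demo clustervg` uses all of it.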

[root@server1 ~]# mkfs.ext4 /dev/clustervg/demo # format as ext4

mke2fs 1.41.12 (17-May-2010)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

262144 inodes, 1047552 blocks

52377 blocks (5.00%) reserved for the superuser

First data block=0

Maximum filesystem blocks=1073741824

32 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736

Writing inode tables: done

Creating journal (16384 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 32 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[root@server1 /]# mount /dev/clustervg/demo /mnt/ # mount the LV on /mnt

[root@server1 /]# cd /mnt

[root@server1 mnt]# vim index.html # write a simple test page containing:

server1

[root@server1 mnt]# cd /

[root@server1 /]# umount /mnt/ # unmount
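ext4 is not a cluster file system, so it must never be mounted on two nodes at the same time; that is why the volume is unmounted again here, leaving the cluster to mount it on whichever node runs the service. The same steps as a sketch (hypothetical helper names; `DRY_RUN=1` only prints what would be done):

```shell
# run CMD...: echo the command, then execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = 1 ] || "$@"
}

# seed_test_page DEVICE MOUNTPOINT TEXT: mount, write index.html, unmount again.
seed_test_page() {
    dev=$1
    mnt=$2
    text=$3
    run mount "$dev" "$mnt" || return 1
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '+ write "%s" to %s/index.html\n' "$text" "$mnt"
    else
        printf '%s\n' "$text" > "$mnt/index.html"
    fi
    # Unmount even if the write failed, so no node keeps ext4 mounted.
    run umount "$mnt"
}
```

Usage on server1: `seed_test_page /dev/clustervg/demo /mnt server1`.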

On server 2:

[root@server2 ~]# yum install -y iscsi-initiator-utils # install the iSCSI initiator

[root@server2 ~]# iscsiadm -m discovery -t st -p 172.25.29.3 # discover targets on server3

Starting iscsid: [ OK ]

172.25.29.3:3260,1 iqn.2008-09.com.example:server.target1

[root@server2 ~]# iscsiadm -m node -l # log in to the discovered target

Logging in to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.29.3,3260] (multiple)

Login to [iface: default, target: iqn.2008-09.com.example:server.target1, portal: 172.25.29.3,3260] successful.

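Server 2 needs no further changes: the LVM volumes created on server 1 are synchronized to server 2 automatically. You can check this, for example:

[root@server2 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 7.61g

lv_swap VolGroup -wi-ao---- 920.00m

demo clustervg -wi-a----- 4.00g

pvs, vgs, and similar commands can be used to check as well.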
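To make this check scriptable on server2, ask lvs for just the VG and LV names and look for the pair. A sketch; `has_lv` is a hypothetical helper reading `lvs --noheadings -o vg_name,lv_name` output on stdin.

```shell
# has_lv VG LV: succeed if a "VG LV" pair appears on stdin.
has_lv() {
    awk -v vg="$1" -v lv="$2" '$1 == vg && $2 == lv { found = 1 } END { exit !found }'
}
```

Usage: `lvs --noheadings -o vg_name,lv_name | has_lv clustervg demo && echo "clustervg/demo is visible"`.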

[root@server3 ~]# /etc/init.d/luci start # start luci

Starting saslauthd: [ OK ]

Start luci... [ OK ]

Point your web browser to https://server3.example.com:8084 (or equivalent) to access luci

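3. Add a service (active/standby failover) to the cluster, using Apache and iSCSI as the example

1. Add the service. Two-node hot standby is used here.

Log in to https://server3.example.com:8084

Select Failover Domains and fill in Name as shown in the figure. The three checked options mean, in order: when a node fails, the service can move to another node; the service runs only on the member nodes you select; and after the service has moved to another node, it does not move back when the original node recovers. Checking Member for server1.example.com and server2.example.com means the service runs on those two nodes; the smaller the Priority value, the higher the priority. Click Create.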
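The same failover domain can also be created from the command line with `ccs`, which talks to ricci. A sketch under stated assumptions: the domain name `webfail` and the priorities 1 and 2 are hypothetical (the real values are in the screenshot), and the options follow the RHEL 6 `ccs` syntax, where `ordered`, `restricted` and `nofailback` correspond to luci's Prioritized, Restricted and No Failback. `DRY_RUN=1` only prints the commands.

```shell
# run CMD...: echo the command, then execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = 1 ] || "$@"
}

# make_failover_domain HOST DOMAIN: hypothetical helper mirroring the luci form.
make_failover_domain() {
    host=$1
    domain=$2
    run ccs -h "$host" --addfailoverdomain "$domain" restricted ordered nofailback || return 1
    # Lower priority value = preferred node.
    run ccs -h "$host" --addfailoverdomainnode "$domain" server1.example.com 1 || return 1
    run ccs -h "$host" --addfailoverdomainnode "$domain" server2.example.com 2
}
```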

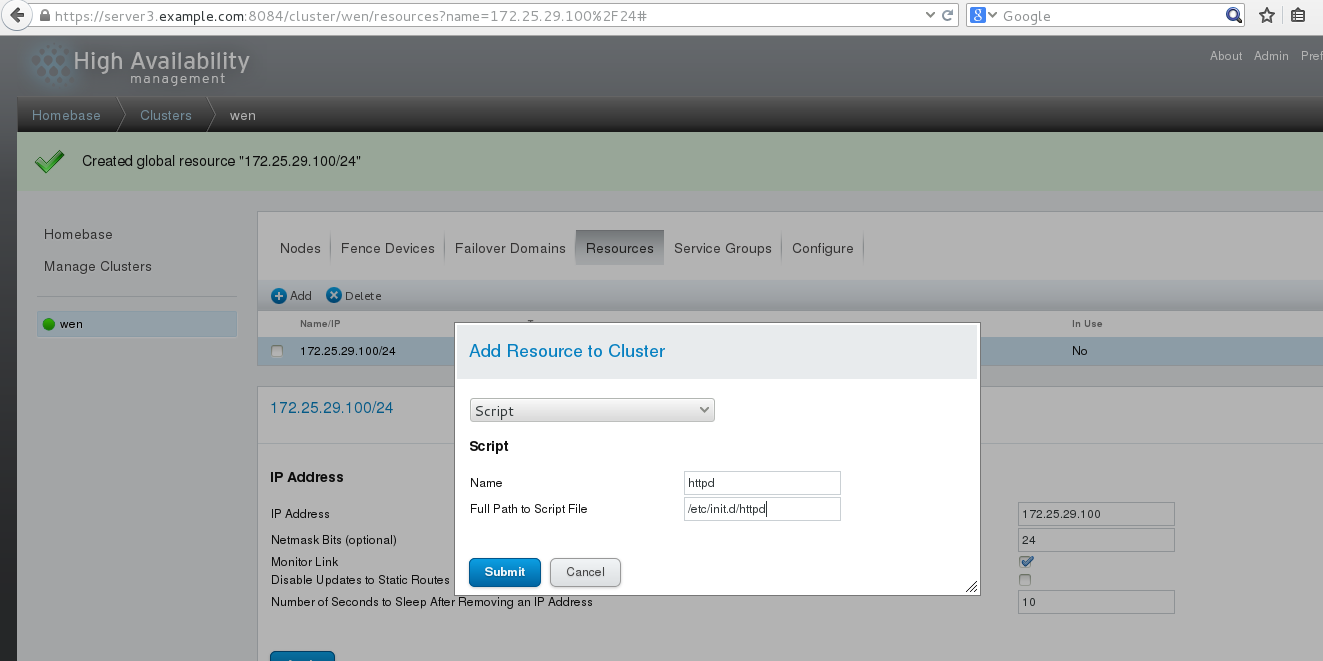

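Select Resources, click Add, and add an IP Address resource as shown in the figure. The address must be one that is not already in use; 24 is the netmask length in bits, and 10 is the wait time in seconds. Click Submit.

Add a Script resource the same way: httpd is the resource name and /etc/init.d/httpd is the path to the service's init script. Click Submit.

Add a resource of type Filesystem (named webdata here) as shown in the figure.

Select Service Groups and click Add as shown in the figure. apache is the service name, and the two checked boxes below mean automatically start the service and run it exclusively. Click Add Resource.

Select 172.25.29.100/24, then click Add Resource as shown in the figure.

Select webdata first, click Add Resource again and select httpd, then click Submit. Done.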
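For reference, the resources and service group built in luci above roughly correspond to the following `ccs` calls. This is a sketch, not the author's procedure: the failover domain name is hypothetical, the Filesystem resource's device, mount point and type (`/dev/clustervg/demo`, `/var/www/html`, ext4) are assumptions taken from the rest of this post, `exclusive=1` assumes the second checkbox is Run Exclusive, and the 10-second IP wait from the form is left out.

```shell
# run CMD...: echo the command, then execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-0}" = 1 ] || "$@"
}

# make_apache_service HOST DOMAIN: hypothetical helper; resources, then the service.
make_apache_service() {
    host=$1
    domain=$2
    # Global resources (luci: Resources > Add).
    run ccs -h "$host" --addresource ip address=172.25.29.100/24 || return 1
    run ccs -h "$host" --addresource fs name=webdata device=/dev/clustervg/demo \
        mountpoint=/var/www/html fstype=ext4 || return 1
    run ccs -h "$host" --addresource script name=httpd file=/etc/init.d/httpd || return 1
    # Service group (luci: Service Groups > Add) referencing those resources.
    run ccs -h "$host" --addservice apache domain="$domain" autostart=1 exclusive=1 || return 1
    run ccs -h "$host" --addsubservice apache ip ref=172.25.29.100/24 || return 1
    run ccs -h "$host" --addsubservice apache fs ref=webdata || return 1
    run ccs -h "$host" --addsubservice apache script ref=httpd || return 1
    # Copy cluster.conf to every node and activate the new version.
    run ccs -h "$host" --sync --activate
}
```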

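2. Testing

Before testing, httpd must be installed on server1 and server2. Note: do not start the httpd service yourself; the cluster starts it automatically when the service comes up (if it is already running beforehand, the cluster service reports an error).

Test 172.25.29.100 (the VIP)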

[root@server1 ~]# ip addr show # check the addresses

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:94:2f:4f brd ff:ff:ff:ff:ff:ff

inet 172.25.29.1/24 brd 172.25.29.255 scope global eth0

inet 172.25.29.100/24 scope global secondary eth0 # 172.25.29.100 was added automatically

inet6 fe80::5054:ff:fe94:2f4f/64 scope link

valid_lft forever preferred_lft forever

[root@server1 ~]# clustat # check the services

Cluster Status for wen @ Tue Sep 27 18:12:38 2016

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1.example.com 1 Online, Local, rgmanager

server2.example.com 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1.example.com started # running on server1

[root@server1 ~]# /etc/init.d/network stop # once the network is down, fencing power-cycles server1 and the service moves to server2

Test

[root@server2 ~]# ip addr show # check the addresses

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:23:81:98 brd ff:ff:ff:ff:ff:ff

inet 172.25.29.2/24 brd 172.25.29.255 scope global eth0

inet 172.25.29.100/24 scope global secondary eth0 # added automatically

inet6 fe80::5054:ff:fe23:8198/64 scope link

valid_lft forever preferred_lft forever
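Instead of comparing `ip addr` output by hand, the owner of the service can be read from `clustat` before and after the failover. A sketch; `service_owner` is a hypothetical helper that expects the `service:NAME OWNER STATE` rows shown above.

```shell
# service_owner NAME: print the Owner column of service:NAME from clustat output on stdin.
service_owner() {
    awk -v svc="service:$1" '$1 == svc { print $2 }'
}
```

Usage: `clustat | service_owner apache` prints server1.example.com before the network is stopped and server2.example.com afterwards.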

Appendix:

Reformat the iSCSI volume as GFS2, then extend it with LVM:

[root@server1 ~]# clustat # check the services

Cluster Status for wen @ Tue Sep 27 18:22:20 2016

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1.example.com 1 Online, Local, rgmanager

server2.example.com 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ ------

service:apache server2.example.com started # now running on server2

[root@server1 /]# clusvcadm -d apache # disable the apache service

Local machine disabling service:apache...Success

[root@server1 /]# lvremove /dev/clustervg/demo # remove the logical volume

Do you really want to remove active clustered logical volume demo? [y/n]: y

Logical volume "demo" successfully removed

[root@server1 /]# lvcreate -L 2g -n demo clustervg # recreate it with a size of 2 GiB

Logical volume "demo" created

[root@server1 /]# mkfs.gfs2 -p lock_dlm -t wen:mygfs2 -j 3 /dev/clustervg/demo # format as GFS2

This will destroy any data on /dev/clustervg/demo.

It appears to contain: symbolic link to `../dm-2'

Are you sure you want to proceed? [y/n] y

Device: /dev/clustervg/demo

Blocksize: 4096

Device Size 2.00 GB (524288 blocks)

Filesystem Size: 2.00 GB (524288 blocks)

Journals: 3

Resource Groups: 8

Locking Protocol: "lock_dlm"

Lock Table: "wen:mygfs2"

UUID: 10486879-ea8c-3244-a2cd-00297f342973
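Two `mkfs.gfs2` options matter here. `-t wen:mygfs2` is the lock table `clustername:fsname`, and the cluster part must match the cluster name in /etc/cluster/cluster.conf (`wen`, as clustat shows), otherwise the nodes cannot mount it. `-j 3` creates three journals: every node that mounts GFS2 at the same time needs its own, so this is two nodes plus one spare (more can be added later with `gfs2_jadd`). A sketch of the name check, with hypothetical helper names and a simple sed match on the `<cluster name="...">` element:

```shell
# cluster_name FILE: print the name attribute of the <cluster> element in cluster.conf.
cluster_name() {
    # "<cluster" must be followed by whitespace, so <clusternode ...> does not match.
    sed -n 's/.*<cluster[[:space:]][^>]*name="\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_lock_table TABLE CONF: succeed if TABLE's cluster part matches CONF's cluster name.
check_lock_table() {
    table=$1
    conf=$2
    [ "${table%%:*}" = "$(cluster_name "$conf")" ]
}
```

Usage: `check_lock_table wen:mygfs2 /etc/cluster/cluster.conf && echo "lock table matches"`.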

[root@server1 /]# mount /dev/clustervg/demo /mnt/ # mount the device on /mnt

[root@server1 /]# cd /mnt

[root@server1 mnt]# vim index.html # write a simple test page

[root@server2 /]# mount /dev/clustervg/demo /mnt/ # mount the device on /mnt (server 2)

[root@server2 /]# cd /mnt/

[root@server2 mnt]# ls

index.html

[root@server2 mnt]# cat index.html

www.server.example.com

[root@server2 mnt]# vim index.html # edit the test page

[root@server2 mnt]# cat index.html

www.server2.example.com

[root@server1 mnt]# cat index.html # check on server 1: the change is visible immediately

www.server2.example.com

[root@server1 mnt]# cd ..

[root@server1 /]# umount /mnt/

[root@server1 /]# vim /etc/fstab # mount automatically at boot

UUID="10486879-ea8c-3244-a2cd-00297f342973" /var/www/html gfs2 _netdev 0 0

[root@server1 /]# mount -a # mount everything listed in /etc/fstab

[root@server1 /]# df # check

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 16106940 10258892 5031528 68% /

tmpfs 961188 31816 929372 4% /dev/shm

/dev/vda1 495844 33457 436787 8% /boot

/dev/mapper/clustervg-demo 2096912 397152 1699760 19% /var/www/html # now mounted
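The `_netdev` option marks the mount as a network device, so it is not attempted until networking is up, which this iSCSI-backed volume needs. To add the entry without typing the UUID by hand and without duplicating it on a second run, a sketch (hypothetical helper; the UUID comes from `blkid`):

```shell
# add_fstab_entry FSTAB UUID MOUNTPOINT: append a GFS2 line unless the UUID is already listed.
add_fstab_entry() {
    fstab=$1
    uuid=$2
    mnt=$3
    if grep -Fq "UUID=\"$uuid\"" "$fstab"; then
        echo "already present: $uuid"
        return 0
    fi
    printf 'UUID="%s" %s gfs2 _netdev 0 0\n' "$uuid" "$mnt" >> "$fstab"
}
```

Usage on each node: `add_fstab_entry /etc/fstab "$(blkid -s UUID -o value /dev/clustervg/demo)" /var/www/html`.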

[root@server1 /]# clusvcadm -e apache # enable apache

Local machine trying to enable service:apache...Service is already running

Before testing, remove the Filesystem resource added earlier under Service Groups, then run the test (if it is not removed, the cluster reports an error).

Test

[root@server1 /]# lvextend -l +511 /dev/clustervg/demo # grow the logical volume (block-device level)

Extending logical volume demo to 4.00 GiB

Logical volume demo successfully resized

[root@server1 /]# gfs2_grow /dev/clustervg/demo # grow the GFS2 file system to fill the LV

FS: Mount Point: /var/www/html

FS: Device: /dev/dm-2

FS: Size: 524288 (0x80000)

FS: RG size: 65533 (0xfffd)

DEV: Size: 1047552 (0xffc00)

The file system grew by 2044MB.

gfs2_grow complete.

[root@server1 /]# df -lh # check the size

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root 16G 9.8G 4.8G 68% /

tmpfs 939M 32M 908M 4% /dev/shm

/dev/vda1 485M 33M 427M 8% /boot

/dev/mapper/clustervg-demo 3.8G 388M 3.4G 11% /var/www/html # now 3.8G
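The numbers line up: the 2 GiB LV had 512 extents of 4 MiB, and `-l +511` brings it to 1023, all the free extents in clustervg (`lvextend -l +100%FREE` would do the same without counting). `gfs2_grow` has to run on a node where the file system is mounted, here /var/www/html, and only needs to be run on one node. A quick check of the reported growth, with hypothetical helper names:

```shell
# grown_extents OLD_EXTENTS ADDED: extent count after lvextend -l +ADDED.
grown_extents() {
    echo $(( $1 + $2 ))
}

# gfs2_growth_mib OLD_BLOCKS NEW_BLOCKS BLOCK_BYTES: growth in MiB.
gfs2_growth_mib() {
    echo $(( ($2 - $1) * $3 / 1048576 ))
}
```

`grown_extents 512 511` gives 1023, and `gfs2_growth_mib 524288 1047552 4096` gives 2044, matching "The file system grew by 2044MB" in the gfs2_grow output.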

This article is from the blog “不忘初心,方得始终”; please keep this attribution: http://12087746.blog.51cto.com/12077746/1860365
