
Hadoop version: hadoop1.2.1

Eclipse version: eclipse-standard-kepler-SR2-win32-x86_64

WordCount.java is taken from hadoop-1.2.1\src\examples\org\apache\hadoop\examples\WordCount.java

```java
/**
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 *     http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */


package org.apache.hadoop.examples;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

  public static class TokenizerMapper
       extends Mapper<Object, Text, Text, IntWritable>{

    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    public void map(Object key, Text value, Context context
                    ) throws IOException, InterruptedException {
      StringTokenizer itr = new StringTokenizer(value.toString());
      while (itr.hasMoreTokens()) {
        word.set(itr.nextToken());
        context.write(word, one);
      }
    }
  }

  public static class IntSumReducer
       extends Reducer<Text,IntWritable,Text,IntWritable> {
    private IntWritable result = new IntWritable();

    public void reduce(Text key, Iterable<IntWritable> values,
                       Context context
                       ) throws IOException, InterruptedException {
      int sum = 0;
      for (IntWritable val : values) {
        sum += val.get();
      }
      result.set(sum);
      context.write(key, result);
    }
  }

  public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
    if (otherArgs.length != 2) {
      System.err.println("Usage: wordcount <in> <out>");
      System.exit(2);
    }
    Job job = new Job(conf, "word count");
    job.setJarByClass(WordCount.class);
    job.setMapperClass(TokenizerMapper.class);
    job.setCombinerClass(IntSumReducer.class);
    job.setReducerClass(IntSumReducer.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(IntWritable.class);
    FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
    FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
    System.exit(job.waitForCompletion(true) ? 0 : 1);
  }
}
```
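To make the data flow of the listing above easier to follow, here is a minimal sketch in plain Java, with no Hadoop dependency, of what TokenizerMapper and IntSumReducer compute together: each line is split with StringTokenizer, every token contributes a count of 1, and the counts are summed per word. The names LocalWordCount and countWords are hypothetical and exist only for this illustration; the sketch says nothing about how Hadoop actually splits, shuffles, or schedules the work.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical stand-alone illustration; not part of the Hadoop examples.
class LocalWordCount {

  // Mimics map + reduce in one pass: tokenize each line, add 1 per token.
  static Map<String, Integer> countWords(Iterable<String> lines) {
    Map<String, Integer> counts = new TreeMap<>();
    for (String line : lines) {
      StringTokenizer itr = new StringTokenizer(line);
      while (itr.hasMoreTokens()) {
        // Same effect as emitting (word, 1) and summing in the reducer.
        counts.merge(itr.nextToken(), 1, Integer::sum);
      }
    }
    return counts;
  }

  public static void main(String[] args) {
    Map<String, Integer> counts =
        countWords(Arrays.asList("hello hadoop", "hello eclipse"));
    for (Map.Entry<String, Integer> e : counts.entrySet()) {
      System.out.println(e.getKey() + "\t" + e.getValue());
    }
  }
}
```

Running it needs only a JDK, so it can be a quick sanity check of the counting logic before the Hadoop classes are wired into the project.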

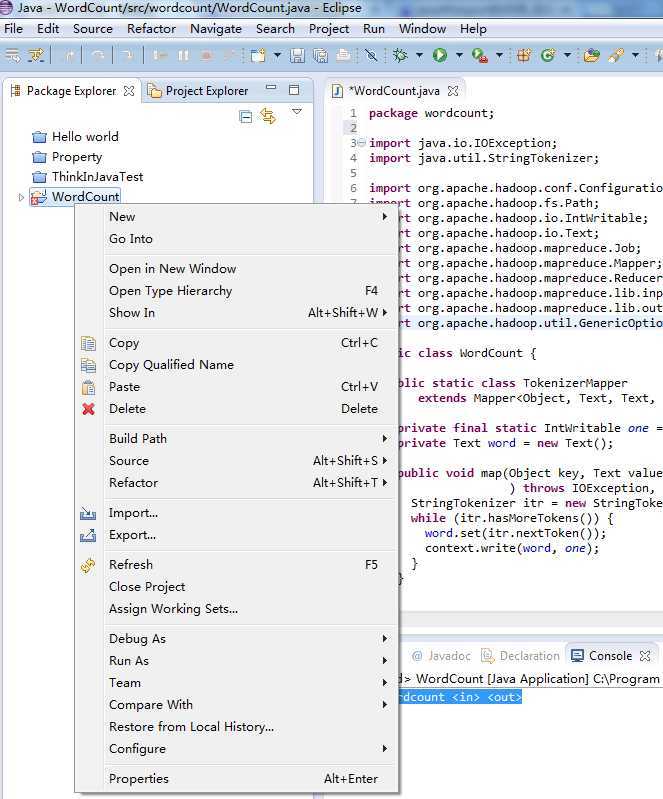

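In Eclipse, create a new Java project named WordCount.

In the project, create a new class named WordCount.

Then overwrite the WordCount.java that Eclipse generated with the code above.

Finally, change the package declaration at the top of the file to wordcount. The modified source is as follows: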

```java
package wordcount;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

  public static class TokenizerMapper
       extends Mapper<Object, Text, Text, IntWritable>{

    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    public void map(Object key, Text value, Context context
                    ) throws IOException, InterruptedException {
      StringTokenizer itr = new StringTokenizer(value.toString());
      while (itr.hasMoreTokens()) {
        word.set(itr.nextToken());
        context.write(word, one);
      }
    }
  }

  public static class IntSumReducer
       extends Reducer<Text,IntWritable,Text,IntWritable> {
    private IntWritable result = new IntWritable();

    public void reduce(Text key, Iterable<IntWritable> values,
                       Context context
                       ) throws IOException, InterruptedException {
      int sum = 0;
      for (IntWritable val : values) {
        sum += val.get();
      }
      result.set(sum);
      context.write(key, result);
    }
  }

  public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
    if (otherArgs.length != 2) {
      System.err.println("Usage: wordcount <in> <out>");
      System.exit(2);
    }
    Job job = new Job(conf, "word count");
    job.setJarByClass(WordCount.class);
    job.setMapperClass(TokenizerMapper.class);
    job.setCombinerClass(IntSumReducer.class);
    job.setReducerClass(IntSumReducer.class);
    job.setOutputKeyClass(Text.class);
    job.setOutputValueClass(IntWritable.class);
    FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
    FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
    System.exit(job.waitForCompletion(true) ? 0 : 1);

  }
}
```

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;
```

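As you can see, the source imports several Hadoop classes that are not part of the JDK. They have to be made available to Eclipse, because the compiler can only resolve classes whose location it has been told about explicitly.

Method 1: locate these classes and copy them directly into the src folder of the current WordCount project.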
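A quick way to see which of these classes are already visible is to ask the class loader directly. The sketch below is only an illustration: ClasspathCheck and isOnClasspath are hypothetical names, and the check reflects the classpath of the JVM it runs in, which may differ from the Eclipse build path if the two are configured differently.

```java
// Hypothetical helper for illustration; not part of Hadoop or Eclipse.
class ClasspathCheck {

  // Returns true if the named class can be located on the current classpath.
  static boolean isOnClasspath(String className) {
    try {
      // initialize=false: only locate the class, do not run static initializers.
      Class.forName(className, false, ClasspathCheck.class.getClassLoader());
      return true;
    } catch (ClassNotFoundException | LinkageError e) {
      return false;
    }
  }

  public static void main(String[] args) {
    String[] names = {
      "java.util.StringTokenizer",            // ships with the JDK
      "org.apache.hadoop.conf.Configuration", // needs the Hadoop sources or JARs
      "org.apache.hadoop.mapreduce.Job"
    };
    for (String name : names) {
      System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
    }
  }
}
```

In a fresh project with nothing added yet, the JDK class should be found while the two Hadoop classes are reported as missing, which is exactly the gap the two methods below close.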

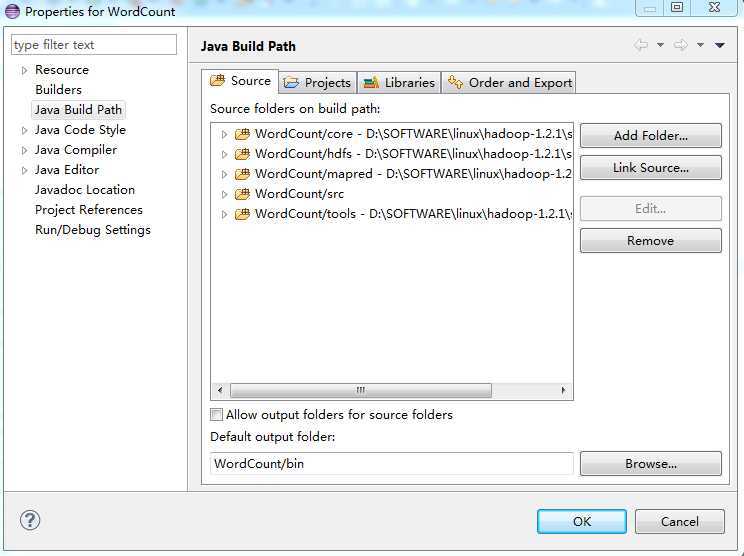

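Method 2: add the directories that contain these classes as source folders. To do so, right-click WordCount and choose Properties.

Then, on the Source tab, use Link Source to add the four directories mapred, hdfs, core, and tools, because the Hadoop source files live in these four directories.

With the imported classes now available, four new directories appear under WordCount.

Compile and run at this point, and the following error appears: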
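To see why these source roots matter, it helps to recall that a class named a.b.C is expected at a/b/C.java relative to some source root. The following sketch looks up which root contains a given class. SourceRootLookup, toRelativePath, and findRoot are hypothetical names, and the assumption that the four directories sit side by side under one src directory passed as the first argument is mine; adjust it to your own layout.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Hypothetical helper for illustration only.
class SourceRootLookup {

  // Converts a fully qualified class name to its relative .java path.
  static String toRelativePath(String className) {
    return className.replace('.', '/') + ".java";
  }

  // Returns the first source root that contains the class's .java file.
  static Optional<Path> findRoot(List<Path> roots, String className) {
    String relative = toRelativePath(className);
    for (Path root : roots) {
      if (Files.isRegularFile(root.resolve(relative))) {
        return Optional.of(root);
      }
    }
    return Optional.empty();
  }

  public static void main(String[] args) {
    // Assumption: args[0] is the Hadoop src directory holding the four roots.
    Path src = Paths.get(args.length > 0 ? args[0] : "src");
    List<Path> roots = Arrays.asList(
        src.resolve("mapred"), src.resolve("hdfs"),
        src.resolve("core"), src.resolve("tools"));
    String className = "org.apache.hadoop.conf.Configuration";
    System.out.println(className + " -> "
        + findRoot(roots, className).map(Path::toString).orElse("not found"));
  }
}
```

Linking a directory as a source folder tells Eclipse to perform essentially this kind of lookup for every import it has to resolve.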

```
Exception in thread "main" java.lang.Error: Unresolved compilation problems:
	The import org.apache.commons cannot be resolved
	The import org.apache.commons cannot be resolved
	The import org.codehaus cannot be resolved
	The import org.codehaus cannot be resolved
	Log cannot be resolved to a type
	LogFactory cannot be resolved
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	Log cannot be resolved to a type
	JsonFactory cannot be resolved to a type
	JsonFactory cannot be resolved to a type
	JsonGenerator cannot be resolved to a type

	at org.apache.hadoop.conf.Configuration.<init>(Configuration.java:60)
	at wordcount.WordCount.main(WordCount.java:52)
```

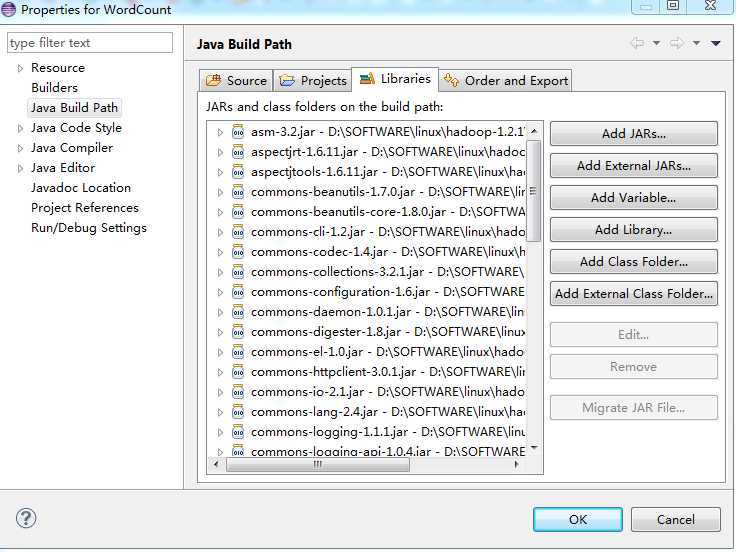

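The cause is that the JAR libraries the Hadoop sources depend on are missing. Adding them to the project's build path resolves the errors.

Use Add External JARs to add all of the JAR files in the hadoop1.2.1\lib directory.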
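Outside Eclipse, the same idea amounts to putting every JAR in that directory on the classpath. As a rough sketch, the helper below lists the JAR files directly under a directory and joins them into a classpath string. LibClasspath, listJars, and toClasspath are hypothetical names, and the directory is assumed to be passed as the first command-line argument.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for illustration only.
class LibClasspath {

  // Lists every *.jar directly under libDir (no recursion), sorted by path.
  static List<String> listJars(Path libDir) throws IOException {
    List<String> jars = new ArrayList<>();
    try (DirectoryStream<Path> stream = Files.newDirectoryStream(libDir, "*.jar")) {
      for (Path jar : stream) {
        jars.add(jar.toString());
      }
    }
    Collections.sort(jars);
    return jars;
  }

  // Joins the JAR paths with the platform path separator (";" on Windows).
  static String toClasspath(List<String> jars) {
    return String.join(File.pathSeparator, jars);
  }

  public static void main(String[] args) throws IOException {
    // Assumption: the Hadoop lib directory is given as the first argument.
    Path libDir = Paths.get(args.length > 0 ? args[0] : "lib");
    System.out.println(toClasspath(listJars(libDir)));
  }
}
```

The printed string could then be handed to javac or java through the -cp option, which is roughly what Eclipse does behind the scenes once the JARs are on the build path.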

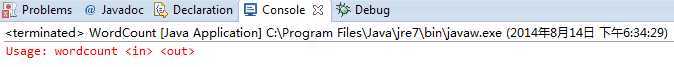

Compile and run once more, and this time it succeeds.

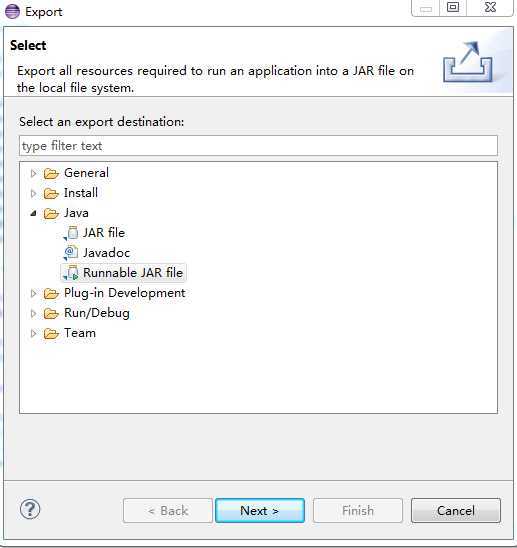

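Finally, export the project as a runnable JAR file via File -> Export.

This article is licensed under the Creative Commons Attribution-NonCommercial 3.0 license. You are welcome to repost or adapt it, but the attribution to 林羽飞扬 must be retained. For inquiries, please send me a message.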
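What makes a JAR "runnable" is, at its core, a Main-Class entry in its manifest. The sketch below builds such a manifest with the standard java.util.jar API, writes an otherwise empty JAR into memory, and reads the entry back. RunnableJarSketch and its methods are hypothetical names; the manifest that the Eclipse export wizard actually generates may contain additional entries, so treat this only as an illustration of the mechanism.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.JarInputStream;
import java.util.jar.JarOutputStream;
import java.util.jar.Manifest;

// Hypothetical illustration; not what the Eclipse wizard runs internally.
class RunnableJarSketch {

  // Builds a manifest that names the entry-point class.
  static Manifest manifestFor(String mainClass) {
    Manifest manifest = new Manifest();
    Attributes attrs = manifest.getMainAttributes();
    // Manifest-Version must be present, otherwise the attributes are not written.
    attrs.put(Attributes.Name.MANIFEST_VERSION, "1.0");
    attrs.put(Attributes.Name.MAIN_CLASS, mainClass);
    return manifest;
  }

  // Writes a JAR containing only the manifest, then reads Main-Class back.
  static String roundTripMainClass(String mainClass) throws IOException {
    ByteArrayOutputStream bytes = new ByteArrayOutputStream();
    try (JarOutputStream jar = new JarOutputStream(bytes, manifestFor(mainClass))) {
      // No class files are added in this sketch.
    }
    try (JarInputStream in =
             new JarInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
      return in.getManifest().getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
    }
  }

  public static void main(String[] args) throws IOException {
    System.out.println("Main-Class: " + roundTripMainClass("wordcount.WordCount"));
  }
}
```

For the project in this article the entry point is wordcount.WordCount, since that class holds the main method.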


[hadoop] Importing the Hadoop source into Eclipse on Windows and compiling WordCount


Original article: http://www.cnblogs.com/zhengyuhong/p/3913225.html