1. Graphical interface: the software I use is MobaXterm Personal Edition.

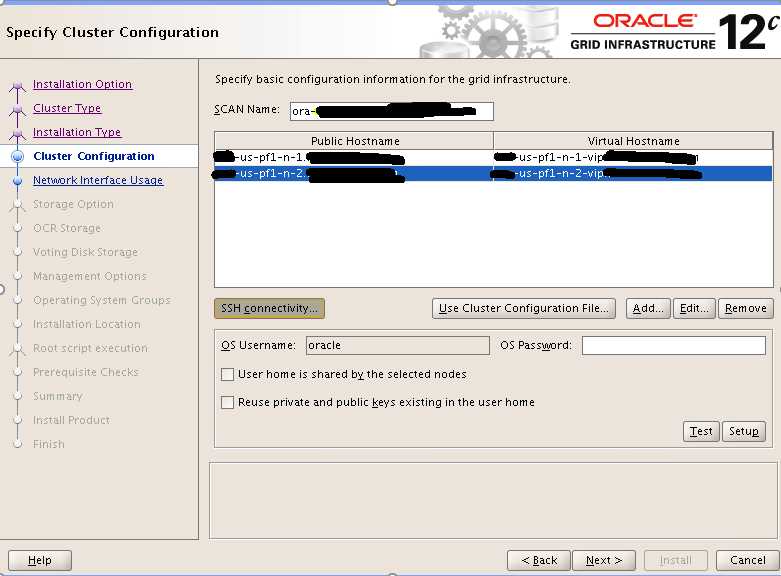

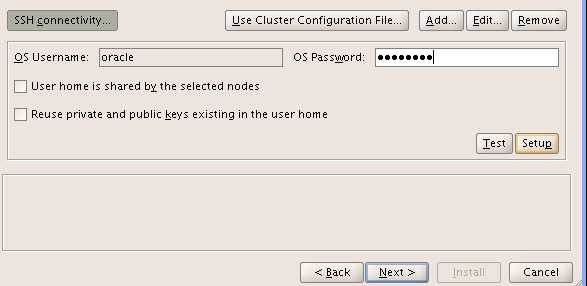

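Simply run ssh oracle@target_server and then ./runInstaller, and the graphical installer pops up. Other tools such as VNC can also provide a graphical session, but I skip them here and go straight to the installation.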
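The graphical installer needs a working X display on the SSH session. The following is a minimal sketch, assuming X11 forwarding over SSH (MobaXterm ships an embedded X server; with a plain OpenSSH client the rough equivalent is `ssh -X`). The helper name `have_display` is hypothetical and only checks that `DISPLAY` is set before `./runInstaller` is launched.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if an X display is configured for this session.
have_display() {
    [ -n "${DISPLAY:-}" ]
}

# Example flow (commented out so the snippet has no side effects when sourced):
#   ssh -X oracle@target_server      # -X requests X11 forwarding
#   have_display && ./runInstaller || echo "DISPLAY is not set; enable X11 forwarding" >&2
```

If `DISPLAY` is empty on the server, the installer cannot open its window, so checking it first gives a clearer error than the installer's own failure.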

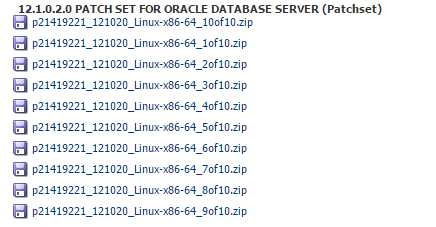

2. Software download: get the installation media from MOS (My Oracle Support).

| Installation Type | Zip File |
|---|---|
| Oracle Database (includes Oracle Database, Oracle RAC, and Deinstall). Note: you must download both zip files to install Oracle Database Enterprise Edition. | |
| Oracle Database SE2 (includes Oracle Database SE2, Oracle RAC, and Deinstall). Note: you must download both zip files to install Oracle Database SE2. | |
| Oracle Grid Infrastructure (includes Oracle ASM, Oracle Clusterware, and Oracle Restart). Note: you must download both zip files to install Oracle Grid Infrastructure. | |
| Oracle Database Client | |
| Oracle Gateways | |
| Oracle Examples | |
| Oracle GSM | |

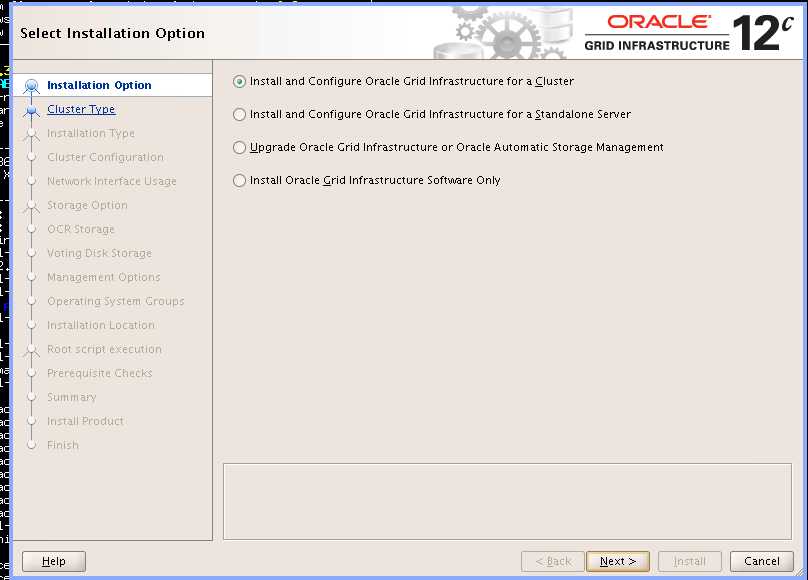

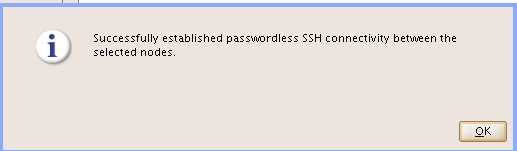

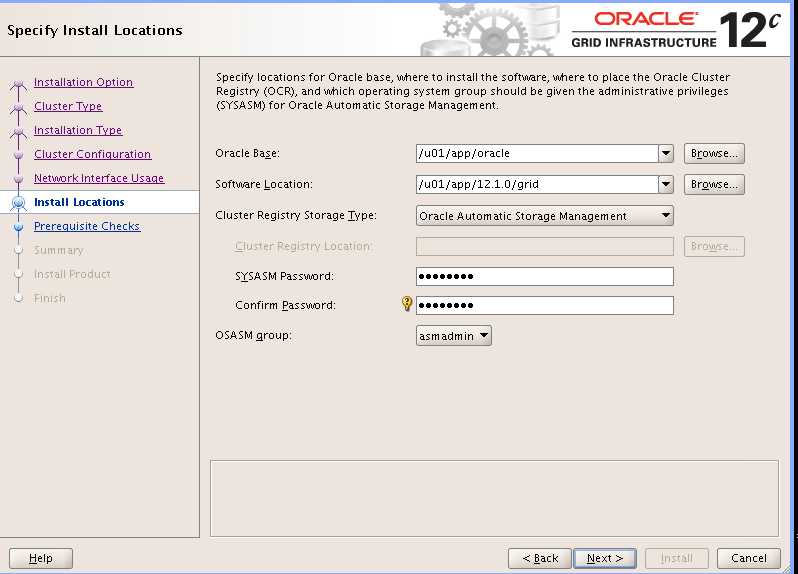

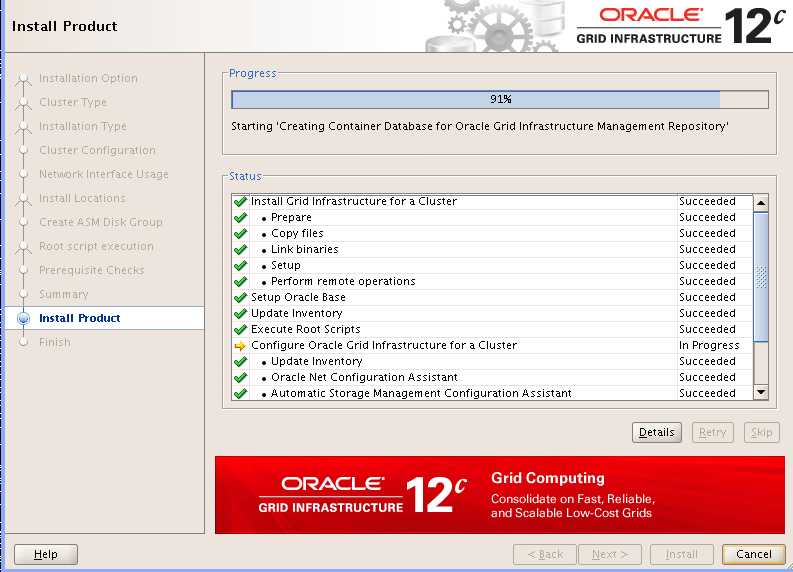

3. Installation

After the earlier tests pass, run the cluvfy pre-installation check for Clusterware (stage -pre crsinst), as shown below:

./runcluvfy.sh stage -pre crsinst -n node1_hostname,node2_hostname

.....(log output)

Pre-check for cluster services setup was successful.
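Because the cluvfy log can be long, it may help to save it and test for the success line instead of reading it by eye. This is a minimal sketch, not part of the original procedure: the helper name `precheck_passed` and the log file name `precheck.log` are hypothetical, and the success string is the one shown in the output above.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if a saved cluvfy log contains the success line.
precheck_passed() {
    grep -Fq "Pre-check for cluster services setup was successful." "$1"
}

# Example flow (commented out; node names are the placeholders used above):
#   ./runcluvfy.sh stage -pre crsinst -n node1_hostname,node2_hostname | tee precheck.log
#   precheck_passed precheck.log && echo "OK to continue" || echo "Fix the failed checks first" >&2
```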

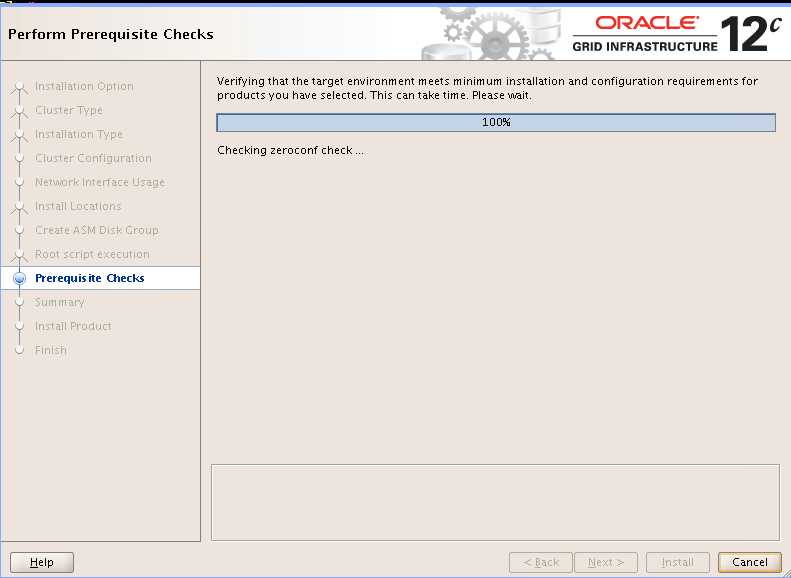

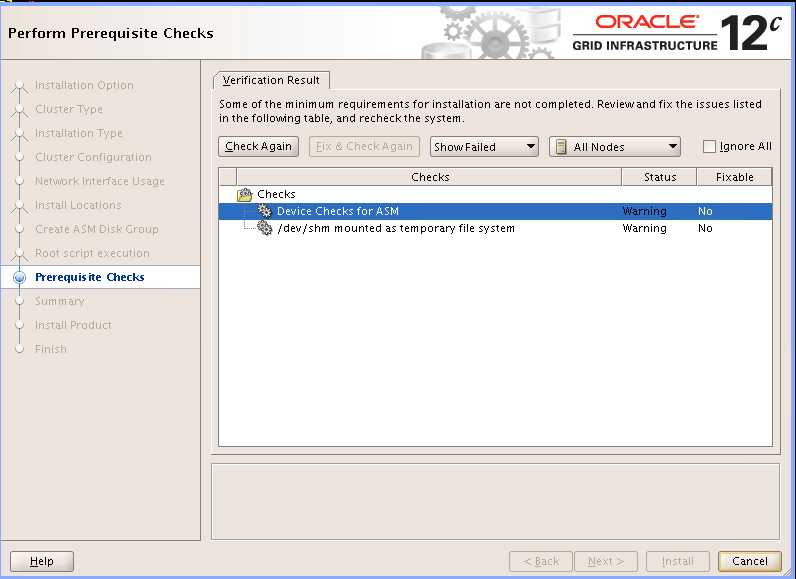

Once runcluvfy has run through successfully, continue with the installation.

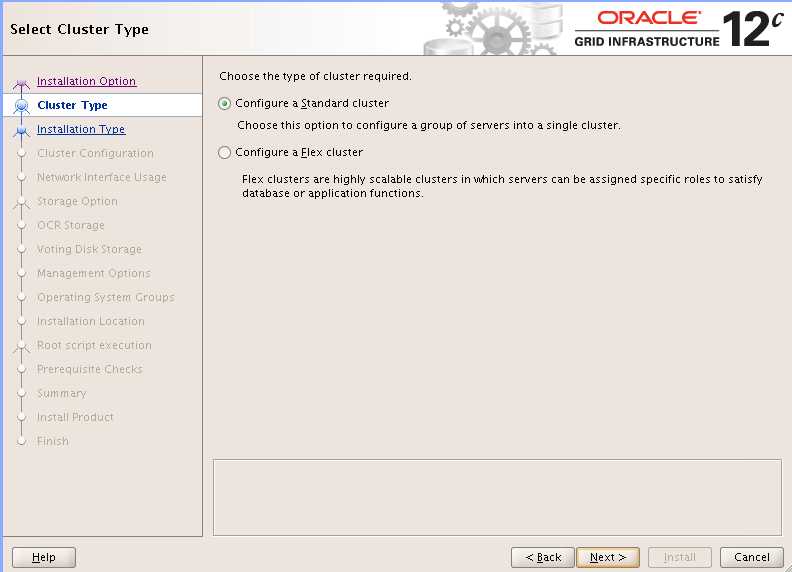

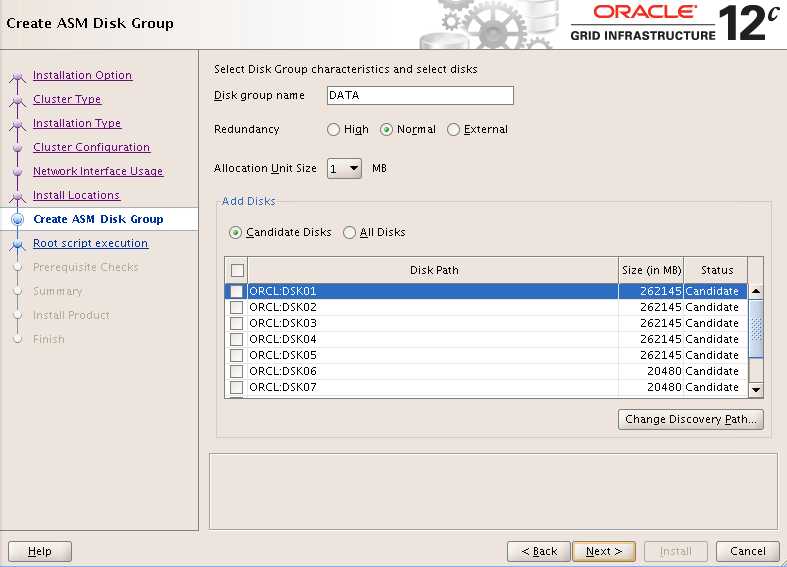

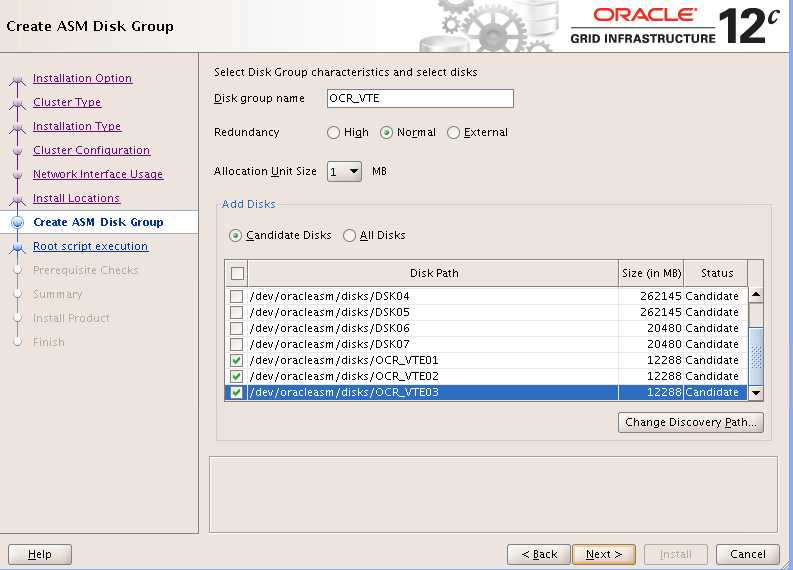

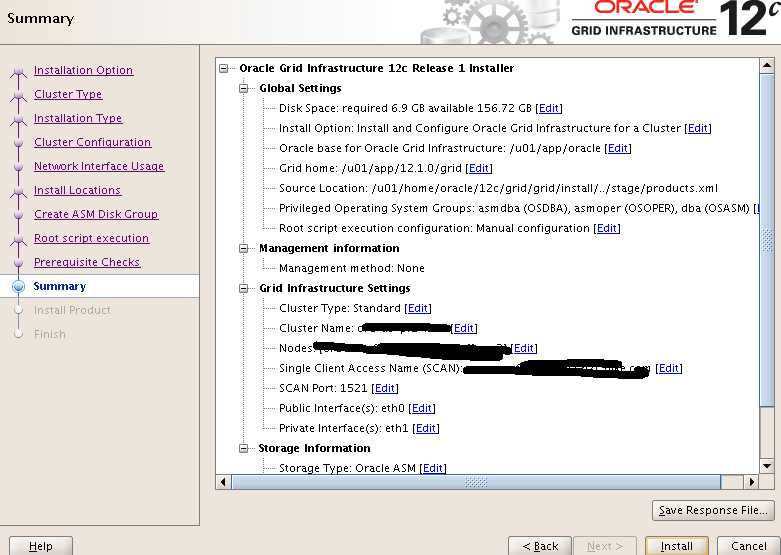

Create the OCR-related disk group here.

Here we create only the OCR_VTE disk group.

OCR_VTE01, OCR_VTE02, and OCR_VTE03 are the three disks that we created with createdisk in the previous step.

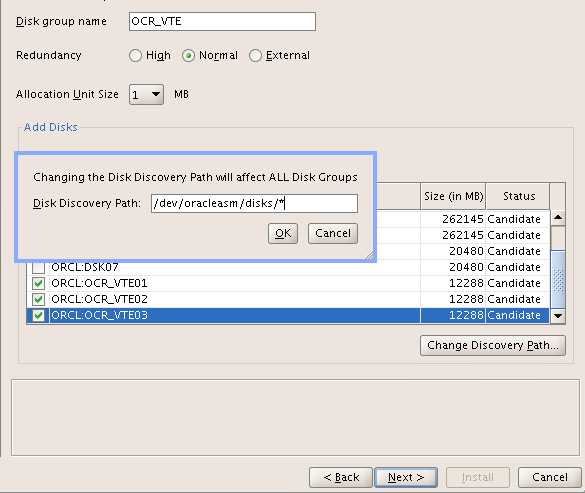

At this step I hit a bug: naming the disk group OCR_VOTE triggers it. The relevant support note text follows.

Case 2.5 same storage sub-system are shared by different clusters and same diskgroup name exists in more than one cluster

<ADR_HOME>/crs/<node>/crs/trace/ocrdump_<pid>.trc

2015-07-17 16:57:00.532160 : OCRRAW: AMDU-00211: Inconsistent disks in diskgroup OCR

Solution:

The issue was investigated in bug 21469989, the cause is that multiple clusters are having the same diskgroup name and seeing the same shared disks, the workaround is to change diskgroup name for the new cluster.

An example will be that both cluster1 and cluster2 are seeing the same physical disks /dev/mappers/disk1-10, disk1-5 are allocated to cluster1 and disk6-10 are allocated to cluster2, however, both cluster are trying to use the same diskgroup name dgsys.

Ref: BUG 21469989 - CLSRSC-507 ROOT.SH FAILING ON NODE 2 WHEN CHECKING GLOBAL CHECKPOINT

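The bug I ran into was this: when the OCR disk group was named OCR_VOTE, the later root.sh run on the second node kept failing with the error AMDU-00211: Inconsistent disks in diskgroup OCR. So when I reinstalled, I no longer used the name OCR_VOTE and named the disk group OCR_VTE instead.

After I opened an SR with Oracle, their analysis confirmed that we had hit the bug described above.

I will write a separate article on how this bug was analyzed once this series is mostly complete.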
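To confirm that a failed root.sh run matches this symptom, one option is to search the Clusterware trace files for the AMDU-00211 error. This is a minimal sketch under assumptions: the helper name `find_amdu_00211` is hypothetical, and the directory to search is whatever `<ADR_HOME>/crs/<node>/crs/trace` resolves to on your system. It relies on `grep -r`, which GNU and BSD grep both provide.

```shell
#!/bin/sh
# Hypothetical helper: list files under a directory that mention AMDU-00211.
# Returns non-zero when no file matches.
find_amdu_00211() {
    grep -rlF "AMDU-00211" "$1"
}

# Example (commented out; substitute the real trace directory of your ADR home):
#   find_amdu_00211 "<ADR_HOME>/crs/<node>/crs/trace"
```

A match only shows that the same error message appears; whether it is the same bug still has to be confirmed with Oracle Support, as was done here.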

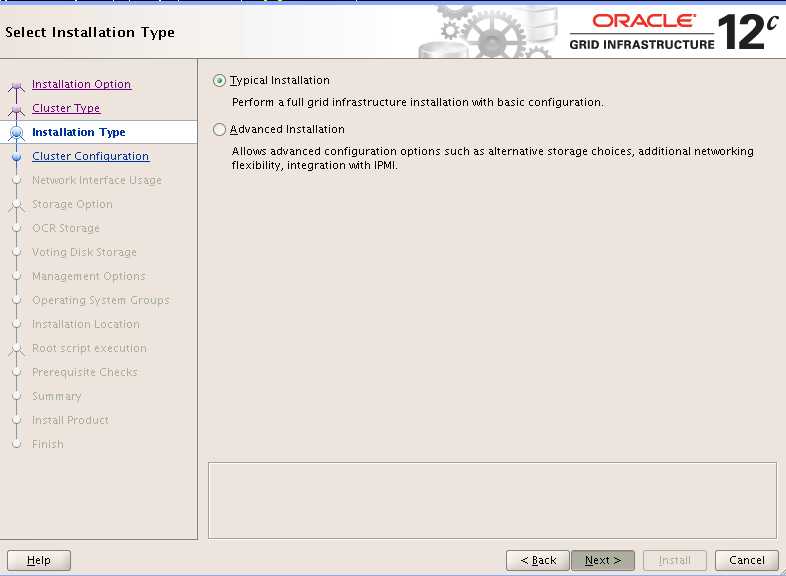

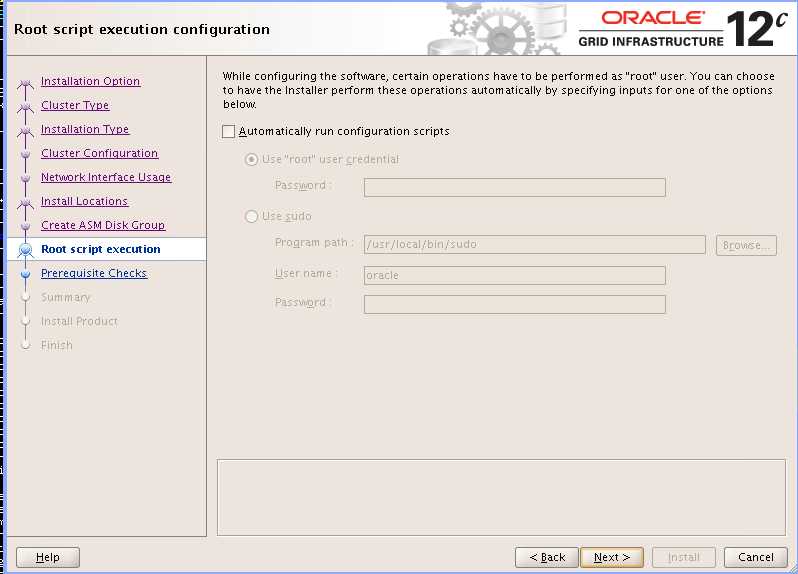

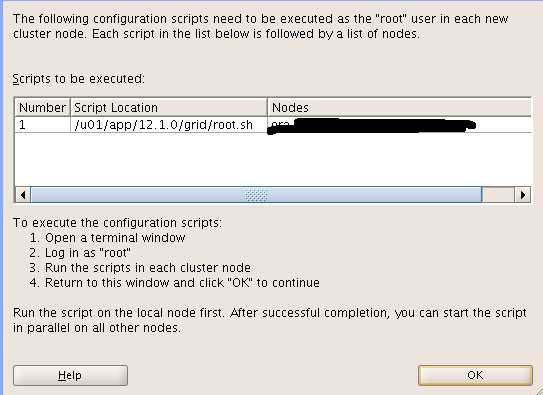

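Make sure root.sh completes successfully on the first node before running it on the second node.

Only the log from node 2 is included here.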

-bash-4.1$ sudo /u01/app/12.1.0/grid/root.sh

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/12.1.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]:

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/12.1.0/grid/crs/install/crsconfig_params

2017/01/11 03:05:04 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.

2017/01/11 03:05:28 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2017/01/11 03:05:29 CLSRSC-363: User ignored prerequisites during installation

OLR initialization - successful

2017/01/11 03:06:43 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.conf'

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-4133: Oracle High Availability Services has been stopped.

CRS-4123: Oracle High Availability Services has been started.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'node2'

...... (omitted)

CRS-4123: Oracle High Availability Services has been started.

2017/01/11 03:14:22 CLSRSC-343: Successfully started Oracle Clusterware stack

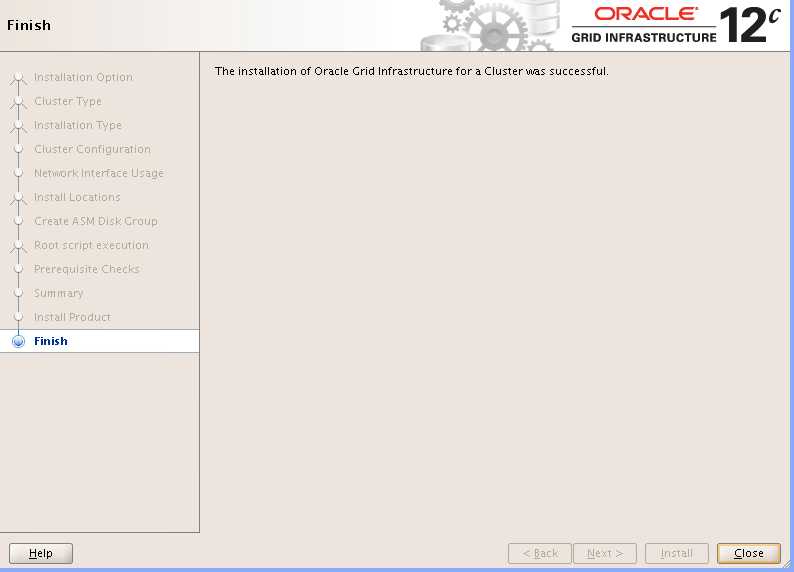

2017/01/11 03:14:37 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
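The rule stated earlier, that root.sh must succeed on the first node before it is run on the second, can be checked from the captured output instead of by eye. This is a minimal sketch: the helper name `root_sh_succeeded` and the log file name `root_sh.log` are hypothetical, and the pattern is taken from the CLSRSC-325 line in the log above.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if captured root.sh output contains the
# CLSRSC-325 "succeeded" message shown in the log above.
root_sh_succeeded() {
    grep -Eq 'CLSRSC-325: .*succeeded' "$1"
}

# Example flow (commented out; run on node 1 first, then repeat on node 2):
#   sudo /u01/app/12.1.0/grid/root.sh 2>&1 | tee root_sh.log
#   root_sh_succeeded root_sh.log || echo "root.sh failed; do not start the next node" >&2
```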

Verify the grid installation with the following commands:

./crsctl stat res -t

crsctl check crs

./crsctl check cluster -all

./cluvfy stage -post crsinst -n node1_name

./cluvfy stage -post crsinst -n node2_name

Post-check for cluster services setup was successful.

crsctl check cluster -all

olsnodes -n

ocrcheck

crsctl query css votedisk

The output of the commands above confirms that the cluster was installed successfully.
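The verification commands can also be run in one pass with a pass/fail summary. This is a minimal sketch: the helper name `run_checks` is hypothetical, it assumes the Grid home bin directory is on `PATH`, and it assumes each command exits non-zero on failure, which is worth confirming for your version before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: run each command given as one quoted argument,
# print PASS/FAIL per command, and return the number of failures.
run_checks() {
    failures=0
    for cmd in "$@"; do
        if sh -c "$cmd" >/dev/null 2>&1; then
            echo "PASS: $cmd"
        else
            echo "FAIL: $cmd"
            failures=$((failures + 1))
        fi
    done
    return "$failures"
}

# Example (commented out; the commands are the ones listed above):
#   run_checks "crsctl check crs" "crsctl check cluster -all" "olsnodes -n" "ocrcheck" "crsctl query css votedisk"
```

The summary discards each command's output, so any command reported as FAIL should be rerun by hand to read the details.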

======================ENDED======================

[Original] Zero-downtime Oracle 12c upgrade using GoldenGate, part 4: cluster installation

Original article: http://www.cnblogs.com/deff/p/6344734.html