
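HDFS shell operations are simple: they work much like Linux commands, and the documentation covers them. Below is a brief summary of how to write an HDFS Java client.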

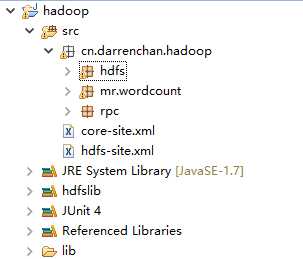

Create the project as shown in the figures, with the client class in the hdfs package:

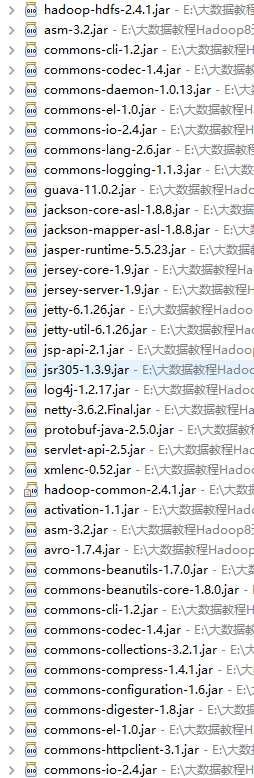

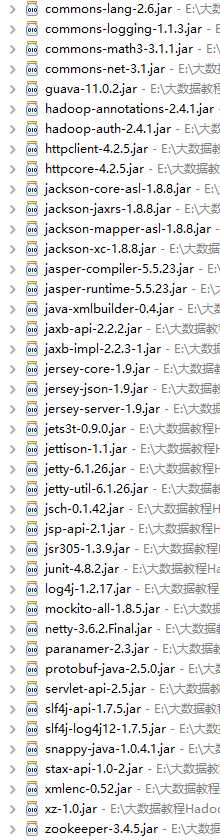

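The client needs Hadoop's JARs on the build path; you will find them in the various subfolders of Hadoop's share directory. I've shared my list for reference.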
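A quick way to confirm that the JARs really ended up on the build path is to try loading a few classes the client depends on. The sketch below is a hypothetical helper (`ClasspathCheck`, not part of Hadoop); the class list is an assumption based on the imports used later in this post, plus `org.apache.hadoop.hdfs.DistributedFileSystem` from the hadoop-hdfs JAR, which implements `hdfs://` URIs.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: reports which classes cannot be loaded from the classpath
public class ClasspathCheck {

    // A few classes the HDFS client needs (assumed list)
    static final String[] REQUIRED = {
        "org.apache.hadoop.conf.Configuration",
        "org.apache.hadoop.fs.FileSystem",
        "org.apache.hadoop.hdfs.DistributedFileSystem",
        "org.apache.commons.io.IOUtils",
        "org.junit.Test"
    };

    // Returns the names that fail to load (without running their static initializers)
    static List<String> missing(String... classNames) {
        List<String> result = new ArrayList<>();
        for (String name : classNames) {
            try {
                Class.forName(name, false, ClasspathCheck.class.getClassLoader());
            } catch (ClassNotFoundException | LinkageError e) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> absent = missing(REQUIRED);
        System.out.println(absent.isEmpty() ? "All required classes found" : "Missing: " + absent);
    }
}
```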

What remains is writing the client code. On Linux this goes fairly smoothly, but on Windows you may run into various puzzling errors, which are covered below.

First, the core-site.xml file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
    <!-- The file system scheme (URI) Hadoop uses, i.e. the address of the HDFS master (NameNode) -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.230.134:9000</value>
    </property>
    <!-- Directory where Hadoop stores the files it generates at runtime -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/home/hadoop/app/hadoop-2.4.1/data</value>
    </property>
</configuration>
```
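Both site files share one layout: a `<configuration>` root holding `<property>` elements, each with a `<name>` and a `<value>`. As a rough, JDK-only illustration (a hypothetical `SiteXmlDemo` class, not Hadoop's own `Configuration` parser), the sketch below collects those name/value pairs and splits the `fs.defaultFS` URI into the scheme, NameNode host and port the client connects to.

```java
import java.io.ByteArrayInputStream;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

import javax.xml.parsers.DocumentBuilderFactory;

import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical demo class; Hadoop's real parser is org.apache.hadoop.conf.Configuration
public class SiteXmlDemo {

    // Collects the <name>/<value> pair of every <property> element, in document order
    static Map<String, String> parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> props = new LinkedHashMap<>();
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element property = (Element) properties.item(i);
            String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
            String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
            props.put(name, value);
        }
        return props;
    }

    public static void main(String[] args) throws Exception {
        // The two properties from core-site.xml (comments and license header omitted)
        String coreSite = "<configuration>"
                + "<property><name>fs.defaultFS</name><value>hdfs://192.168.230.134:9000</value></property>"
                + "<property><name>hadoop.tmp.dir</name><value>/home/hadoop/app/hadoop-2.4.1/data</value></property>"
                + "</configuration>";
        Map<String, String> conf = parse(coreSite);
        // fs.defaultFS is a URI: scheme "hdfs", NameNode host, NameNode RPC port
        URI nameNode = URI.create(conf.get("fs.defaultFS"));
        System.out.println("scheme=" + nameNode.getScheme()
                + " host=" + nameNode.getHost() + " port=" + nameNode.getPort());
        // prints: scheme=hdfs host=192.168.230.134 port=9000
    }
}
```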

Then the hdfs-site.xml file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
```
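`dfs.replication` is the number of copies HDFS keeps of every block (the default is 3). A value of 1 suits a single-node setup with one DataNode, which could not hold a second replica anyway. The arithmetic below is a sketch assuming the Hadoop 2.x default block size of 128 MB and a hypothetical 300 MB file; `ReplicationDemo` is an illustrative class, not Hadoop code.

```java
// Hypothetical demo: how dfs.replication multiplies the storage used by one file
public class ReplicationDemo {

    // Assumed block size: the Hadoop 2.x default dfs.blocksize of 128 MB
    static final long BLOCK_SIZE = 128L * 1024 * 1024;

    // Number of blocks a file is split into (the last block may be partial)
    static long blockCount(long fileSize) {
        return (fileSize + BLOCK_SIZE - 1) / BLOCK_SIZE;
    }

    // Bytes stored across all DataNodes: every block is kept 'replication' times,
    // and a partial last block only occupies its actual size
    static long rawBytes(long fileSize, int replication) {
        return fileSize * replication;
    }

    public static void main(String[] args) {
        long size = 300L * 1024 * 1024; // hypothetical 300 MB file
        for (int replication : new int[] {1, 3}) {
            System.out.println("replication=" + replication + ": "
                    + blockCount(size) + " blocks, "
                    + blockCount(size) * replication + " block replicas, "
                    + rawBytes(size, replication) / (1024 * 1024) + " MB stored");
        }
        // prints:
        // replication=1: 3 blocks, 3 block replicas, 300 MB stored
        // replication=3: 3 blocks, 9 block replicas, 900 MB stored
    }
}
```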

Next, write a utility class, HdfsUtils.java:

```java
package cn.darrenchan.hadoop.hdfs;

import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.net.URI;

import org.apache.commons.io.IOUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.junit.Before;
import org.junit.Test;

/**
 * If you get a permission error because the user is not hadoop, add
 * -DHADOOP_USER_NAME=hadoop to the VM arguments under
 * Run As -> Run Configurations -> Arguments.
 * A more definitive fix is to specify the user in the FileSystem.get call in init().
 *
 * @author chenchi
 */
public class HdfsUtils {

    private FileSystem fs;

    /**
     * Annotated with @Before, so it runs before each test method.
     *
     * @throws Exception
     */
    @Before
    public void init() throws Exception {
        // Reads the xxx-site.xml config files on the classpath and stores their contents in conf
        Configuration conf = new Configuration();
        // Not needed if the config files are placed under src (i.e. on the classpath);
        // values set in code override those read from the config files
        conf.set("fs.defaultFS", "hdfs://192.168.230.134:9000");
        // Only needed when running on Windows
        System.setProperty("hadoop.home.dir", "E:\\大数据教程Hadoop8天\\hadoop-2.4.1");
        // Get a client instance for the concrete file system, acting as user "hadoop"
        fs = FileSystem.get(new URI("hdfs://weekend110:9000"), conf, "hadoop");
    }

    /**
     * Upload a file (low-level version).
     *
     * @throws IOException
     */
    @Test
    public void upload() throws IOException {
        Path dst = new Path("hdfs://192.168.230.134:9000/aa/qingshu.txt");
        // try-with-resources closes both streams; an HDFS output stream that is
        // never closed can leave the uploaded file incomplete
        try (FSDataOutputStream out = fs.create(dst);
                FileInputStream in = new FileInputStream("E:/qingshu.txt")) {
            IOUtils.copy(in, out);
        }
    }

    /**
     * Upload a file (simple version).
     *
     * @throws IOException
     */
    @Test
    public void upload2() throws IOException {
        fs.copyFromLocalFile(new Path("e:/qingshu.txt"),
                new Path("hdfs://weekend110:9000/aaa/bbb/ccc/qingshu100.txt"));
    }

    /**
     * Download a file.
     *
     * @throws IOException
     * @throws IllegalArgumentException
     */
    @Test
    public void download() throws IllegalArgumentException, IOException {
        // This two-argument form throws a NullPointerException, so the four-argument form below is used
        // fs.copyToLocalFile(new Path("hdfs://weekend110:9000/aa/qingshu.txt"),
        //         new Path("e:/chen.txt"));
        // delSrc = false keeps the HDFS copy; useRawLocalFileSystem = true writes
        // through the raw local file system (no .crc checksum file)
        fs.copyToLocalFile(false, new Path("hdfs://weekend110:9000/aa/qingshu.txt"),
                new Path("e:/chen.txt"), true);
    }

    /**
     * List file information.
     *
     * @throws IOException
     * @throws IllegalArgumentException
     * @throws FileNotFoundException
     */
    @Test
    public void listFiles() throws FileNotFoundException, IllegalArgumentException, IOException {
        // listFiles lists files only (no directories) and supports recursive traversal
        RemoteIterator<LocatedFileStatus> files = fs.listFiles(new Path("/"), true);
        while (files.hasNext()) {
            LocatedFileStatus file = files.next();
            Path filePath = file.getPath();
            String fileName = filePath.getName();
            System.out.println(fileName);
        }
        System.out.println("---------------------------------");
        // listStatus lists both files and directories, but has no built-in recursion
        FileStatus[] listStatus = fs.listStatus(new Path("/"));
        for (FileStatus status : listStatus) {
            String name = status.getPath().getName();
            System.out.println(name + (status.isDirectory() ? " directory" : " file"));
        }
    }

    /**
     * Create a directory.
     *
     * @throws IOException
     * @throws IllegalArgumentException
     */
    @Test
    public void mkdir() throws IllegalArgumentException, IOException {
        // The path can also be abbreviated to /aaa/bbb/ccc
        fs.mkdirs(new Path("hdfs://weekend110:9000/aaa/bbb/ccc"));
    }

    /**
     * Delete a file or directory.
     *
     * @throws IOException
     * @throws IllegalArgumentException
     */
    @Test
    public void rm() throws IllegalArgumentException, IOException {
        // Recursive delete; the path can be abbreviated
        fs.delete(new Path("/aa"), true);
        // fs.rename(path, path1); // rename can also move a file to a different directory
    }
}
```
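As the comments in `listFiles()` note, `listStatus` returns only one directory level, so walking a whole tree with it means recursing into every entry whose `isDirectory()` is true. The sketch below shows that recursion pattern with the JDK's `java.nio.file` API standing in for HDFS (`Files.newDirectoryStream` plays the role of `fs.listStatus`), so it runs without a cluster; `RecursiveListDemo` is a hypothetical class, and with Hadoop the loop would have the same shape.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo: one-level listing plus manual recursion, on the local file system
public class RecursiveListDemo {

    // Like listStatus + recursion: returns every regular file below dir
    static List<Path> listFilesRecursively(Path dir) throws IOException {
        List<Path> result = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir)) {
            for (Path entry : entries) {
                if (Files.isDirectory(entry)) {
                    result.addAll(listFilesRecursively(entry)); // descend into the sub-directory
                } else {
                    result.add(entry); // a file: collect it
                }
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        // Builds a small tree in a temp directory (left in place after the run)
        Path root = Files.createTempDirectory("demo");
        Files.createDirectories(root.resolve("aaa/bbb/ccc"));
        Files.createFile(root.resolve("aaa/bbb/ccc/qingshu100.txt"));
        Files.createFile(root.resolve("top.txt"));
        for (Path p : listFilesRecursively(root)) {
            System.out.println(root.relativize(p));
        }
    }
}
```

Directory entries come back in no guaranteed order, so sort the result if a stable listing matters.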

Here is a summary of two errors I ran into:

1. If you get a permission error because the user is not hadoop, add `-DHADOOP_USER_NAME=hadoop` to the VM arguments under Run As -> Run Configurations -> Arguments.

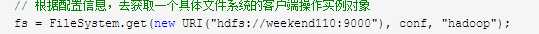

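There is also a more definitive fix: specify the user in the FileSystem.get call inside init(), i.e. `fs = FileSystem.get(new URI("hdfs://weekend110:9000"), conf, "hadoop");`, where the third argument is the user name.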
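Why the `-DHADOOP_USER_NAME` fix works: under simple (non-Kerberos) authentication, Hadoop 2.x takes the user name from the `HADOOP_USER_NAME` environment variable, then from the `HADOOP_USER_NAME` system property (which `-D` sets), and only then falls back to the OS login user. The sketch below models that lookup order; it is a simplified illustration under that assumption (the class `HadoopUserDemo` is hypothetical), not Hadoop's `UserGroupInformation` code.

```java
// Hypothetical demo class; a simplified model, not Hadoop's UserGroupInformation
public class HadoopUserDemo {

    // Assumed lookup order under simple authentication in Hadoop 2.x:
    // HADOOP_USER_NAME environment variable, then system property, then the OS user
    static String effectiveUser() {
        String user = System.getenv("HADOOP_USER_NAME");
        if (user == null) {
            user = System.getProperty("HADOOP_USER_NAME");
        }
        if (user == null) {
            user = System.getProperty("user.name"); // stand-in for the OS login user
        }
        return user;
    }

    public static void main(String[] args) {
        System.out.println("Before: " + effectiveUser());
        // Same effect as -DHADOOP_USER_NAME=hadoop in the VM arguments; in a real client
        // this is assumed to need to run before Hadoop first logs in the user (the first FileSystem.get)
        System.setProperty("HADOOP_USER_NAME", "hadoop");
        System.out.println("After:  " + effectiveUser());
    }
}
```

In this model an existing `HADOOP_USER_NAME` environment variable wins over the `-D` property, which is worth checking when the VM argument seems to have no effect.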

2. When running on Windows, you may get the error: `Could not locate executable null\bin\winutils.exe in the Hadoop binaries`...

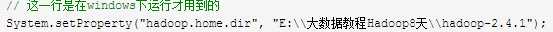

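This is clearly a HADOOP_HOME problem: if HADOOP_HOME is empty, fullExeName necessarily becomes `null\bin\winutils.exe`. The fix is simple: set the HADOOP_HOME environment variable, or, if you would rather not restart your computer, add the following to the program: `System.setProperty("hadoop.home.dir", "E:\\大数据教程Hadoop8天\\hadoop-2.4.1");`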

Note: `E:\\大数据教程Hadoop8天\\hadoop-2.4.1` is where I extracted Hadoop on my machine.
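The `null` in the message comes from plain string concatenation. The sketch below mimics, in simplified form, how Hadoop 2.x's `Shell` class builds the winutils path: it reads the `hadoop.home.dir` system property first, then the `HADOOP_HOME` environment variable, and appends `bin\winutils.exe`. That lookup order is an assumption stated for illustration, and `WinutilsPathDemo` is a hypothetical class, not Hadoop code; it also reports whether the file is actually there.

```java
import java.io.File;

// Hypothetical demo class; a simplified model of how Hadoop 2.x's Shell locates winutils.exe
public class WinutilsPathDemo {

    // Assumed lookup order: hadoop.home.dir system property first, then HADOOP_HOME
    static String resolveHadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        if (home == null) {
            home = System.getenv("HADOOP_HOME");
        }
        return home; // null when neither is set
    }

    // String concatenation turns a null home into the literal text "null"
    static String winutilsPath(String home) {
        return home + File.separator + "bin" + File.separator + "winutils.exe";
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome();
        String path = winutilsPath(home);
        System.out.println("Expected winutils location: " + path);
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
        } else if (!new File(path).isFile()) {
            System.out.println("winutils.exe is missing from " + home + File.separator + "bin");
        }
    }
}
```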

If you run it again, you may still get the same error: look inside your hadoop-x.x.x/bin directory and you will find there is no winutils.exe at all.

So download one from GitHub; here is the well-known address.

Address: https://github.com/srccodes/hadoop-common-2.2.0-bin

After downloading, put winutils.exe into your hadoop-x.x.x/bin directory.


Original article: http://www.cnblogs.com/DarrenChan/p/6424023.html