
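Popular deep learning libraries currently include Caffe, Keras, and Theano. This article uses TensorFlow, the deep learning system open-sourced by Google that was used to build AlphaGo.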

1: Install TensorFlow

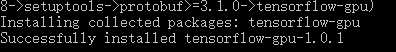

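TensorFlow originally supported only macOS and Linux. It now supports Windows as well, but requires the 64-bit build of Python 3.5. TensorFlow comes in a CPU version and a GPU version. The server used in this article has an NVIDIA graphics card, so the GPU version is installed by entering the following at the cmd command line: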

pip install --upgrade tensorflow-gpu
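Before running pip, it can help to confirm that the interpreter really is a 64-bit Python 3.5 build. The following is a minimal sketch using only the standard library; the function name `python_environment` and the "at least 3.5" comparison are illustrative assumptions, not part of the original article.

```python
import struct
import sys


def python_environment(min_version=(3, 5)):
    """Report whether the running interpreter meets the article's requirements."""
    bits = struct.calcsize("P") * 8  # pointer size in bits: 32 or 64
    return {
        "version": tuple(sys.version_info[:3]),
        "bits": bits,
        "version_ok": tuple(sys.version_info[:2]) >= tuple(min_version),
        "is_64bit": bits == 64,
    }


if __name__ == "__main__":
    info = python_environment()
    print("Python %d.%d.%d, %d-bit" % (info["version"] + (info["bits"],)))
    if not (info["version_ok"] and info["is_64bit"]):
        print("Warning: the article expects 64-bit Python 3.5.")
```

If the script reports a 32-bit interpreter, installing the 64-bit build first is likely to save a failed pip run.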

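If the error "Cannot remove entries from nonexistent file" appears, run the following command:

pip install --upgrade -I setuptools

When the installation succeeds, pip prints a "Successfully installed" message.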

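2: Install the CUDA library

Running TensorFlow on a GPU also requires the CUDA and cuDNN libraries.

Download the Windows 10 (64-bit) CUDA installer, about 1.2 GB, from the following link: https://developer.nvidia.com/cuda-downloads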

Run cuda_8.0.61_win10.exe, then configure the system variables. Under CUDA_PATH in the system variables, add these three paths:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\bin

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\lib\x64
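A quick way to check the directories above is to test whether they exist on disk. This is a sketch only: the helper names `missing_dirs` and `cuda_dirs` are made up for illustration, and the `bin` and `lib\x64` subfolders are assumed to follow the usual CUDA 8.0 layout.

```python
import os


def missing_dirs(paths):
    """Return the entries of `paths` that are not existing directories."""
    return [p for p in paths if not os.path.isdir(p)]


def cuda_dirs(cuda_root):
    """Build the directory list, assuming the usual CUDA 8.0 layout."""
    return [
        cuda_root,
        os.path.join(cuda_root, "bin"),
        os.path.join(cuda_root, "lib", "x64"),
    ]


if __name__ == "__main__":
    # CUDA_PATH is usually set by the installer; the fallback is the default path.
    root = os.environ.get(
        "CUDA_PATH",
        r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0",
    )
    for path in missing_dirs(cuda_dirs(root)):
        print("Missing directory:", path)
```

No output means all three directories were found. Any path that is printed should be checked against the actual installation folder.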

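3: Install the cuDNN library

Download the cuDNN library from the following link:

https://developer.nvidia.com/cudnn

After downloading and extracting it, copy the extracted files into the corresponding folders of the CUDA installation, C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0, so that TensorFlow can load the library at run time.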

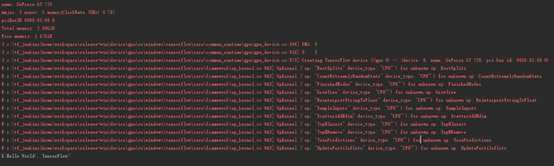
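The copy step can also be scripted. The sketch below merges one folder tree into another with the standard library. `merge_tree` is a hypothetical helper, and the source path in the commented usage is a placeholder for wherever the archive was extracted.

```python
import os
import shutil


def merge_tree(src_root, dst_root):
    """Copy every file under src_root into dst_root, keeping subfolders."""
    copied = []
    for folder, _subdirs, files in os.walk(src_root):
        rel = os.path.relpath(folder, src_root)
        target = os.path.normpath(os.path.join(dst_root, rel))
        os.makedirs(target, exist_ok=True)  # existing CUDA folders are reused
        for name in files:
            destination = os.path.join(target, name)
            shutil.copy2(os.path.join(folder, name), destination)
            copied.append(destination)
    return copied


# Hypothetical paths: adjust the first one to the real extraction folder.
# merge_tree(r"C:\Downloads\cudnn\cuda",
#            r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0")
```

Writing into Program Files normally requires an elevated (administrator) prompt, so the call is left commented out here.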

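4: Test the installation

Create a new .py file in PyCharm and enter:

import tensorflow as tf
hello = tf.constant('Hello world, TensorFlow!')
sess = tf.Session()
print(sess.run(hello))

If the script prints the greeting (under Python 3 it appears as b'Hello world, TensorFlow!'), the configuration succeeded.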
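The hello-world test shows that TensorFlow imports, but not that the GPU is being used. One possible follow-up check, sketched below, lists the devices TensorFlow can see. It relies on `device_lib.list_local_devices()` from `tensorflow.python.client`; the wrapper names `list_device_names` and `has_gpu` are illustrative.

```python
def list_device_names():
    """Return TensorFlow's visible device names, or None if it is not installed."""
    try:
        from tensorflow.python.client import device_lib
    except ImportError:
        return None
    return [device.name for device in device_lib.list_local_devices()]


def has_gpu(device_names):
    """True if any device name mentions a GPU (for example '/gpu:0')."""
    return any("gpu" in name.lower() for name in (device_names or []))


if __name__ == "__main__":
    names = list_device_names()
    if names is None:
        print("TensorFlow could not be imported.")
    else:
        print("Devices:", names)
        print("GPU visible:", has_gpu(names))
```

If only a CPU device is listed, the CUDA and cuDNN steps above are the first place to look.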

1: Import the required modules

import sys

import cv2

import numpy as np

import tensorflow as tf

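2: Define the basic CNN components

Following the LeNet-5 layout with a 32*32 input image, the basic components of the CNN are convolutional layer C1, subsampling layer S1, convolutional layer C2, subsampling layer S2, fully connected layer 1, and classification layer 2. Note that the code listing later in this article resizes images to 28*28 instead.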
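The layer sequence determines the size of the flattened vector fed to the fully connected layer. The sketch below traces the spatial size through the four layers, assuming stride-1 convolutions with 'SAME' padding and 2x2 pooling with stride 2, as in the listing later in this article. The function names are illustrative.

```python
import math


def same_padding_output(size, stride):
    """Spatial output size of a conv or pool layer with 'SAME' padding."""
    return int(math.ceil(size / float(stride)))


def feature_map_sizes(input_size):
    """Trace the spatial size through conv1, pool1, conv2, pool2."""
    sizes = {"input": input_size}
    size = same_padding_output(input_size, 1)  # conv1: stride 1 keeps the size
    sizes["conv1"] = size
    size = same_padding_output(size, 2)        # pool1: 2x2 pooling halves it
    sizes["pool1"] = size
    size = same_padding_output(size, 1)        # conv2: stride 1 keeps the size
    sizes["conv2"] = size
    size = same_padding_output(size, 2)        # pool2: 2x2 pooling halves it
    sizes["pool2"] = size
    return sizes


if __name__ == "__main__":
    for side in (28, 32):
        sizes = feature_map_sizes(side)
        flat = sizes["pool2"] ** 2 * 64  # 64 channels after conv2
        print(side, sizes["pool2"], flat)
```

For a 28*28 input this gives 7*7 feature maps, which is where the 7*7*64 in the listing comes from. A 32*32 input would give 8*8*64, so the fully connected layer would need resizing to match.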

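3: Train the CNN

Shrink the input image to 32*32 with the resize function in OpenCV.

Its interpolation options are:

CV_INTER_NN (cv2.INTER_NEAREST in Python) - nearest-neighbor interpolation.

CV_INTER_LINEAR (cv2.INTER_LINEAR) - bilinear interpolation (the default).

CV_INTER_AREA (cv2.INTER_AREA) - resampling using the pixel area relation. When shrinking an image, this method avoids moiré patterns. When enlarging an image, it behaves like CV_INTER_NN.

CV_INTER_CUBIC (cv2.INTER_CUBIC) - bicubic interpolation.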

output=cv2.resize(img,(32,32),interpolation=cv2.INTER_CUBIC)
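To see why the interpolation choice matters when shrinking, the following toy sketch compares keeping one pixel per block with averaging each block. It is plain Python written for illustration and only mimics the idea behind nearest-neighbor and area resampling; it is not OpenCV's implementation, and it assumes the image sides are divisible by the factor.

```python
def shrink_nearest(image, factor):
    """Keep one source pixel per output pixel (nearest-neighbor style)."""
    return [row[::factor] for row in image[::factor]]


def shrink_area(image, factor):
    """Average each factor x factor block (area-style resampling)."""
    out = []
    for top in range(0, len(image), factor):
        out_row = []
        for left in range(0, len(image[0]), factor):
            block = [image[r][c]
                     for r in range(top, top + factor)
                     for c in range(left, left + factor)]
            out_row.append(sum(block) / float(len(block)))
        out.append(out_row)
    return out


# 8x8 test image with alternating black (0) and white (255) columns.
stripes = [[255 * (col % 2) for col in range(8)] for _ in range(8)]

print(shrink_nearest(stripes, 2)[0])  # every kept pixel is black: stripes vanish
print(shrink_area(stripes, 2)[0])     # uniform mid-gray: 127.5 everywhere
```

Picking single pixels turns the striped image solid black, an aliasing artifact, while block averaging preserves the overall brightness.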

The core code is as follows:

class CNNetwork:
    NUM_CLASSES = 2  # two output classes
    IMAGE_SIZE = 28
    IMAGE_PIXELS = IMAGE_SIZE * IMAGE_SIZE * 3  # 3 color channels

    @staticmethod
    def inference(images_placeholder, keep_prob):
        """Build the network and return the softmax class probabilities."""

        def weight_variable(shape):
            # Small random initial weights break the symmetry between units.
            initial = tf.truncated_normal(shape, stddev=0.1)
            return tf.Variable(initial)

        def bias_variable(shape):
            initial = tf.constant(0.1, shape=shape)
            return tf.Variable(initial)

        def conv2d(x, W):
            return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

        def max_pool_2x2(x):
            return tf.nn.max_pool(x, ksize=[1, 2, 2, 1],
                                  strides=[1, 2, 2, 1], padding='SAME')

        # Restore the flat input to image form; 3 channels to match W_conv1.
        x_image = tf.reshape(images_placeholder, [-1, 28, 28, 3])

        with tf.name_scope('conv1') as scope:
            W_conv1 = weight_variable([5, 5, 3, 32])
            b_conv1 = bias_variable([32])
            h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)

        with tf.name_scope('pool1') as scope:
            h_pool1 = max_pool_2x2(h_conv1)

        with tf.name_scope('conv2') as scope:
            W_conv2 = weight_variable([5, 5, 32, 64])
            b_conv2 = bias_variable([64])
            h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)

        with tf.name_scope('pool2') as scope:
            h_pool2 = max_pool_2x2(h_conv2)

        with tf.name_scope('fc1') as scope:
            # Two 2x2 poolings reduce 28x28 to 7x7, with 64 channels.
            W_fc1 = weight_variable([7*7*64, 1024])
            b_fc1 = bias_variable([1024])
            h_pool2_flat = tf.reshape(h_pool2, [-1, 7*7*64])
            h_fc1 = tf.nn.relu(tf.matmul(h_pool2_flat, W_fc1) + b_fc1)
            h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)

        with tf.name_scope('fc2') as scope:
            W_fc2 = weight_variable([1024, CNNetwork.NUM_CLASSES])
            b_fc2 = bias_variable([CNNetwork.NUM_CLASSES])

        with tf.name_scope('softmax') as scope:
            y_conv = tf.nn.softmax(tf.matmul(h_fc1_drop, W_fc2) + b_fc2)

        return y_conv


if __name__ == '__main__':
    test_image = []
    filenames = []
    # Each command-line argument is the path of an image to classify.
    for i in range(1, len(sys.argv)):
        img = cv2.imread(sys.argv[i])
        img = cv2.resize(img, (CNNetwork.IMAGE_SIZE, CNNetwork.IMAGE_SIZE))
        # Flatten to a vector and scale the pixel values to [0, 1].
        test_image.append(img.flatten().astype(np.float32) / 255.0)
        filenames.append(sys.argv[i])
    test_image = np.asarray(test_image)

    images_placeholder = tf.placeholder("float", shape=(None, CNNetwork.IMAGE_PIXELS))
    labels_placeholder = tf.placeholder("float", shape=(None, CNNetwork.NUM_CLASSES))
    keep_prob = tf.placeholder("float")

    logits = CNNetwork.inference(images_placeholder, keep_prob)

    sess = tf.InteractiveSession()
    saver = tf.train.Saver()
    sess.run(tf.global_variables_initializer())
    # Load previously trained weights from model.ckpt.
    saver.restore(sess, "model.ckpt")

    for i in range(len(test_image)):
        # Dropout is disabled (keep_prob = 1.0) at prediction time.
        probs = logits.eval(feed_dict={
            images_placeholder: [test_image[i]],
            keep_prob: 1.0})[0]
        pred = np.argmax(probs)
        print(filenames[i], pred,
              "{0:10.8f}".format(probs[0]), "{0:10.8f}".format(probs[1]))
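The last two steps of the listing, the softmax layer and the argmax over its output, can be illustrated without TensorFlow. The sketch below is a plain-Python stand-in with made-up input scores; `softmax` and `predict` are illustrative names, not functions from the article's code.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(scores):
    """Return (class index, probabilities), like the argmax step in the listing."""
    probs = softmax(scores)
    return probs.index(max(probs)), probs


# Made-up raw scores for the two classes.
label, probs = predict([2.0, 0.0])
print(label, " ".join("{0:10.8f}".format(p) for p in probs))
```

The printed line has the same shape as the listing's output minus the filename: the predicted class index followed by the probability of each of the two classes.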

TensorFlow installation and demo on Windows 10 64-bit


Original article: http://www.cnblogs.com/marszhw/p/6658990.html