Tags: order one close scores plot fprintf function tin off

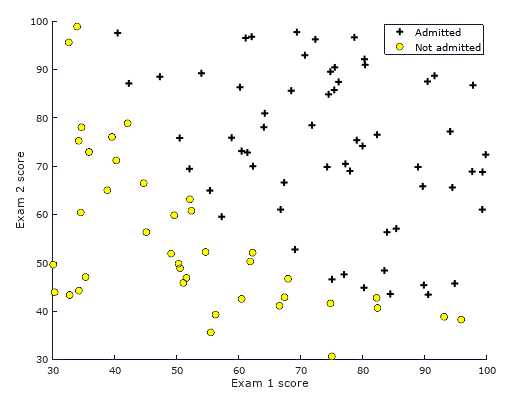

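This Octave simulation addresses the following problem: decide whether a student is admitted based on their scores in two entrance exams. The training set contains the exam scores and admission outcomes of 100 students. We need an algorithm that learns from this data set and then predicts the admission outcome for the scores of new students.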

First, we load and plot the training set:

```octave
%% Initialization
clear ; close all; clc

%% Load Data
% The first two columns contain the exam scores and the third column
% contains the label.
data = load('ex2data1.txt');
X = data(:, [1, 2]); y = data(:, 3);

%% ==================== Part 1: Plotting ====================
% We start the exercise by first plotting the data to understand
% the problem we are working with.
fprintf(['Plotting data with + indicating (y = 1) examples and o ' ...
         'indicating (y = 0) examples.\n']);

plotData(X, y);   % plotData lives in a separate plotData.m (not shown in this post)

% Put some labels
hold on;
% Labels and Legend
xlabel('Exam 1 score')
ylabel('Exam 2 score')

% Specified in plot order
legend('Admitted', 'Not admitted')
hold off;
```
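The script calls `plotData`, which the post does not define. Below is a minimal sketch of it, assuming `X` is an m-by-2 matrix of exam scores and `y` an m-by-1 vector of 0/1 labels, and following the `+` (admitted) / `o` (not admitted) convention announced by the `fprintf` call. Marker colours and sizes are arbitrary choices. The leading `1;` only tells Octave that this file is a script that defines functions; drop it if you save the function as its own `plotData.m`.

```octave
1;  % script marker: lets Octave accept function definitions in this file

function plotData(X, y)
  % Scatter-plot two-feature data: '+' for y == 1, 'o' for y == 0.
  pos = find(y == 1);   % row indices of admitted students
  neg = find(y == 0);   % row indices of students not admitted
  figure; hold on;
  plot(X(pos, 1), X(pos, 2), 'k+', 'LineWidth', 2, 'MarkerSize', 7);
  plot(X(neg, 1), X(neg, 2), 'ko', 'MarkerFaceColor', 'y', 'MarkerSize', 7);
  hold off;
endfunction
```

Plotting the positive examples first keeps the object order consistent with `legend('Admitted', 'Not admitted')`, since `legend` labels objects in the order they were plotted.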

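Next, we fit the training set, directly reusing the gradient descent update derived in our earlier analysis of how logistic regression works: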

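```octave
% Batch gradient descent for logistic regression.
% X is m-by-n and must already include a leading column of ones
% (e.g. X = [ones(m, 1) X]); y is m-by-1 with 0/1 labels.
function [theta, J_history] = gradientDescent(X, y, alpha, iteration)
  [m, n] = size(X);
  theta = zeros(n, 1);
  J_history = zeros(iteration, 1);   % preallocate the cost history
  for i = 1:iteration
    [J, grad] = costFunction(theta, X, y);
    J_history(i) = J;                % cost before this update
    theta = theta - alpha * grad;    % grad = X' * (sigmoid(X*theta) - y) / m
  endfor
endfunction

% Cross-entropy cost J and its gradient for logistic regression.
% Relies on sigmoid(z) = 1 ./ (1 + exp(-z)) from a separate sigmoid.m (not shown).
function [J, grad] = costFunction(theta, X, y)
  m = length(y);
  h = sigmoid(X * theta);                          % predicted probabilities
  J = -(y' * log(h) + (1 - y)' * log(1 - h)) / m;  % average cross-entropy
  grad = (X' * (h - y)) / m;                       % gradient with respect to theta
endfunction
```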
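For reference, these are the formulas the two functions implement, in the same vectorized form. Here $m$ is the number of training examples, $X$ includes the intercept column of ones, and $\log$ is applied element-wise:

$$
\begin{aligned}
h &= g(X\theta), \qquad g(z) = \frac{1}{1 + e^{-z}} \\
J(\theta) &= -\frac{1}{m}\left[\, y^{T} \log h + (1 - y)^{T} \log(1 - h) \,\right] \\
\nabla_{\theta} J &= \frac{1}{m} X^{T} (h - y) \\
\theta &:= \theta - \alpha \, \nabla_{\theta} J
\end{aligned}
$$

Because the gradient has this simple form, the update `theta - alpha * grad` and the expanded `theta - X'*(sigmoid(X*theta) - y)*alpha/m` are the same step.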

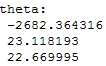

The resulting values of theta are:

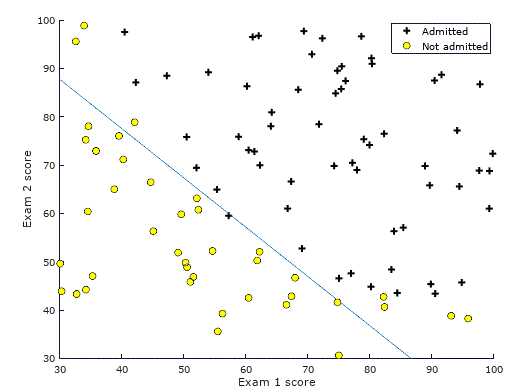
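Once theta is known, the decision boundary and predictions for new students (the goal stated at the start) follow directly from the hypothesis. With `theta = [theta0; theta1; theta2]` (intercept first), the boundary is the line where `theta' * x = 0`, and a student is predicted admitted when `sigmoid(theta' * x) >= 0.5`. A sketch under those assumptions; `predictAdmission` and `boundaryExam2` are hypothetical helper names, not functions from the original post:

```octave
1;  % script marker: lets Octave accept function definitions in this file

function g = sigmoid(z)
  g = 1 ./ (1 + exp(-z));   % element-wise logistic function
endfunction

function p = predictAdmission(theta, X)
  % Hypothetical helper: 1 (admitted) where sigmoid(X*theta) >= 0.5, else 0.
  % X must already contain the leading column of ones.
  p = double(sigmoid(X * theta) >= 0.5);
endfunction

function x2 = boundaryExam2(theta, x1)
  % Hypothetical helper: Exam 2 score on the decision boundary for the given
  % Exam 1 scores, from solving theta(1) + theta(2)*x1 + theta(3)*x2 = 0.
  x2 = -(theta(1) + theta(2) .* x1) ./ theta(3);
endfunction
```

For example, `sigmoid([1 45 85] * theta)` gives the predicted admission probability for a student who scored 45 and 85, `mean(predictAdmission(theta, [ones(size(X, 1), 1) X]) == y)` gives the training-set accuracy, and with the raw score matrix `X` from the script, `x1 = [min(X(:, 1)), max(X(:, 1))]; plot(x1, boundaryExam2(theta, x1), 'b-')` draws the boundary over the scatter plot.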

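The plotted decision boundary is nearly perfect. The only problem is that gradient descent seems to converge rather slowly: I had to use alpha = 0.5 and iteration = 500000 before it converged this far.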

By contrast, the built-in function fminunc reaches a comparable result within 4000 iterations.
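A sketch of the `fminunc` route for comparison. It relies on the cost function returning both the cost and the gradient, so the `GradObj` option can be switched on, and sets `MaxIter` to the 4000 iterations mentioned above. To keep the block self-contained, `sigmoid` and `costFunction` are repeated in condensed form; `fitWithFminunc` is a hypothetical wrapper name, not part of the original post:

```octave
1;  % script marker: lets Octave accept function definitions in this file

function g = sigmoid(z)
  g = 1 ./ (1 + exp(-z));   % element-wise logistic function
endfunction

function [J, grad] = costFunction(theta, X, y)
  % Cross-entropy cost and its gradient for logistic regression.
  m = length(y);
  h = sigmoid(X * theta);
  J = -(y' * log(h) + (1 - y)' * log(1 - h)) / m;
  grad = X' * (h - y) / m;
endfunction

function [theta, cost] = fitWithFminunc(X, y, maxIter)
  % Hypothetical wrapper: prepend the intercept column, then let fminunc
  % minimise costFunction using the analytic gradient it returns.
  Xb = [ones(size(X, 1), 1) X];
  initial_theta = zeros(size(Xb, 2), 1);
  options = optimset('GradObj', 'on', 'MaxIter', maxIter);
  [theta, cost] = fminunc(@(t) costFunction(t, Xb, y), initial_theta, options);
endfunction
```

With the exam data loaded as in the first script, the call would be `[theta, cost] = fitWithFminunc(data(:, [1, 2]), data(:, 3), 4000);`. Unlike the fixed-step loop, fminunc chooses its own step sizes, which is one reason it typically needs far fewer iterations.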

Octave Simulation of the Logistic Classification Algorithm


Original article: http://www.cnblogs.com/rhyswang/p/6853657.html