
Oracle has published official documentation on running Coherence in a Docker environment, which you can use as a reference:

https://github.com/oracle/docker-images/tree/master/OracleCoherence

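For anyone experienced with Coherence, though, a simple out-of-the-box setup is only a starting point. Customer environments always carry their own features and customized configuration, so this article looks at how to run an already customized Coherence architecture on the open-source Kubernetes framework.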

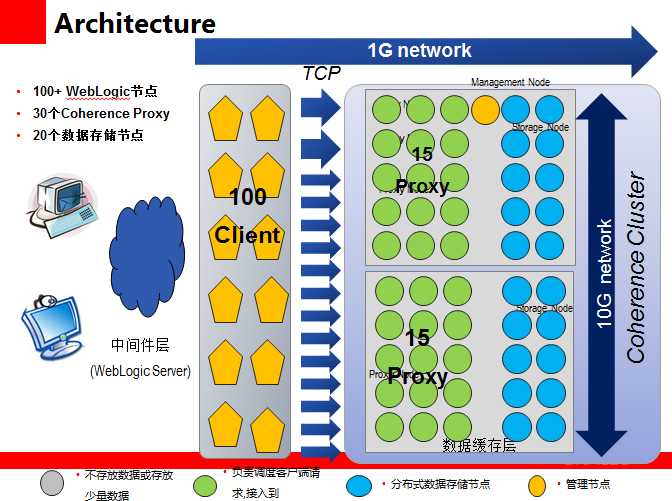

Background architecture

Without further ado, here is a typical customer Coherence architecture.

Architecture notes:

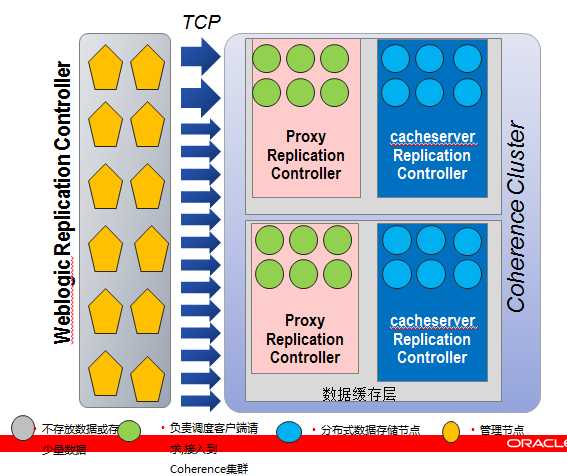

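Architecture on Kubernetes

The differences are:

The problem now is that the IP of each proxy server is not fixed, so the WebLogic side would have to point at backend IPs that change dynamically in order to reach the cluster. The initial idea is to bind the proxy pods to a Service and connect through the service name.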

So the first thing to configure is DNS, so that every WebLogic pod can resolve the service name and be directed to the actual address of a proxy server.
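One way to check this from the command line is sketched below. It assumes the cluster DNS add-on serves the default `cluster.local` domain and that the pod image provides `getent`; both are assumptions, and the helper names `svc_fqdn` and `check_dns` are made up for illustration.

```shell
# Hypothetical helpers; the names are illustrative only.

# Build the fully qualified DNS name of a Service.
# $1 = service name, $2 = namespace (default: default),
# $3 = cluster domain (default: cluster.local, an assumption).
svc_fqdn() {
    printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

# Resolve a host name from inside a pod. Needs a running cluster
# and an image that ships getent (an assumption).
# $1 = pod name, $2 = host name to resolve.
check_dns() {
    kubectl exec "$1" -- getent hosts "$2"
}

# Example (not executed here), using the pod and Service from this article:
#   check_dns weblogic "$(svc_fqdn coherenceproxysvc)"
```

If the lookup prints the ClusterIP of the Service, name resolution inside the pod is working; an empty result points at the DNS add-on rather than at Coherence.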

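Building the Coherence proxy image

The Coherence cache server and proxy server could actually share a single image and switch between configuration files through parameters, but here we take the simplest approach and build a separate image for each role.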
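For comparison, the single-image alternative could look roughly like the sketch below, where the start script derives its JVM properties from a role name. The `role_props` helper and the idea of passing the role through an environment variable such as `COH_ROLE` are hypothetical and not part of the Oracle start.sh; the configuration file names are the ones used in this article.

```shell
# Map a role name to the cache configuration file it should load.
cache_config_for_role() {
    case "$1" in
        proxy)   echo "proxy-cache-config.xml" ;;
        storage) echo "storage-cache-config.xml" ;;
        *)       echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Local storage is enabled only on storage members.
local_storage_for_role() {
    case "$1" in
        storage) echo "true" ;;
        *)       echo "false" ;;
    esac
}

# Print the extra JVM properties for a role; fails on an unknown role.
role_props() {
    cfg=$(cache_config_for_role "$1") || return 1
    echo "-Dtangosol.coherence.distributed.localstorage=$(local_storage_for_role "$1") -Dtangosol.coherence.cacheconfig=${cfg}"
}
```

In start.sh the result could be appended to PROPS, for example `PROPS="${PROPS} $(role_props "${COH_ROLE:-storage}")"`, with the role set per ReplicationController through an `env` entry. The rest of this article keeps the two-image approach.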

In the directory /home/weblogic/docker/OracleCoherence/dockerfiles/12.2.1.0.0, add a file named proxy-cache-config.xml to serve as the cache configuration for the proxy server.

    [root@k8s-node-1 12.2.1.0.0]# cat proxy-cache-config.xml
    <?xml version="1.0"?>
    <!DOCTYPE cache-config SYSTEM "cache-config.dtd">
    <cache-config>
      <caching-scheme-mapping>
        <cache-mapping>
          <cache-name>*</cache-name>
          <scheme-name>distributed-scheme</scheme-name>
        </cache-mapping>
      </caching-scheme-mapping>
      <caching-schemes>
        <!-- Distributed caching scheme. -->
        <distributed-scheme>
          <scheme-name>distributed-scheme</scheme-name>
          <service-name>DistributedCache</service-name>
          <thread-count>50</thread-count>
          <backup-count>1</backup-count>
          <backing-map-scheme>
            <local-scheme>
              <scheme-name>LocalSizeLimited</scheme-name>
            </local-scheme>
          </backing-map-scheme>
          <autostart>true</autostart>
          <local-storage>false</local-storage>
        </distributed-scheme>
        <local-scheme>
          <scheme-name>LocalSizeLimited</scheme-name>
          <eviction-policy>LRU</eviction-policy>
          <high-units>500</high-units>
          <unit-calculator>BINARY</unit-calculator>
          <unit-factor>1048576</unit-factor>
          <expiry-delay>48h</expiry-delay>
        </local-scheme>
        <proxy-scheme>
          <service-name>ExtendTcpProxyService</service-name>
          <thread-count>5</thread-count>
          <acceptor-config>
            <tcp-acceptor>
              <local-address>
                <address>0.0.0.0</address>
                <port>9099</port>
              </local-address>
            </tcp-acceptor>
          </acceptor-config>
          <autostart>true</autostart>
        </proxy-scheme>
      </caching-schemes>
    </cache-config>

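Note the address here: 0.0.0.0 makes the acceptor listen on all local interfaces, so it works with whatever IP the pod is assigned.

Modify the Dockerfile; it is best to create a new Dockerfile.proxy: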

    [root@k8s-node-1 12.2.1.0.0]# cat Dockerfile.proxy
    # LICENSE CDDL 1.0 + GPL 2.0
    #
    # ORACLE DOCKERFILES PROJECT
    # --------------------------
    # This is the Dockerfile for Coherence 12.2.1 Standalone Distribution
    #
    # REQUIRED BASE IMAGE TO BUILD THIS IMAGE
    # ---------------------------------------
    # This Dockerfile requires the base image oracle/serverjre:8
    # (see https://github.com/oracle/docker-images/tree/master/OracleJava)
    #
    # REQUIRED FILES TO BUILD THIS IMAGE
    # ----------------------------------
    # (1) fmw_12.2.1.0.0_coherence_Disk1_1of1.zip
    #
    # Download the Standalone installer from http://www.oracle.com/technetwork/middleware/coherence/downloads/index.html
    #
    # HOW TO BUILD THIS IMAGE
    # -----------------------
    # Put all downloaded files in the same directory as this Dockerfile
    # Run:
    # $ sh buildDockerImage.sh -s
    #
    # or if your Docker client requires root access you can run:
    # $ sudo sh buildDockerImage.sh -s
    #
    # Pull base image
    # ---------------
    FROM oracle/serverjre:8

    # Maintainer
    # ----------
    MAINTAINER Jonathan Knight

    # Environment variables required for this build (do NOT change)
    ENV FMW_PKG=fmw_12.2.1.0.0_coherence_Disk1_1of1.zip \
        FMW_JAR=fmw_12.2.1.0.0_coherence.jar \
        ORACLE_HOME=/u01/oracle/oracle_home \
        PATH=$PATH:/usr/java/default/bin:/u01/oracle/oracle_home/oracle_common/common/bin \
        CONFIG_JVM_ARGS="-Djava.security.egd=file:/dev/./urandom"

    ENV COHERENCE_HOME=$ORACLE_HOME/coherence

    # Copy files required to build this image
    COPY $FMW_PKG install.file oraInst.loc /u01/
    COPY start.sh /start.sh
    COPY proxy-cache-config.xml $COHERENCE_HOME/conf/proxy-cache-config.xml

    RUN useradd -b /u01 -m -s /bin/bash oracle && \
        echo oracle:oracle | chpasswd && \
        chmod +x /start.sh && \
        chmod a+xr /u01 && \
        chown -R oracle:oracle /u01

    USER oracle

    # Install and configure Oracle JDK
    # Setup required packages (unzip), filesystem, and oracle user
    # ------------------------------------------------------------
    RUN cd /u01 && $JAVA_HOME/bin/jar xf /u01/$FMW_PKG && cd - && \
        $JAVA_HOME/bin/java -jar /u01/$FMW_JAR -silent -responseFile /u01/install.file -invPtrLoc /u01/oraInst.loc -jreLoc $JAVA_HOME -ignoreSysPrereqs -force -novalidation ORACLE_HOME=$ORACLE_HOME && \
        rm /u01/$FMW_JAR /u01/$FMW_PKG /u01/oraInst.loc /u01/install.file

    ENTRYPOINT ["/start.sh"]

The only difference from the original Dockerfile is that it copies the file created above into the image.

Then modify start.sh:

    [root@k8s-node-1 12.2.1.0.0]# cat start.sh
    #!/usr/bin/env sh

    #!/bin/sh -e -x -u

    trap "echo TRAPed signal" HUP INT QUIT KILL TERM

    main() {
        COMMAND=server
        SCRIPT_NAME=$(basename "${0}")
        MAIN_CLASS="com.tangosol.net.DefaultCacheServer"

        case "${1}" in
            server) COMMAND=${1}; shift ;;
            console) COMMAND=${1}; shift ;;
            queryplus) COMMAND=queryPlus; shift ;;
            help) COMMAND=${1}; shift ;;
        esac

        case ${COMMAND} in
            server) server ;;
            console) console ;;
            queryPlus) queryPlus ;;
            help) usage; exit ;;
            *) server ;;
        esac
    }

    # ---------------------------------------------------------------------------
    # Display the help text for this script
    # ---------------------------------------------------------------------------
    usage() {
        echo "Usage: ${SCRIPT_NAME} [type] [args]"
        echo ""
        echo "type: - the type of process to run, must be one of:"
        echo " server - runs a storage enabled DefaultCacheServer"
        echo " (server is the default if type is omitted)"
        echo " console - runs a storage disabled Coherence console"
        echo " query - runs a storage disabled QueryPlus session"
        echo " help - displays this usage text"
        echo ""
        echo "args: - any subsequent arguments are passed as program args to the main class"
        echo ""
        echo "Environment Variables: The following environment variables affect the script operation"
        echo ""
        echo "JAVA_OPTS - this environment variable adds Java options to the start command,"
        echo " for example memory and other system properties"
        echo ""
        echo "COH_WKA - Sets the WKA address to use to discover a Coherence cluster."
        echo ""
        echo "COH_EXTEND_PORT - If set the Extend Proxy Service will listen on this port instead"
        echo " of the default ephemeral port."
        echo ""
        echo "Any jar files added to the /lib folder will be pre-pended to the classpath."
        echo "The /conf folder is on the classpath so any files in this folder can be loaded by the process."
        echo ""
    }

    server() {
        PROPS=""
        CLASSPATH=""
        MAIN_CLASS="com.tangosol.net.DefaultCacheServer"
        start
    }

    console() {
        PROPS="-Dcoherence.localstorage=false"
        CLASSPATH=""
        MAIN_CLASS="com.tangosol.net.CacheFactory"
        start
    }

    queryPlus() {
        PROPS="-Dcoherence.localstorage=false"
        CLASSPATH="${COHERENCE_HOME}/lib/jline.jar"
        MAIN_CLASS="com.tangosol.coherence.dslquery.QueryPlus"
        start
    }

    start() {
        if [ "${COH_WKA}" != "" ]
        then
            PROPS="${PROPS} -Dcoherence.wka=${COH_WKA}"
        fi

        if [ "${COH_EXTEND_PORT}" != "" ]
        then
            PROPS="${PROPS} -Dcoherence.cacheconfig=extend-cache-config.xml -Dcoherence.extend.port=${COH_EXTEND_PORT}"
        fi

        CLASSPATH="/conf:/lib/*:${CLASSPATH}:${COHERENCE_HOME}/conf:${COHERENCE_HOME}/lib/coherence.jar"

        CMD="${JAVA_HOME}/bin/java -cp ${CLASSPATH} ${PROPS} -Dtangosol.coherence.distributed.localstorage=false -Dtangosol.coherence.cacheconfig=proxy-cache-config.xml ${JAVA_OPTS} ${MAIN_CLASS} ${COH_MAIN_ARGS}"

        echo "Starting Coherence ${COMMAND} using ${CMD}"

        exec ${CMD}
    }

    main "$@"

The main change is in the final java -cp command line. Then adjust the corresponding build script.

Finally, build the image (-s selects the standalone distribution):

sh buildProxyServer.sh -v 12.2.1.0.0 -s
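The modified build script itself is not shown here. As a rough sketch, assuming it boils down to a `docker build` against the new Dockerfile and produces the tag that appears in the `docker images` listing further down, the command can be composed like this (`image_tag` and `build_cmd` are illustrative helper names, not part of the Oracle scripts):

```shell
# Compose the image tag for a Coherence version and role.
# $1 = version (e.g. 12.2.1.0.0), $2 = role (proxy or cacheserver).
image_tag() {
    printf 'oracle/coherence:%s-%s\n' "$1" "$2"
}

# Print, without running it, the docker build command for a role.
# Assumes the per-role Dockerfile is named Dockerfile.<role>.
build_cmd() {
    printf 'docker build -f Dockerfile.%s -t %s .\n' "$2" "$(image_tag "$1" "$2")"
}

# Example (not executed here):
#   build_cmd 12.2.1.0.0 proxy
```

Printing the command instead of running it makes it easy to review before building both roles from the same directory.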

Building the Coherence cache server image

Following a similar approach, first create storage-cache-config.xml:

    [root@k8s-node-1 12.2.1.0.0]# cat storage-cache-config.xml
    <?xml version="1.0"?>
    <!DOCTYPE cache-config SYSTEM "cache-config.dtd">
    <cache-config>
      <caching-scheme-mapping>
        <cache-mapping>
          <cache-name>*</cache-name>
          <scheme-name>distributed-pof</scheme-name>
        </cache-mapping>
      </caching-scheme-mapping>
      <caching-schemes>
        <distributed-scheme>
          <scheme-name>distributed-pof</scheme-name>
          <service-name>DistributedCache</service-name>
          <backing-map-scheme>
            <local-scheme/>
          </backing-map-scheme>
          <listener/>
          <autostart>true</autostart>
          <local-storage>true</local-storage>
        </distributed-scheme>
      </caching-schemes>
    </cache-config>

Then create Dockerfile.cacheserver:

    [root@k8s-node-1 12.2.1.0.0]# cat Dockerfile.cacheserver
    # LICENSE CDDL 1.0 + GPL 2.0
    #
    # ORACLE DOCKERFILES PROJECT
    # --------------------------
    # This is the Dockerfile for Coherence 12.2.1 Standalone Distribution
    #
    # REQUIRED BASE IMAGE TO BUILD THIS IMAGE
    # ---------------------------------------
    # This Dockerfile requires the base image oracle/serverjre:8
    # (see https://github.com/oracle/docker-images/tree/master/OracleJava)
    #
    # REQUIRED FILES TO BUILD THIS IMAGE
    # ----------------------------------
    # (1) fmw_12.2.1.0.0_coherence_Disk1_1of1.zip
    #
    # Download the Standalone installer from http://www.oracle.com/technetwork/middleware/coherence/downloads/index.html
    #
    # HOW TO BUILD THIS IMAGE
    # -----------------------
    # Put all downloaded files in the same directory as this Dockerfile
    # Run:
    # $ sh buildDockerImage.sh -s
    #
    # or if your Docker client requires root access you can run:
    # $ sudo sh buildDockerImage.sh -s
    #
    # Pull base image
    # ---------------
    FROM oracle/serverjre:8

    # Maintainer
    # ----------
    MAINTAINER Jonathan Knight

    # Environment variables required for this build (do NOT change)
    ENV FMW_PKG=fmw_12.2.1.0.0_coherence_Disk1_1of1.zip \
        FMW_JAR=fmw_12.2.1.0.0_coherence.jar \
        ORACLE_HOME=/u01/oracle/oracle_home \
        PATH=$PATH:/usr/java/default/bin:/u01/oracle/oracle_home/oracle_common/common/bin \
        CONFIG_JVM_ARGS="-Djava.security.egd=file:/dev/./urandom"

    ENV COHERENCE_HOME=$ORACLE_HOME/coherence

    # Copy files required to build this image
    COPY $FMW_PKG install.file oraInst.loc /u01/
    COPY start.sh /start.sh
    COPY storage-cache-config.xml $COHERENCE_HOME/conf/storage-cache-config.xml

    RUN useradd -b /u01 -m -s /bin/bash oracle && \
        echo oracle:oracle | chpasswd && \
        chmod +x /start.sh && \
        chmod a+xr /u01 && \
        chown -R oracle:oracle /u01

    USER oracle

    # Install and configure Oracle JDK
    # Setup required packages (unzip), filesystem, and oracle user
    # ------------------------------------------------------------
    RUN cd /u01 && $JAVA_HOME/bin/jar xf /u01/$FMW_PKG && cd - && \
        $JAVA_HOME/bin/java -jar /u01/$FMW_JAR -silent -responseFile /u01/install.file -invPtrLoc /u01/oraInst.loc -jreLoc $JAVA_HOME -ignoreSysPrereqs -force -novalidation ORACLE_HOME=$ORACLE_HOME && \
        rm /u01/$FMW_JAR /u01/$FMW_PKG /u01/oraInst.loc /u01/install.file

    ENTRYPOINT ["/start.sh"]

Finally, modify start.sh. The key statement is:

CMD="${JAVA_HOME}/bin/java -cp ${CLASSPATH} ${PROPS} -Dtangosol.coherence.distributed.localstorage=true -Dtangosol.coherence.cacheconfig=storage-cache-config.xml ${JAVA_OPTS} ${MAIN_CLASS} ${COH_MAIN_ARGS}"

Finally, build the image:

sh buildCacheServer.sh -v 12.2.1.0.0 -s

docker images now shows:

    [root@k8s-node-1 12.2.1.0.0]# docker images
    REPOSITORY         TAG                      IMAGE ID       CREATED             SIZE
    1213-domain        v2                       326bf14bb29f   About an hour ago   2.055 GB
    oracle/coherence   12.2.1.0.0-cacheserver   57a90e86e1d2   20 hours ago        625 MB
    oracle/coherence   12.2.1.0.0-proxy         238c85d61468   23 hours ago        625 MB

Creating the ReplicationControllers on the master node

coherence-proxy.yaml

    [root@k8s-master ~]# cat coherence-proxy.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: coherence-proxy
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            coherencecluster: "proxy"
            version: "0.1"
        spec:
          containers:
          - name: coherenceproxy
            image: oracle/coherence:12.2.1.0.0-proxy
            ports:
            - containerPort: 9099
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: coherenceproxysvc
      labels:
        coherencecluster: proxy
    spec:
      type: NodePort
      ports:
      - port: 9099
        protocol: TCP
        targetPort: 9099
        nodePort: 30033
      selector:
        coherencecluster: proxy

coherence-cacheserver.yaml

    [root@k8s-master ~]# cat coherence-cacheserver.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: coherence-cacheserver
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            coherencecluster: "mycluster"
            version: "0.1"
        spec:
          containers:
          - name: coherencecacheserver
            image: oracle/coherence:12.2.1.0.0-cacheserver

kubectl create -f coherence-proxy.yaml

kubectl create -f coherence-cacheserver.yaml

Then check whether the pods started successfully:

    [root@k8s-master ~]# kubectl get pods -o wide
    NAME                          READY   STATUS    RESTARTS   AGE   IP             NODE
    coherence-cacheserver-96kz7   1/1     Running   0          1h    192.168.33.4   k8s-node-1
    coherence-cacheserver-z67ht   1/1     Running   0          1h    192.168.33.3   k8s-node-1
    coherence-proxy-j7r0w         1/1     Running   0          1h    192.168.33.5   k8s-node-1
    coherence-proxy-tg8n8         1/1     Running   0          1h    192.168.33.6   k8s-node-1

Log in to a pod and check the Coherence cluster membership. Judging by the member count, all four members have joined the cluster.

    MasterMemberSet(
      ThisMember=Member(Id=8, Timestamp=2017-06-16 10:45:04.244, Address=192.168.33.6:38184, MachineId=42359, Location=machine:coherence-proxy-tg8n8,process:1, Role=CoherenceServer)
      OldestMember=Member(Id=1, Timestamp=2017-06-16 10:24:16.941, Address=192.168.33.4:46114, MachineId=10698, Location=machine:coherence-cacheserver-96kz7,process:1, Role=CoherenceServer)
      ActualMemberSet=MemberSet(Size=4
        Member(Id=1, Timestamp=2017-06-16 10:24:16.941, Address=192.168.33.4:46114, MachineId=10698, Location=machine:coherence-cacheserver-96kz7,process:1, Role=CoherenceServer)
        Member(Id=2, Timestamp=2017-06-16 10:24:20.836, Address=192.168.33.3:44182, MachineId=23654, Location=machine:coherence-cacheserver-z67ht,process:1, Role=CoherenceServer)
        Member(Id=7, Timestamp=2017-06-16 10:45:02.144, Address=192.168.33.5:39932, MachineId=47892, Location=machine:coherence-proxy-j7r0w,process:1, Role=CoherenceServer)
        Member(Id=8, Timestamp=2017-06-16 10:45:04.244, Address=192.168.33.6:38184, MachineId=42359, Location=machine:coherence-proxy-tg8n8,process:1, Role=CoherenceServer)
        )
      MemberId|ServiceJoined|MemberState
        1|2017-06-16 10:24:16.941|JOINED,
        2|2017-06-16 10:24:20.836|JOINED,
        7|2017-06-16 10:45:02.144|JOINED,
        8|2017-06-16 10:45:04.244|JOINED
      RecycleMillis=1200000
      RecycleSet=MemberSet(Size=4
        Member(Id=3, Timestamp=2017-06-16 10:25:25.741, Address=192.168.33.6:37346, MachineId=50576)
        Member(Id=4, Timestamp=2017-06-16 10:25:25.74, Address=192.168.33.5:46743, MachineId=65007)
        Member(Id=5, Timestamp=2017-06-16 10:43:45.413, Address=192.168.33.5:37568, MachineId=44312)
        Member(Id=6, Timestamp=2017-06-16 10:43:45.378, Address=192.168.33.6:33362, MachineId=42635)
        )
      )

    TcpRing{Connections=[7]}
    IpMonitor{Addresses=3, Timeout=15s}
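The member count can also be pulled out of the log without reading the whole dump. The sketch below assumes the MasterMemberSet dump is written to the container's standard output, so that `kubectl logs` can see it; `member_count` is a hypothetical helper name.

```shell
# Read Coherence log text on stdin and print the size of the most
# recent ActualMemberSet. RecycleSet entries are ignored because the
# pattern requires the "ActualMemberSet=" prefix.
member_count() {
    sed -n 's/.*ActualMemberSet=MemberSet(Size=\([0-9][0-9]*\).*/\1/p' | tail -n 1
}

# Example (not executed here), using a pod name from the listing above:
#   kubectl logs coherence-proxy-tg8n8 | member_count
```

With two cache servers and two proxies the expected value is 4; a smaller number suggests that some pods formed a separate cluster or have not joined yet.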

Check the Service status:

    [root@k8s-master ~]# kubectl get services
    NAME                CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
    coherenceproxysvc   10.254.22.102   <nodes>       9099:30033/TCP   1h
    kubernetes          10.254.0.1      <none>        443/TCP          26d

coherenceproxysvc is up.

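Configuring the WebLogic pod as a Coherence client

The client-side Coherence configuration has to be fed into the WebLogic startup environment (setDomainEnv.sh), so switch to the WebLogic directory:

    [root@k8s-node-1 1213-domain]# pwd
    /home/weblogic/docker/OracleWebLogic/samples/1213-domain

Create a new proxy-client.xml: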

    [root@k8s-node-1 1213-domain]# cat proxy-client.xml
    <?xml version="1.0"?>
    <!DOCTYPE cache-config SYSTEM "cache-config.dtd">
    <cache-config>
      <caching-scheme-mapping>
        <cache-mapping>
          <cache-name>*</cache-name>
          <scheme-name>extend-dist</scheme-name>
        </cache-mapping>
      </caching-scheme-mapping>
      <caching-schemes>
        <remote-cache-scheme>
          <scheme-name>extend-dist</scheme-name>
          <service-name>ExtendTcpCacheService</service-name>
          <initiator-config>
            <tcp-initiator>
              <remote-addresses>
                <socket-address>
                  <address>coherenceproxysvc</address>
                  <port>9099</port>
                </socket-address>
              </remote-addresses>
              <connect-timeout>10s</connect-timeout>
            </tcp-initiator>
            <outgoing-message-handler>
              <request-timeout>5s</request-timeout>
            </outgoing-message-handler>
          </initiator-config>
        </remote-cache-scheme>
      </caching-schemes>
    </cache-config>

Note that the address must point to the Service name, which is resolved through DNS.

Modify the Dockerfile; the key change is adding JAVA_OPTIONS and CLASSPATH.

    FROM oracle/weblogic:12.1.3-generic

    # Maintainer
    # ----------
    MAINTAINER Bruno Borges <bruno.borges@oracle.com>

    # WLS Configuration
    # -------------------------------
    ARG ADMIN_PASSWORD
    ARG PRODUCTION_MODE

    ENV DOMAIN_NAME="base_domain" \
        DOMAIN_HOME="/u01/oracle/user_projects/domains/base_domain" \
        ADMIN_PORT="7001" \
        ADMIN_HOST="wlsadmin" \
        NM_PORT="5556" \
        MS_PORT="7002" \
        PRODUCTION_MODE="${PRODUCTION_MODE:-prod}" \
        JAVA_OPTIONS="-Dweblogic.security.SSL.ignoreHostnameVerification=true -Dtangosol.coherence.distributed.localstorage=false -Dtangosol.coherence.cacheconfig=/u01/oracle/proxy-client.xml" \
        CLASSPATH="/u01/oracle/coherence.jar" \
        PATH=$PATH:/u01/oracle/oracle_common/common/bin:/u01/oracle/wlserver/common/bin:/u01/oracle/user_projects/domains/base_domain/bin:/u01/oracle

    # Add files required to build this image
    USER oracle
    COPY container-scripts/* /u01/oracle/
    COPY coherence.jar /u01/oracle/
    COPY proxy-client.xml /u01/oracle/

    # Configuration of WLS Domain
    WORKDIR /u01/oracle
    RUN /u01/oracle/wlst /u01/oracle/create-wls-domain.py && \
        mkdir -p /u01/oracle/user_projects/domains/base_domain/servers/AdminServer/security && \
        echo "username=weblogic" > /u01/oracle/user_projects/domains/base_domain/servers/AdminServer/security/boot.properties && \
        echo "password=$ADMIN_PASSWORD" >> /u01/oracle/user_projects/domains/base_domain/servers/AdminServer/security/boot.properties && \
        echo ". /u01/oracle/user_projects/domains/base_domain/bin/setDomainEnv.sh" >> /u01/oracle/.bashrc && \
        echo "export PATH=$PATH:/u01/oracle/wlserver/common/bin:/u01/oracle/user_projects/domains/base_domain/bin" >> /u01/oracle/.bashrc && \
        cp /u01/oracle/commEnv.sh /u01/oracle/wlserver/common/bin/commEnv.sh && \
        rm /u01/oracle/create-wls-domain.py /u01/oracle/jaxrs2-template.jar

    # Expose Node Manager default port, and also default http/https ports for admin console
    EXPOSE $NM_PORT $ADMIN_PORT $MS_PORT

    WORKDIR $DOMAIN_HOME

    # Define default command to start bash.
    CMD ["startWebLogic.sh"]

Then build the image:

docker build -t 1213-domain:v2 --build-arg ADMIN_PASSWORD=welcome1 .

Creating the WebLogic pod as a client

    [root@k8s-master ~]# cat weblogic-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: weblogic
    spec:
      containers:
      - name: weblogic
        image: 1213-domain:v2
        ports:
        - containerPort: 7001

After startup, use the log to confirm that WebLogic really picked up our customized parameters:

    [root@k8s-master ~]# kubectl logs weblogic
    .
    .
    JAVA Memory arguments: -Djava.security.egd=file:/dev/./urandom
    .
    CLASSPATH=/u01/oracle/wlserver/../oracle_common/modules/javax.persistence_2.1.jar:/u01/oracle/wlserver/../wlserver/modules/com.oracle.weblogic.jpa21support_1.0.0.0_2-1.jar:/usr/java/jdk1.8.0_101/lib/tools.jar:/u01/oracle/wlserver/server/lib/weblogic_sp.jar:/u01/oracle/wlserver/server/lib/weblogic.jar:/u01/oracle/wlserver/../oracle_common/modules/net.sf.antcontrib_1.1.0.0_1-0b3/lib/ant-contrib.jar:/u01/oracle/wlserver/modules/features/oracle.wls.common.nodemanager_2.0.0.0.jar:/u01/oracle/wlserver/../oracle_common/modules/com.oracle.cie.config-wls-online_8.1.0.0.jar:/u01/oracle/wlserver/common/derby/lib/derbyclient.jar:/u01/oracle/wlserver/common/derby/lib/derby.jar:/u01/oracle/wlserver/server/lib/xqrl.jar:/u01/oracle/coherence.jar
    .
    PATH=/u01/oracle/wlserver/server/bin:/u01/oracle/wlserver/../oracle_common/modules/org.apache.ant_1.9.2/bin:/usr/java/jdk1.8.0_101/jre/bin:/usr/java/jdk1.8.0_101/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/java/default/bin:/u01/oracle/oracle_common/common/bin:/u01/oracle/oracle_common/common/bin:/u01/oracle/wlserver/common/bin:/u01/oracle/user_projects/domains/base_domain/bin:/u01/oracle
    .
    ***************************************************
    *  To start WebLogic Server, use a username and   *
    *  password assigned to an admin-level user.  For *
    *  server administration, use the WebLogic Server *
    *  console at http://hostname:port/console        *
    ***************************************************
    starting weblogic with Java version:
    java version "1.8.0_101"
    Java(TM) SE Runtime Environment (build 1.8.0_101-b13)
    Java HotSpot(TM) 64-Bit Server VM (build 25.101-b13, mixed mode)
    Starting WLS with line:
    /usr/java/jdk1.8.0_101/bin/java -server -Djava.security.egd=file:/dev/./urandom -Dweblogic.Name=AdminServer -Djava.security.policy=/u01/oracle/wlserver/server/lib/weblogic.policy -Dweblogic.ProductionModeEnabled=true -Dweblogic.security.SSL.ignoreHostnameVerification=true -Dtangosol.coherence.distributed.localstorage=false -Dtangosol.coherence.cacheconfig=/u01/oracle/proxy-client.xml -Djava.endorsed.dirs=/usr/java/jdk1.8.0_101/jre/lib/endorsed:/u01/oracle/wlserver/../oracle_common/modules/endorsed -da -Dwls.home=/u01/oracle/wlserver/server -Dweblogic.home=/u01/oracle/wlserver/server -Dweblogic.utils.cmm.lowertier.ServiceDisabled=true weblogic.Server
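To avoid scanning the whole startup log by eye, the Coherence-related flags can be filtered out. A minimal sketch, assuming the start line is printed to the pod log as shown above (`coherence_flags` is an illustrative name):

```shell
# Read log text on stdin and print each distinct
# -Dtangosol.coherence.* JVM flag on its own line, sorted.
coherence_flags() {
    tr ' ' '\n' | grep -- '^-Dtangosol\.coherence\.' | sort -u
}

# Example (not executed here):
#   kubectl logs weblogic | coherence_flags
```

For the pod in this article the filter should print the localstorage and cacheconfig flags that were set through JAVA_OPTIONS in the Dockerfile.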

Deploy a HelloWorld.war file. The core index.jsp code is:

    <!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
    <%@page import="java.util.*"%>
    <%@page import="com.tangosol.net.*"%>
    <%@ page contentType="text/html;charset=windows-1252"%>
    <html>
      <body>
        This is a Helloworld test</body>
      <h3>
        <%
          String mysession;
          NamedCache cache;
          cache = CacheFactory.getCache("demoCache");
          cache.put("eric","eric.nie@oracle.com");
        %>
        Get Eric Email:<%=cache.get("eric").toString()%>
      </h3>
    </html>

    [root@k8s-master ~]# kubectl get pods -o wide
    NAME                          READY   STATUS    RESTARTS   AGE   IP             NODE
    coherence-cacheserver-96kz7   1/1     Running   0          1h    192.168.33.4   k8s-node-1
    coherence-cacheserver-z67ht   1/1     Running   0          1h    192.168.33.3   k8s-node-1
    coherence-proxy-j7r0w         1/1     Running   0          1h    192.168.33.5   k8s-node-1
    coherence-proxy-tg8n8         1/1     Running   0          1h    192.168.33.6   k8s-node-1
    weblogic                      1/1     Running   0          1h    192.168.33.7   k8s-node-1

After deployment, access the application.

Then check the WebLogic log in the background:

    <Jun 16, 2017 10:32:42 AM GMT> <Notice> <WebLogicServer> <BEA-000329> <Started the WebLogic Server Administration Server "AdminServer" for domain "base_domain" running in production mode.>
    <Jun 16, 2017 10:32:42 AM GMT> <Notice> <WebLogicServer> <BEA-000360> <The server started in RUNNING mode.>
    <Jun 16, 2017 10:32:42 AM GMT> <Warning> <Server> <BEA-002611> <The hostname "localhost", maps to multiple IP addresses: 127.0.0.1, 0:0:0:0:0:0:0:1.>
    <Jun 16, 2017 10:32:42 AM GMT> <Notice> <WebLogicServer> <BEA-000365> <Server state changed to RUNNING.>
    2017-06-16 10:36:29.193/280.892 Oracle Coherence 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Loaded operational configuration from "jar:file:/u01/oracle/coherence/lib/coherence.jar!/tangosol-coherence.xml"
    2017-06-16 10:36:29.451/281.086 Oracle Coherence 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Loaded operational overrides from "jar:file:/u01/oracle/coherence/lib/coherence.jar!/tangosol-coherence-override-dev.xml"
    2017-06-16 10:36:29.479/281.114 Oracle Coherence 12.1.3.0.0 <D5> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Optional configuration override "/tangosol-coherence-override.xml" is not specified
    2017-06-16 10:36:29.511/281.146 Oracle Coherence 12.1.3.0.0 <D5> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Optional configuration override "cache-factory-config.xml" is not specified
    2017-06-16 10:36:29.525/281.159 Oracle Coherence 12.1.3.0.0 <D5> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Optional configuration override "cache-factory-builder-config.xml" is not specified
    2017-06-16 10:36:29.526/281.161 Oracle Coherence 12.1.3.0.0 <D5> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Optional configuration override "/custom-mbeans.xml" is not specified

    Oracle Coherence Version 12.1.3.0.0 Build 52031
     Grid Edition: Development mode
    Copyright (c) 2000, 2014, Oracle and/or its affiliates. All rights reserved.

    2017-06-16 10:36:29.672/281.306 Oracle Coherence GE 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Loaded cache configuration from "file:/u01/oracle/proxy-client.xml"; this document does not refer to any schema definition and has not been validated.
    2017-06-16 10:36:30.225/281.860 Oracle Coherence GE 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Created cache factory com.tangosol.net.ExtensibleConfigurableCacheFactory
    2017-06-16 10:36:30.507/282.142 Oracle Coherence GE 12.1.3.0.0 <D5> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Connecting Socket to 10.254.203.94:9099
    2017-06-16 10:36:30.534/282.169 Oracle Coherence GE 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Error connecting Socket to 10.254.203.94:9099: java.net.ConnectException: Connection refused
    2017-06-16 10:46:11.056/862.693 Oracle Coherence GE 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '3' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Restarting Service: ExtendTcpCacheService
    2017-06-16 10:46:11.156/862.791 Oracle Coherence GE 12.1.3.0.0 <D5> (thread=[ACTIVE] ExecuteThread: '3' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Connecting Socket to 10.254.22.102:9099
    2017-06-16 10:46:11.188/862.837 Oracle Coherence GE 12.1.3.0.0 <Info> (thread=[ACTIVE] ExecuteThread: '3' for queue: 'weblogic.kernel.Default (self-tuning)', member=n/a): Connected Socket to 10.254.22.102:9099
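The connection attempts are easy to miss in a log like this. The following sketch, which assumes the Coherence messages keep the wording shown above, lists only the Extend socket events (`connect_events` is a hypothetical helper):

```shell
# Read Coherence log lines on stdin and print one short line per
# socket event: "Connecting <addr>", "Connected <addr>" or "Failed <addr>".
connect_events() {
    sed -E -n \
        -e 's/.*(Connecting|Connected) Socket to ([0-9.]+:[0-9]+).*/\1 \2/p' \
        -e 's/.*Error connecting Socket to ([0-9.]+:[0-9]+).*/Failed \1/p'
}

# Example (not executed here):
#   kubectl logs weblogic | connect_events
```

Applied to the log above, this makes it visible that the refused attempt went to 10.254.203.94:9099 while the successful one went to 10.254.22.102:9099, the ClusterIP reported by kubectl get services.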

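Problems and troubleshooting

If the service name resolves but connections still do not reach the proxies, the NAT rules that kube-proxy generated can be inspected with the following command: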

iptables -L -v -n -t nat

This shows whether the routing is correct, mainly whether coherenceproxysvc is routed to the correct pods and port.

    [root@k8s-node-1 1213-domain]# iptables -L -v -n -t nat
    Chain PREROUTING (policy ACCEPT 5 packets, 309 bytes)
    pkts bytes target prot opt in out source destination
    30708 1898K KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */
    3 172 DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

    Chain INPUT (policy ACCEPT 0 packets, 0 bytes)
    pkts bytes target prot opt in out source destination

    Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)
    pkts bytes target prot opt in out source destination
    2057 126K KUBE-SERVICES all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service portals */
    0 0 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

    Chain POSTROUTING (policy ACCEPT 4 packets, 240 bytes)
    pkts bytes target prot opt in out source destination
    4100 283K MASQUERADE all -- * !docker0 192.168.33.0/24 0.0.0.0/0
    28494 1716K KUBE-POSTROUTING all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes postrouting rules */
    2 277 RETURN all -- * * 192.168.122.0/24 224.0.0.0/24
    0 0 RETURN all -- * * 192.168.122.0/24 255.255.255.255
    0 0 MASQUERADE tcp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535
    0 0 MASQUERADE udp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535
    0 0 MASQUERADE all -- * * 192.168.122.0/24 !192.168.122.0/24

    Chain DOCKER (2 references)
    pkts bytes target prot opt in out source destination
    0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

    Chain KUBE-MARK-DROP (0 references)
    pkts bytes target prot opt in out source destination
    0 0 MARK all -- * * 0.0.0.0/0 0.0.0.0/0 MARK or 0x8000

    Chain KUBE-MARK-MASQ (6 references)
    pkts bytes target prot opt in out source destination
    0 0 MARK all -- * * 0.0.0.0/0 0.0.0.0/0 MARK or 0x4000

    Chain KUBE-NODEPORTS (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/coherenceproxysvc: */ tcp dpt:30033
    0 0 KUBE-SVC-BQXHRGVXFCEH2BHH tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/coherenceproxysvc: */ tcp dpt:30033

    Chain KUBE-POSTROUTING (1 references)
    pkts bytes target prot opt in out source destination
    0 0 MASQUERADE all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service traffic requiring SNAT */ mark match 0x4000/0x4000

    Chain KUBE-SEP-67FRRWLKQK2OD4HZ (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- * * 192.168.33.2 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */
    0 0 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */ tcp to:192.168.33.2:53

    Chain KUBE-SEP-GIM2MHZZZBZJL55J (2 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- * * 192.168.0.105 0.0.0.0/0 /* default/kubernetes:https */
    0 0 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/kubernetes:https */ recent: SET name: KUBE-SEP-GIM2MHZZZBZJL55J side: source mask: 255.255.255.255 tcp to:192.168.0.105:443

    Chain KUBE-SEP-IM4M52WKVEC4AZF3 (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- * * 192.168.33.6 0.0.0.0/0 /* default/coherenceproxysvc: */
    0 0 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/coherenceproxysvc: */ tcp to:192.168.33.6:9099

    Chain KUBE-SEP-LUF3R3GRCSK6KKRS (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- * * 192.168.33.2 0.0.0.0/0 /* kube-system/kube-dns:dns */
    0 0 DNAT udp -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */ udp to:192.168.33.2:53

    Chain KUBE-SEP-ZZECWQBQCJPODCBC (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-MARK-MASQ all -- * * 192.168.33.5 0.0.0.0/0 /* default/coherenceproxysvc: */
    0 0 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* default/coherenceproxysvc: */ tcp to:192.168.33.5:9099

    Chain KUBE-SERVICES (2 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SVC-NPX46M4PTMTKRN6Y tcp -- * * 0.0.0.0/0 10.254.0.1 /* default/kubernetes:https cluster IP */ tcp dpt:443
    0 0 KUBE-SVC-TCOU7JCQXEZGVUNU udp -- * * 0.0.0.0/0 10.254.254.254 /* kube-system/kube-dns:dns cluster IP */ udp dpt:53
    0 0 KUBE-SVC-ERIFXISQEP7F7OF4 tcp -- * * 0.0.0.0/0 10.254.254.254 /* kube-system/kube-dns:dns-tcp cluster IP */ tcp dpt:53
    0 0 KUBE-SVC-BQXHRGVXFCEH2BHH tcp -- * * 0.0.0.0/0 10.254.22.102 /* default/coherenceproxysvc: cluster IP */ tcp dpt:9099
    0 0 KUBE-NODEPORTS all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service nodeports; NOTE: this must be the last rule in this chain */ ADDRTYPE match dst-type LOCAL

    Chain KUBE-SVC-BQXHRGVXFCEH2BHH (2 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SEP-ZZECWQBQCJPODCBC all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/coherenceproxysvc: */ statistic mode random probability 0.50000000000
    0 0 KUBE-SEP-IM4M52WKVEC4AZF3 all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/coherenceproxysvc: */

    Chain KUBE-SVC-ERIFXISQEP7F7OF4 (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SEP-67FRRWLKQK2OD4HZ all -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns-tcp */

    Chain KUBE-SVC-NPX46M4PTMTKRN6Y (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SEP-GIM2MHZZZBZJL55J all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/kubernetes:https */ recent: CHECK seconds: 10800 reap name: KUBE-SEP-GIM2MHZZZBZJL55J side: source mask: 255.255.255.255
    0 0 KUBE-SEP-GIM2MHZZZBZJL55J all -- * * 0.0.0.0/0 0.0.0.0/0 /* default/kubernetes:https */

    Chain KUBE-SVC-TCOU7JCQXEZGVUNU (1 references)
    pkts bytes target prot opt in out source destination
    0 0 KUBE-SEP-LUF3R3GRCSK6KKRS all -- * * 0.0.0.0/0 0.0.0.0/0 /* kube-system/kube-dns:dns */
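Since the full dump is long, it helps to filter it down to the backends of a single Service. A small sketch, assuming kube-proxy runs in iptables mode and labels its rules with `/* namespace/service: */` comments as in the output above (`svc_backends` is a hypothetical helper):

```shell
# Read `iptables -t nat -L -n` output on stdin and print the distinct
# DNAT targets (pod-ip:port) of one Service.
# $1 = namespace/service, e.g. default/coherenceproxysvc
svc_backends() {
    grep -F -- "/* $1:" \
        | grep 'DNAT' \
        | sed -n 's/.* to:\([0-9.]*:[0-9]*\).*/\1/p' \
        | sort -u
}

# Example (not executed here, needs root on a node):
#   iptables -t nat -L -n | svc_backends default/coherenceproxysvc
```

For the cluster in this article the result should be the two proxy pod addresses, 192.168.33.5:9099 and 192.168.33.6:9099. If the list is empty or shows stale IPs, the Service selector and the pod labels are the first things to compare.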

Possible optimizations:

How to run a Coherence cache cluster on Kubernetes


Original article: http://www.cnblogs.com/ericnie/p/7029083.html