
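1. About MySQL Group Replication

Group-based replication is a technique used in fault-tolerant systems. A replication group consists of multiple servers (nodes) that can communicate with one another.

At the communication layer, Group Replication implements a set of mechanisms such as atomic message delivery and total ordering of messages.

These atomic, abstract mechanisms provide strong support for building more advanced database replication schemes.

MySQL Group Replication builds on these techniques and concepts to implement a multi-master, update-everywhere replication protocol.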

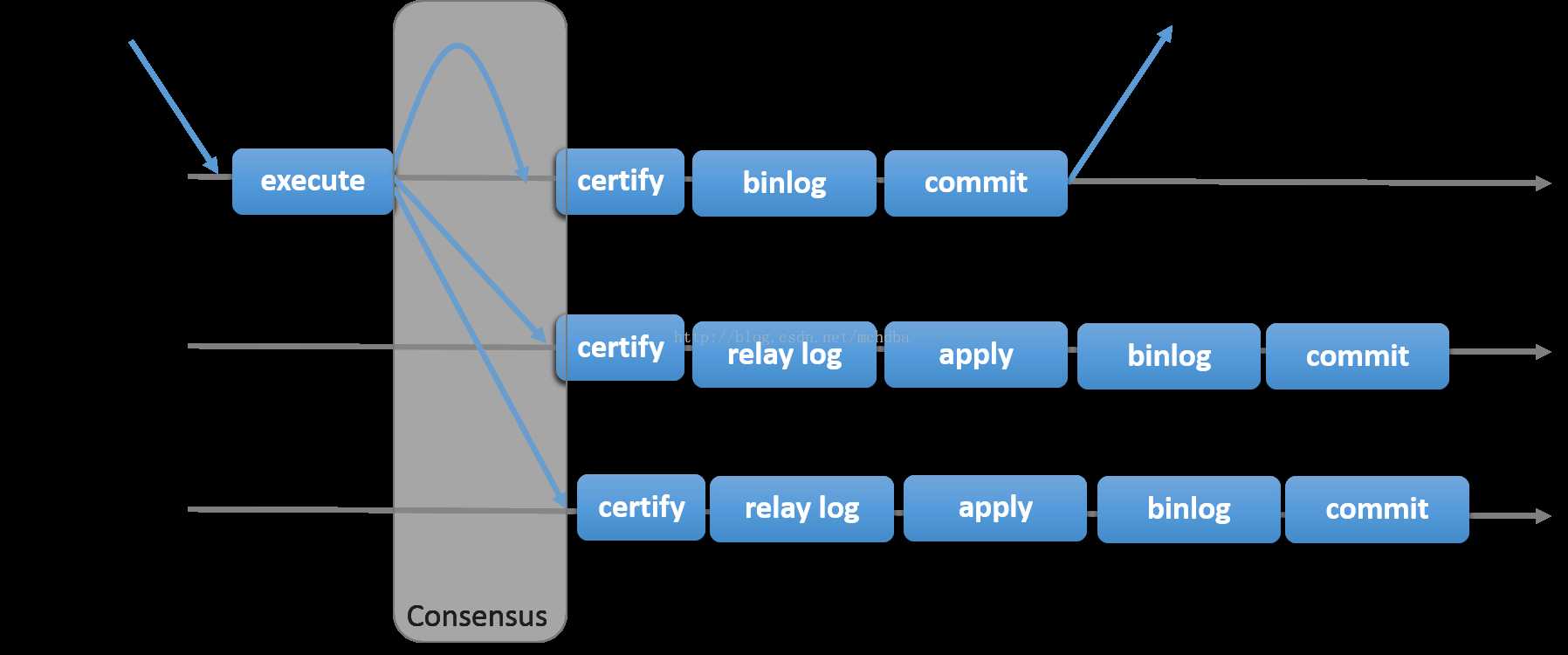

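In short, a replication group is a set of nodes, each of which can execute transactions independently, while read-write transactions commit only after they have been coordinated with the other nodes in the group.

So when a transaction is ready to commit, it is automatically broadcast atomically within the group, telling the other nodes what was changed and which transaction was executed.

This atomic broadcast places the transaction at the same position in the order on every node.

Every node therefore receives the same transaction log in the same order and replays it in the same order, so the whole group ends up in an identical state.

However, transactions executed on different nodes may contend for the same resources. This typically happens with two concurrent transactions.

If two concurrent transactions on different nodes update the same row, they conflict.

In that case Group Replication treats the transaction that committed first as the valid one and replays it across the whole group; the transaction that committed later is aborted or rolled back, and finally discarded.

This is therefore also a shared-nothing replication scheme: every node keeps a complete copy of the data. The figure 01.png describes the detailed workflow and allows a concise comparison with other schemes. To some extent, this scheme resembles the database state machine (DBSM) approach to replication.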
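The first-committer-wins rule described above can be illustrated with a toy certifier. This is only a sketch under simplifying assumptions, not MySQL's implementation: the `Txn` class, its `snapshot` field, and the row identifiers are hypothetical names introduced for illustration.

```python
# Illustrative sketch only: a toy certifier, not MySQL's implementation.
from dataclasses import dataclass


@dataclass(frozen=True)
class Txn:
    name: str
    snapshot: int          # hypothetical: group version the transaction executed against
    write_set: frozenset   # hypothetical: identifiers of the rows it modified


def certify(ordered_txns):
    """Process transactions in the agreed total order; return (committed, rolled_back)."""
    last_writer = {}  # row identifier -> version at which the row was last committed
    version = 0
    committed, rolled_back = [], []
    for txn in ordered_txns:
        # Conflict: a row in the write set was committed after this transaction's snapshot.
        conflict = any(last_writer.get(row, 0) > txn.snapshot for row in txn.write_set)
        if conflict:
            rolled_back.append(txn.name)
            continue
        version += 1
        for row in txn.write_set:
            last_writer[row] = version
        committed.append(txn.name)
    return committed, rolled_back


# T1 and T2 run concurrently on different nodes and update the same row.
t1 = Txn("T1@mgr1", snapshot=0, write_set=frozenset({"mgr1.t1:id=1"}))
t2 = Txn("T2@mgr2", snapshot=0, write_set=frozenset({"mgr1.t1:id=1"}))
t3 = Txn("T3@mgr3", snapshot=0, write_set=frozenset({"mgr1.t1:id=2"}))
committed, rolled_back = certify([t1, t2, t3])
print(committed, rolled_back)
```

Because every node feeds the same ordered stream into the same deterministic check, every node reaches the same commit or rollback decision without further coordination.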

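MGR limitations

Only InnoDB tables are supported, and every table must have a primary key, which is used for write-set conflict detection.

GTID must be enabled and the binary log format must be ROW; both are needed for primary election and write sets.

COMMIT may fail, similar to the failure scenarios of snapshot isolation.

An MGR cluster currently supports at most 9 nodes.

Foreign keys and savepoints are not supported, so group-wide constraint checks and partial rollbacks cannot be performed.

Binary log event checksums are not supported (binlog_checksum must be NONE).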
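The server-side requirements above can be checked mechanically before a node is added. The sketch below assumes the variable values have already been collected into a dictionary, for example from the output of `SHOW GLOBAL VARIABLES`. The `check_settings` function and the `REQUIRED` table are hypothetical helpers, and the expected values are the ones used in this article's my.cnf.

```python
# Expected values taken from the limitations above and the my.cnf in this article.
REQUIRED = {
    "gtid_mode": "ON",
    "enforce_gtid_consistency": "ON",
    "binlog_format": "ROW",
    "binlog_checksum": "NONE",
    "log_slave_updates": "ON",
    "master_info_repository": "TABLE",
    "relay_log_info_repository": "TABLE",
    "transaction_write_set_extraction": "XXHASH64",
}


def check_settings(variables):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    for name, expected in REQUIRED.items():
        # Compare case-insensitively, since "row" and "ROW" mean the same thing.
        actual = str(variables.get(name, "<unset>")).upper()
        if actual != expected:
            problems.append(f"{name} is {actual}, expected {expected}")
    return problems


sample = dict(REQUIRED, binlog_format="row", binlog_checksum="CRC32")
print(check_settings(sample))
```

In the sample, the lowercase `row` passes and only the checksum setting is reported.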

2. Install MySQL 5.7.18

Set up the /etc/hosts mappings on the three db servers as follows:

192.168.1.20 mgr1

192.168.1.21 mgr2

192.168.1.22 mgr3

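Database servers installed:

| Database server address | Port | Data directory | Server-id |
| --- | --- | --- | --- |
| 192.168.1.20 (mgr1) | 3306 | /usr/local/mysql/data | 20 |
| 192.168.1.21 (mgr2) | 3306 | /usr/local/mysql/data | 21 |
| 192.168.1.22 (mgr3) | 3306 | /usr/local/mysql/data | 22 |

The installation steps are omitted here.

Detailed my.cnf configuration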

[client]

port = 3306

socket = /usr/local/mysql/tmp/mysql.sock

[mysqld]

port = 3306

socket = /usr/local/mysql/tmp/mysql.sock

back_log = 80

basedir = /usr/local/mysql

tmpdir = /tmp

datadir = /usr/local/mysql/data

#-------------------global variables------------#

gtid_mode = ON

enforce_gtid_consistency = ON

master_info_repository = TABLE

relay_log_info_repository = TABLE

binlog_checksum = NONE

log_slave_updates = ON

log-bin = /usr/local/mysql/log/mysql-bin

transaction_write_set_extraction = XXHASH64

loose-group_replication_group_name = 'ce9be252-2b71-11e6-b8f4-00212844f856'

loose-group_replication_start_on_boot = off

loose-group_replication_local_address = '192.168.1.20:33061'

loose-group_replication_group_seeds = '192.168.1.20:33061,192.168.1.21:33061,192.168.1.22:33061'

loose-group_replication_bootstrap_group = off

max_connect_errors = 20000

max_connections = 2000

wait_timeout = 3600

interactive_timeout = 3600

net_read_timeout = 3600

net_write_timeout = 3600

table_open_cache = 1024

table_definition_cache = 1024

thread_cache_size = 512

open_files_limit = 10000

character-set-server = utf8

collation-server = utf8_bin

skip_external_locking

performance_schema = 1

user = mysql

myisam_recover_options = DEFAULT

skip-name-resolve

local_infile = 0

lower_case_table_names = 0

#--------------------innoDB------------#

innodb_buffer_pool_size = 2000M

innodb_data_file_path = ibdata1:200M:autoextend

innodb_flush_log_at_trx_commit = 1

innodb_io_capacity = 600

innodb_lock_wait_timeout = 120

innodb_log_buffer_size = 8M

innodb_log_file_size = 200M

innodb_log_files_in_group = 3

innodb_max_dirty_pages_pct = 85

innodb_read_io_threads = 8

innodb_write_io_threads = 8

innodb_support_xa = 1

innodb_thread_concurrency = 32

innodb_file_per_table

innodb_rollback_on_timeout

#------------session variables-------#

join_buffer_size = 8M

key_buffer_size = 256M

bulk_insert_buffer_size = 8M

max_heap_table_size = 96M

tmp_table_size = 96M

read_buffer_size = 8M

sort_buffer_size = 2M

max_allowed_packet = 64M

read_rnd_buffer_size = 32M

#------------MySQL Log----------------#

log-bin = my3306-bin

binlog_format = row

sync_binlog = 1

expire_logs_days = 15

max_binlog_cache_size = 128M

max_binlog_size = 500M

binlog_cache_size = 64k

slow_query_log

log-slow-admin-statements

log_warnings = 1

long_query_time = 0.25

#---------------replicate--------------#

relay-log-index = relay3306.index

relay-log = relay3306

server-id = 20

init_slave = 'set sql_mode=STRICT_ALL_TABLES'

log-slave-updates

[myisamchk]

key_buffer = 512M

sort_buffer_size = 512M

read_buffer = 8M

write_buffer = 8M

[mysqlhotcopy]

interactive-timeout

[mysqld_safe]

open-files-limit = 8192

log-error = /usr/local/mysql/log/mysqld_error.log

Note: in my.cnf, server_id and loose-group_replication_local_address must be set to each node's own values, while loose-group_replication_group_seeds lists the group addresses of all members.
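To avoid copy-and-paste mistakes in the per-node values, the lines that differ can be generated from the host list. This is a minimal sketch, assuming the three hosts and the port 33061 used in this article. The `node_settings` and `render` helpers are hypothetical, and deriving server-id from the last IP octet is simply the convention this article happens to follow.

```python
# Hosts and group communication port as used in this article.
NODES = {"mgr1": "192.168.1.20", "mgr2": "192.168.1.21", "mgr3": "192.168.1.22"}
GROUP_PORT = 33061


def node_settings(host):
    """Return the per-node settings plus the seed list shared by all nodes."""
    ip = NODES[host]
    seeds = ",".join(f"{addr}:{GROUP_PORT}" for addr in NODES.values())
    return {
        "server-id": ip.rsplit(".", 1)[1],  # last IP octet, as in this article
        "loose-group_replication_local_address": f'"{ip}:{GROUP_PORT}"',
        "loose-group_replication_group_seeds": f'"{seeds}"',
    }


def render(settings):
    """Format the settings as my.cnf lines."""
    return "\n".join(f"{key} = {value}" for key, value in settings.items())


print(render(node_settings("mgr3")))
```

Only the first two lines change from node to node; the seed list comes out identical on every host.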

3. Create the replication environment

Create the replication account on mgr1/mgr2/mgr3:

mysql> GRANT REPLICATION SLAVE ON *.* TO 'repl'@'192.168.%' IDENTIFIED BY 'repl';

4. Install the group replication plugin

Install the group replication plugin on mgr1, mgr2, and mgr3 in turn:

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so';

5. Configure group replication parameters

Make sure binlog_format is ROW.

mysql> show variables like 'binlog_format';

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| binlog_format | ROW   |

+---------------+-------+

1 row in set (0.00 sec)

Configuration file settings

(1) my.cnf settings on mgr1:

server-id=20

transaction_write_set_extraction = XXHASH64

loose-group_replication_group_name = "ce9be252-2b71-11e6-b8f4-00212844f856"

loose-group_replication_start_on_boot = off

loose-group_replication_local_address = "192.168.1.20:33061"

loose-group_replication_group_seeds = "192.168.1.20:33061,192.168.1.21:33061,192.168.1.22:33061"

loose-group_replication_bootstrap_group = off

loose-group_replication_single_primary_mode = true

loose-group_replication_enforce_update_everywhere_checks = false

(2) my.cnf settings on mgr2:

server-id=21

transaction_write_set_extraction = XXHASH64

loose-group_replication_group_name = "ce9be252-2b71-11e6-b8f4-00212844f856"

loose-group_replication_start_on_boot = off

loose-group_replication_local_address = "192.168.1.21:33061"

loose-group_replication_group_seeds = "192.168.1.20:33061,192.168.1.21:33061,192.168.1.22:33061"

loose-group_replication_bootstrap_group = off

loose-group_replication_single_primary_mode = true

loose-group_replication_enforce_update_everywhere_checks = false

(3) my.cnf settings on mgr3:

server-id=22

transaction_write_set_extraction = XXHASH64

loose-group_replication_group_name = "ce9be252-2b71-11e6-b8f4-00212844f856"

loose-group_replication_start_on_boot = off

loose-group_replication_local_address = "192.168.1.22:33061"

loose-group_replication_group_seeds = "192.168.1.20:33061,192.168.1.21:33061,192.168.1.22:33061"

loose-group_replication_bootstrap_group = off

loose-group_replication_single_primary_mode = true

loose-group_replication_enforce_update_everywhere_checks = false

After the configuration is done, restart the MySQL service on all three db servers.

6. Start the MGR cluster

Start building the group replication cluster. The usual commands are as follows.

Run on mgr1, mgr2, and mgr3 in turn:

mysql> CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='repl' FOR CHANNEL 'group_replication_recovery';

Query OK, 0 rows affected, 2 warnings (0.02 sec)

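On Db1 (mgr1), bootstrap the group so that this server becomes the primary (master):

# Setting group_replication_bootstrap_group to ON marks this server as the baseline for servers that join the cluster later; servers that join later do not need to set it.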

mysql> SET GLOBAL group_replication_bootstrap_group = ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (1.03 sec)

mysql> select * from performance_schema.replication_group_members;

Start group_replication on Db2:

Run the following on the mysql command line on Db2:

mysql> set global group_replication_allow_local_disjoint_gtids_join=ON;

mysql> start group_replication;

mysql> select * from performance_schema.replication_group_members;

Start group_replication on Db3:

-- Run on the Db3 command line:

mysql> set global group_replication_allow_local_disjoint_gtids_join=ON;

mysql> start group_replication;

-- Then go back to the master mgr1 and check the group_replication members; mgr3 is listed and already ONLINE

mysql> select * from performance_schema.replication_group_members;

Finally, check the cluster status; if every member is ONLINE, the cluster is OK:

mysql> select * from performance_schema.replication_group_members;
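The "all ONLINE" check can also be scripted once the rows of performance_schema.replication_group_members have been fetched by whatever client is in use. The sketch below assumes each row is available as a dictionary keyed by column name. The `group_health` helper and its `expected_members` parameter are hypothetical, and the sample rows reuse the member IDs shown later in section 8.2.

```python
def group_health(rows, expected_members=3):
    """Return (healthy, not_online_ids) for rows of replication_group_members."""
    not_online = [row["MEMBER_ID"] for row in rows if row["MEMBER_STATE"] != "ONLINE"]
    # Healthy means every expected member is present and none is in another state.
    healthy = len(rows) == expected_members and not not_online
    return healthy, not_online


rows = [
    {"MEMBER_ID": "3d872c2e-d670-11e6-ac1f-b8ca3af6e36c", "MEMBER_STATE": "ONLINE"},
    {"MEMBER_ID": "ef8ac2de-d671-11e6-9ba4-18a99b763071", "MEMBER_STATE": "RECOVERING"},
    {"MEMBER_ID": "fdf2b02e-d66f-11e6-98a8-18a99b76310d", "MEMBER_STATE": "ONLINE"},
]
print(group_health(rows))
```

A member that is missing from the table altogether is also reported as unhealthy, because the row count no longer matches `expected_members`.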

7. Verify cluster replication

Check which node is the primary:

mysql> select variable_value from performance_schema.global_status where variable_name="group_replication_primary_member";

On the master mgr1, create a test database mgr1 and a test table t1, then insert one row:

mysql> create database mgr1;

Query OK, 1 row affected (0.00 sec)

mysql> create table mgr1.t1(id int,cn varchar(32));

Query OK, 0 rows affected (0.02 sec)

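mysql> insert into mgr1.t1(id,cn) values(1,'a');

ERROR 3098 (HY000): The table does not comply with the requirements by an external plugin.

-- # The reason is that in a group_replication environment a table must have a primary key; otherwise inserts into it are not allowed. Altering table t1 to make the id column the primary key fixes this.

mysql> alter table mgr1.t1 add primary key(id);

mysql> insert into mgr1.t1(id,cn) values(1,'a');

On mgr2/mgr3 you can see that the data has been replicated: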

mysql> select * from mgr1.t1;

+----+------+

| id | cn   |

+----+------+

|  1 | a    |

+----+------+

1 row in set (0.00 sec)

An insert on mgr2/mgr3 is then rejected, because mgr2 and mgr3 are read-only:

mysql> insert into mgr1.t1 select 2,'b';

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

8. Troubleshooting notes

8.1 Issue 1

Error in the MySQL client:

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

Errors in the server error log:

[ERROR] Plugin group_replication reported: 'This member has more executed transactions than those present in the group. Local transactions: f16f7f74-c283-11e6-ae37-fa163ee40410:1 > Group transactions: 3c992270-c282-11e6-93bf-fa163ee40410:1,

aaaaaa:1-5'

[ERROR] Plugin group_replication reported: 'The member contains transactions not present in the group. The member will now exit the group.'

[Note] Plugin group_replication reported: 'To force this member into the group you can use the group_replication_allow_local_disjoint_gtids_join option'

[Solution]:

As the message suggests, enable the group_replication_allow_local_disjoint_gtids_join option. Run on the mysql command line:

mysql> set global group_replication_allow_local_disjoint_gtids_join=ON;

Then start group replication again:

mysql> start group_replication;

Query OK, 0 rows affected (7.89 sec)
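The error in 8.1 means that the joining member's executed GTID set is not a subset of the group's. The comparison can be sketched as follows, using the two GTID sets from the error log above. The `parse_gtid_set` and `extra_transactions` helpers are hypothetical and handle only the plain `source:first-last` notation; this is not how the plugin itself is implemented.

```python
def parse_gtid_set(text):
    """Parse 'uuid:1-5:7,uuid2:1' into {source: set of transaction numbers}."""
    result = {}
    for part in text.replace("\n", "").split(","):
        part = part.strip()
        if not part:
            continue
        source, *intervals = part.split(":")
        numbers = result.setdefault(source, set())
        for interval in intervals:
            first, _, last = interval.partition("-")
            # A single number such as '7' is treated as the interval 7-7.
            # Expanding ranges into sets is fine for a sketch, not for huge sets.
            numbers.update(range(int(first), int(last or first) + 1))
    return result


def extra_transactions(local, group):
    """Return the local transactions that are not present in the group."""
    local_set, group_set = parse_gtid_set(local), parse_gtid_set(group)
    extra = {}
    for source, numbers in local_set.items():
        missing = numbers - group_set.get(source, set())
        if missing:
            extra[source] = sorted(missing)
    return extra


local = "f16f7f74-c283-11e6-ae37-fa163ee40410:1"
group = "3c992270-c282-11e6-93bf-fa163ee40410:1,\naaaaaa:1-5"
print(extra_transactions(local, group))
```

A non-empty result corresponds to the "more executed transactions than those present in the group" condition; an empty result means the member could join without the override.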

8.2 Issue 2: members stuck in RECOVERING

Query the group members on mgr1:

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+----------------------+-------------+--------------+

| CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST          | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+----------------------+-------------+--------------+

| group_replication_applier | 3d872c2e-d670-11e6-ac1f-b8ca3af6e36c |                      |        3306 | ONLINE       |

| group_replication_applier | ef8ac2de-d671-11e6-9ba4-18a99b763071 |                      |        3306 | RECOVERING   |

| group_replication_applier | fdf2b02e-d66f-11e6-98a8-18a99b76310d |                      |        3306 | RECOVERING   |

+---------------------------+--------------------------------------+----------------------+-------------+--------------+

3 rows in set (0.00 sec)

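[Solution]:

The error log shows: [ERROR] Slave I/O for channel 'group_replication_recovery': error connecting to master 'repl@:3306' - retry-time: 60 retries: 1, Error_code: 2005. Error 2005 means the server host name is unknown, so the joining member cannot connect to the master, and that is the cause. The replication account was granted by IP as repl@'192.168.%', so a mapping between the hostname () and mgr1's IP address 192.168.1.20 is also required.

Create the hostname-to-IP mapping: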

vim /etc/hosts

192.168.1.20 mgr1

192.168.1.21 mgr2

192.168.1.22 mgr3

Then run the following commands on mgr2 to restart group_replication:

mysql> stop group_replication;

Query OK, 0 rows affected (0.02 sec)

mysql> start group_replication;

Query OK, 0 rows affected (5.68 sec)

Go back to the master mgr1 and check the group_replication members; mgr2 is now listed:

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+----------------------+-------------+--------------+

| CHANNEL_NAME              | MEMBER_ID                            | MEMBER_HOST          | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+----------------------+-------------+--------------+

| group_replication_applier | 3d872c2e-d670-11e6-ac1f-b8ca3af6e36c |                      |        3306 | ONLINE       |

| group_replication_applier | fdf2b02e-d66f-11e6-98a8-18a99b76310d |                      |        3306 | ONLINE       |

+---------------------------+--------------------------------------+----------------------+-------------+--------------+

2 rows in set (0.00 sec)

8.3 Issue 3

Operational issue:

mysql> START GROUP_REPLICATION;

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

[Solution]:

mysql> SET GLOBAL group_replication_bootstrap_group = ON;

mysql> START GROUP_REPLICATION;

9. Routine maintenance steps:

1. To take one secondary node out of the group, run on that node:

stop group_replication;

2. If all nodes have been shut down, the first node to come back acts as the primary and runs first:

set global group_replication_bootstrap_group=ON;

start group_replication;

The remaining nodes simply run:

start group_replication;

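3. If the primary fails, a new primary is elected automatically from the two secondaries; once the old primary is started again, running the following command makes it rejoin as a secondary: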

start group_replication;
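The restart rules in the maintenance steps above can be summarised as a small decision helper. This is a sketch only: `start_statements` and `restart_plan` are hypothetical names, and the final `SET GLOBAL ... = OFF` is an added precaution that is not part of the steps in this article (it keeps a later restart of group replication from bootstrapping a second group).

```python
def start_statements(group_already_running):
    """Return the SQL statements to run on one node, in order."""
    if group_already_running:
        # Joining an existing group, including a failed primary coming back.
        return ["START GROUP_REPLICATION;"]
    return [
        "SET GLOBAL group_replication_bootstrap_group = ON;",
        "START GROUP_REPLICATION;",
        # Assumption, not in the article: switch the flag off again afterwards.
        "SET GLOBAL group_replication_bootstrap_group = OFF;",
    ]


def restart_plan(nodes):
    """After a full shutdown, the first node bootstraps and the rest simply join."""
    return [
        (node, start_statements(group_already_running=(index > 0)))
        for index, node in enumerate(nodes)
    ]


for node, statements in restart_plan(["mgr1", "mgr2", "mgr3"]):
    print(node, statements)
```

Only the first node in the list ever issues the bootstrap statements; every other node, including a recovered former primary, gets the single `START GROUP_REPLICATION;`.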

[MGR: MySQL Group Replication in Single-Primary Mode] Detailed Setup and Deployment Procedure


Original article: http://www.cnblogs.com/manger/p/7212039.html