标签:停用 词云 tin tag turn panda htm urllib2 log

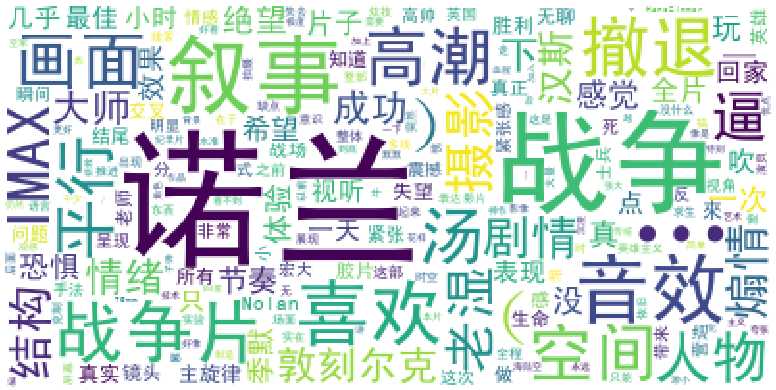

最近很想看的一个电影,去知乎上看一下评论,刚好在学Python爬虫,就做个小实例。

代码基于第三方修改 原文链接 http://python.jobbole.com/88325/#comment-94754

#coding:utf-8 from lib2to3.pgen2.grammar import line __author__ = ‘hang‘ import warnings warnings.filterwarnings("ignore") import jieba #分词包 import numpy #numpy计算包 import re import pandas as pd import matplotlib.pyplot as plt import urllib2 from bs4 import BeautifulSoup as bs import matplotlib matplotlib.rcParams[‘figure.figsize‘] = (10.0, 5.0) from wordcloud import WordCloud#词云包 #分析网页函数 def getNowPlayingMovie_list(): resp = urllib2.urlopen(‘https://movie.douban.com/nowplaying/hangzhou/‘) html_data = resp.read().decode(‘utf-8‘) soup = bs(html_data, ‘html.parser‘) nowplaying_movie = soup.find_all(‘div‘, id=‘nowplaying‘) nowplaying_movie_list = nowplaying_movie[0].find_all(‘li‘, class_=‘list-item‘) nowplaying_list = [] for item in nowplaying_movie_list: nowplaying_dict = {} nowplaying_dict[‘id‘] = item[‘data-subject‘] for tag_img_item in item.find_all(‘img‘): nowplaying_dict[‘name‘] = tag_img_item[‘alt‘] nowplaying_list.append(nowplaying_dict) return nowplaying_list #爬取评论函数 def getCommentsById(movieId, pageNum): eachCommentStr = ‘‘ if pageNum>0: start = (pageNum-1) * 20 else: return False requrl = ‘https://movie.douban.com/subject/‘ + movieId + ‘/comments‘ +‘?‘ +‘start=‘ + str(start) + ‘&limit=20‘ print(requrl) resp = urllib2.urlopen(requrl) html_data = resp.read() soup = bs(html_data, ‘html.parser‘) comment_div_lits = soup.find_all(‘div‘, class_=‘comment‘) for item in comment_div_lits: if item.find_all(‘p‘)[0].string is not None: eachCommentStr+=item.find_all(‘p‘)[0].string return eachCommentStr.strip() def main(): #循环获取第一个电影的前10页评论 commentStr = ‘‘ NowPlayingMovie_list = getNowPlayingMovie_list() for i in range(10): num = i + 1 commentList_temp = getCommentsById(NowPlayingMovie_list[0][‘id‘], num) commentStr+=commentList_temp.strip() #print comments cleaned_comments = re.sub("[\s+\.\!\/_,$%^*(+\"\‘)]+|[+——()?【】《》<>,“”!,...。?、~@#¥%……&*()]+", "",commentStr) print cleaned_comments #使用结巴分词进行中文分词 segment = jieba.lcut(cleaned_comments) words_df=pd.DataFrame({‘segment‘:segment}) #去掉停用词 stopwords=pd.read_csv("D:\pycode\stopwords.txt",index_col=False,quoting=3,sep="\t",names=[‘stopword‘], encoding=‘utf-8‘)#quoting=3全不引用 words_df=words_df[~words_df.segment.isin(stopwords.stopword)] print words_df #统计词频 words_stat=words_df.groupby(by=[‘segment‘])[‘segment‘].agg({"计数":numpy.size}) words_stat=words_stat.reset_index().sort_values(by=["计数"],ascending=False) #用词云进行显示 wordcloud=WordCloud(font_path="D:\pycode\simhei.ttf",background_color="white",max_font_size=80) word_frequence = {x[0]:x[1] for x in words_stat.head(1000).values} word_frequence_list = [] for key in word_frequence: temp = (key,word_frequence[key]) word_frequence_list.append(temp) wordcloud = wordcloud.fit_words(dict(word_frequence_list)) plt.imshow(wordcloud) plt.axis("off") plt.show() #主函数 main()

标签:停用 词云 tin tag turn panda htm urllib2 log

原文地址:http://www.cnblogs.com/cheman/p/7479872.html