Tags: paste conf encounter googl ted val manager hadoop byte

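As HBase versions are updated, some of the dependency jars have to be updated along with them. Today I ran into a problem caused by the order in which dependencies are declared. The source code is below. Running it directly on Windows fails, but packaged as a jar and run on the cluster it works. The error output is pasted below as well.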

```java
package com.iie.Hbase_demo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;
import java.sql.DriverManager;

import static org.apache.hadoop.hbase.client.ConnectionFactory.createConnection;

public class HbasePut {
    public static Configuration config = null;

    static {
        config = HBaseConfiguration.create();
        config.set("hbase.zookeeper.quorum", "10.199.33.12:2181");
        // "成功连接ZK" means "connected to ZK". Only the configuration is built here;
        // the actual ZooKeeper connection is made later, in createConnection().
        System.out.println("成功连接ZK");
    }

    /**
     * Create a table.
     *
     * @param tableName table name
     * @param family    column families
     */
    public static void createTable(String tableName, String[] family) {
        HTableDescriptor table = new HTableDescriptor(TableName.valueOf(tableName));
        try (Connection connection = ConnectionFactory.createConnection(config)) {
            try (Admin admin = connection.getAdmin()) {
                for (int i = 0; i < family.length; i++) {
                    table.addFamily(new HColumnDescriptor(family[i]));
                }
                if (admin.tableExists(TableName.valueOf(tableName))) {
                    System.out.println("Table Exists!!");
                    System.exit(0);
                } else {
                    admin.createTable(table);
                    System.out.println("Create Table Success!!! Table Name :[ " + tableName + " ]");
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // MySQL connection. The return type must be java.sql.Connection: because of the
    // wildcard import above, a bare "Connection" means the HBase client interface, and
    // casting the JDBC connection to it would throw ClassCastException at runtime.
    public static java.sql.Connection getConnection() throws Exception {
        String url = "jdbc:mysql://10.199.33.13:3306/test";
        String user = "root";
        String password = "111111";
        Class.forName("com.mysql.jdbc.Driver");
        return DriverManager.getConnection(url, user, password);
    }

    /**
     * Add data.
     *
     * @param rowKey    row key
     * @param tableName table name
     * @param column    column names
     * @param value     values
     */
    public static void addData(String rowKey, String tableName, String[] column, String[] value) {
        try (Connection connection = createConnection(config);
             Table table = connection.getTable(TableName.valueOf(tableName))) {
            Put put = new Put(Bytes.toBytes(rowKey)); // everything stored in HBase must be converted to a byte array
            HColumnDescriptor[] columnFamilies = table.getTableDescriptor().getColumnFamilies();
            for (int i = 0; i < columnFamilies.length; i++) {
                String familyName = columnFamilies[i].getNameAsString();
                if (familyName.equals("userinfo")) {
                    for (int j = 0; j < column.length; j++) {
                        put.addColumn(Bytes.toBytes(familyName), Bytes.toBytes(column[j]), Bytes.toBytes(value[j]));
                    }
                }
            }
            // Write the row once, after all columns have been added to the Put.
            table.put(put);
            System.out.println("Add Data Success!!!-");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) throws IOException {
        String[] family = {"userinfo"};
        HbasePut.createTable("zxf", family);
        String[] column = {"name", "age", "email", "phone"};
        String[] value = {"zengxuefeng", "24", "1564665679@qq.com", "18463101815"};
        HbasePut.addData("user", "zxf", column, value);
    }
}
```

```
成功连接ZK
java.io.IOException: java.lang.reflect.InvocationTargetException
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:240)
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:218)
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:119)
	at com.iie.Hbase_demo.HbasePut.createTable(HbasePut.java:35)
	at com.iie.Hbase_demo.HbasePut.main(HbasePut.java:94)
Caused by: java.lang.reflect.InvocationTargetException
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:422)
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:238)
	... 4 more
Caused by: java.lang.VerifyError: class org.apache.hadoop.hbase.protobuf.generated.ClientProtos$Result overrides final method getUnknownFields.()Lcom/google/protobuf/UnknownFieldSet;
	at java.lang.ClassLoader.defineClass1(Native Method)
	at java.lang.ClassLoader.defineClass(ClassLoader.java:760)
	at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
	at java.net.URLClassLoader.defineClass(URLClassLoader.java:467)
	at java.net.URLClassLoader.access$100(URLClassLoader.java:73)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:368)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:362)
	at java.security.AccessController.doPrivileged(Native Method)
	at java.net.URLClassLoader.findClass(URLClassLoader.java:361)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
	at org.apache.hadoop.hbase.protobuf.ProtobufUtil.<clinit>(ProtobufUtil.java:210)
	at org.apache.hadoop.hbase.ClusterId.parseFrom(ClusterId.java:64)
	at org.apache.hadoop.hbase.zookeeper.ZKClusterId.readClusterIdZNode(ZKClusterId.java:75)
	at org.apache.hadoop.hbase.client.ZooKeeperRegistry.getClusterId(ZooKeeperRegistry.java:105)
	at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.retrieveClusterId(ConnectionManager.java:919)
	at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.<init>(ConnectionManager.java:657)
	... 9 more
java.io.IOException: java.lang.reflect.InvocationTargetException
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:240)
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:218)
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:119)
	at com.iie.Hbase_demo.HbasePut.addData(HbasePut.java:73)
	at com.iie.Hbase_demo.HbasePut.main(HbasePut.java:97)
Caused by: java.lang.reflect.InvocationTargetException
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:422)
	at org.apache.hadoop.hbase.client.ConnectionFactory.createConnection(ConnectionFactory.java:238)
	... 4 more
Caused by: java.lang.NoClassDefFoundError: Could not initialize class org.apache.hadoop.hbase.protobuf.ProtobufUtil
	at org.apache.hadoop.hbase.ClusterId.parseFrom(ClusterId.java:64)
	at org.apache.hadoop.hbase.zookeeper.ZKClusterId.readClusterIdZNode(ZKClusterId.java:75)
	at org.apache.hadoop.hbase.client.ZooKeeperRegistry.getClusterId(ZooKeeperRegistry.java:105)
	at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.retrieveClusterId(ConnectionManager.java:919)
	at org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation.<init>(ConnectionManager.java:657)
	... 9 more

Process finished with exit code 0
```

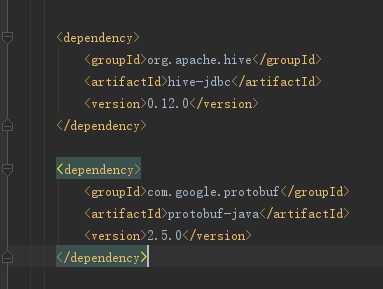

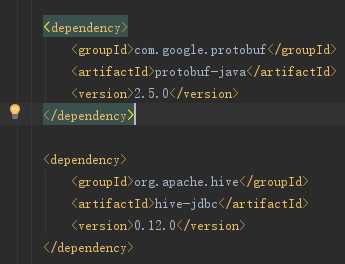

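The cause of this error is the order in which the protobuf jar is referenced: it came after hive, so the protobuf classes that hive brings in were the ones finally defined, and HBase could no longer use the version it needs. The `VerifyError` is the symptom: HBase's generated class `ClientProtos$Result` overrides `getUnknownFields()`, but in the protobuf version actually loaded at runtime that method is `final`. Declaration order matters because when two dependencies pull in different versions of the same artifact at the same depth, Maven keeps the one declared first, and the resulting classpath is searched in order, so the first jar containing a class wins. This error took me a long time to track down!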
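When a `VerifyError` like this shows up, it helps to confirm which jar actually supplies the protobuf classes. The sketch below is a hypothetical helper (the name `ClasspathProbe` is mine, not from the original post) that asks the class loader for every copy of `com/google/protobuf/GeneratedMessage.class` on the classpath. It assumes it is run with the same classpath the IDE builds from the pom; more than one entry in the output means two jars are competing, and with the usual parent-first class loader the first entry listed is normally the copy that gets loaded.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URL;
import java.util.Collections;
import java.util.List;

// Hypothetical diagnostic helper: lists every classpath entry that contains a given class file.
class ClasspathProbe {

    /** Returns the URLs of all copies of the class file, in the order the class loader reports them. */
    static List<URL> copiesOf(String className) {
        String resource = className.replace('.', '/') + ".class";
        try {
            return Collections.list(ClasspathProbe.class.getClassLoader().getResources(resource));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Default to protobuf's base class for generated messages; another class name can be passed as an argument.
        String className = args.length > 0 ? args[0] : "com.google.protobuf.GeneratedMessage";
        List<URL> copies = copiesOf(className);
        if (copies.isEmpty()) {
            System.out.println(className + " is not on the classpath");
            return;
        }
        for (URL url : copies) {
            System.out.println(url);
        }
        if (copies.size() > 1) {
            System.out.println("WARNING: " + copies.size() + " copies found; the first is normally the one loaded");
        }
    }
}
```

Running it from the IDE before and after changing the pom should show whether the jar listed first has changed.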

Swap the order of these two dependencies in the pom file, as shown below:

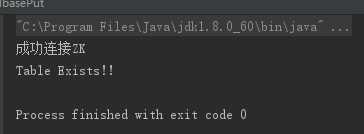
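After reordering the dependencies, a quick runtime check can confirm the fix before any HBase connection is attempted. The error message says HBase's generated `ClientProtos$Result` overrides a `getUnknownFields` method that is `final` in the protobuf version that was loaded, so the sketch below (hypothetical class name `FinalMethodCheck`) uses reflection to ask whether `com.google.protobuf.GeneratedMessage.getUnknownFields()` is final. It assumes `GeneratedMessage` is the superclass of HBase's generated messages, as in the HBase 1.x client used here: `true` suggests the incompatible protobuf is still winning, `false` suggests HBase's classes should load.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.Optional;

// Hypothetical startup check: is a public no-arg method declared final in the class version actually loaded?
class FinalMethodCheck {

    /** Empty if the class or method is missing; otherwise whether the resolved public method is final. */
    static Optional<Boolean> isFinal(String className, String methodName) {
        try {
            // initialize = false: load the class without running its static initializer
            Class<?> c = Class.forName(className, false, FinalMethodCheck.class.getClassLoader());
            Method m = c.getMethod(methodName); // public method, possibly inherited from a superclass
            return Optional.of(Modifier.isFinal(m.getModifiers()));
        } catch (ClassNotFoundException | NoSuchMethodException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        Optional<Boolean> result = isFinal("com.google.protobuf.GeneratedMessage", "getUnknownFields");
        if (!result.isPresent()) {
            System.out.println("GeneratedMessage.getUnknownFields() not found on the classpath");
        } else if (result.get()) {
            System.out.println("getUnknownFields() is final: HBase's generated classes will likely fail with VerifyError");
        } else {
            System.out.println("getUnknownFields() is not final: the protobuf version looks compatible");
        }
    }
}
```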

When I ran the program again, the problem was completely solved!!!

I hope this helps.


Original post: http://www.cnblogs.com/HITxuefeng/p/7517337.html