#include "stdafx.h"

#include <iostream>

#include <vector>

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/calib3d/calib3d.hpp>

using namespace std;

using namespace cv;

int _tmain(int argc, _TCHAR* argv[])

{

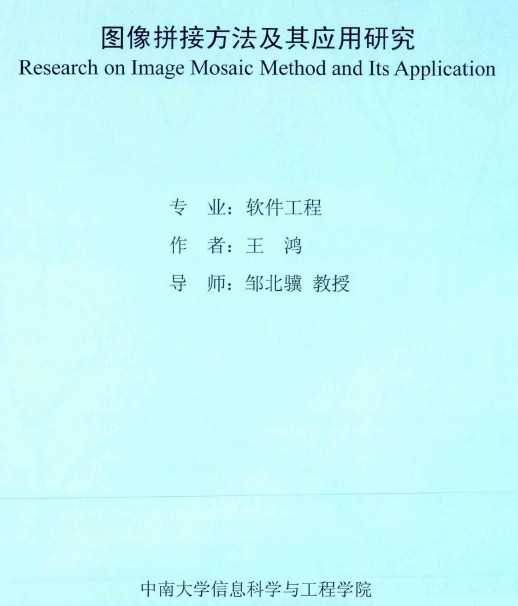

Mat src = imread("Lena.jpg");

src.convertTo(src,CV_32F,1.0/255);

imshow("src",src);

Mat src2= src.clone();

Mat dst;

Mat lastmat;

vector<Mat> vecMats;

Mat tmp;

for (int i=0;i<4;i++)

{

pyrDown(src2,src2);

pyrUp(src2,tmp);

resize(tmp,tmp,src.size());

tmp = src - tmp;

vecMats.push_back(tmp);

src = src2;

}

lastmat = src;

//重建

for (int i=3;i>=0;i--)

{

pyrUp(lastmat,lastmat);

resize(lastmat,lastmat,vecMats[i].size());

lastmat = lastmat + vecMats[i];

}

imshow("dst",lastmat);

waitKey();

return 0;

}

A diff tool also shows that the reconstructed image is identical to the original.
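Why the reconstruction comes out identical: each Laplacian level stores exactly the residual between a Gaussian level and the upsampled next level, so adding the same upsampled image back during reconstruction recovers every level (up to floating-point rounding), whatever smoothing filter is used. The sketch below checks this on a 1-D signal in standard C++. `reduce` and `expand` are hypothetical, simplified stand-ins for `pyrDown` and `pyrUp` plus `resize` (a [1 2 1]/4 filter and linear interpolation, not OpenCV's 5-tap Gaussian kernel); the other names are illustrative too.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Signal = std::vector<double>;

// Simplified stand-in for pyrDown: [1 2 1]/4 smoothing, keep every second sample.
Signal reduce(const Signal& s) {
    assert(!s.empty());
    Signal out((s.size() + 1) / 2);
    for (std::size_t i = 0; i < out.size(); ++i) {
        std::size_t c = 2 * i;
        double left = s[c == 0 ? 0 : c - 1];               // replicate the border
        double right = s[std::min(c + 1, s.size() - 1)];
        out[i] = 0.25 * left + 0.5 * s[c] + 0.25 * right;
    }
    return out;
}

// Simplified stand-in for pyrUp + resize: linear interpolation up to n samples (n <= 2 * size).
Signal expand(const Signal& s, std::size_t n) {
    assert(n <= 2 * s.size());
    Signal out(n);
    for (std::size_t i = 0; i < n; ++i) {
        std::size_t k = i / 2;
        bool between = (i % 2 == 1) && (k + 1 < s.size());
        out[i] = between ? 0.5 * (s[k] + s[k + 1]) : s[k];
    }
    return out;
}

struct LaplacianPyramid {
    std::vector<Signal> bands;  // residuals, finest first
    Signal top;                 // lowest-resolution Gaussian level
};

LaplacianPyramid buildLaplacian(Signal current, int levels) {
    LaplacianPyramid p;
    for (int l = 0; l < levels; ++l) {
        Signal down = reduce(current);
        Signal up = expand(down, current.size());
        Signal band(current.size());
        for (std::size_t i = 0; i < band.size(); ++i) band[i] = current[i] - up[i];
        p.bands.push_back(band);
        current = down;
    }
    p.top = current;
    return p;
}

// Expand from the top and add each residual back, coarsest to finest.
Signal collapse(const LaplacianPyramid& p) {
    Signal current = p.top;
    for (std::size_t l = p.bands.size(); l-- > 0;) {
        Signal up = expand(current, p.bands[l].size());
        for (std::size_t i = 0; i < up.size(); ++i) up[i] += p.bands[l][i];
        current = up;
    }
    return current;
}

double maxAbsDiff(const Signal& a, const Signal& b) {
    assert(a.size() == b.size());
    double m = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) m = std::max(m, std::fabs(a[i] - b[i]));
    return m;
}
```

For example, `maxAbsDiff(collapse(buildLaplacian(x, 4)), x)` stays at rounding level for any input `x`. Since exactness only depends on using the same `expand` in both directions, a different smoothing filter would give the same conclusion.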

2. Linear blending at every pyramid level

```cpp
#include <iostream>
#include <vector>
#include <opencv2/core/core.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/highgui/highgui.hpp>

using namespace std;
using namespace cv;

int main()
{
    Mat srcLeft = imread("apple.png");
    Mat srcRight = imread("orange.png");
    if (srcLeft.empty() || srcRight.empty() || srcLeft.size() != srcRight.size())
        return -1;
    srcLeft.convertTo(srcLeft, CV_32F, 1.0 / 255);
    srcRight.convertTo(srcRight, CV_32F, 1.0 / 255);
    imshow("srcRight", srcRight);
    imshow("srcLeft", srcLeft);

    Mat srcLeft2 = srcLeft.clone();
    Mat srcRight2 = srcRight.clone();
    Mat lastmatLeft, lastmatRight;
    vector<Mat> vecMatsLeft, vecMatsRight;
    Mat tmp;

    // Laplacian pyramid of the left image (4 levels)
    for (int i = 0; i < 4; i++)
    {
        pyrDown(srcLeft2, srcLeft2);
        pyrUp(srcLeft2, tmp);
        resize(tmp, tmp, srcLeft.size());
        Mat lap = srcLeft - tmp;
        vecMatsLeft.push_back(lap);
        srcLeft = srcLeft2;
    }
    lastmatLeft = srcLeft;

    // Laplacian pyramid of the right image
    for (int i = 0; i < 4; i++)
    {
        pyrDown(srcRight2, srcRight2);
        pyrUp(srcRight2, tmp);
        resize(tmp, tmp, srcRight.size());
        Mat lap = srcRight - tmp;
        vecMatsRight.push_back(lap);
        srcRight = srcRight2;
    }
    lastmatRight = srcRight;

    // Every level has to be aligned and blended.
    // -100 is the linear-blend trick: the right image overlaps the last 100 columns of the left one
    int ioffset = vecMatsLeft[0].cols - 100;
    vector<Mat> vecMatResult;   // blended levels
    Mat roi;
    for (int i = 0; i < 4; i++)
    {
        // Align: right level at ioffset, then the left level at 0 (it overwrites the overlap)
        Mat tmpResult = Mat::zeros(vecMatsLeft[i].rows, vecMatsLeft[i].cols * 2, vecMatsLeft[i].type());
        roi = tmpResult(Rect(ioffset, 0, vecMatsRight[i].cols, vecMatsRight[i].rows));
        vecMatsRight[i].copyTo(roi);
        roi = tmpResult(Rect(0, 0, vecMatsLeft[i].cols, vecMatsLeft[i].rows));
        vecMatsLeft[i].copyTo(roi);
        // Blend: the weight of the right image ramps from 0 to 1 across the whole overlap
        int iwidth = vecMatsLeft[i].cols - ioffset;
        for (int j = 0; j < iwidth; j++)
        {
            double dblend = (j + 1.0) / (iwidth + 1.0);
            // Assigning a matrix expression to a column header writes into tmpResult in place
            tmpResult.col(ioffset + j) = tmpResult.col(ioffset + j) * (1 - dblend) + vecMatsRight[i].col(j) * dblend;
        }
        vecMatResult.push_back(tmpResult);
        ioffset = ioffset / 2;   // the offset halves at the next (coarser) level
    }

    // Top level: same alignment (right first, so the left image wins in the overlap) and blending
    Mat latmatresult = Mat::zeros(lastmatLeft.rows, lastmatLeft.cols * 2, lastmatLeft.type());
    roi = latmatresult(Rect(ioffset, 0, lastmatRight.cols, lastmatRight.rows));
    lastmatRight.copyTo(roi);
    roi = latmatresult(Rect(0, 0, lastmatLeft.cols, lastmatLeft.rows));
    lastmatLeft.copyTo(roi);
    int iwidth = lastmatLeft.cols - ioffset;
    for (int j = 0; j < iwidth; j++)
    {
        double dblend = (j + 1.0) / (iwidth + 1.0);
        latmatresult.col(ioffset + j) = latmatresult.col(ioffset + j) * (1 - dblend) + lastmatRight.col(j) * dblend;
    }

    // Reconstruction of the left image, the right image and the blended result
    for (int i = 3; i >= 0; i--)
    {
        pyrUp(lastmatLeft, lastmatLeft);
        resize(lastmatLeft, lastmatLeft, vecMatsLeft[i].size());
        lastmatLeft = lastmatLeft + vecMatsLeft[i];
    }
    for (int i = 3; i >= 0; i--)
    {
        pyrUp(lastmatRight, lastmatRight);
        resize(lastmatRight, lastmatRight, vecMatsRight[i].size());
        lastmatRight = lastmatRight + vecMatsRight[i];
    }
    for (int i = 3; i >= 0; i--)
    {
        pyrUp(latmatresult, latmatresult);
        resize(latmatresult, latmatresult, vecMatResult[i].size());
        latmatresult = latmatresult + vecMatResult[i];
    }

    imshow("lastmatLeft", lastmatLeft);
    lastmatLeft.convertTo(lastmatLeft, CV_8U, 255);
    imwrite("lastmatleft.png", lastmatLeft);
    imshow("lastmatRight", lastmatRight);
    imshow("multibandblend", latmatresult);
    waitKey();
    return 0;
}
```

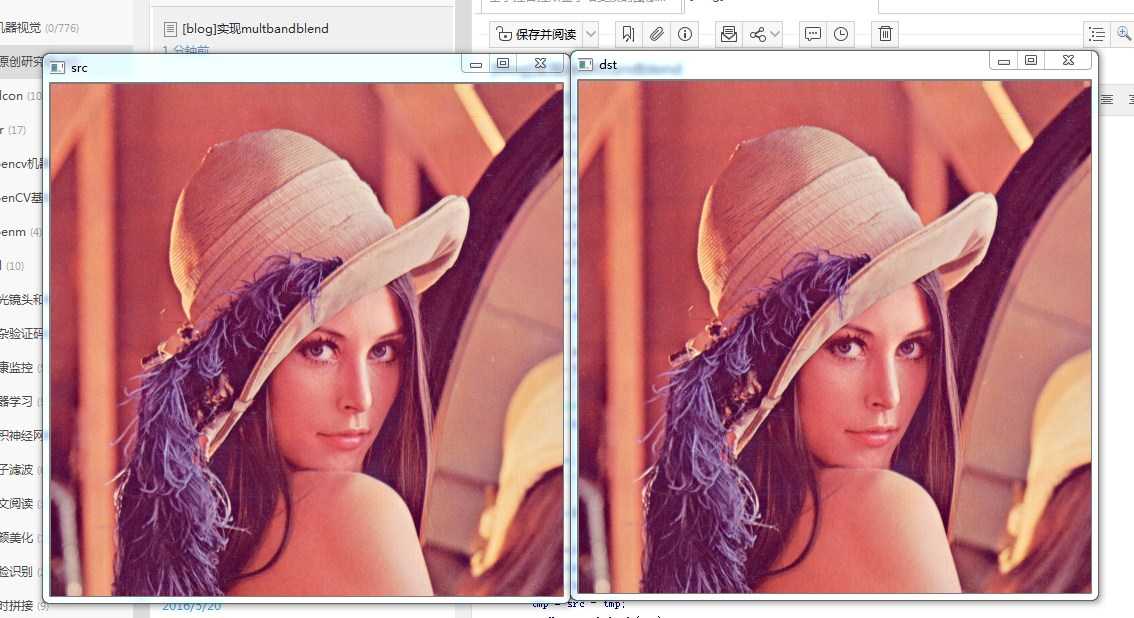

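Looking at the details, the transition is not jarring at all: even the small white specks on the apple carry over across the seam. This result should be very close to what the paper calls for.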

3. Modifying the existing image-stitching program to use this blending

//使用Multiblend进行图像融合

#include "stdafx.h"

#include <iostream>

#include <vector>

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include "matcher.h"

using namespace cv;

int main()

{

// Read input images 这里的命名最好为imageleft和imageright

cv::Mat image1= cv::imread("Univ4.jpg",1);

cv::Mat image2= cv::imread("Univ5.jpg",1);

if (!image1.data || !image2.data)

return 0;

// Prepare the matcher

RobustMatcher rmatcher;

rmatcher.setConfidenceLevel(0.98);

rmatcher.setMinDistanceToEpipolar(1.0);

rmatcher.setRatio(0.65f);

cv::Ptr<cv::FeatureDetector> pfd= new cv::SurfFeatureDetector(10);

rmatcher.setFeatureDetector(pfd);

// Match the two images

std::vector<cv::DMatch> matches;

std::vector<cv::KeyPoint> keypoints1, keypoints2;

cv::Mat fundemental= rmatcher.match(image1,image2,matches, keypoints1, keypoints2);

// draw the matches

cv::Mat imageMatches;

cv::drawMatches(image1,keypoints1, // 1st image and its keypoints

image2,keypoints2, // 2nd image and its keypoints

matches, // the matches

imageMatches, // the image produced

cv::Scalar(255,255,255)); // color of the lines

// Convert keypoints into Point2f

std::vector<cv::Point2f> points1, points2;

for (std::vector<cv::DMatch>::const_iterator it= matches.begin();

it!= matches.end(); ++it) {

// Get the position of left keypoints

float x= keypoints1[it->queryIdx].pt.x;

float y= keypoints1[it->queryIdx].pt.y;

points1.push_back(cv::Point2f(x,y));

// Get the position of right keypoints

x= keypoints2[it->trainIdx].pt.x;

y= keypoints2[it->trainIdx].pt.y;

points2.push_back(cv::Point2f(x,y));

}

std::cout << points1.size() << " " << points2.size() << std::endl;

// Find the homography between image 1 and image 2

std::vector<uchar> inliers(points1.size(),0);

cv::Mat homography= cv::findHomography(

cv::Mat(points1),cv::Mat(points2), // corresponding points

inliers, // outputed inliers matches

CV_RANSAC, // RANSAC method

1.); // max distance to reprojection point

// Warp image 1 to image 2

cv::Mat result;

cv::warpPerspective(image1, // input image

result, // output image

homography, // homography

cv::Size(2*image1.cols,image1.rows)); // size of output image

cv::Mat resultback;

result.copyTo(resultback);

// Copy image 1 on the first half of full image

cv::Mat half(result,cv::Rect(0,0,image2.cols,image2.rows));

image2.copyTo(half);

// Display the warp image

cv::namedWindow("After warping");

cv::imshow("After warping",result);

//需要注意的一点是,原始文件的图片是按照从右至左边进行移动的。

//进行Multiblend的融合,融合的输入图像为image2(左)和resultback(右)

Mat srcLeft = image2.clone();

Mat srcRight= resultback.clone();

srcLeft.convertTo(srcLeft,CV_32F,1.0/255);

srcRight.convertTo(srcRight,CV_32F,1.0/255);

Mat rawLeft = srcLeft.clone();

Mat rawRight=srcRight.clone();

Mat srcLeft2= srcLeft.clone();

Mat srcRight2=srcRight.clone();

Mat lastmatLeft; Mat lastmatRight;

vector<Mat> vecMatsLeft; vector<Mat> vecMatsRight;

Mat tmp;

for (int i=0;i<4;i++)

{

pyrDown(srcLeft2,srcLeft2);

pyrUp(srcLeft2,tmp);

resize(tmp,tmp,srcLeft.size());

tmp = srcLeft - tmp;

vecMatsLeft.push_back(tmp);

srcLeft = srcLeft2;

}

lastmatLeft = srcLeft;

for (int i=0;i<4;i++)

{

pyrDown(srcRight2,srcRight2);

pyrUp(srcRight2,tmp);

resize(tmp,tmp,srcRight.size());

tmp = srcRight - tmp;

vecMatsRight.push_back(tmp);

srcRight = srcRight2;

}

lastmatRight = srcRight;

//每一层都要对准并融合

int ioffset =vecMatsLeft[0].cols - 100;//这里-100 的操作是linearblend的小技巧

int istep = 1;

double dblend = 0.0;

vector<Mat> vecMatResult;//保存结果

Mat tmpResult;

Mat roi;

for (int i=0;i<4;i++)

{

//对准

tmpResult = Mat::zeros(vecMatsLeft[i].rows,vecMatsLeft[i].cols*2,vecMatsLeft[i].type());

roi = tmpResult(Rect(0,0,vecMatsRight[i].cols,vecMatsRight[i].rows));

vecMatsRight[i].copyTo(roi);

roi = tmpResult(Rect(0,0,vecMatsLeft[i].cols,vecMatsLeft[i].rows));

vecMatsLeft[i].copyTo(roi);

//融合

for (int j = 0;j<(128/istep);j++)

{

tmpResult.col(ioffset + j)= tmpResult.col(ioffset+j)*(1-dblend) + vecMatsRight[i].col(ioffset+j)*dblend;

dblend = dblend +0.0078125*istep;

}

//结尾

dblend = 0.0;

ioffset = ioffset/2;

istep = istep*2;

vecMatResult.push_back(tmpResult);

}

Mat latmatresult = Mat::zeros(lastmatLeft.rows,lastmatLeft.cols*2,lastmatLeft.type());

roi = latmatresult(Rect(0,0,lastmatRight.cols,lastmatRight.rows));

lastmatRight.copyTo(roi);

roi = latmatresult(Rect(0,0,lastmatLeft.cols,lastmatLeft.rows));

lastmatLeft.copyTo(roi);

for (int j=0;j<(128/istep);j++)

{

latmatresult.col(ioffset+j)= latmatresult.col(ioffset+j)*(1-dblend) + lastmatRight.col(ioffset+j)*dblend;

dblend = dblend +0.00725*istep;

}

//重构

for (int i=3;i>=0;i--)

{

pyrUp(lastmatLeft,lastmatLeft);

resize(lastmatLeft,lastmatLeft,vecMatsLeft[i].size());

lastmatLeft = lastmatLeft + vecMatsLeft[i];

}

for (int i=3;i>=0;i--)

{

pyrUp(lastmatRight,lastmatRight);

resize(lastmatRight,lastmatRight,vecMatsRight[i].size());

lastmatRight = lastmatRight + vecMatsRight[i];

}

for (int i=3;i>=0;i--)

{

pyrUp(latmatresult,latmatresult);

resize(latmatresult,latmatresult,vecMatResult[i].size());

latmatresult = latmatresult + vecMatResult[i];

}

cv::waitKey();

return 0;

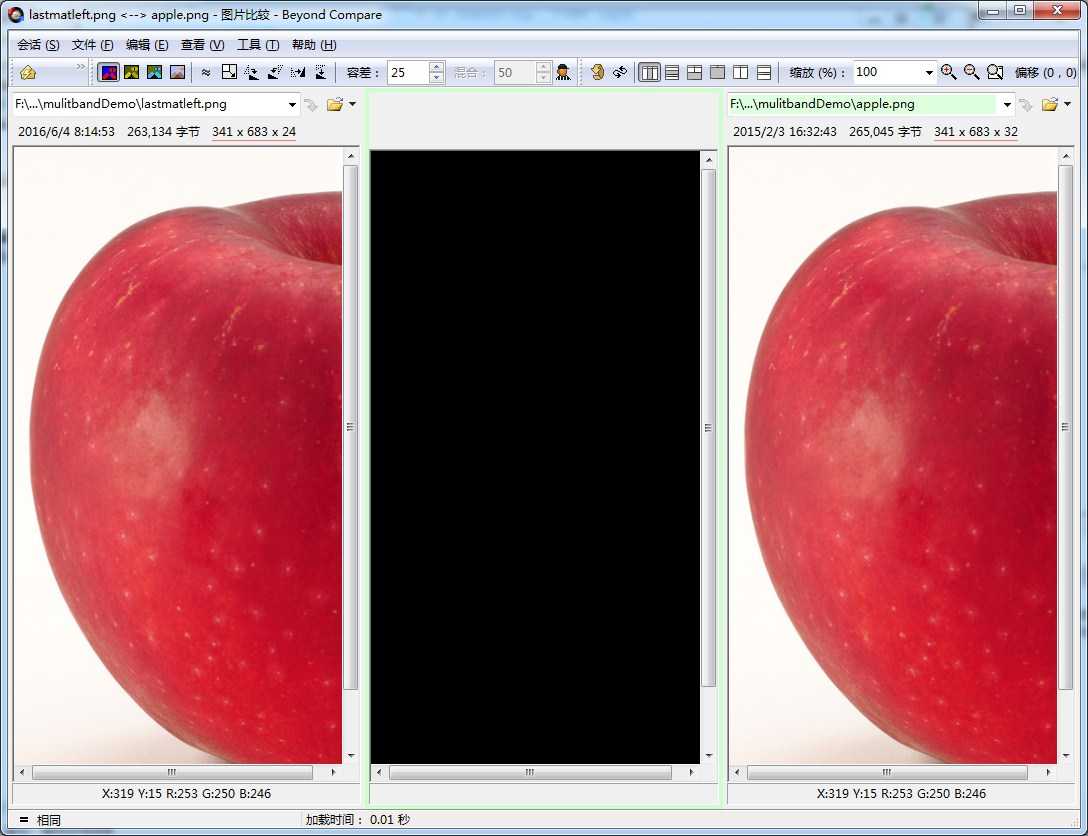

}可以看到纹理过渡不违和,但是光照变化剧烈

4. Adapting existing code

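The existing implementation mentioned earlier is quite well written. It follows Burt and Adelson's paper "A Multiresolution Spline with Application to Image Mosaics" exactly; to use it for real image stitching, I made a few modifications.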
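The principle, as the `LaplacianBlending` class below implements it in `blendLapPyrs`: build Laplacian pyramids $LA$ and $LB$ of the two images and a Gaussian pyramid $GR$ of the region mask (1 where image A should be used), combine each level as $LS_l = GR_l \, LA_l + (1 - GR_l)\, LB_l$, and collapse $LS$ into the mosaic. Because the mask is smoothed along with the pyramid, its transition covers more full-resolution pixels at coarser levels, so low frequencies such as illumination are blended over a wide zone and fine detail over a narrow one. For comparison, the per-level ramp in section 2 is about $100/2^l$ pixels wide at level $l$, i.e. roughly the same 100 full-resolution pixels at every level. The 1-D sketch below shows the formula in standard C++; `reduce` and `expand` are simplified stand-ins for `pyrDown`/`pyrUp` (assumed filters, not OpenCV's), and `multiBandBlend` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Signal = std::vector<double>;

// Simplified stand-in for pyrDown: [1 2 1]/4 smoothing, keep every second sample.
Signal reduce(const Signal& s) {
    assert(!s.empty());
    Signal out((s.size() + 1) / 2);
    for (std::size_t i = 0; i < out.size(); ++i) {
        std::size_t c = 2 * i;
        double left = s[c == 0 ? 0 : c - 1];               // replicate the border
        double right = s[std::min(c + 1, s.size() - 1)];
        out[i] = 0.25 * left + 0.5 * s[c] + 0.25 * right;
    }
    return out;
}

// Simplified stand-in for pyrUp + resize: linear interpolation up to n samples (n <= 2 * size).
Signal expand(const Signal& s, std::size_t n) {
    assert(n <= 2 * s.size());
    Signal out(n);
    for (std::size_t i = 0; i < n; ++i) {
        std::size_t k = i / 2;
        bool between = (i % 2 == 1) && (k + 1 < s.size());
        out[i] = between ? 0.5 * (s[k] + s[k + 1]) : s[k];
    }
    return out;
}

// LS_l = GR_l * LA_l + (1 - GR_l) * LB_l at every level, then collapse LS.
// `mask` is 1 where `a` should be used and 0 where `b` should be used.
Signal multiBandBlend(const Signal& a, const Signal& b, const Signal& mask, int levels) {
    assert(a.size() == b.size() && a.size() == mask.size());
    Signal curA = a, curB = b, curM = mask;   // Gaussian levels of a, b and the mask (GR)
    std::vector<Signal> ls;                   // blended Laplacian levels, finest first
    for (int l = 0; l < levels; ++l) {
        Signal downA = reduce(curA), downB = reduce(curB);
        Signal upA = expand(downA, curA.size()), upB = expand(downB, curB.size());
        Signal level(curA.size());
        for (std::size_t i = 0; i < level.size(); ++i) {
            double la = curA[i] - upA[i];   // LA_l
            double lb = curB[i] - upB[i];   // LB_l
            level[i] = curM[i] * la + (1.0 - curM[i]) * lb;
        }
        ls.push_back(level);
        curA = downA;
        curB = downB;
        curM = reduce(curM);   // GR_{l+1}: the mask is smoothed along with the images
    }
    // Coarsest level: blend the low-pass images with the coarsest mask.
    Signal result(curA.size());
    for (std::size_t i = 0; i < result.size(); ++i)
        result[i] = curM[i] * curA[i] + (1.0 - curM[i]) * curB[i];
    // Collapse: expand and add each blended level, coarsest to finest.
    for (std::size_t l = ls.size(); l-- > 0;) {
        Signal up = expand(result, ls[l].size());
        for (std::size_t i = 0; i < up.size(); ++i) up[i] += ls[l][i];
        result = up;
    }
    return result;
}
```

Blending a constant 1 signal with a constant 0 signal under a hard step mask gives a smooth ramp spread over many samples rather than a step, which is the wide low-frequency transition described above; blending a signal with itself returns it unchanged.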

//使用Multiblend进行图像融合

//代码重构

#include "stdafx.h"

#include <iostream>

#include <vector>

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include "matcher.h"

using namespace cv;

class LaplacianBlending {

private:

Mat_<Vec3f> top;

Mat_<Vec3f> down;

Mat_<float> blendMask;

vector<Mat_<Vec3f> > topLapPyr,downLapPyr,resultLapPyr;//Laplacian Pyramids

Mat topHighestLevel, downHighestLevel, resultHighestLevel;

vector<Mat_<Vec3f> > maskGaussianPyramid; //masks are 3-channels for easier multiplication with RGB

int levels;

//创建金字塔

void buildPyramids() {

//参数的解释 top就是top ,topLapPyr就是top的laplacian的pyr,而topHighestLevel保存的是最高端的高斯金字塔

buildLaplacianPyramid(top,topLapPyr,topHighestLevel);

buildLaplacianPyramid(down,downLapPyr,downHighestLevel);

buildGaussianPyramid();

}

//创建gauss金字塔

void buildGaussianPyramid() {//金字塔内容为每一层的掩模

assert(topLapPyr.size()>0);

maskGaussianPyramid.clear();

Mat currentImg;

//blendMask就是结果所在

cvtColor(blendMask, currentImg, CV_GRAY2BGR);//store color img of blend mask into maskGaussianPyramid

maskGaussianPyramid.push_back(currentImg); //0-level

currentImg = blendMask;

for (int l=1; l<levels+1; l++) {

Mat _down;

if (topLapPyr.size() > l)

pyrDown(currentImg, _down, topLapPyr[l].size());

else

pyrDown(currentImg, _down, topHighestLevel.size()); //lowest level

Mat down;

cvtColor(_down, down, CV_GRAY2BGR);

maskGaussianPyramid.push_back(down);//add color blend mask into mask Pyramid

currentImg = _down;

}

}

//创建laplacian金字塔

void buildLaplacianPyramid(const Mat& img, vector<Mat_<Vec3f> >& lapPyr, Mat& HighestLevel) {

lapPyr.clear();

Mat currentImg = img;

for (int l=0; l<levels; l++) {

Mat down,up;

pyrDown(currentImg, down);

pyrUp(down, up,currentImg.size());

Mat lap = currentImg - up; //存储的就是残差

lapPyr.push_back(lap);

currentImg = down;

}

currentImg.copyTo(HighestLevel);

}

Mat_<Vec3f> reconstructImgFromLapPyramid() {

//将左右laplacian图像拼成的resultLapPyr金字塔中每一层

//从上到下插值放大并相加,即得blend图像结果

Mat currentImg = resultHighestLevel;

for (int l=levels-1; l>=0; l--) {

Mat up;

pyrUp(currentImg, up, resultLapPyr[l].size());

currentImg = up + resultLapPyr[l];

}

return currentImg;

}

void blendLapPyrs() {

//获得每层金字塔中直接用左右两图Laplacian变换拼成的图像resultLapPyr

resultHighestLevel = topHighestLevel.mul(maskGaussianPyramid.back()) +

downHighestLevel.mul(Scalar(1.0,1.0,1.0) - maskGaussianPyramid.back());

for (int l=0; l<levels; l++) {

Mat A = topLapPyr[l].mul(maskGaussianPyramid[l]);

Mat antiMask = Scalar(1.0,1.0,1.0) - maskGaussianPyramid[l];

Mat B = downLapPyr[l].mul(antiMask);

Mat_<Vec3f> blendedLevel = A + B;

resultLapPyr.push_back(blendedLevel);

}

}

public:

LaplacianBlending(const Mat_<Vec3f>& _top, const Mat_<Vec3f>& _down, const Mat_<float>& _blendMask, int _levels)://缺省数据,使用 LaplacianBlending lb(l,r,m,4);

top(_top),down(_down),blendMask(_blendMask),levels(_levels)

{

assert(_top.size() == _down.size());

assert(_top.size() == _blendMask.size());

buildPyramids(); //创建laplacian金字塔和gauss金字塔

blendLapPyrs(); //将左右金字塔融合成为一个图片

};

Mat_<Vec3f> blend() {

return reconstructImgFromLapPyramid();//reconstruct Image from Laplacian Pyramid

}

};

Mat_<Vec3f> LaplacianBlend(const Mat_<Vec3f>& t, const Mat_<Vec3f>& d, const Mat_<float>& m) {

LaplacianBlending lb(t,d,m,4);

return lb.blend();

}

int main()

{

// Read input images 这里的命名最好为imageleft和imageright

cv::Mat image1= cv::imread("Univ3.jpg",1);

cv::Mat image2= cv::imread("Univ4.jpg",1);

if (!image1.data || !image2.data)

return 0;

// Prepare the matcher

RobustMatcher rmatcher;

rmatcher.setConfidenceLevel(0.98);

rmatcher.setMinDistanceToEpipolar(1.0);

rmatcher.setRatio(0.65f);

cv::Ptr<cv::FeatureDetector> pfd= new cv::SurfFeatureDetector(10);

rmatcher.setFeatureDetector(pfd);

// Match the two images

std::vector<cv::DMatch> matches;

std::vector<cv::KeyPoint> keypoints1, keypoints2;

cv::Mat fundemental= rmatcher.match(image1,image2,matches, keypoints1, keypoints2);

// draw the matches

cv::Mat imageMatches;

cv::drawMatches(image1,keypoints1, // 1st image and its keypoints

image2,keypoints2, // 2nd image and its keypoints

matches, // the matches

imageMatches, // the image produced

cv::Scalar(255,255,255)); // color of the lines

// Convert keypoints into Point2f

std::vector<cv::Point2f> points1, points2;

for (std::vector<cv::DMatch>::const_iterator it= matches.begin();

it!= matches.end(); ++it) {

// Get the position of left keypoints

float x= keypoints1[it->queryIdx].pt.x;

float y= keypoints1[it->queryIdx].pt.y;

points1.push_back(cv::Point2f(x,y));

// Get the position of right keypoints

x= keypoints2[it->trainIdx].pt.x;

y= keypoints2[it->trainIdx].pt.y;

points2.push_back(cv::Point2f(x,y));

}

std::cout << points1.size() << " " << points2.size() << std::endl;

// Find the homography between image 1 and image 2

std::vector<uchar> inliers(points1.size(),0);

cv::Mat homography= cv::findHomography(

cv::Mat(points1),cv::Mat(points2), // corresponding points

inliers, // outputed inliers matches

CV_RANSAC, // RANSAC method

1.); // max distance to reprojection point

// Warp image 1 to image 2

cv::Mat result;

cv::warpPerspective(image1, // input image

result, // output image

homography, // homography

cv::Size(2*image1.cols,image1.rows)); // size of output image

cv::Mat resultback;

result.copyTo(resultback);

// Copy image 1 on the first half of full image

cv::Mat half(result,cv::Rect(0,0,image2.cols,image2.rows));

image2.copyTo(half);

// Display the warp image

cv::namedWindow("After warping");

cv::imshow("After warping",result);

//需要注意的一点是,原始文件的图片是按照从右至左边进行移动的。

//进行Multiblend的融合,融合的输入图像为image2(左)和resultback(右)

Mat srcLeft = image2.clone();

Mat srcRight= resultback.clone();

int ioffset = srcLeft.cols -100;

Mat imageL = srcLeft(Rect(ioffset,0,100,srcLeft.rows)).clone();

Mat imageR = srcRight(Rect(ioffset,0,100,srcLeft.rows)).clone();

Mat_<Vec3f> t; imageL.convertTo(t,CV_32F,1.0/255.0);//Vec3f表示有三个通道,即 l[row][column][depth]

Mat_<Vec3f> d; imageR.convertTo(d,CV_32F,1.0/255.0);

Mat_<float> m(t.rows,d.cols,0.0); //将m全部赋值为0

m(Range::all(),Range(0,m.cols/2)) = 1.0; //取m全部行&[0,m.cols/2]列,赋值为1.0

Mat_<Vec3f> matblend = LaplacianBlend(t,d, m);

Mat re;

matblend.convertTo(re,CV_8UC3,255);

Mat roi = srcLeft(Rect(ioffset,0,100,srcLeft.rows));

re.copyTo(roi);

roi = resultback(Rect(0,0,srcLeft.cols,srcLeft.rows));

srcLeft.copyTo(roi);

cv::waitKey();

return 0;

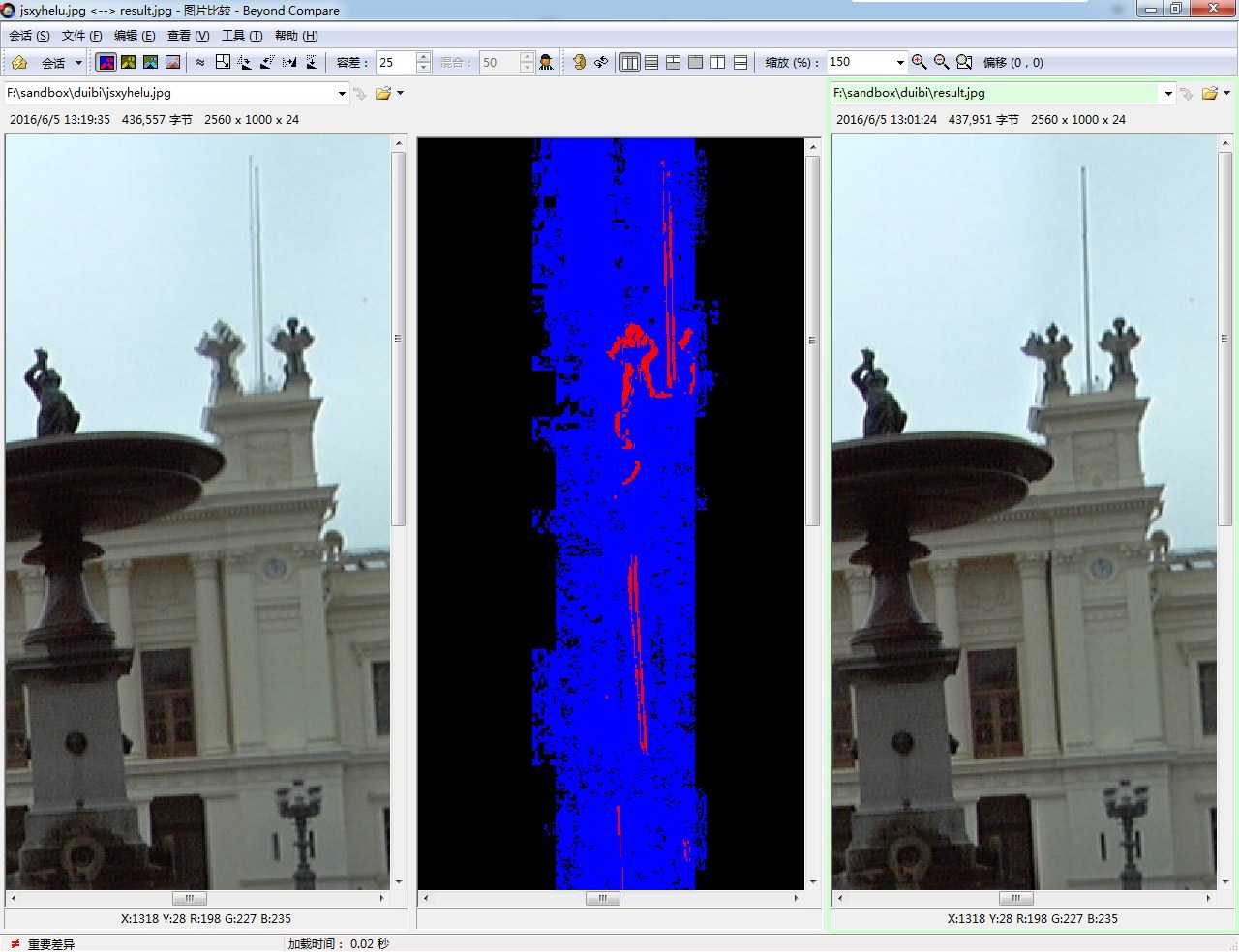

}五、结果比较

My multi-band blend

Linear blend

Multi-band blend

6. Summary

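The algorithm I used does help to some extent, but it did not reduce the "ghosting". Linear blending, with or without a pyramid, is what produces the ghosts. To study a problem, one should still read the original paper, find good code, and really solve the problem.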
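A 1-D sketch of why blending by itself cannot remove the ghosts, assuming they come from a small misregistration between the warped image and the reference image: wherever both images contribute with partial weight, a misaligned edge shows up twice. `stepEdge`, `linearBlend` and `countRises` are hypothetical helpers written only for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Signal = std::vector<double>;

// Hypothetical helper: a step edge, 0 before `edge` and 1 from `edge` on.
Signal stepEdge(std::size_t n, std::size_t edge) {
    Signal s(n, 0.0);
    for (std::size_t i = edge; i < n; ++i) s[i] = 1.0;
    return s;
}

// Hypothetical helper: linear blend of `a` (left) and `b` (right).
// The weight of `b` ramps from 0 to 1 across [start, start + width) and is 1 after it.
Signal linearBlend(const Signal& a, const Signal& b, std::size_t start, std::size_t width) {
    assert(a.size() == b.size() && width > 0 && start + width <= a.size());
    Signal out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        double w = 0.0;
        if (i >= start + width) w = 1.0;
        else if (i >= start) w = double(i - start + 1) / double(width + 1);
        out[i] = (1.0 - w) * a[i] + w * b[i];
    }
    return out;
}

// Hypothetical helper: number of samples where the signal rises by more than `minJump`.
std::size_t countRises(const Signal& s, double minJump) {
    std::size_t rises = 0;
    for (std::size_t i = 1; i < s.size(); ++i)
        if (s[i] - s[i - 1] > minJump) ++rises;
    return rises;
}
```

With the edge at sample 20 in one image and at 26 in the other (64 samples), a ramp across the whole signal produces two separate rises, i.e. a double edge, while a narrow transition placed to the right of both edges keeps a single edge.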

What is the principle behind this paper?

Who wrote this code, and where does it come from?

These questions need to be worked out.