
I then found code online whose notation matches the paper's.

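function Sest = cosaomp(Phi,u,K,tol,maxiterations)
% Phi: measurement matrix, u: measurement vector, K: sparsity level
Sest = zeros(size(Phi,2),1);
v = u;                              % residual, initialized to the measurements
t = 1;
numericalprecision = 1e-12;
T = [];                             % current support estimate
while (t <= maxiterations) && (norm(v)/norm(u) > tol)
    y = abs(Phi'*v);                % signal proxy
    [vals,z] = sort(y,'descend');
    Omega = find(y >= vals(2*K) & y > numericalprecision);  % entries at least as large as the 2K-th largest
    T = union(Omega,T);             % merge with the previous support
    b = pinv(Phi(:,T))*u;           % least-squares estimate on the merged support
    [vals,z] = sort(abs(b),'descend');
    Kgoodindices = (abs(b) >= vals(K) & abs(b) > numericalprecision);
    T = T(Kgoodindices);            % prune to the K largest coefficients
    Sest = zeros(size(Phi,2),1);
    phit = Phi(:,T);
    b = pinv(phit)*u;               % re-solve least squares on the pruned support
    Sest(T) = b;
    v = u - phit*b;                 % update the residual
    t = t+1;
end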
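The listing above picks the candidate columns with a threshold on the sorted proxy (`y >= vals(2*K)`), whereas the second listing in this post simply takes `pos(1:2*K)`. As a quick sanity check, here is a small sketch with made-up toy values (the vector `proxy` is hypothetical and only stands in for `Phi'*v`) suggesting that the two selections give the same index set when there are no ties:

```matlab
% Toy comparison of two ways to pick the 2K largest correlations.
% The numbers below are invented for illustration only.
K = 2;
proxy = [0.3; -2.0; 0.1; 1.5; -0.7; 0.9];   % stands in for Phi'*v
y = abs(proxy);
[vals, pos] = sort(y, 'descend');

% Threshold style, as in cosaomp
Omega_threshold = find(y >= vals(2*K) & y > 1e-12);

% Direct indexing style, as in CS_CoSaMP (sorted so the sets can be compared)
Omega_sorted = sort(pos(1:2*K));

same_set = isequal(Omega_threshold, Omega_sorted);
disp(same_set);
```

With tied magnitudes the threshold version can return more than 2K indices, so the two styles are not guaranteed to match in general.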

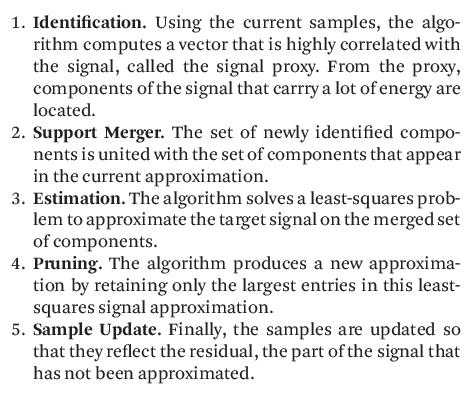

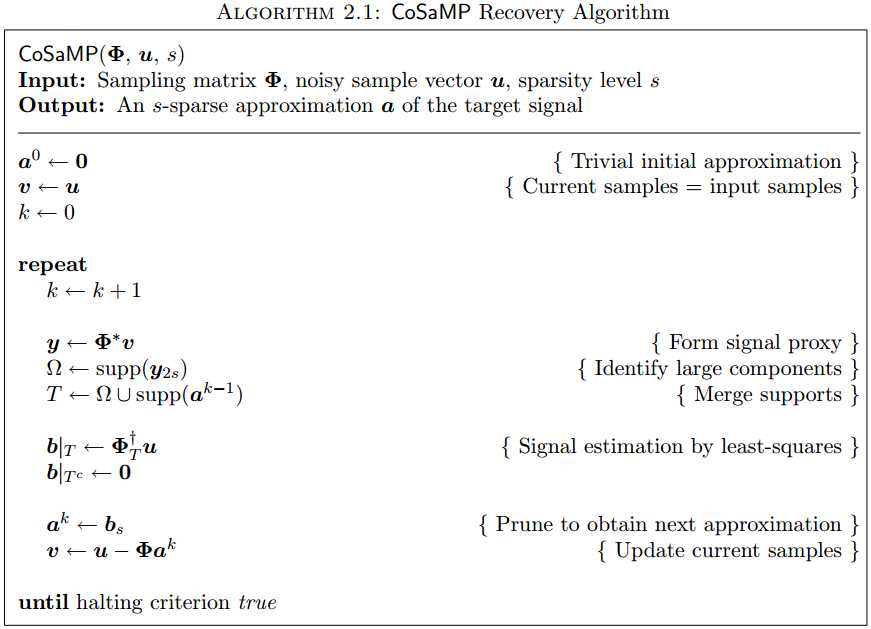

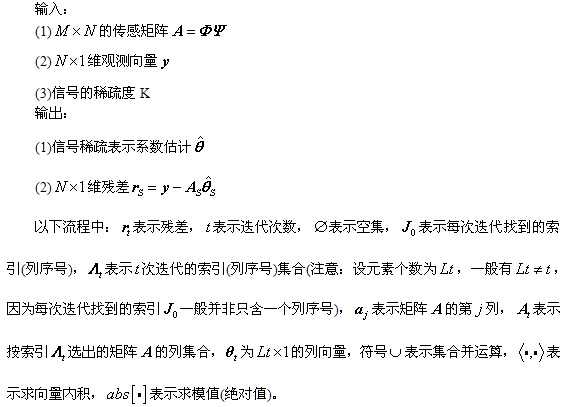

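Next, using the code as a guide, I'll take a stab at explaining the pseudocode of the algorithm in the paper. I only half understand it for now; hopefully I'll understand it fully later.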

Step (5) is a little roundabout, but it is not hard to follow once you read it alongside the code.
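To make the pruning and sample-update steps more concrete, here is a minimal sketch on a toy problem. Everything in it (the matrix `A`, the signal `x`, and the merged candidate set `Is`) is made up for illustration and is not taken from the paper. It contrasts two ways of forming the residual after pruning: reusing the pruned coefficients, as the `CS_CoSaMP` listing in this post does, and re-solving least squares on the pruned support, as `cosaomp` does.

```matlab
% Toy data, invented for illustration only.
A = [2 1 0 1;
     1 3 1 0;
     0 1 2 1;
     1 0 1 3;
     1 1 1 1];
x = [1; 0; -2; 3];
y = A*x;
K = 2;
Is = [1; 2; 3];            % hypothetical merged candidate set (misses column 4)

% Estimation: least squares on the merged support
At = A(:, Is);
theta_ls = At \ y;

% Pruning: keep the K largest coefficients
[~, pos] = sort(abs(theta_ls), 'descend');
keep = pos(1:K);
Pos_theta = Is(keep);

% Sample update, variant 1: reuse the pruned coefficients (CS_CoSaMP style)
r_pruned = y - At(:, keep) * theta_ls(keep);

% Sample update, variant 2: re-solve on the pruned support (cosaomp style)
b = pinv(A(:, Pos_theta)) * y;
r_refit = y - A(:, Pos_theta) * b;

fprintf('pruned residual norm: %g\n', norm(r_pruned));
fprintf('refit residual norm:  %g\n', norm(r_refit));
```

Because the refit is a least-squares solution on the same K columns, its residual norm can never be larger than that of the pruned-coefficient variant.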

function [ theta ] = CS_CoSaMP( y,A,K )
%CS_CoSaMP Recover a K-sparse theta from y = A*theta using CoSaMP
%   Created by jbb0523 @2015-04-29
%   Version: 1.1 modified by jbb0523 @2015-05-09
%   y = Phi * x
%   x = Psi * theta
%   y = Phi*Psi * theta
%   Let A = Phi*Psi, so that y = A*theta
%   K is the sparsity level
%   Given y and A, solve for theta
%   Reference: Needell D, Tropp J A. CoSaMP: Iterative signal recovery from
%   incomplete and inaccurate samples[J]. Applied and Computational Harmonic
%   Analysis, 2009, 26: 301-321.

[y_rows,y_columns] = size(y);
if y_rows<y_columns
    y = y'; % y should be a column vector
end
[M,N] = size(A); % the sensing matrix A is M-by-N
theta = zeros(N,1); % stores the recovered theta (column vector)
Pos_theta = []; % indices of the columns of A selected during the iterations
r_n = y; % initialize the residual to y

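for kk=1:K % at most K iterations
    %(1) Identification
    product = A'*r_n; % inner products of the columns of A with the residual
    [val,pos]=sort(abs(product),'descend');
    Js = pos(1:2*K); % the 2K columns with the largest inner products
    %(2) Support Merger
    Is = union(Pos_theta,Js); % union of Pos_theta and Js
    %(3) Estimation
    % At must not have more columns than rows; least squares relies on
    % linearly independent columns
    if length(Is)<=M
        At = A(:,Is); % form At from these columns of A
    else % more columns than rows: the columns are linearly dependent and At'*At is singular
        if kk == 1
            theta_ls = 0;
        end
        break; % exit the for loop
    end
    % y = At*theta; find the least-squares solution for theta
    theta_ls = (At'*At)^(-1)*At'*y; % least-squares solution
    %(4) Pruning
    [val,pos]=sort(abs(theta_ls),'descend');
    %(5) Sample Update
    Pos_theta = Is(pos(1:K));
    theta_ls = theta_ls(pos(1:K));
    % At(:,pos(1:K))*theta_ls approximates y using the K retained columns
    % (not in general the exact orthogonal projection, because theta_ls
    % was solved on all columns of At)
    r_n = y - At(:,pos(1:K))*theta_ls; % update the residual
    if norm(r_n)<1e-6 % Repeat the steps until r=0
        break; % exit the for loop
    end
end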

theta(Pos_theta)=theta_ls; % the recovered theta
end
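The Estimation step above solves least squares through the normal equations, `(At'*At)^(-1)*At'*y`, while the first listing uses `pinv`. As far as I can tell these should agree with MATLAB's backslash operator whenever `At` has full column rank. Here is a small sketch with a made-up matrix (the values of `At` and `y` are hypothetical) that compares the three:

```matlab
% Toy full-column-rank matrix and right-hand side, invented for illustration.
At = [2 1 0;
      1 3 1;
      0 1 2;
      1 0 1;
      1 1 1];
y = [1; 2; 3; 4; 5];

t_normal    = (At'*At)^(-1)*At'*y;   % normal equations, as in CS_CoSaMP
t_backslash = At \ y;                % backslash (least squares for tall matrices)
t_pinv      = pinv(At)*y;            % pseudoinverse, as in cosaomp

% Largest disagreement between the three solutions
max_diff = max(abs([t_normal - t_backslash; t_normal - t_pinv]));
fprintf('largest difference between the three solutions: %g\n', max_diff);
```

Backslash is generally the numerically safer choice when `At` is poorly conditioned, since it avoids explicitly forming and inverting `At'*At`.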

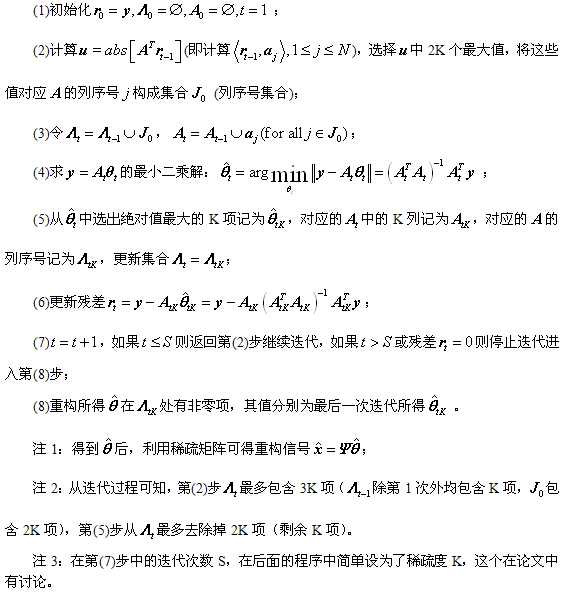

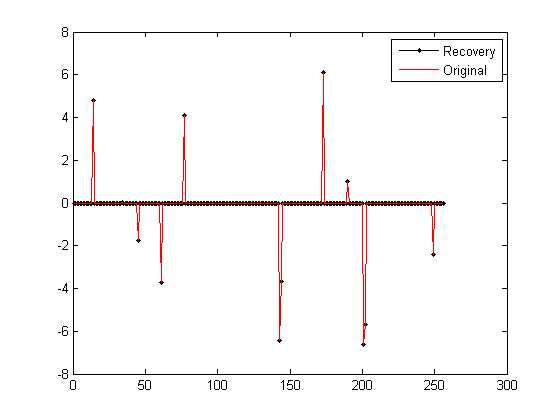

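% Test of the compressed sensing reconstruction algorithm
clear all;close all;clc;
M = 64; % number of measurements
N = 256; % length of signal x
K = 12; % sparsity of signal x
Index_K = randperm(N);
x = zeros(N,1);
x(Index_K(1:K)) = 5*randn(K,1); % x is K-sparse with a random support
Psi = eye(N); % x itself is sparse, so the sparsifying basis is the identity: x = Psi*theta
Phi = randn(M,N); % Gaussian measurement matrix
A = Phi * Psi; % sensing matrix
y = Phi * x; % measurement vector y
%% Recover the signal x
tic
theta = CS_CoSaMP( y,A,K );
x_r = Psi * theta; % x = Psi * theta
toc
%% Plot
figure;
plot(x_r,'k.-'); % recovered signal
hold on;
plot(x,'r'); % original signal x
hold off;
legend('Recovery','Original')
fprintf('\nRecovery residual:');
norm(x_r-x) % recovery residual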
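Since the test script draws the support, the signal values, and `Phi` at random, the numbers differ from run to run. If you want a repeatable run, one option is to fix the random seed first. This is a minimal sketch assuming a MATLAB release that provides `rng`; the seed value 0 is arbitrary and not something the original post uses.

```matlab
% Draw the same K-sparse signal twice by resetting the generator.
N = 256;   % signal length, as in the test script
K = 12;    % sparsity, as in the test script

rng(0);                              % arbitrary seed (assumption, not from the post)
idx1 = randperm(N);
x1 = zeros(N,1);
x1(idx1(1:K)) = 5*randn(K,1);

rng(0);                              % same seed again
idx2 = randperm(N);
x2 = zeros(N,1);
x2(idx2(1:K)) = 5*randn(K,1);

same_signal = isequal(x1, x2);       % identical draws after reseeding
disp(same_signal);
```

Placing a single `rng(...)` call right after `clear all;close all;clc;` in the test script should have the same effect for the whole run.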

The results are as follows (the signal is randomly generated, so the output differs on every run):

2) Command Window

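clear all;close all;clc;
%% Parameter initialization
CNT = 1000; % number of trials for each (K,M,N)
N = 256; % length of signal x
Psi = eye(N); % x itself is sparse, so the sparsifying basis is the identity: x = Psi*theta
K_set = [4,12,20,28,36]; % set of sparsity levels for x
Percentage = zeros(length(K_set),N); % stores the recovery success rates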

%% Main loop over each (K,M,N)
tic
for kk = 1:length(K_set)
    K = K_set(kk); % sparsity for this pass
    M_set = 2*K:5:N; % no need to sweep every M; testing every 5th value is enough
    PercentageK = zeros(1,length(M_set)); % success rate for each M at this sparsity K
    for mm = 1:length(M_set)
        M = M_set(mm); % number of measurements for this pass
        fprintf('K=%d,M=%d\n',K,M);
        P = 0;
        for cnt = 1:CNT % run CNT trials for each number of measurements
            Index_K = randperm(N);
            x = zeros(N,1);
            x(Index_K(1:K)) = 5*randn(K,1); % x is K-sparse with a random support
            Phi = randn(M,N)/sqrt(M); % Gaussian measurement matrix
            A = Phi * Psi; % sensing matrix
            y = Phi * x; % measurement vector y
            theta = CS_CoSaMP(y,A,K); % recover theta
            x_r = Psi * theta; % x = Psi * theta
            if norm(x_r-x)<1e-6 % count the trial as a success if the residual is below 1e-6
                P = P + 1;
            end
        end
        PercentageK(mm) = P/CNT*100; % recovery success rate in percent
    end
    Percentage(kk,1:length(M_set)) = PercentageK;
end
toc
save CoSaMPMtoPercentage1000 % a full run takes a while, so save all variables

%% Plot
S = ['-ks';'-ko';'-kd';'-kv';'-k*'];
figure;
for kk = 1:length(K_set)
    K = K_set(kk);
    M_set = 2*K:5:N;
    L_Mset = length(M_set);
    plot(M_set,Percentage(kk,1:L_Mset),S(kk,:)); % recovery success rate versus M for this K
    hold on;
end

Results of running this program:


Original post: http://www.cnblogs.com/wwf828/p/7488870.html