Tags: match, decode, alt, list, usr, not, www, gecko, bit

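My work requires CNNVD vulnerability information. I used to rely on tools such as 集客搜 and 八爪鱼, but I was not satisfied with either their results or their speed. I recently started learning to write crawlers, and as a beginner I still have a lot to improve. This post records my first crawler. Next I want to improve it with multiprocessing and IP proxies.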

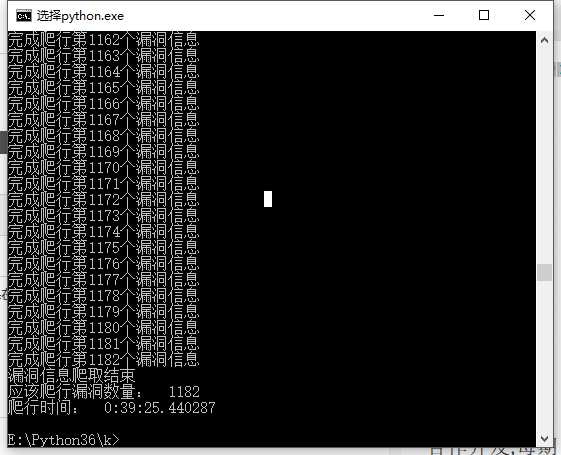

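This is what a run looks like. The speed is still unbearable. Multiprocessing should make the data fetching much faster, and IP proxies should get around the restriction the site applies to many requests in a short time, which would allow a higher crawl rate.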
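Both ideas can be sketched roughly as follows. This is only an illustration, not part of the crawler in this post. It swaps a thread pool in for separate processes (the work is network-bound, so threads are usually enough), and `PROXY_URL`, `make_opener`, `fetch`, and `crawl_all` are hypothetical names introduced here. The proxy address is a placeholder.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Placeholder proxy address (an assumption); replace it with a proxy you may use.
PROXY_URL = "http://127.0.0.1:8080"


def make_opener(proxy_url=None):
    """Build a urllib opener, optionally routing http/https through a proxy."""
    handlers = []
    if proxy_url:
        handlers.append(
            urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
        )
    return urllib.request.build_opener(*handlers)


def fetch(url, opener=None, timeout=10):
    """Download one page and return the raw response bytes."""
    opener = opener or make_opener()
    with opener.open(url, timeout=timeout) as response:
        return response.read()


def crawl_all(urls, fetch_one=fetch, workers=4):
    """Fetch many URLs concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch_one, urls))
```

Calling `crawl_all(detail_urls, workers=8)` would then download the detail pages eight at a time, where `detail_urls` stands for the list of links collected in the first stage. The worker count is a guess and would need tuning against the site's request limits.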

The full code is below:

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    # by Kaiho
    '''
    Usage:
        python holes_crawler 2017-10-01 2017-10-31 178
    The first argument is the start date, the second is the end date,
    and the third is the total number of result pages.
    '''

    import datetime
    import re
    import sys
    import time
    import urllib.request
    import zlib
    from urllib import parse

    import xlsxwriter
    from bs4 import BeautifulSoup

    MAX_RETRIES = 5  # stop retrying after this many failures in a row


    # Collect the vulnerability detail links from one result page
    def holes_url_list(url, start_time, end_time):
        header = {
            'Host': 'cnnvd.org.cn',
            'User-Agent': 'Mozilla/5.0 (Linux; Android 4.1.2; Nexus 7 Build/JZ054K) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            'Accept-Language': 'zh-CN,zh;q=0.8,en-US;q=0.5,en;q=0.3',
            'Accept-Encoding': 'gzip',  # only gzip is decoded below, so only gzip is requested
            'Content-Type': 'application/x-www-form-urlencoded',
            # Content-Length is not hard-coded: urllib computes it from the body
            'Referer': 'http://cnnvd.org.cn/web/vulnerability/queryLds.tag',
            'Cookie': 'SESSION=988d12eb-217f-4163-ba47-5c55e018b388',
            'Connection': 'keep-alive',
            'Upgrade-Insecure-Requests': '1'
        }
        data = {
            'CSRFToken': '',
            'cvHazardRating': '',
            'cvVultype': '',
            'qstartdateXq': '',
            'cvUsedStyle': '',
            'cvCnnvdUpdatedateXq': '',
            'cpvendor': '',
            'relLdKey': '',
            'hotLd': '',
            'isArea': '',
            'qcvCname': '',
            'qcvCnnvdid': 'CNNVD或CVE编号',
            'qstartdate': start_time,  # start date
            'qenddate': end_time       # end date
        }
        data = parse.urlencode(data).encode('utf-8')
        holes_url_html = urllib.request.Request(url, headers=header, data=data)
        holes_url_html = urllib.request.urlopen(holes_url_html)
        holes_url_html = zlib.decompress(holes_url_html.read(), 16 + zlib.MAX_WBITS)
        holes_url_html = holes_url_html.decode()

        # Extract the vulnerability detail links
        response = r'href="(.+?)" target="_blank" class="a_title2"'
        holes_link_list = re.compile(response).findall(holes_url_html)

        # Prepend the scheme and host
        for i, link in enumerate(holes_link_list, start=1):
            holes_lists.append('http://cnnvd.org.cn' + link)
            print("Crawled vulnerability link %d" % i)
            time.sleep(0.2)


    # Call the list function for every page and collect the detail links
    begin = datetime.datetime.now()
    holes_lists = []
    start_time = sys.argv[1]
    end_time = sys.argv[2]
    page_count = int(sys.argv[3])
    j = 1
    failures = 0
    while j <= page_count:  # number of pages to crawl
        try:
            holes_url = 'http://cnnvd.org.cn/web/vulnerability/queryLds.tag?pageno=%d&repairLd=' % j
            holes_url_list(holes_url, start_time, end_time)
            print("Finished crawling page %d\n" % j)
            time.sleep(2.5)
            j += 1
            failures = 0
        except Exception as err:
            failures += 1
            if failures >= MAX_RETRIES:
                raise  # give up instead of retrying the same page forever
            print('Crawl failed (%s); retrying in 5 seconds.' % err)
            time.sleep(5)

    #print(holes_lists)  # list of vulnerability links


    # Remove line breaks and tabs, plus any extra substrings passed in
    def clean_text(text, *extra):
        for junk in ('\r', '\t', '\n') + extra:
            text = text.replace(junk, '')
        return text


    # Crawl one vulnerability detail page and return its 15 fields
    def holes_data(url):
        header = {
            'Host': 'cnnvd.org.cn',
            'Connection': 'keep-alive',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
            'Upgrade-Insecure-Requests': '1',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0',
            'Accept-Encoding': 'gzip',  # only gzip is decoded below
            'Accept-Language': 'zh-CN,zh;q=0.8',
            'Cookie': 'SESSION=988d12eb-217f-4163-ba47-5c55e018b388'
        }
        holes_data_html = urllib.request.Request(url, headers=header)
        holes_data_html = urllib.request.urlopen(holes_data_html)
        holes_data_html = zlib.decompress(holes_data_html.read(), 16 + zlib.MAX_WBITS)
        holes_data_html = holes_data_html.decode()

        holes_result_list = []  # fields scraped from this page

        # "Vulnerability details" block
        holes_detainled_soup1 = BeautifulSoup(holes_data_html, 'html.parser')
        holes_detainled_soup = holes_detainled_soup1.find('div', attrs={'class': 'detail_xq w770'})
        holes_detainled_data_list = holes_detainled_soup.find_all('li')

        try:
            holes_name = holes_detainled_soup.h2.string  # vulnerability name
        except Exception:
            holes_name = ''
        holes_result_list.append(holes_name)

        try:
            holes_cnnvd_num = holes_detainled_soup.span.string  # CNNVD ID
            holes_cnnvd_num = re.findall(r"\:([\s\S]*)", holes_cnnvd_num)[0]
        except Exception:
            holes_cnnvd_num = ''
        holes_result_list.append(holes_cnnvd_num)

        try:  # severity
            holes_rank = holes_detainled_soup.a.decode()
            holes_rank = re.search('([\u4e00-\u9fa5]+)', holes_rank).group(0)
        except Exception:
            holes_rank = ''
        holes_result_list.append(holes_rank)

        # li[2]..li[8]: CVE ID, type, publish date, threat type,
        # update date, vendor, source
        for index in range(2, 9):
            li = holes_detainled_data_list[index]
            try:
                value = clean_text(li.a.string)
            except Exception:
                value = ''
            holes_result_list.append(value)

        # "Vulnerability summary" block: first two paragraphs
        holes_title_soup2 = holes_detainled_soup1.find('div', attrs={'class': 'd_ldjj'})
        try:
            paragraphs = holes_title_soup2.find_all(name='p')
            holes_titles = clean_text(paragraphs[0].string + paragraphs[1].string, ' ')
        except Exception:
            holes_titles = ''
        holes_result_list.append(holes_titles)

        # Announcement, references, affected entities and patch blocks, in page order
        holes_blocks = holes_detainled_soup1.find_all('div', attrs={'class': 'd_ldjj m_t_20'})

        try:  # vulnerability announcement
            paragraphs = holes_blocks[0].find_all(name='p')
            holes_notice = clean_text(paragraphs[0].string + paragraphs[1].string)
        except Exception:
            holes_notice = ''
        holes_result_list.append(holes_notice)

        try:  # reference URLs
            holes_reference = holes_blocks[1].find_all(name='p')[1].string
            holes_reference = clean_text(holes_reference, '链接:')
        except Exception:
            holes_reference = ''
        holes_result_list.append(holes_reference)

        try:  # affected entities: try the first <p>, then the first <a>
            holes_effect = clean_text(holes_blocks[2].find_all(name='p')[0].string, ' ')
        except Exception:
            try:
                holes_effect = clean_text(holes_blocks[2].find_all(name='a')[0].string, ' ')
            except Exception:
                holes_effect = ''
        holes_result_list.append(holes_effect)

        try:  # patch
            holes_patch = clean_text(holes_blocks[3].find_all(name='p')[0].string, ' ')
        except Exception:
            holes_patch = ''
        holes_result_list.append(holes_patch)

        return holes_result_list


    # Call the detail function for every link and collect the results
    holes_result_lists = []
    print('Number of vulnerabilities: ', len(holes_lists))
    a = 0
    failures = 0
    while a < len(holes_lists):
        try:
            holes_result_lists.append(holes_data(holes_lists[a]))
            a += 1
            failures = 0
            print("Finished crawling vulnerability %d" % a)
            time.sleep(0.5)
        except Exception as err:
            failures += 1
            if failures >= MAX_RETRIES:
                print('Giving up on %s' % holes_lists[a])
                a += 1
                failures = 0
                continue
            print('Crawl failed (%s); retrying in 5 seconds.' % err)
            time.sleep(5)

    # Write the vulnerability information to Excel
    workbook = xlsxwriter.Workbook('holes_data.xlsx')
    worksheet = workbook.add_worksheet()
    worksheet.write_row(0, 0, [
        'Name', 'CNNVD ID', 'Severity', 'CVE ID', 'Type', 'Published',
        'Attack vector', 'Updated', 'Vendor', 'Source', 'Description',
        'Solution', 'References', 'Affected entities', 'Patch',
    ])
    for row, record in enumerate(holes_result_lists, start=1):
        worksheet.write_row(row, 0, record)
    workbook.close()

    end = datetime.datetime.now()
    total_time = end - begin
    print('Finished crawling vulnerability information')
    print('Vulnerabilities expected: ', len(holes_lists))
    print('Crawl time: ', total_time)

    #print(holes_data('http://www.cnnvd.org.cn/web/xxk/ldxqById.tag?CNNVD=CNNVD-201710-987'))
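The crawler decodes every response with `zlib.decompress(..., 16 + zlib.MAX_WBITS)`, which only works when the body really is gzip-compressed. Here is a small offline sketch of that step, with a fallback for uncompressed bodies. `decode_body` is a hypothetical helper, not something from the script, and the sample bytes are generated locally rather than fetched from the site.

```python
import gzip
import zlib


def decode_body(raw, content_encoding=""):
    """Return response bytes as text, un-gzipping only when the encoding says gzip."""
    if (content_encoding or "").lower() == "gzip":
        # 16 + MAX_WBITS tells zlib to expect a gzip header and trailer.
        raw = zlib.decompress(raw, 16 + zlib.MAX_WBITS)
    return raw.decode("utf-8")


# Locally generated stand-in for a gzip-compressed response body.
sample = gzip.compress("漏洞信息".encode("utf-8"))
print(decode_body(sample, "gzip"))   # 漏洞信息
print(decode_body(b"plain text"))    # plain text
```

In real use the second argument would presumably come from the response itself, for example `decode_body(response.read(), response.headers.get("Content-Encoding"))`.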


Original article: http://www.cnblogs.com/kaiho/p/7804542.html