
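Kubernetes is a container cluster management system open-sourced by Google. It is the open-source descendant of Borg, the large-scale container management technology Google has used internally for many years. Its main features include:

I previously tried to deploy the cluster automatically with kubeadm, installing kubeadm and the related tools with yum. Without a proxy to get around the firewall, that approach almost never succeeds from inside China. I therefore switched to deploying the Kubernetes cluster from binaries, with TLS authentication enabled for the cluster. This walkthrough follows opsnull's article 《创建 kubernetes 各组件 TLS 加密通信的证书和秘钥》 ("Creating TLS certificates and keys for encrypted communication between the Kubernetes components"), adapted to our company's environment.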

| Hostname | OS version | IP address | Role | Installed software |
| --- | --- | --- | --- | --- |
| node1 | CentOS 7.0 | 172.16.7.151 | Kubernetes Master, Node | etcd 3.2.7, kube-apiserver, kube-scheduler, kube-controller-manager, kubelet, kube-proxy, flannel 0.7.1, docker 1.12.6 |
| node2 | CentOS 7.0 | 172.16.7.152 | Kubernetes Node | kubelet, kube-proxy, flannel 0.7.1, etcd 3.2.7, docker 1.12.6 |
| node3 | CentOS 7.0 | 172.16.7.153 | Kubernetes Node | kubelet, kube-proxy, flannel 0.7.1, etcd 3.2.7, docker 1.12.6 |
| spark32 | CentOS 7.0 | 172.16.206.32 | Harbor | docker-ce 17.06.1, docker-compose 1.15.0, harbor-online-installer-v1.1.2.tar |

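The spark32 host runs the Harbor private image registry. For installing and deploying Harbor, see my earlier post on setting up and using Harbor as an enterprise Docker registry.

The Kubernetes components use TLS certificates to encrypt their communication. Here I use cfssl, CloudFlare's PKI toolkit, to generate the CA and the other certificates.

The generated CA certificate and key files are:

The components use the certificates as follows:

kube-controller-manager and kube-scheduler currently have to run on the same machine as kube-apiserver and talk to it over the insecure port, so they do not need certificates.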

1. Install CFSSL

There are two ways to install it: from the prebuilt binaries, or with the go command.

(1) Option 1: install the prebuilt binaries

    # mkdir -p /root/local/bin
    # wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    # chmod +x cfssl_linux-amd64
    # mv cfssl_linux-amd64 /root/local/bin/cfssl
    # wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    # chmod +x cfssljson_linux-amd64
    # mv cfssljson_linux-amd64 /root/local/bin/cfssljson
    # wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    # chmod +x cfssl-certinfo_linux-amd64
    # mv cfssl-certinfo_linux-amd64 /root/local/bin/cfssl-certinfo
    # export PATH=/root/local/bin:$PATH

(2) Option 2: install with the go command

    # Download page: https://golang.org/dl/
    [root@node1 ~]# cd /usr/local/
    [root@node1 local]# wget https://storage.googleapis.com/golang/go1.9.linux-amd64.tar.gz
    [root@node1 local]# tar zxf go1.9.linux-amd64.tar.gz
    [root@node1 local]# vim /etc/profile
    # Go
    export GO_HOME=/usr/local/go
    export PATH=$GO_HOME/bin:$PATH
    [root@node1 local]# source /etc/profile
    # Check the version
    [root@node1 local]# go version
    go version go1.9 linux/amd64

    [root@node1 local]# go get -u github.com/cloudflare/cfssl/cmd/...
    [root@node1 local]# ls /root/go/bin/
    cfssl  cfssl-bundle  cfssl-certinfo  cfssljson  cfssl-newkey  cfssl-scan  mkbundle  multirootca
    [root@node1 local]# mv /root/go/bin/* /usr/local/bin/

2. Create the CA

(1) Create the CA configuration file

    [root@node1 local]# mkdir /opt/ssl
    [root@node1 local]# cd /opt/ssl/
    [root@node1 ssl]# cfssl print-defaults config > config.json
    [root@node1 ssl]# cfssl print-defaults csr > csr.json

    [root@node1 ssl]# vim ca-config.json
    {
      "signing": {
        "default": {
          "expiry": "8760h"
        },
        "profiles": {
          "kubernetes": {
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ],
            "expiry": "8760h"
          }
        }
      }
    }

Notes on some of the fields:

(2) Create the CA certificate signing request

    [root@node1 ssl]# vim ca-csr.json
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

Notes on some of the fields:

(3) Generate the CA certificate and private key

    [root@node1 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca
    2017/09/10 04:22:13 [INFO] generating a new CA key and certificate from CSR
    2017/09/10 04:22:13 [INFO] generate received request
    2017/09/10 04:22:13 [INFO] received CSR
    2017/09/10 04:22:13 [INFO] generating key: rsa-2048
    2017/09/10 04:22:13 [INFO] encoded CSR
    2017/09/10 04:22:13 [INFO] signed certificate with serial number 348968532213237181927470194452366329323573808966
    [root@node1 ssl]# ls ca*
    ca-config.json  ca.csr  ca-csr.json  ca-key.pem  ca.pem

3. Create the Kubernetes certificate

(1) Create the kubernetes certificate signing request

    [root@node1 ssl]# vim kubernetes-csr.json
    {
      "CN": "kubernetes",
      "hosts": [
        "127.0.0.1",
        "172.16.7.151",
        "172.16.7.152",
        "172.16.7.153",
        "172.16.206.32",
        "10.254.0.1",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

Notes on some of the fields:

(2) Generate the kubernetes certificate and private key

    [root@node1 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
    2017/09/10 07:44:27 [INFO] generate received request
    2017/09/10 07:44:27 [INFO] received CSR
    2017/09/10 07:44:27 [INFO] generating key: rsa-2048
    2017/09/10 07:44:27 [INFO] encoded CSR
    2017/09/10 07:44:27 [INFO] signed certificate with serial number 695308968867503306176219705194671734841389082714
    [root@node1 ssl]# ls kubernetes*
    kubernetes.csr  kubernetes-csr.json  kubernetes-key.pem  kubernetes.pem

Alternatively, pass the parameters directly on the command line:

    # echo '{"CN":"kubernetes","hosts":[""],"key":{"algo":"rsa","size":2048}}' | cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname="127.0.0.1,172.16.7.151,172.16.7.152,172.16.7.153,172.16.206.32,10.254.0.1,kubernetes,kubernetes.default" - | cfssljson -bare kubernetes

4. Create the Admin certificate

(1) Create the admin certificate signing request

    [root@node1 ssl]# vim admin-csr.json
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }

Notes:

(2) Generate the admin certificate and private key

    [root@node1 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin
    2017/09/10 20:01:05 [INFO] generate received request
    2017/09/10 20:01:05 [INFO] received CSR
    2017/09/10 20:01:05 [INFO] generating key: rsa-2048
    2017/09/10 20:01:05 [INFO] encoded CSR
    2017/09/10 20:01:05 [INFO] signed certificate with serial number 580169825175224945071583937498159721917720511011
    2017/09/10 20:01:05 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
    [root@node1 ssl]# ls admin*
    admin.csr  admin-csr.json  admin-key.pem  admin.pem

5. Create the kube-proxy certificate

(1) Create the kube-proxy certificate signing request

    [root@node1 ssl]# vim kube-proxy-csr.json
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }

Notes:

(2) Generate the kube-proxy client certificate and private key

    [root@node1 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
    2017/09/10 20:07:55 [INFO] generate received request
    2017/09/10 20:07:55 [INFO] received CSR
    2017/09/10 20:07:55 [INFO] generating key: rsa-2048
    2017/09/10 20:07:55 [INFO] encoded CSR
    2017/09/10 20:07:55 [INFO] signed certificate with serial number 655306618453852718922516297333812428130766975244
    2017/09/10 20:07:55 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
    [root@node1 ssl]# ls kube-proxy*
    kube-proxy.csr  kube-proxy-csr.json  kube-proxy-key.pem  kube-proxy.pem

6. Verify the certificates

The kubernetes certificate is used as the example.

(1) Verify with the openssl command

    [root@node1 ssl]# openssl x509 -noout -text -in kubernetes.pem
    Certificate:
        Data:
            Version: 3 (0x2)
            Serial Number:
                79:ca:bb:84:73:15:b1:db:aa:24:d7:a3:60:65:b0:55:27:a7:e8:5a
        Signature Algorithm: sha256WithRSAEncryption
            Issuer: C=CN, ST=BeiJing, L=BeiJing, O=k8s, OU=System, CN=kubernetes
            Validity
                Not Before: Sep 10 11:39:00 2017 GMT
                Not After : Sep 10 11:39:00 2018 GMT
            Subject: C=CN, ST=BeiJing, L=BeiJing, O=k8s, OU=System, CN=kubernetes
    ...
            X509v3 extensions:
                X509v3 Key Usage: critical
                    Digital Signature, Key Encipherment
                X509v3 Extended Key Usage:
                    TLS Web Server Authentication, TLS Web Client Authentication
                X509v3 Basic Constraints: critical
                    CA:FALSE
                X509v3 Subject Key Identifier:
                    79:48:C1:1B:81:DD:9C:75:04:EC:B6:35:26:5E:82:AA:2E:45:F6:C5
                X509v3 Subject Alternative Name:
                    DNS:kubernetes, DNS:kubernetes.default, DNS:kubernetes.default.svc, DNS:kubernetes.default.svc.cluster, DNS:kubernetes.default.svc.cluster.local, IP Address:127.0.0.1, IP Address:172.16.7.151, IP Address:172.16.7.152, IP Address:172.16.7.153, IP Address:172.16.206.32, IP Address:10.254.0.1
    ...

[Notes]:

(2) Verify with the cfssl-certinfo command

    [root@node1 ssl]# cfssl-certinfo -cert kubernetes.pem
    {
      "subject": {
        "common_name": "kubernetes",
        "country": "CN",
        "organization": "k8s",
        "organizational_unit": "System",
        "locality": "BeiJing",
        "province": "BeiJing",
        "names": [ "CN", "BeiJing", "BeiJing", "k8s", "System", "kubernetes" ]
      },
      "issuer": {
        "common_name": "kubernetes",
        "country": "CN",
        "organization": "k8s",
        "organizational_unit": "System",
        "locality": "BeiJing",
        "province": "BeiJing",
        "names": [ "CN", "BeiJing", "BeiJing", "k8s", "System", "kubernetes" ]
      },
      "serial_number": "695308968867503306176219705194671734841389082714",
      "sans": [
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local",
        "127.0.0.1",
        "172.16.7.151",
        "172.16.7.152",
        "172.16.7.153",
        "172.16.206.32",
        "10.254.0.1"
      ],
      "not_before": "2017-09-10T11:39:00Z",
      "not_after": "2018-09-10T11:39:00Z",
      "sigalg": "SHA256WithRSA",
      "authority_key_id": "",
      "subject_key_id": "79:48:C1:1B:81:DD:9C:75:4:EC:B6:35:26:5E:82:AA:2E:45:F6:C5",
    ...

7. Distribute the certificates

Copy the generated certificate and key files (the ones ending in .pem) to the /etc/kubernetes/ssl directory on every machine for later use:

    [root@node1 ssl]# mkdir -p /etc/kubernetes/ssl
    [root@node1 ssl]# cp *.pem /etc/kubernetes/ssl
    [root@node1 ssl]# scp -p *.pem root@172.16.7.152:/etc/kubernetes/ssl/
    [root@node1 ssl]# scp -p *.pem root@172.16.7.153:/etc/kubernetes/ssl/
    [root@node1 ssl]# scp -p *.pem root@172.16.206.32:/etc/kubernetes/ssl/

1. Download kubectl

    [root@node1 local]# wget https://dl.k8s.io/v1.6.0/kubernetes-client-linux-amd64.tar.gz
    [root@node1 local]# tar zxf kubernetes-client-linux-amd64.tar.gz
    [root@node1 local]# cp kubernetes/client/bin/kube* /usr/bin/
    [root@node1 local]# chmod +x /usr/bin/kube*

2. Create the kubectl kubeconfig file

    [root@node1 local]# cd /etc/kubernetes/
    [root@node1 kubernetes]# export KUBE_APISERVER="https://172.16.7.151:6443"
    # Set the cluster parameters
    [root@node1 kubernetes]# kubectl config set-cluster kubernetes \
    > --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    > --embed-certs=true \
    > --server=${KUBE_APISERVER}
    Cluster "kubernetes" set.
    # Set the client authentication parameters
    [root@node1 kubernetes]# kubectl config set-credentials admin \
    > --client-certificate=/etc/kubernetes/ssl/admin.pem \
    > --embed-certs=true \
    > --client-key=/etc/kubernetes/ssl/admin-key.pem
    User "admin" set.
    # Set the context parameters
    [root@node1 kubernetes]# kubectl config set-context kubernetes \
    > --cluster=kubernetes \
    > --user=admin
    Context "kubernetes" set
    # Set the default context
    [root@node1 kubernetes]# kubectl config use-context kubernetes
    Switched to context "kubernetes".
    [root@node1 kubernetes]# ls ~/.kube/config
    /root/.kube/config

[Notes]:

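Processes on the Node machines, such as kubelet and kube-proxy, must be authenticated and authorized when they talk to the kube-apiserver process on the Master.

Since Kubernetes 1.4, kube-apiserver can issue TLS certificates to clients through the TLS Bootstrapping feature, so you no longer have to generate a certificate for every client. The feature currently only supports issuing certificates for kubelet.

1. Create the TLS Bootstrapping token

(1) Token auth file

    [root@node1 ssl]# export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
    [root@node1 ssl]# cat > token.csv <<EOF
    > ${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    > EOF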
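Before distributing token.csv it can help to check what the pipeline above produces. The following is a minimal sketch that wraps the same `head | od | tr` pipeline in a function and prints one line in the `token,user,uid,"group"` layout used above. The function names `make_token` and `token_csv_line` are illustrative and not part of any Kubernetes tooling.

```shell
# Generate a 128-bit random token as 32 lowercase hex characters
# (same pipeline as the BOOTSTRAP_TOKEN export above; newlines are stripped too).
make_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Print one token.csv line in the form: token,user,uid,"group".
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

token=$(make_token)
echo "token length: ${#token}"
token_csv_line "$token"
```

The token itself is random, so only its length (32) and character set are predictable.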

Copy token.csv to the /etc/kubernetes/ directory on every machine (Master and Nodes).

    [root@node1 ssl]# cp token.csv /etc/kubernetes/
    [root@node1 ssl]# scp -p token.csv root@172.16.7.152:/etc/kubernetes/
    [root@node1 ssl]# scp -p token.csv root@172.16.7.153:/etc/kubernetes/

(2) Create the kubelet bootstrapping kubeconfig file

    [root@node1 ssl]# cd /etc/kubernetes
    [root@node1 kubernetes]# export KUBE_APISERVER="https://172.16.7.151:6443"
    # Set the cluster parameters
    [root@node1 kubernetes]# kubectl config set-cluster kubernetes \
    > --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    > --embed-certs=true \
    > --server=${KUBE_APISERVER} \
    > --kubeconfig=bootstrap.kubeconfig
    Cluster "kubernetes" set.
    # Set the client authentication parameters
    [root@node1 kubernetes]# kubectl config set-credentials kubelet-bootstrap \
    > --token=${BOOTSTRAP_TOKEN} \
    > --kubeconfig=bootstrap.kubeconfig
    User "kubelet-bootstrap" set.
    # Set the context parameters
    [root@node1 kubernetes]# kubectl config set-context default \
    > --cluster=kubernetes \
    > --user=kubelet-bootstrap \
    > --kubeconfig=bootstrap.kubeconfig
    Context "default" created.
    # Set the default context
    [root@node1 kubernetes]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
    Switched to context "default".

[Notes]:

2. Create the kube-proxy kubeconfig file

    [root@node1 kubernetes]# export KUBE_APISERVER="https://172.16.7.151:6443"
    # Set the cluster parameters
    [root@node1 kubernetes]# kubectl config set-cluster kubernetes \
    > --certificate-authority=/etc/kubernetes/ssl/ca.pem \
    > --embed-certs=true \
    > --server=${KUBE_APISERVER} \
    > --kubeconfig=kube-proxy.kubeconfig
    Cluster "kubernetes" set.
    # Set the client authentication parameters
    [root@node1 kubernetes]# kubectl config set-credentials kube-proxy \
    > --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
    > --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
    > --embed-certs=true \
    > --kubeconfig=kube-proxy.kubeconfig
    User "kube-proxy" set.
    # Set the context parameters
    [root@node1 kubernetes]# kubectl config set-context default \
    > --cluster=kubernetes \
    > --user=kube-proxy \
    > --kubeconfig=kube-proxy.kubeconfig
    Context "default" created.
    # Set the default context
    [root@node1 kubernetes]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
    Switched to context "default".

[Notes]:

3. Distribute the kubeconfig files

Copy the two kubeconfig files to the /etc/kubernetes/ directory on every Node machine.

    [root@node1 kubernetes]# scp -p bootstrap.kubeconfig root@172.16.7.152:/etc/kubernetes/
    [root@node1 kubernetes]# scp -p kube-proxy.kubeconfig root@172.16.7.152:/etc/kubernetes/
    [root@node1 kubernetes]# scp -p bootstrap.kubeconfig root@172.16.7.153:/etc/kubernetes/
    [root@node1 kubernetes]# scp -p kube-proxy.kubeconfig root@172.16.7.153:/etc/kubernetes/

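etcd is an open-source project started by the CoreOS team and written in Go. It is a distributed key-value store that provides reliable distributed coordination through distributed locks, leader election, and write barriers.

Kubernetes stores all of its data in etcd.

CoreOS recommends a cluster size of 5 members; I use 3 nodes here.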
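The trade-off between 3 and 5 members follows from the majority rule of the Raft consensus protocol that etcd uses: writes need a quorum of floor(n/2)+1 members, so the cluster survives the loss of the remaining members. A small sketch of that arithmetic (the function names are illustrative only):

```shell
# Quorum size for a cluster of n members: a strict majority.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that can fail while the cluster still has quorum.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

With 3 members the cluster tolerates 1 failure, with 5 it tolerates 2, and a 4th member adds no fault tolerance over 3, which is why odd sizes are preferred.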

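1. Install and configure the etcd cluster

There are three ways to bootstrap an etcd cluster: static, etcd discovery, and DNS discovery. For the two discovery methods see the official documentation; only the static method is shown here.

First make sure the clocks of the 3 nodes are synchronized. There are many ways to do this; pick whichever suits your environment.

(1) TLS certificate files

The etcd cluster needs TLS certificates for encrypted communication. Here I reuse the kubernetes certificate created earlier.

    [root@node1 ssl]# cp ca.pem kubernetes-key.pem kubernetes.pem /etc/kubernetes/ssl

This step was already done earlier and can be skipped. [Note]: the hosts field of the kubernetes certificate must contain the IPs of the three machines above, otherwise certificate verification will fail later.

(2) Download the binaries

Download the latest release binaries (v3.2.7 at the time) from https://github.com/coreos/etcd/releases and upload them to /usr/local/.

    [root@node1 local]# tar xf etcd-v3.2.7-linux-amd64.tar
    [root@node1 local]# mv etcd-v3.2.7-linux-amd64/etcd* /usr/local/bin/

Do the same on the other two machines in the etcd cluster.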

(3) Create the systemd unit file for etcd

The unit file template is shown below. Replace the ETCD_NAME and INTERNAL_IP variables with the values for each node.

    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    EnvironmentFile=-/etc/etcd/etcd.conf
    ExecStart=/usr/local/bin/etcd \
      --name ${ETCD_NAME} \
      --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \
      --listen-peer-urls https://${INTERNAL_IP}:2380 \
      --listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \
      --advertise-client-urls https://${INTERNAL_IP}:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster node1=https://172.16.7.151:2380,node2=https://172.16.7.152:2380,node3=https://172.16.7.153:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

A brief explanation of some of the options above:

All options that start with --initial are only used when the cluster is bootstrapped; they are ignored when a node is restarted later.
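Filling in ETCD_NAME and INTERNAL_IP by hand for each node is error-prone, so the substitution can be scripted. The sketch below is an illustration only: `NODES`, `initial_cluster`, and `render` are names made up for this example, and `render` simply replaces the two `${...}` placeholders in whatever template text it reads from standard input.

```shell
# name=ip pairs for the three etcd members used in this post.
NODES="node1=172.16.7.151 node2=172.16.7.152 node3=172.16.7.153"

# Build the --initial-cluster value, which is identical on every member.
initial_cluster() {
    out=""
    for pair in $NODES; do
        name=${pair%%=*}
        ip=${pair#*=}
        out="${out:+$out,}${name}=https://${ip}:2380"
    done
    echo "$out"
}

# Replace the ${ETCD_NAME} and ${INTERNAL_IP} placeholders in a template.
# usage: render NAME IP < template
render() {
    sed -e 's/[$]{ETCD_NAME}/'"$1"'/g' -e 's/[$]{INTERNAL_IP}/'"$2"'/g'
}

initial_cluster
echo '--name ${ETCD_NAME} --listen-peer-urls https://${INTERNAL_IP}:2380' | render node2 172.16.7.152
```

In practice the template would be the unit file above saved to a file, rendered once per node, for example `render node2 172.16.7.152 < etcd.service.tpl > etcd.service` (the file names here are hypothetical).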

    [root@node1 local]# mkdir -p /var/lib/etcd
    [root@node1 local]# cd /etc/systemd/system/
    [root@node1 system]# vim etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    EnvironmentFile=-/etc/etcd/etcd.conf
    ExecStart=/usr/local/bin/etcd \
      --name node1 \
      --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --initial-advertise-peer-urls https://172.16.7.151:2380 \
      --listen-peer-urls https://172.16.7.151:2380 \
      --listen-client-urls https://172.16.7.151:2379,https://127.0.0.1:2379 \
      --advertise-client-urls https://172.16.7.151:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster node1=https://172.16.7.151:2380,node2=https://172.16.7.152:2380,node3=https://172.16.7.153:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

etcd.service on node2:

    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    EnvironmentFile=-/etc/etcd/etcd.conf
    ExecStart=/usr/local/bin/etcd \
      --name node2 \
      --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --initial-advertise-peer-urls https://172.16.7.152:2380 \
      --listen-peer-urls https://172.16.7.152:2380 \
      --listen-client-urls https://172.16.7.152:2379,https://127.0.0.1:2379 \
      --advertise-client-urls https://172.16.7.152:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster node1=https://172.16.7.151:2380,node2=https://172.16.7.152:2380,node3=https://172.16.7.153:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

etcd.service on node3:

    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    Documentation=https://github.com/coreos

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd/
    EnvironmentFile=-/etc/etcd/etcd.conf
    ExecStart=/usr/local/bin/etcd \
      --name node3 \
      --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
      --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
      --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
      --initial-advertise-peer-urls https://172.16.7.153:2380 \
      --listen-peer-urls https://172.16.7.153:2380 \
      --listen-client-urls https://172.16.7.153:2379,https://127.0.0.1:2379 \
      --advertise-client-urls https://172.16.7.153:2379 \
      --initial-cluster-token etcd-cluster-0 \
      --initial-cluster node1=https://172.16.7.151:2380,node2=https://172.16.7.152:2380,node3=https://172.16.7.153:2380 \
      --initial-cluster-state new \
      --data-dir=/var/lib/etcd
    Restart=on-failure
    RestartSec=5
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

[Notes]:

2. Start the etcd service

Run the following commands on every node in the cluster:

    # systemctl daemon-reload
    # systemctl enable etcd
    # systemctl start etcd

3. Verify the service

etcdctl is a command-line client that provides concise commands for working with the etcd service directly, without going through the HTTP API. This is convenient in some situations, for example when testing the service or editing the store by hand. When you are new to etcd it is also a good way to learn the operations, since they correspond to the HTTP API.

Run the following commands on any machine in the etcd cluster:

(1) Check the cluster health

    [root@node1 system]# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" cluster-health
    member 31800ab6b566b2b is healthy: got healthy result from https://172.16.7.151:2379
    member 9a0745d96695eec6 is healthy: got healthy result from https://172.16.7.153:2379
    member e64edc68e5e81b55 is healthy: got healthy result from https://172.16.7.152:2379
    cluster is healthy

The cluster is working normally when the last line of the output is "cluster is healthy".
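The "last line" rule is easy to turn into a scripted check. The sketch below reads `cluster-health` output on standard input and succeeds only when the final line is `cluster is healthy`. The function name is illustrative, and the sample text is an abbreviated copy of the output above rather than a live call to etcdctl.

```shell
# Succeeds only if the last line of the piped-in output reports a healthy cluster.
cluster_is_healthy() {
    [ "$(tail -n 1)" = "cluster is healthy" ]
}

# Abbreviated sample of the cluster-health output shown above.
sample='member 31800ab6b566b2b is healthy: got healthy result from https://172.16.7.151:2379
member e64edc68e5e81b55 is healthy: got healthy result from https://172.16.7.152:2379
cluster is healthy'

if printf '%s\n' "$sample" | cluster_is_healthy; then
    echo "etcd OK"
else
    echo "etcd NOT healthy"
fi
```

Against a real cluster the function would be fed by piping the etcdctl command from step (1) into it.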

(2) List the cluster members; the output also shows which one is the leader

    [root@node1 system]# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" member list
    31800ab6b566b2b: name=node1 peerURLs=https://172.16.7.151:2380 clientURLs=https://172.16.7.151:2379 isLeader=false
    9a0745d96695eec6: name=node3 peerURLs=https://172.16.7.153:2380 clientURLs=https://172.16.7.153:2379 isLeader=false
    e64edc68e5e81b55: name=node2 peerURLs=https://172.16.7.152:2380 clientURLs=https://172.16.7.152:2379 isLeader=true
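To pick the leader out of the `member list` output without reading every line, the `isLeader=true` field can be matched with awk. This is a small sketch that works on the text format shown above; `leader_name` is an illustrative name, and the sample is copied from that output.

```shell
# Print the name= value of the member whose line contains isLeader=true.
leader_name() {
    awk '/isLeader=true/ {
        for (i = 1; i <= NF; i++)
            if ($i ~ /^name=/) { sub(/^name=/, "", $i); print $i }
    }'
}

leader_name <<'EOF'
31800ab6b566b2b: name=node1 peerURLs=https://172.16.7.151:2380 clientURLs=https://172.16.7.151:2379 isLeader=false
9a0745d96695eec6: name=node3 peerURLs=https://172.16.7.153:2380 clientURLs=https://172.16.7.153:2379 isLeader=false
e64edc68e5e81b55: name=node2 peerURLs=https://172.16.7.152:2380 clientURLs=https://172.16.7.152:2379 isLeader=true
EOF
```

For the sample above this prints `node2`.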

(3) Remove a member

    # To update a member's IP (peerURLs) you first need to know that member's ID
    # etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" member list
    31800ab6b566b2b: name=node1 peerURLs=https://172.16.7.151:2380 clientURLs=https://172.16.7.151:2379 isLeader=false
    9a0745d96695eec6: name=node3 peerURLs=https://172.16.7.153:2380 clientURLs=https://172.16.7.153:2379 isLeader=false
    e64edc68e5e81b55: name=node2 peerURLs=https://172.16.7.152:2380 clientURLs=https://172.16.7.152:2379 isLeader=true
    # Remove a member
    # etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" member remove 9a0745d96695eec6

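The Kubernetes master node contains the following components:

At present these three components have to be deployed on the same machine.

kube-scheduler, kube-controller-manager, and kube-apiserver are closely related in function;

only one kube-scheduler process and one kube-controller-manager process can be active at a time. If several are run, a leader has to be chosen by election.

1. TLS certificate files

Check the certificates generated earlier.

    [root@node1 kubernetes]# ls /etc/kubernetes/ssl
    admin-key.pem  admin.pem  ca-key.pem  ca.pem  kube-proxy-key.pem  kube-proxy.pem  kubernetes-key.pem  kubernetes.pem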

2. Download the binaries

There are two ways to download them.

Option 1:

    [root@node1 local]# cd /opt/
    [root@node1 opt]# wget https://github.com/kubernetes/kubernetes/releases/download/v1.6.0/kubernetes.tar.gz
    [root@node1 opt]# tar zxf kubernetes.tar.gz
    [root@node1 opt]# cd kubernetes/
    [root@node1 kubernetes]# ./cluster/get-kube-binaries.sh
    Kubernetes release: v1.6.0
    Server: linux/amd64 (to override, set KUBERNETES_SERVER_ARCH)
    Client: linux/amd64 (autodetected)
    Will download kubernetes-server-linux-amd64.tar.gz from https://storage.googleapis.com/kubernetes-release/release/v1.6.0
    Will download and extract kubernetes-client-linux-amd64.tar.gz from https://storage.googleapis.com/kubernetes-release/release/v1.6.0
    Is this ok? [Y]/n
    y
    ...

Option 2:

    wget https://dl.k8s.io/v1.6.0/kubernetes-server-linux-amd64.tar.gz
    tar -xzvf kubernetes-server-linux-amd64.tar.gz
    ...
    cd kubernetes
    tar -xzvf kubernetes-src.tar.gz

Copy the binaries to the target path:

    [root@node1 kubernetes]# pwd
    /opt/kubernetes
    [root@node1 kubernetes]# cd server/
    [root@node1 server]# cp -r kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} /usr/local/bin/

3. Configure and start kube-apiserver

(1) Create the kube-apiserver service file

Create kube-apiserver.service under /usr/lib/systemd/system/ with the following content:

    [Unit]
    Description=Kubernetes API Service
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes
    After=network.target
    After=etcd.service

    [Service]
    EnvironmentFile=-/etc/kubernetes/config
    EnvironmentFile=-/etc/kubernetes/apiserver
    ExecStart=/usr/local/bin/kube-apiserver \
            $KUBE_LOGTOSTDERR \
            $KUBE_LOG_LEVEL \
            $KUBE_ETCD_SERVERS \
            $KUBE_API_ADDRESS \
            $KUBE_API_PORT \
            $KUBELET_PORT \
            $KUBE_ALLOW_PRIV \
            $KUBE_SERVICE_ADDRESSES \
            $KUBE_ADMISSION_CONTROL \
            $KUBE_API_ARGS
    Restart=on-failure
    Type=notify
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

The /etc/kubernetes/config file referenced above contains:

    ###
    # kubernetes system config
    #
    # The following values are used to configure various aspects of all
    # kubernetes services, including
    #
    #   kube-apiserver.service
    #   kube-controller-manager.service
    #   kube-scheduler.service
    #   kubelet.service
    #   kube-proxy.service
    # logging to stderr means we get it in the systemd journal
    KUBE_LOGTOSTDERR="--logtostderr=true"

    # journal message level, 0 is debug
    KUBE_LOG_LEVEL="--v=0"

    # Should this cluster be allowed to run privileged docker containers
    KUBE_ALLOW_PRIV="--allow-privileged=true"

    # How the controller-manager, scheduler, and proxy find the apiserver
    #KUBE_MASTER="--master=http://domainName:8080"
    KUBE_MASTER="--master=http://172.16.7.151:8080"

This configuration file is shared by kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, and kube-proxy.

Create the apiserver configuration file /etc/kubernetes/apiserver:

    ###
    ## kubernetes system config
    ##
    ## The following values are used to configure the kube-apiserver
    ##
    #
    ## The address on the local server to listen to.
    #KUBE_API_ADDRESS="--insecure-bind-address=sz-pg-oam-docker-test-001.tendcloud.com"
    KUBE_API_ADDRESS="--advertise-address=172.16.7.151 --bind-address=172.16.7.151 --insecure-bind-address=172.16.7.151"
    #
    ## The port on the local server to listen on.
    #KUBE_API_PORT="--port=8080"
    #
    ## Port minions listen on
    #KUBELET_PORT="--kubelet-port=10250"
    #
    ## Comma separated list of nodes in the etcd cluster
    KUBE_ETCD_SERVERS="--etcd-servers=https://172.16.7.151:2379,https://172.16.7.152:2379,https://172.16.7.153:2379"
    #
    ## Address range to use for services
    KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
    #
    ## default admission control policies
    KUBE_ADMISSION_CONTROL="--admission-control=ServiceAccount,NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"
    #
    ## Add your own!
    KUBE_API_ARGS="--authorization-mode=RBAC --runtime-config=rbac.authorization.k8s.io/v1beta1 --kubelet-https=true --experimental-bootstrap-token-auth --token-auth-file=/etc/kubernetes/token.csv --service-node-port-range=30000-32767 --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem --client-ca-file=/etc/kubernetes/ssl/ca.pem --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem --etcd-cafile=/etc/kubernetes/ssl/ca.pem --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem --enable-swagger-ui=true --apiserver-count=3 --audit-log-maxage=30 --audit-log-maxbackup=3 --audit-log-maxsize=100 --audit-log-path=/var/lib/audit.log --event-ttl=1h"
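The `--service-cluster-ip-range=10.254.0.0/16` value ties back to the `10.254.0.1` entry in the hosts list of kubernetes-csr.json: the built-in `kubernetes` Service is assigned the first address of the service range, so that address has to be covered by the apiserver certificate. A minimal sketch of the arithmetic, assuming the range is written with its network address (as it is here); the function name is illustrative:

```shell
# Print the first usable address of a service CIDR given as NETWORK/LEN.
# Assumes the part before the slash is the network address, e.g. 10.254.0.0/16.
first_service_ip() {
    net=${1%/*}
    echo "${net%.*}.$(( ${net##*.} + 1 ))"
}

first_service_ip 10.254.0.0/16   # prints 10.254.0.1
```

If you change the service range, the corresponding first address needs to go into the certificate's hosts list as well.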

[Notes]:

(2) Start kube-apiserver

    # systemctl daemon-reload
    # systemctl enable kube-apiserver
    # systemctl start kube-apiserver

You can watch the log while it starts:

    # tail -f /var/log/messages

4. Configure and start kube-controller-manager

(1) Create the kube-controller-manager service file

Create kube-controller-manager.service under /usr/lib/systemd/system/ with the following content:

    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    EnvironmentFile=-/etc/kubernetes/config
    EnvironmentFile=-/etc/kubernetes/controller-manager
    ExecStart=/usr/local/bin/kube-controller-manager \
            $KUBE_LOGTOSTDERR \
            $KUBE_LOG_LEVEL \
            $KUBE_MASTER \
            $KUBE_CONTROLLER_MANAGER_ARGS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

Create the kube-controller-manager configuration file /etc/kubernetes/controller-manager:

    # vim /etc/kubernetes/controller-manager
    ###
    # The following values are used to configure the kubernetes controller-manager

    # defaults from config and apiserver should be adequate

    # Add your own!
    KUBE_CONTROLLER_MANAGER_ARGS="--address=127.0.0.1 --service-cluster-ip-range=10.254.0.0/16 --cluster-name=kubernetes --cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem --cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem --service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem --root-ca-file=/etc/kubernetes/ssl/ca.pem --leader-elect=true"

[Notes]:

    # kubectl get componentstatuses
    NAME                 STATUS      MESSAGE                                                                                        ERROR
    scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
    controller-manager   Healthy     ok
    etcd-2               Unhealthy   Get http://172.20.0.113:2379/health: malformed HTTP response "\x15\x03\x01\x00\x02\x02"
    etcd-0               Healthy     {"health": "true"}
    etcd-1               Healthy     {"health": "true"}

Reference: https://github.com/kubernetes-incubator/bootkube/issues/64

(2) Start kube-controller-manager

    # systemctl daemon-reload
    # systemctl enable kube-controller-manager
    # systemctl start kube-controller-manager

5. Configure and start kube-scheduler

(1) Create the kube-scheduler service file

Create kube-scheduler.service under /usr/lib/systemd/system/ with the following content:

    [Unit]
    Description=Kubernetes Scheduler Plugin
    Documentation=https://github.com/GoogleCloudPlatform/kubernetes

    [Service]
    EnvironmentFile=-/etc/kubernetes/config
    EnvironmentFile=-/etc/kubernetes/scheduler
    ExecStart=/usr/local/bin/kube-scheduler \
            $KUBE_LOGTOSTDERR \
            $KUBE_LOG_LEVEL \
            $KUBE_MASTER \
            $KUBE_SCHEDULER_ARGS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target

Create the kube-scheduler configuration file /etc/kubernetes/scheduler:

    # vim /etc/kubernetes/scheduler
    ###
    # kubernetes scheduler config

    # default config should be adequate

    # Add your own!
    KUBE_SCHEDULER_ARGS="--leader-elect=true --address=127.0.0.1"

[Notes]:

(2) Start kube-scheduler

    # systemctl daemon-reload
    # systemctl enable kube-scheduler
    # systemctl start kube-scheduler

6. Verify the master node

    # kubectl get componentstatuses
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health": "true"}
    etcd-1               Healthy   {"health": "true"}
    etcd-2               Healthy   {"health": "true"}
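When this check is scripted, it is enough to look at the STATUS column. The sketch below lists every component whose status is not `Healthy`, skipping the header row. `unhealthy_components` is an illustrative name, and the sample input is the failing output shown earlier in the kube-controller-manager section, so the parsing has something to report.

```shell
# Print the NAME of every component whose STATUS column is not "Healthy".
# Expects `kubectl get componentstatuses` output (with header) on stdin.
unhealthy_components() {
    awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

unhealthy_components <<'EOF'
NAME                 STATUS      MESSAGE                                                                                        ERROR
scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: getsockopt: connection refused
controller-manager   Healthy     ok
etcd-2               Unhealthy   Get http://172.20.0.113:2379/health: malformed HTTP response "\x15\x03\x01\x00\x02\x02"
etcd-0               Healthy     {"health": "true"}
etcd-1               Healthy     {"health": "true"}
EOF
```

For this sample it prints `scheduler` and `etcd-2`; for the all-healthy output above it prints nothing.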

kubernetes node 节点包含如下组件:

1. 安装Docker

参见之前的文章《Docker镜像和容器》。

2. 安装配置Flanneld

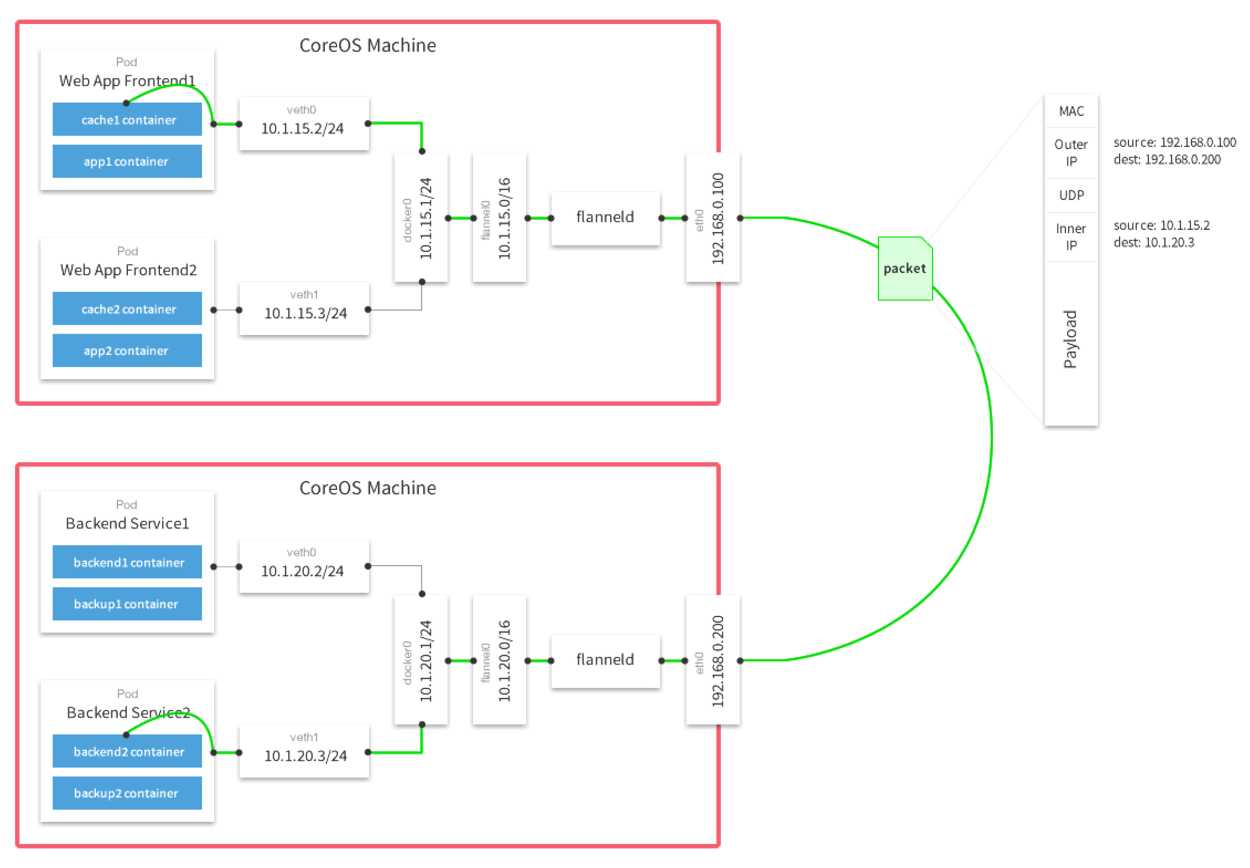

(1)Flannel介绍

在Flannel的GitHub页面有如下的一张原理图:

(2)安装配置flannel

我这里使用yum安装,安装的版本是0.7.1。集群中的3台node都需要安装配置flannel。

# yum install -y flannel # rpm -ql flannel /etc/sysconfig/flanneld /run/flannel /usr/bin/flanneld /usr/bin/flanneld-start /usr/lib/systemd/system/docker.service.d/flannel.conf /usr/lib/systemd/system/flanneld.service /usr/lib/tmpfiles.d/flannel.conf /usr/libexec/flannel /usr/libexec/flannel/mk-docker-opts.sh ...

修改flannel配置文件:

# vim /etc/sysconfig/flanneld # Flanneld configuration options # etcd url location. Point this to the server where etcd runs FLANNEL_ETCD_ENDPOINTS="https://172.16.7.151:2379,https://172.16.7.152:2379,https://172.16.7.153:2379" # etcd config key. This is the configuration key that flannel queries # For address range assignment FLANNEL_ETCD_PREFIX="/kube-centos/network" # Any additional options that you want to pass #FLANNEL_OPTIONS="" FLANNEL_OPTIONS="-etcd-cafile=/etc/kubernetes/ssl/ca.pem -etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem -etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem"

【说明】:

(3) Initialize the flannel network data in etcd

flanneld on the different nodes relies on a single etcd cluster as its central configuration service; etcd guarantees that flanneld sees the same configuration on every node. Each flanneld also watches etcd for changes, so it learns about nodes joining or leaving the cluster in real time.

Run the following commands to allocate the IP address range for docker:

    # etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" mkdir /kube-centos/network
    # etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" mk /kube-centos/network/config '{"Network": "172.30.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" }}'
    {"Network": "172.30.0.0/16", "SubnetLen": 24, "Backend": { "Type": "vxlan" }}
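The `Network` and `SubnetLen` values determine how many hosts the overlay can serve: flannel hands each host one /24 out of the /16. A quick sketch of that arithmetic (function names are illustrative; the prefix lengths are the ones from the config above):

```shell
# Number of per-host subnets that fit in the flannel network.
# usage: subnet_count NETWORK_PREFIX_LEN SUBNET_LEN
subnet_count() {
    echo $(( 1 << ($2 - $1) ))
}

# Number of addresses inside one per-host subnet.
# usage: addresses_per_subnet SUBNET_LEN
addresses_per_subnet() {
    echo $(( 1 << (32 - $1) ))
}

subnet_count 16 24        # prints 256
addresses_per_subnet 24   # prints 256
```

So a 172.30.0.0/16 network with SubnetLen 24 leaves room for 256 host subnets of 256 addresses each, far more than the 3 nodes used here.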

(4) Start flannel

Start flannel on all 3 nodes in the cluster:

    # systemctl daemon-reload
    # systemctl start flanneld

Once it has started, two files are generated under /run/flannel/. On node1, for example:

    # ls /run/flannel/
    docker  subnet.env
    # cd /run/flannel/
    [root@node1 flannel]# cat docker
    DOCKER_OPT_BIP="--bip=172.30.51.1/24"
    DOCKER_OPT_IPMASQ="--ip-masq=true"
    DOCKER_OPT_MTU="--mtu=1450"
    DOCKER_NETWORK_OPTIONS=" --bip=172.30.51.1/24 --ip-masq=true --mtu=1450"
    # cat subnet.env
    FLANNEL_NETWORK=172.30.0.0/16
    FLANNEL_SUBNET=172.30.51.1/24
    FLANNEL_MTU=1450
    FLANNEL_IPMASQ=false
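The relationship between the two files can be reproduced by hand: the `--bip` and `--mtu` options in the docker file are just the FLANNEL_SUBNET and FLANNEL_MTU values from subnet.env. The sketch below sources a copy of subnet.env and prints those two options. It is an illustration of the mapping, not a replacement for mk-docker-opts.sh; the function name and the temporary file are made up for this example.

```shell
# Source a flannel env file in a subshell and print the matching docker options.
# usage: docker_opts_from_env FILE
docker_opts_from_env() {
    ( . "$1" && echo "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}" )
}

# Work on a temporary copy of the subnet.env content shown above.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
FLANNEL_NETWORK=172.30.0.0/16
FLANNEL_SUBNET=172.30.51.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=false
EOF

docker_opts_from_env "$tmp"   # prints --bip=172.30.51.1/24 --mtu=1450
rm -f "$tmp"
```

Sourcing inside a subshell keeps the FLANNEL_* variables out of the calling shell.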

现在查询etcd中的内容可以看到:

# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints "https://172.16.7.151:2379" ls /kube-centos/network/subnets /kube-centos/network/subnets/172.30.51.0-24 /kube-centos/network/subnets/172.30.29.0-24 /kube-centos/network/subnets/172.30.19.0-24

设置docker0网桥的IP地址(集群中node节点都需要设置):

# source /run/flannel/subnet.env
# ifconfig docker0 $FLANNEL_SUBNET
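Sourcing subnet.env into the login shell leaves the FLANNEL_* variables behind in that session. A minimal sketch that reads the value in a subshell instead; flannel_bridge_cidr is a hypothetical helper name, and the default path is the one shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print FLANNEL_SUBNET from a flannel env file
# without leaking the FLANNEL_* variables into the calling shell.
flannel_bridge_cidr() {
  local env_file="${1:-/run/flannel/subnet.env}"
  # The parentheses start a subshell, so the sourced variables vanish afterwards.
  ( . "$env_file" && printf '%s\n' "$FLANNEL_SUBNET" )
}

# Usage on a node where flanneld is already running (commented out here):
# ifconfig docker0 "$(flannel_bridge_cidr)"
```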

This puts docker0 and the flannel bridge in the same subnet. The network interfaces on node1:

docker0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 172.30.51.1  netmask 255.255.255.0  broadcast 172.30.51.255
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.30.51.0  netmask 255.255.255.255  broadcast 0.0.0.0

Restart docker:

# systemctl restart docker

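[Note]: In my tests, restarting docker 17.06.1-ce resets the docker0 bridge to 172.17.0.1; docker 1.12.6 did not show this problem.

To reconfigure flannel, first stop flanneld, clean up the data in etcd, run ifconfig flannel.1 down, and then start flanneld again. It allocates a new subnet and brings the flannel.1 device back up.

(5) Test cross-host container communication

Start a container on node1 and on node2, then ping the other container's address: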

[root@node1 flannel]# docker run -i -t centos /bin/bash
[root@38be151deb71 /]# yum install net-tools -y
[root@38be151deb71 /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.30.51.2  netmask 255.255.255.0  broadcast 0.0.0.0
[root@node2 flannel]# docker run -i -t centos /bin/bash
[root@90e85c215fda /]# yum install net-tools -y
[root@90e85c215fda /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.30.29.2  netmask 255.255.255.0  broadcast 0.0.0.0
[root@90e85c215fda /]# ping 172.16.51.2
PING 172.16.51.2 (172.16.51.2) 56(84) bytes of data.
64 bytes from 172.16.51.2: icmp_seq=1 ttl=254 time=1.00 ms
64 bytes from 172.16.51.2: icmp_seq=2 ttl=254 time=1.29 ms

Note: the recorded ping targets 172.16.51.2, while the container on node1 reported 172.30.51.2. To test the flannel overlay itself, ping the other container's own address (here 172.30.51.2).

(6) Supplement: installing flannel from the binary package

Download flannel release 0.7.1 from the release page https://github.com/coreos/flannel/releases and upload the file to /opt/flannel/ on the server.

# mkdir flannel
# cd flannel/
# tar xf flannel-v0.7.1-linux-amd64.tar
# ls
flanneld  flannel-v0.7.1-linux-amd64.tar  mk-docker-opts.sh  README.md

mk-docker-opts.sh generates Docker daemon options based on the flannel env file.

Running ./mk-docker-opts.sh -i generates two environment variable files.

The flannel documentation has a section on Docker integration:

Docker daemon accepts --bip argument to configure the subnet of the docker0 bridge. It also accepts --mtu to set the MTU for docker0 and veth devices that it will be creating.

Because flannel writes out the acquired subnet and MTU values into a file, the script starting Docker can source in the values and pass them to Docker daemon:

source /run/flannel/subnet.env
docker daemon --bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU} &

Systemd users can use EnvironmentFile directive in the .service file to pull in /run/flannel/subnet.env
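As a rough sketch of the systemd approach the flannel documentation mentions, the function below writes a drop-in file that loads /run/flannel/subnet.env and passes the values to the Docker daemon. Several things here are assumptions and not taken from this article: the helper name write_flannel_dropin, the drop-in file name flannel.conf, and the daemon path /usr/bin/dockerd (packaged units may use a different binary and extra options, which this override would replace).

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a systemd drop-in for docker.service into the
# directory given as $1 (normally /etc/systemd/system/docker.service.d).
write_flannel_dropin() {
  local dropin_dir="$1"
  mkdir -p "$dropin_dir"
  # The quoted 'EOF' stops the shell from expanding ${...}; systemd expands
  # these variables itself from the EnvironmentFile when the unit starts.
  cat > "$dropin_dir/flannel.conf" <<'EOF'
[Service]
EnvironmentFile=/run/flannel/subnet.env
# An empty ExecStart= clears the packaged command before setting a new one.
ExecStart=
# Assumption: the daemon binary lives at /usr/bin/dockerd.
ExecStart=/usr/bin/dockerd --bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}
EOF
}

# Usage (commented out because it changes the host's docker unit):
# write_flannel_dropin /etc/systemd/system/docker.service.d
# systemctl daemon-reload && systemctl restart docker
```

Check the packaged docker.service first and carry over any options you still need, since the drop-in replaces the whole start command.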

3. Install and configure kubelet

When kubelet starts, it sends a TLS bootstrapping request to kube-apiserver. For that to work, the kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper cluster role; only then does kubelet have permission to create certificate signing requests:

# cd /etc/kubernetes
[root@node1 kubernetes]# kubectl create clusterrolebinding kubelet-bootstrap \
>   --clusterrole=system:node-bootstrapper \
>   --user=kubelet-bootstrap
clusterrolebinding "kubelet-bootstrap" created

[Note]: This step only needs to be run once, on one machine in the Kubernetes cluster.

[Notes]:

(1) Download the latest kubelet and kube-proxy binaries

The binaries were already downloaded when the Kubernetes master was installed; they only need to be copied to the right directory.

[root@node1 kubernetes]# cd /opt/kubernetes/server/kubernetes/server/bin/
[root@node1 bin]# scp -p kubelet root@172.16.7.152:/usr/local/bin/
[root@node1 bin]# scp -p kube-proxy root@172.16.7.152:/usr/local/bin/
[root@node1 bin]# scp -p kubelet root@172.16.7.153:/usr/local/bin/
[root@node1 bin]# scp -p kube-proxy root@172.16.7.153:/usr/local/bin/

(2) Configure kubelet

The following steps must be run on every Kubernetes node in the cluster. node1 is used as the example:

a. Create the kubelet service unit file:

Create the file kubelet.service under /usr/lib/systemd/system/:

[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/local/bin/kubelet \
            $KUBE_LOGTOSTDERR \
            $KUBE_LOG_LEVEL \
            $KUBELET_API_SERVER \
            $KUBELET_ADDRESS \
            $KUBELET_PORT \
            $KUBELET_HOSTNAME \
            $KUBE_ALLOW_PRIV \
            $KUBELET_POD_INFRA_CONTAINER \
            $KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target

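b. Create the kubelet configuration file

Create the kubelet working directory (required; kubelet will not start without it):

# mkdir /var/lib/kubelet

Create the kubelet configuration file /etc/kubernetes/kubelet: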

###
## kubernetes kubelet (minion) config
#
## The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=172.16.7.151"
#
## The port for the info server to serve on
#KUBELET_PORT="--port=10250"
#
## You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=172.16.7.151"
#
## location of the api-server
KUBELET_API_SERVER="--api-servers=http://172.16.7.151:8080"
#
## pod infrastructure container
#KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=sz-pg-oam-docker-hub-001.tendcloud.com/library/pod-infrastructure:rhel7"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure"
#
## Add your own!
KUBELET_ARGS="--cgroup-driver=systemd --cluster-dns=10.254.0.2 --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig --require-kubeconfig --cert-dir=/etc/kubernetes/ssl --cluster-domain=cluster.local. --hairpin-mode promiscuous-bridge --serialize-image-pulls=false"

[Note]: Change the IP addresses in the configuration file to the IP of each node (except the address in --api-servers=http://172.16.7.151:8080, which stays unchanged).
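Editing the copied file by hand is easy to forget. The sketch below rewrites only the two node-specific lines and leaves the --api-servers line alone. retarget_kubelet_config is a hypothetical helper name; it assumes the file uses the KUBELET_ADDRESS and KUBELET_HOSTNAME variable names shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a copy of a kubelet env file with the
# node-specific IP replaced. $1 = this node's IP, $2 = source config file.
retarget_kubelet_config() {
  local node_ip="$1" config_file="$2"
  # Only the address and hostname-override lines are rewritten;
  # KUBELET_API_SERVER and everything else pass through unchanged.
  sed -e "s|^KUBELET_ADDRESS=.*|KUBELET_ADDRESS=\"--address=${node_ip}\"|" \
      -e "s|^KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=${node_ip}\"|" \
      "$config_file"
}

# Usage on node2, after copying node1's file to /tmp/kubelet.node1 (commented out):
# retarget_kubelet_config 172.16.7.152 /tmp/kubelet.node1 > /etc/kubernetes/kubelet
```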

[Notes]:

(3) Start kubelet

# systemctl daemon-reload

# systemctl enable kubelet

# systemctl start kubelet

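(4) Approve the kubelet TLS certificate request

On first start, kubelet sends a certificate signing request to kube-apiserver. Kubernetes adds the Node to the cluster only after that request is approved.

a. List the pending CSRs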

# kubectl get csr

NAME AGE REQUESTOR CONDITION

csr-fv3bj 49s kubelet-bootstrap Pending

b. Approve the CSR

# kubectl certificate approve csr-fv3bj
certificatesigningrequest "csr-fv3bj" approved
[root@node1 kubernetes]# kubectl get csr
NAME        AGE       REQUESTOR           CONDITION
csr-fv3bj   42m       kubelet-bootstrap   Approved,Issued
# kubectl get nodes
NAME           STATUS    AGE       VERSION
172.16.7.151   Ready     18s       v1.6.0
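With several nodes joining, copying each CSR name by hand gets tedious. A minimal sketch that picks the pending names out of the kubectl get csr table; pending_csr_names is a hypothetical helper, and it assumes the column layout shown above (NAME first, CONDITION last).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose CONDITION column is exactly "Pending".
pending_csr_names() {
  # NR > 1 skips the header row; $NF is the last column (CONDITION).
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage against a live cluster (commented out). xargs -r is a GNU option
# that skips the command when there is nothing to approve:
# kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```

Approving everything that is pending is only reasonable when you know which nodes are joining; otherwise review the list first.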

c. Inspect the automatically generated kubelet kubeconfig file and key pairs

[root@node1 kubernetes]# ls -l /etc/kubernetes/kubelet.kubeconfig
-rw-------. 1 root root 2215 Sep 13 09:04 /etc/kubernetes/kubelet.kubeconfig
[root@node1 kubernetes]# ls -l /etc/kubernetes/ssl/kubelet*
-rw-r--r--. 1 root root 1046 Sep 13 09:04 /etc/kubernetes/ssl/kubelet-client.crt
-rw-------. 1 root root  227 Sep 13 09:02 /etc/kubernetes/ssl/kubelet-client.key
-rw-r--r--. 1 root root 1111 Sep 13 09:04 /etc/kubernetes/ssl/kubelet.crt
-rw-------. 1 root root 1675 Sep 13 09:04 /etc/kubernetes/ssl/kubelet.key

After the same steps are completed on the other Kubernetes nodes, the cluster looks like this:

# kubectl get csr
NAME        AGE       REQUESTOR           CONDITION
csr-5n72m   3m        kubelet-bootstrap   Approved,Issued
csr-clwzj   16m       kubelet-bootstrap   Approved,Issued
csr-fv3bj   4h        kubelet-bootstrap   Approved,Issued
# kubectl get nodes
NAME           STATUS    AGE       VERSION
172.16.7.151   Ready     4h        v1.6.0
172.16.7.152   Ready     6m        v1.6.0
172.16.7.153   Ready     12s       v1.6.0

[Problem]: Make sure the IP address in /etc/kubernetes/kubelet is correct on every node, otherwise the node cannot join. I copied /etc/kubernetes/kubelet from node1 to node2 and started kubelet without changing the IP, so the file still contained node1's address and node2 never joined the cluster. I recovered as follows:

[root@node2 ~]# systemctl stop kubelet
[root@node2 ~]# cd /etc/kubernetes
[root@node2 kubernetes]# rm -f kubelet.kubeconfig
[root@node2 kubernetes]# rm -rf ~/.kube/cache
# Fix the IP address in /etc/kubernetes/kubelet
[root@node2 kubernetes]# vim /etc/kubernetes/kubelet
[root@node2 ~]# systemctl start kubelet

Only then, when kubelet starts again, does kube-apiserver receive the certificate signing request.

4. Configure kube-proxy

Step 3 above already copied kube-proxy to /usr/local/bin/ on the Kubernetes nodes; the configuration follows. Every Kubernetes node needs the steps below.

(1) Create the kube-proxy service unit file

Create kube-proxy.service under /usr/lib/systemd/system/:

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/local/bin/kube-proxy \
            $KUBE_LOGTOSTDERR \
            $KUBE_LOG_LEVEL \
            $KUBE_MASTER \
            $KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

(2) Create the kube-proxy configuration file /etc/kubernetes/proxy

[Note]: The IP address must be changed for each Kubernetes node. node1 is used as the example:

# vim /etc/kubernetes/proxy
###
# kubernetes proxy config
# default config should be adequate
# Add your own!
KUBE_PROXY_ARGS="--bind-address=172.16.7.151 --hostname-override=172.16.7.151 --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig --cluster-cidr=10.254.0.0/16"

[Notes]:

(3) Start kube-proxy

# systemctl daemon-reload
# systemctl enable kube-proxy
# systemctl start kube-proxy

5. Verification

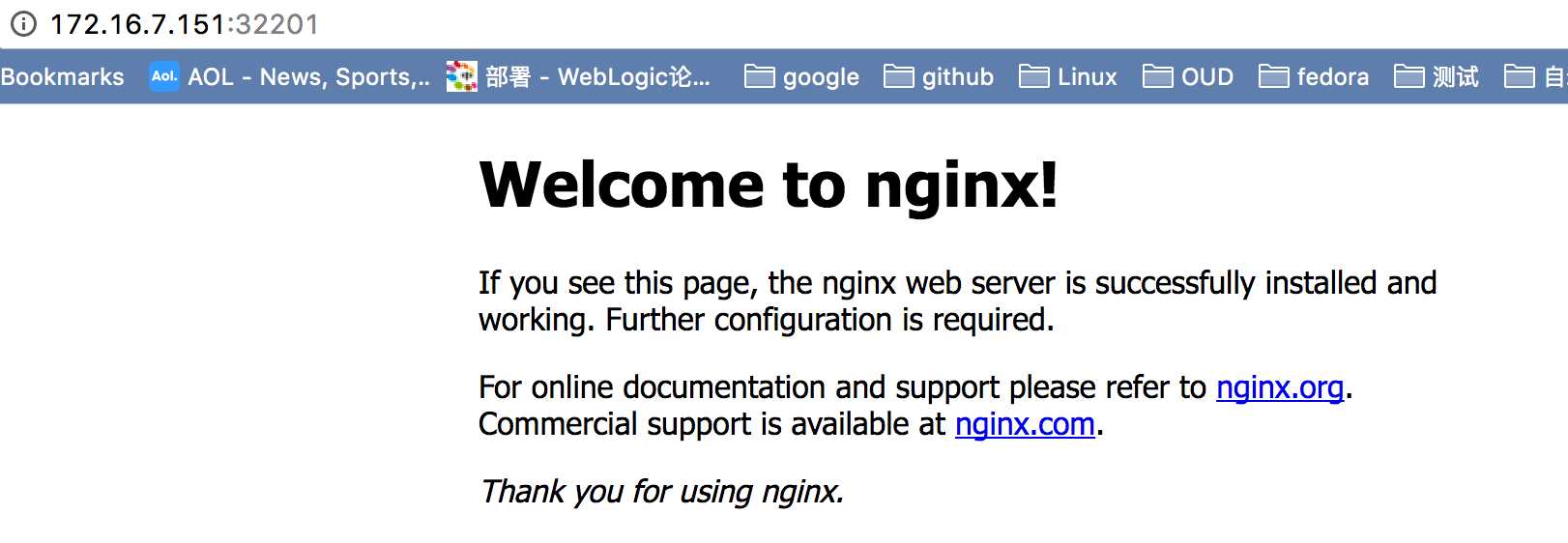

Create an nginx service to check that the cluster works.

# kubectl run nginx --replicas=2 --labels="run=load-balancer-example" --image=docker.io/nginx:latest --port=80
deployment "nginx" created
# kubectl expose deployment nginx --type=NodePort --name=example-service
service "example-service" exposed
# kubectl describe svc example-service
Name:              example-service
Namespace:         default
Labels:            run=load-balancer-example
Annotations:       <none>
Selector:          run=load-balancer-example
Type:              NodePort
IP:                10.254.67.61
Port:              <unset>  80/TCP
NodePort:          <unset>  32201/TCP
Endpoints:         172.30.32.2:80,172.30.87.2:80
Session Affinity:  None
Events:            <none>
# kubectl get all
NAME                        READY     STATUS    RESTARTS   AGE
po/nginx-1931613429-nlsj1   1/1       Running   0          5m
po/nginx-1931613429-xr7zk   1/1       Running   0          5m
NAME                  CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
svc/example-service   10.254.67.61   <nodes>       80:32201/TCP   1m
svc/kubernetes        10.254.0.1     <none>        443/TCP        5h
NAME           DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deploy/nginx   2         2         2            2           5m
NAME                  DESIRED   CURRENT   READY     AGE
rs/nginx-1931613429   2         2         2         5m
# curl "10.254.67.61:80"
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

Opening 172.16.7.151:32201, 172.16.7.152:32201, or 172.16.7.153:32201 in a browser returns the nginx page.
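The same check can be done from a shell. The sketch below only builds the URL list, so it is safe to run anywhere; nodeport_urls is a hypothetical helper, and the port and node addresses are the ones from this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one URL per node for a given NodePort.
# $1 = node port, remaining arguments = node IP addresses.
nodeport_urls() {
  local port="$1" node
  shift
  for node in "$@"; do
    printf 'http://%s:%s/\n' "$node" "$port"
  done
}

# Usage against the live cluster (commented out); curl prints the HTTP
# status code returned by each node:
# for url in $(nodeport_urls 32201 172.16.7.151 172.16.7.152 172.16.7.153); do
#   curl -s -o /dev/null -w "%{http_code} ${url}\n" "$url"
# done
```

Every node should answer, not just the ones running an nginx pod, because kube-proxy forwards the NodePort on each node.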

List the running containers (one pod runs on node1 and one on node2):

# docker ps
CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES
7d2ef8e34e43   docker.io/nginx@sha256:fc6d2ef47e674a9ffb718b7ac361ec4e421e3a0ef2c93df79abbe4e9ffb5fa08   "nginx -g 'daemon off"   40 minutes ago   Up 40 minutes   k8s_nginx_nginx-1931613429-xr7zk_default_c628f12f-9912-11e7-9acc-005056b7609a_0
5bbb98fba623   registry.access.redhat.com/rhel7/pod-infrastructure   "/usr/bin/pod"   42 minutes ago   Up 42 minutes   k8s_POD_nginx-1931613429-xr7zk_default_c628f12f-9912-11e7-9acc-005056b7609a_0

To delete the deployment just created:

# kubectl get deployments
NAME      DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
nginx     2         2         2            0           2m
# kubectl delete deployment nginx
deployment "nginx" deleted

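1. What kube-dns is

The previous step created an Nginx deployment, which produced two Pods running the nginx service. Once the Pods are running, look up their IPs and access the Nginx service from inside the k8s cluster through podIP and containerPort.

Get the Pod IPs:

# kubectl get pod -o yaml -l run=load-balancer-example|grep podIP
    podIP: 172.30.32.2
    podIP: 172.30.87.2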
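If the bare addresses are needed in a script, the grep output still carries the podIP: label. A small sketch that strips it; pod_ips_from_yaml is a hypothetical helper that reads the YAML from stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod -o yaml` output on stdin and
# print only the pod IP addresses, one per line.
pod_ips_from_yaml() {
  # awk splits on whitespace, so leading indentation is ignored and
  # $1 is the key, $2 the value.
  awk '$1 == "podIP:" { print $2 }'
}

# Usage against the live cluster (commented out):
# kubectl get pod -o yaml -l run=load-balancer-example | pod_ips_from_yaml
# kubectl can also do the extraction itself with a jsonpath expression:
# kubectl get pod -l run=load-balancer-example -o jsonpath='{.items[*].status.podIP}'
```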

The Nginx service can then be reached from any node of the Kubernetes cluster through the podIP.

# curl "172.30.32.2:80"

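This approach has several problems:

A k8s Service solves them. A Service gives a group of Pods (selected by labels) a single entry point, and provides load balancing and automatic service discovery for them.

So a service was created right afterwards: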

# kubectl expose deployment nginx --type=NodePort --name=example-service

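After creating it, you still need the Service's Cluster-IP, combined with the Port, to access the Nginx service.

Get the IP:

# kubectl get service example-service
NAME              CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
example-service   10.254.67.61   <nodes>       80:32201/TCP   1h

Access the Service from inside the cluster: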

# curl "10.254.67.61:80"

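From outside the Kubernetes cluster, it can only be reached through http://node-ip:32201.

Although a Service solves service discovery and load balancing for Pods, a similar problem remains: the Service IP is not known in advance, so programs or configuration still have to be changed. kube-dns exists to solve exactly this.

kube-dns handles Service discovery: k8s registers each Service name as a domain name in kube-dns, so a service can be reached by its Service name. Other applications can use the service name directly without caring about its actual IP address; the translation happens automatically. Translating between names and IPs is what a DNS system does.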
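The names kube-dns serves follow a fixed pattern: service name, namespace, the literal svc, then the cluster domain. A minimal sketch of that pattern; service_fqdn is a hypothetical helper, and the default domain cluster.local matches the --cluster-domain value used for kubelet in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the DNS name under which kube-dns publishes
# a Service. $1 = service name, $2 = namespace (default: default),
# $3 = cluster domain (default: cluster.local).
service_fqdn() {
  local name="$1" namespace="${2:-default}" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
}

# Example calls:
# service_fqdn kubernetes            prints kubernetes.default.svc.cluster.local
# service_fqdn kube-dns kube-system  prints kube-dns.kube-system.svc.cluster.local
```

Inside a Pod the short name is usually enough, because the search domains in /etc/resolv.conf complete it.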

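kube-dns is not a standalone system service but an addon, installed as a plugin. A Kubernetes cluster does not strictly require it (though installing it is strongly recommended). Think of it as an application running on the cluster, just a somewhat special one.

2. Install and configure kube-dns

Official yaml directory: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns

kube-dns can be set up in two ways: before 1.3 it used etcd + kube2sky + skydns; since 1.3 it can use kubedns + dnsmasq.

The addon is deployed with Kubernetes itself. kube-dns is really just a Pod running in the kube-system namespace, so it can be created by hand. The official configuration files use these images:

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.1
gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.1
gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.1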

(1) Download the yaml files

Download the four files kubedns-cm.yaml, kubedns-sa.yaml, kubedns-controller.yaml and kubedns-svc.yaml from https://github.com/rootsongjc/follow-me-install-kubernetes-cluster/tree/master/manifests/kubedns and upload them to /opt/kube-dns/.

# mkdir /opt/kube-dns
# cd /opt/kube-dns/
# ls kubedns-*
kubedns-cm.yaml  kubedns-controller.yaml  kubedns-sa.yaml  kubedns-svc.yaml

Edit kubedns-controller.yaml and change the image addresses to the TenxCloud registry:

index.tenxcloud.com/jimmy/k8s-dns-dnsmasq-nanny-amd64:1.14.1
index.tenxcloud.com/jimmy/k8s-dns-kube-dns-amd64:1.14.1
index.tenxcloud.com/jimmy/k8s-dns-sidecar-amd64:1.14.1
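The three image names differ from the official ones only in the registry prefix, so the edit can be done with one substitution. This is a sketch under the assumption that the downloaded file still references gcr.io/google_containers/; swap_dns_image_registry is a hypothetical helper that filters stdin to stdout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the gcr.io registry prefix with the
# TenxCloud mirror prefix in whatever is piped through it.
swap_dns_image_registry() {
  # "|" is used as the sed delimiter because the prefixes contain "/".
  sed 's|gcr\.io/google_containers/|index.tenxcloud.com/jimmy/|g'
}

# Usage (commented out; writes a new file next to the original):
# swap_dns_image_registry < kubedns-controller.yaml > kubedns-controller.tenx.yaml
```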

(2) The predefined RoleBinding

The predefined RoleBinding system:kube-dns binds the kube-dns ServiceAccount in the kube-system namespace to the system:kube-dns Role, which has permission to access the DNS-related kube-apiserver APIs. (As the output below shows, both objects are cluster-scoped: a ClusterRoleBinding and a ClusterRole.)

[root@node1 ~]# kubectl get clusterrolebindings system:kube-dns -o yaml
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: 2017-09-14T00:46:08Z
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-dns
  resourceVersion: "56"
  selfLink: /apis/rbac.authorization.k8s.io/v1beta1/clusterrolebindingssystem%3Akube-dns
  uid: 18fa2aff-98e6-11e7-a153-005056b7609a
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-dns
subjects:
- kind: ServiceAccount
  name: kube-dns
  namespace: kube-system

The Pods defined in kubedns-controller.yaml use the kube-dns ServiceAccount defined in kubedns-sa.yaml, so they have permission to access the DNS-related kube-apiserver APIs.

(3) Configure the kube-dns ServiceAccount

No changes needed.

(4) Configure the kube-dns service

# diff kubedns-svc.yaml.base kubedns-svc.yaml
30c30
<   clusterIP: __PILLAR__DNS__SERVER__
---
>   clusterIP: 10.254.0.2
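The clusterIP set here is the address Pods will use as their DNS server, so it has to equal the --cluster-dns value passed to kubelet (10.254.0.2 in this article). A small sketch that compares the two files; all three function names are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first clusterIP value found in a Service yaml.
svc_cluster_ip() {
  awk '$1 == "clusterIP:" { print $2; exit }' "$1"
}

# Hypothetical helper: print the --cluster-dns value from a kubelet env file.
kubelet_cluster_dns() {
  # "--" ends grep's option parsing so the pattern may start with dashes.
  grep -o -- '--cluster-dns=[0-9.]*' "$1" | head -n 1 | cut -d= -f2
}

# Succeeds only when both values exist and are identical.
# $1 = Service yaml, $2 = kubelet env file.
dns_ip_matches() {
  local svc_ip
  svc_ip="$(svc_cluster_ip "$1")"
  [ -n "$svc_ip" ] && [ "$svc_ip" = "$(kubelet_cluster_dns "$2")" ]
}

# Usage on a node (commented out):
# dns_ip_matches /opt/kube-dns/kubedns-svc.yaml /etc/kubernetes/kubelet && echo "DNS IP consistent"
```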

[Notes]:

(5) Configure the kube-dns Deployment

# diff kubedns-controller.yaml.base kubedns-controller.yaml

[Notes]:

(6) Apply all the definition files

# pwd
/opt/kube-dns
# ls
kubedns-cm.yaml  kubedns-controller.yaml  kubedns-sa.yaml  kubedns-svc.yaml
# kubectl create -f .
configmap "kube-dns" created
deployment "kube-dns" created
serviceaccount "kube-dns" created
service "kube-dns" created

Check the kube-dns pods and containers created on the three nodes:

[root@node2 ~]# docker ps
CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES
9b1dbfde7eac   index.tenxcloud.com/jimmy/k8s-dns-sidecar-amd64@sha256:947271f3e08b1fd61c4b26478f08d3a8f10bbca90d4dec067e3b33be08066970   "/sidecar --v=2 --log"   4 hours ago   Up 4 hours   k8s_sidecar_kube-dns-351402727-6vnsj_kube-system_efb96c05-9928-11e7-9acc-005056b7609a_0
a455dc0a9b55   index.tenxcloud.com/jimmy/k8s-dns-dnsmasq-nanny-amd64@sha256:b253876345427dbd626b145897be51d87bfd535e2cd5d7d166deb97ea37701f8   "/dnsmasq-nanny -v=2 "   4 hours ago   Up 4 hours   k8s_dnsmasq_kube-dns-351402727-6vnsj_kube-system_efb96c05-9928-11e7-9acc-005056b7609a_0
7f18c10c8d60   index.tenxcloud.com/jimmy/k8s-dns-kube-dns-amd64@sha256:94426e872d1a4a0cf88e6c5cd928a1acbe1687871ae5fe91ed751593aa6052d3   "/kube-dns --domain=c"   4 hours ago   Up 4 hours   k8s_kubedns_kube-dns-351402727-6vnsj_kube-system_efb96c05-9928-11e7-9acc-005056b7609a_0
a6feb213296b   registry.access.redhat.com/rhel7/pod-infrastructure   "/usr/bin/pod"   4 hours ago   Up 4 hours   k8s_POD_kube-dns-351402727-6vnsj_kube-system_efb96c05-9928-11e7-9acc-005056b7609a_0

3. Check that kube-dns works

The first Pods above were started with kubectl run, but that command does not support every feature. For a complex setup, kubectl run needs one very long command line that is tedious to type, error-prone, impossible to save, and painful whenever escaping is involved. In most cases it is better to describe resources in yaml or json files and manage them with kubectl create or kubectl delete, for example kubectl create -f file.yaml. Note also that kubectl run does not create a Pod directly: it first creates a Deployment (replicas=1), and the Deployment then creates the Pod.

Create a new Deployment:

[root@node1 kube-dns]# vim my-nginx.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 2
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: docker.io/nginx:latest
        ports:
        - containerPort: 80

Expose the Deployment to create the my-nginx service:

# kubectl expose deploy my-nginx
service "my-nginx" exposed
# kubectl get services --all-namespaces |grep my-nginx
default       my-nginx     10.254.34.181   <none>        80/TCP          26s

Create another Pod, then check whether its /etc/resolv.conf contains the --cluster-dns and --cluster-domain values configured for kubelet, and whether the my-nginx service resolves to the Cluster IP 10.254.34.181.

[root@node1 kube-dns]# vim dns-test-busybox.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - image: busybox
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
  restartPolicy: Always

[root@node1 kube-dns]# kubectl create -f dns-test-busybox.yaml
pod "busybox" created
[root@node1 kube-dns]# kubectl exec -ti busybox -- nslookup kubernetes.default
Server:    10.254.0.2
Address 1: 10.254.0.2 kube-dns.kube-system.svc.cluster.local
Name:      kubernetes.default
Address 1: 10.254.0.1 kubernetes.default.svc.cluster.local
# kubectl exec -ti busybox -- ping my-nginx
PING my-nginx (10.254.34.181): 56 data bytes
# kubectl exec -ti busybox -- ping kubernetes
PING kubernetes (10.254.0.1): 56 data bytes
# kubectl exec -ti busybox -- ping kube-dns.kube-system.svc.cluster.local
PING kube-dns.kube-system.svc.cluster.local (10.254.0.2): 56 data bytes
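The /etc/resolv.conf check mentioned above can also be scripted. The sketch below reads a resolv.conf from stdin and succeeds only if it names the expected DNS server and lists the cluster domain among its search domains. resolv_conf_ok is a hypothetical helper; a trailing dot on the domain (as in --cluster-domain=cluster.local.) is ignored on both sides.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check a resolv.conf read from stdin.
# $1 = expected nameserver IP, $2 = expected search domain.
resolv_conf_ok() {
  awk -v ip="$1" -v dom="$2" '
    BEGIN { sub(/\.$/, "", dom) }                 # drop a trailing dot, if any
    $1 == "nameserver" && $2 == ip { ns = 1 }     # expected DNS server present
    $1 == "search" {
      for (i = 2; i <= NF; i++) {
        d = $i
        sub(/\.$/, "", d)
        if (d == dom) sd = 1                      # cluster domain is searched
      }
    }
    END { exit !(ns && sd) }                      # exit 0 only if both were seen
  '
}

# Usage against the busybox Pod created above (commented out):
# kubectl exec busybox -- cat /etc/resolv.conf | resolv_conf_ok 10.254.0.2 cluster.local && echo "resolv.conf OK"
```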

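The results show that service names resolve correctly.

Also, once you are running Kubernetes, it is better not to operate on the containers with docker commands directly.

Deploying a Kubernetes 1.6.0 cluster from binaries (with TLS enabled)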


Original article: http://www.cnblogs.com/zhaojiankai/p/7853525.html