Initialization

Welcome to the first assignment of the hyperparameter tuning specialization. It is very important that you regularize your model properly, because doing so can dramatically improve your results.

By completing this assignment you will:

- Understand different regularization methods that could help your model.

- Implement dropout and see it work on data.

- Recognize that a model without regularization gives you better accuracy on the training set, but not necessarily on the test set.

- Understand that you could combine dropout with other regularization techniques (for example, L2 regularization) on the same model.
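Since the list above mentions implementing dropout, here is a minimal sketch of inverted dropout in NumPy. It assumes activations are stored as a `(units, examples)` matrix; the names `dropout_forward`, `dropout_backward`, and `keep_prob` are illustrative and are not taken from the assignment's helper files. Kept units are scaled by `1 / keep_prob` so the expected activation stays the same, which means nothing needs rescaling at test time, when dropout is simply turned off.

```python
import numpy as np

# Hypothetical helper names for illustration; not part of init_utils.

def dropout_forward(A, keep_prob, rng):
    """Inverted dropout on an activation matrix A of shape (units, examples).

    Each entry is kept with probability keep_prob. Kept entries are divided
    by keep_prob so their expected value matches the original activation.
    Returns the dropped-out activations and the boolean mask used.
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    D = rng.random(A.shape) < keep_prob   # True = keep this unit for this example
    A_drop = (A * D) / keep_prob          # zero the dropped units, rescale the kept ones
    return A_drop, D


def dropout_backward(dA, D, keep_prob):
    """Apply the same mask and scaling to the upstream gradient dA."""
    return (dA * D) / keep_prob
```

For example, with `A = np.ones((4, 5))` and `keep_prob=0.8`, every entry of the output is either 0 or 1.25. The mask `D` is returned so the backward pass can zero and rescale exactly the same units.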

This assignment prepares you well for the upcoming assignment. Take your time to complete it and make sure you get the expected outputs when working through the different exercises. In some code blocks, you will find a "#GRADED FUNCTION: functionName" comment. Please do not modify it. After you are done, submit your work and check your results. You need to score 80% to pass. Good luck :) !


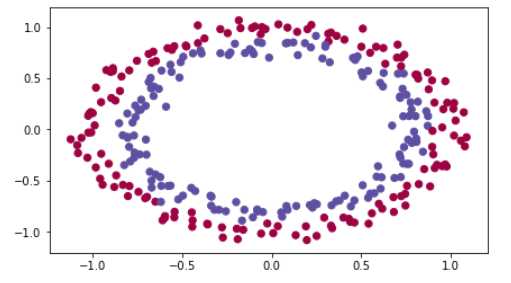

```python
import numpy as np
import matplotlib.pyplot as plt
import sklearn
import sklearn.datasets
from init_utils import sigmoid, relu, compute_loss, forward_propagation, backward_propagation
from init_utils import update_parameters, predict, load_dataset, plot_decision_boundary, predict_dec

%matplotlib inline
plt.rcParams['figure.figsize'] = (7.0, 4.0) # set default size of plots
plt.rcParams['image.interpolation'] = 'nearest'
plt.rcParams['image.cmap'] = 'gray'

# load image dataset: blue/red dots in circles
train_X, train_Y, test_X, test_Y = load_dataset()
```

【result】

You would like a classifier to separate the blue dots from the red dots.
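`load_dataset()` comes from the assignment's `init_utils` module, which is not reproduced here. To experiment without it, a NumPy-only stand-in can generate a similar "blue/red dots in circles" dataset. The function name `make_circles_dataset`, its defaults (`noise=0.05`, `factor=0.5`), the label convention (0 for the outer circle, 1 for the inner one), and the `(features, examples)` array layout are all assumptions for illustration, not the assignment's exact data.

```python
import numpy as np

def make_circles_dataset(m, noise=0.05, factor=0.5, seed=0):
    """Hypothetical stand-in for init_utils.load_dataset(): two noisy concentric circles.

    Returns X with shape (2, m), one column per example, and Y with shape (1, m),
    where label 0 marks the outer circle and label 1 the inner circle
    (an assumed convention for this sketch).
    """
    rng = np.random.default_rng(seed)
    m_outer = m // 2
    m_inner = m - m_outer

    # Evenly spaced angles around each circle.
    theta_outer = np.linspace(0, 2 * np.pi, m_outer, endpoint=False)
    theta_inner = np.linspace(0, 2 * np.pi, m_inner, endpoint=False)

    outer = np.vstack([np.cos(theta_outer), np.sin(theta_outer)])           # radius 1
    inner = factor * np.vstack([np.cos(theta_inner), np.sin(theta_inner)])  # radius = factor

    # Stack both circles column-wise and add Gaussian noise to every coordinate.
    X = np.hstack([outer, inner]) + noise * rng.standard_normal((2, m))
    Y = np.hstack([np.zeros(m_outer), np.ones(m_inner)]).reshape(1, m)
    return X, Y
```

For example, `make_circles_dataset(300)` returns arrays of shape `(2, 300)` and `(1, 300)`. Because one class completely surrounds the other, no straight line in the plane separates them, so the classifier has to learn a non-linear, roughly circular decision boundary.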

-------------------------------------------------------------------------------------------
