Multipath

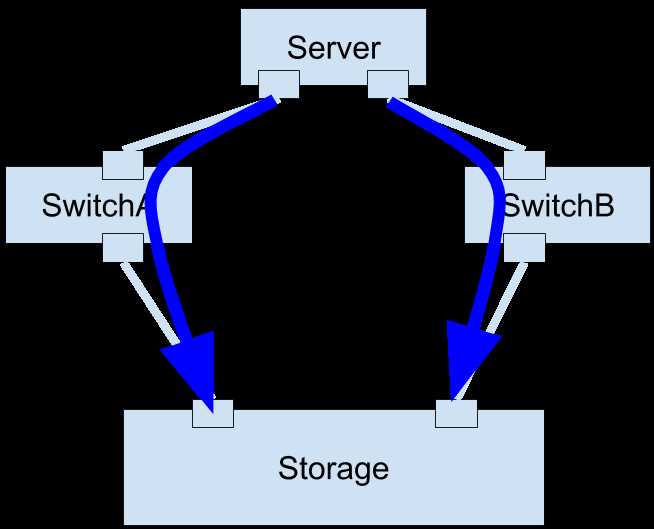

In a regular iSCSI initiator configuration, there is only a single path to the target. If that path fails, the initiator loses its connection to the target, which makes the path a single point of failure. To eliminate this drawback, we can introduce multipathing, which provides multiple paths to a single target, so that the failure of one path does not break the connection between the server and the target. Multipath can run in several modes, such as active-backup, load-balance, and round-robin.

We can log in to the same target twice through different portals on an iSCSI target node. The IP addresses of the portals can reside either in the same network or in different networks.

In practice, we just need to log in to the same target through different networks. For instance, in the picture above, SwitchA and SwitchB forward traffic for two different VLANs.

Once multipath is established, two block devices appear in the /dev/ directory. They are the same device, learned through different paths.

Here is an example of how to configure multipath. The target is discovered through both portals:

[root@node3 ~]# iscsiadm -m discovery -t st -p 172.19.0.254:3260

172.19.0.254:3260,1 iqn.2017-12.com.example:pp01

[root@node3 ~]# iscsiadm -m discovery -t st -p 172.18.0.254:3260

172.18.0.254:3260,1 iqn.2017-12.com.example:pp01
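
Discovery only lists the target. The two paths exist once the initiator has logged in through each portal. A minimal sketch of that login step follows, reusing the target name and portal addresses from the discovery output above. The `run` helper and the `DRY_RUN` variable are illustrative additions, not part of iscsiadm, and by default the script only prints the commands instead of executing them.

```shell
#!/bin/sh
# Log in to the same target through each portal so that two sessions (paths) exist.
# DRY_RUN=1 (the default in this sketch) only prints the commands; DRY_RUN=0 runs them.
TARGET="iqn.2017-12.com.example:pp01"
PORTALS="172.19.0.254:3260 172.18.0.254:3260"

# Hypothetical helper: print the command in dry-run mode, execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

login_all_portals() {
    for portal in $PORTALS; do
        run iscsiadm -m node -T "$TARGET" -p "$portal" --login
    done
}

login_all_portals
```

After a real run (DRY_RUN=0), `iscsiadm -m session` should list one session per portal.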

After logging in through both portals, the two block devices appear:

[root@node3 ~]# ll /dev/sd*

brw-rw----. 1 root disk 8, 0 Dec 12 07:39 /dev/sda

brw-rw----. 1 root disk 8, 16 Dec 12 07:39 /dev/sdb

Device Mapper

Creating multiple paths alone brings no benefit without Device Mapper, which provides the ability to handle the redundancy of multipath devices.

Here are the steps to configure a basic device mapper setup. Make sure the multipath devices already appear on your system.

First, install the device-mapper-multipath package. On many modern Linux distributions this package is installed by default. The examples here are based on RHEL 6.2.

yum -y install device-mapper-multipath

Then, start the multipath service:

service multipathd start

Check the device mapper status. You may see the errors below, saying that multipath.conf cannot be found. That is normal, because this file has to be created after the software is installed.

[root@instructor ~]# multipath -ll

Dec 17 15:44:45 | DM multipath kernel driver not loaded

Dec 17 15:44:45 | /etc/multipath.conf does not exist, blacklisting all devices.

Dec 17 15:44:45 | A sample multipath.conf file is located at

Dec 17 15:44:45 | /usr/share/doc/device-mapper-multipath-0.4.9/multipath.conf

Dec 17 15:44:45 | You can run /sbin/mpathconf to create or modify /etc/multipath.conf

Dec 17 15:44:45 | DM multipath kernel driver not loaded
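
The messages above point at two missing pieces: the dm_multipath kernel module and the /etc/multipath.conf file. Below is a small sketch of a check for both. The function name `multipath_preflight` and its two optional arguments are made up for illustration. They default to the usual locations and can be overridden for testing.

```shell
#!/bin/sh
# Report which multipath prerequisites are missing.
# $1: config file path, $2: file listing the loaded kernel modules.
multipath_preflight() {
    conf="${1:-/etc/multipath.conf}"
    modules="${2:-/proc/modules}"
    status=0
    if [ ! -f "$conf" ]; then
        echo "missing $conf (create it with: mpathconf --enable)"
        status=1
    fi
    # Each line of /proc/modules starts with the module name followed by a space.
    if ! grep -q '^dm_multipath ' "$modules" 2>/dev/null; then
        echo "dm_multipath not loaded (load it with: modprobe dm-multipath)"
        status=1
    fi
    return $status
}

multipath_preflight || echo "multipath is not ready yet"
```

The function returns 0 only when both the config file and the kernel module are present, so it can guard later steps in a setup script.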

Create an initial config for multipath:

[root@instructor ~]# mpathconf --enable

[root@instructor ~]# ll /etc/multipath.conf

-rw-------. 1 root root 2612 Dec 17 15:46 /etc/multipath.conf

Restart multipathd, and you can see that device-mapper has merged the two devices into one:

[root@node3 ~]# multipath -ll

remote_disk (1IET 00010001) dm-3 IET,VIRTUAL-DISK
size=10G features='0' hwhandler='0' wp=rw
|-+- policy='round-robin 0' prio=1 status=active
| `- 4:0:0:1 sdb 8:16 active ready running
`-+- policy='round-robin 0' prio=1 status=enabled
  `- 5:0:0:1 sda 8:0 active ready running
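
To check redundancy from a script, one option is to count the paths that `multipath -ll` reports as "active ready". The sketch below is only an illustration: `count_ready_paths` is a made-up helper name, and the sample input is the listing above with plain ASCII quotes.

```shell
#!/bin/sh
# Count paths reported as "active ready" in `multipath -ll` output read from stdin.
# grep -c exits non-zero when the count is 0, so "|| true" keeps the exit status clean.
count_ready_paths() {
    grep -c 'active ready' || true
}

# Sample input; on a live system use: multipath -ll remote_disk | count_ready_paths
count_ready_paths <<'EOF'
remote_disk (1IET 00010001) dm-3 IET,VIRTUAL-DISK
size=10G features='0' hwhandler='0' wp=rw
|-+- policy='round-robin 0' prio=1 status=active
| `- 4:0:0:1 sdb 8:16 active ready running
`-+- policy='round-robin 0' prio=1 status=enabled
  `- 5:0:0:1 sda 8:0 active ready running
EOF
```

For the sample this prints 2. A result below 2 would mean the device has lost its redundancy.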

"remote_disk" is an alias that I assigned to it, and "1IET 00010001" is the WWID shared by sda and sdb. We can give the device-mapper device any name we like, bound to the device's WWID. Here is what the configuration looks like:

multipaths {
    multipath {
        wwid "1IET 00010001"
        alias remote_disk
    }
}
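
When several devices need aliases, the stanza can be generated instead of typed by hand. The function below is a hypothetical helper, not something shipped with device-mapper-multipath. It only prints the text, so the result still has to be reviewed and merged into /etc/multipath.conf manually.

```shell
#!/bin/sh
# Print a multipaths stanza that binds an alias to a WWID.
# $1: WWID as multipath reports it, $2: alias to assign.
multipath_stanza() {
    cat <<EOF
multipaths {
    multipath {
        wwid "$1"
        alias $2
    }
}
EOF
}

multipath_stanza "1IET 00010001" remote_disk
```

The WWID is quoted in the output because it contains a space.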

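To determine the WWID of a block device, we can use the scsi_id command. As you can see, sda and sdb actually refer to the same device. The -u option replaces whitespace with underscores, which is why the output shows 1IET_00010001 rather than "1IET 00010001".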

[root@node3 ~]# scsi_id -gu /dev/sda

1IET_00010001

[root@node3 ~]# scsi_id -gu /dev/sdb

1IET_00010001
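
With more than two disks, comparing WWIDs by eye gets tedious. As a rough sketch, the helper below groups device names by WWID. `group_by_wwid` is an illustrative name, and it reads "device wwid" pairs from stdin so that it can be tried without real hardware.

```shell
#!/bin/sh
# Read "device wwid" pairs on stdin and print each WWID with the devices that share it.
group_by_wwid() {
    awk '{
        if ($2 in devs) devs[$2] = devs[$2] " " $1
        else            devs[$2] = $1
    }
    END { for (w in devs) print w ": " devs[w] }'
}

# Sample input taken from the scsi_id output above. On a live system, something like:
#   for d in /dev/sd[a-z]; do echo "${d#/dev/} $(scsi_id -gu "$d")"; done | group_by_wwid
printf '%s\n' "sda 1IET_00010001" "sdb 1IET_00010001" | group_by_wwid
```

For the sample this prints `1IET_00010001: sda sdb`. Any WWID listed with more than one device is a multipath candidate.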

Finally, we can use the mapped device just like a regular disk on the server: partition it with fdisk, format it, or create LVM on it.

[root@node3 ~]# ll /dev/mapper/remote_disk

lrwxrwxrwx. 1 root root 7 Dec 12 07:39 /dev/mapper/remote_disk -> ../dm-3
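
As one possible next step, here is a sketch of putting LVM and a filesystem on the mapped device. The volume group and logical volume names (`vg_remote`, `lv_data`) are invented for this example, and `run` and `DRY_RUN` are illustrative helpers. By default the script only prints the commands, because running them would destroy existing data on the device.

```shell
#!/bin/sh
# Create LVM and an ext4 filesystem on the multipath device.
# DRY_RUN=1 (the default in this sketch) only prints the commands; DRY_RUN=0 runs them.
DEVICE="/dev/mapper/remote_disk"
VG="vg_remote"   # hypothetical volume group name
LV="lv_data"     # hypothetical logical volume name

# Hypothetical helper: print the command in dry-run mode, execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

setup_lvm() {
    run pvcreate "$DEVICE"
    run vgcreate "$VG" "$DEVICE"
    run lvcreate -n "$LV" -l 100%FREE "$VG"
    run mkfs.ext4 "/dev/$VG/$LV"
}

setup_lvm
```

Always point these commands at the /dev/mapper device and not at /dev/sda or /dev/sdb, so that I/O goes through the multipath layer.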