Goals

1. Scrape Tang poems and Song ci from a classical-poetry website.

2. Save the scraped data to a local database.

Page analysis

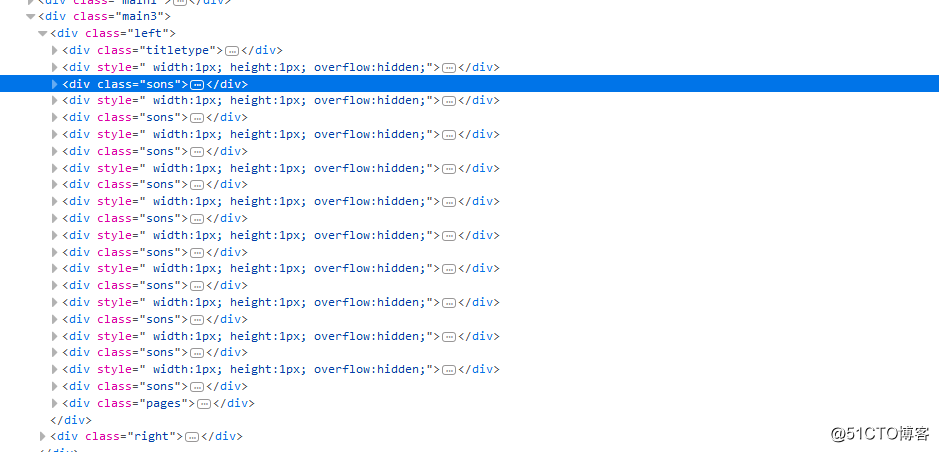

Locate the target elements on the page with Firebug:

Locate the same elements in the page source:

Locate the div tags with lxml etree:

    # Parse the page with lxml; `data` is the HTML text of the listing page
    from lxml import etree

    response = etree.HTML(data)

    # Locate the div layer: each poem sits in its own div.sons inside div.left
    for row in response.xpath('//div[@class="left"]/div[@class="sons"]'):
        # Title
        title = row.xpath('div[@class="cont"]/p/a/b/text()')
        title = title[0] if title else ''
        # Dynasty and poet: first and last text node of the source line
        source = row.xpath('div[@class="cont"]/p[@class="source"]//text()')
        dynasty = source[0] if source else ''
        author = source[-1] if source else ''
        # Poem text, with spaces and newlines removed
        content = ''.join(row.xpath('div[@class="cont"]/div[@class="contson"]//text()')).replace(' ', '').replace('\n', '')
        # Tags, joined with commas
        tag = ','.join(row.xpath('div[@class="tag"]/a/text()'))

Script source
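The script imports `MySpider` and `MongoBase` from a `utils` module that this post does not include, so something exposing the same two methods is needed to run it. The following is only a minimal sketch of what such a module might look like, not the author's code. It assumes Python 3, UTF-8 pages, and an installed `pymongo`; the timeout, the User-Agent header, the connection URI and the database name `spider` are all made-up defaults.

```python
# utils.py: a hypothetical stand-in for the helper module the script imports.
import urllib.request


class MySpider(object):
    """Fetches a page and returns its HTML text, or None on failure."""

    def __init__(self, timeout=10):
        self.timeout = timeout  # seconds; assumed default

    def get(self, url):
        try:
            request = urllib.request.Request(
                url, headers={'User-Agent': 'Mozilla/5.0'})
            with urllib.request.urlopen(request, timeout=self.timeout) as resp:
                # Assumes the page is UTF-8; undecodable bytes are replaced
                return resp.read().decode('utf-8', errors='replace')
        except (ValueError, OSError):
            # ValueError: malformed URL; OSError covers URLError and timeouts
            return None


class MongoBase(object):
    """Thin wrapper that inserts one document per call."""

    def __init__(self, uri='mongodb://localhost:27017', db_name='spider'):
        # Imported here so MySpider can be used without pymongo installed
        from pymongo import MongoClient
        self.db = MongoClient(uri)[db_name]

    def add_new_row(self, collection, row):
        # Store the dict as a document in the named collection
        self.db[collection].insert_one(row)
```

Saved as `utils.py` next to the script, this provides the two names the import line expects. The author's script itself follows.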

#!/usr/bin/env python

# -*- coding: utf-8 -*-

'''

@Date : 2017/12/21 11:12

@Author : kaiqing.huang (kaiqing.huang@ubtrobot.com)

@Contact : kaiqing.huang@ubtrobot.com

@File : shigeSpider.py

'''

from utils import MySpider, MongoBase

from datetime import date

from lxml import etree

import sys

class shigeSpider():

def __init__(self):

self.db = MongoBase()

self.spider = MySpider()

def download(self, url):

self.domain = url.split('/')[2]

data = self.spider.get(url)

if data:

self.parse(data)

def parse(self, data):

response = etree.HTML(data)

for row in response.xpath('//div[@class="left"]/div[@class="sons"]'):

title = row.xpath('div[@class="cont"]/p/a/b/text()')[0] if row.xpath('div[@class="cont"]/p/a/b/text()') else ''

dynasty = row.xpath('div[@class="cont"]/p[@class="source"]//text()')[0] if row.xpath('div[@class="cont"]/p[@class="source"]//text()') else ''

author = row.xpath('div[@class="cont"]/p[@class="source"]//text()')[-1] if row.xpath('div[@class="cont"]/p[@class="source"]//text()') else ''

content = ''.join(row.xpath('div[@class="cont"]/div[@class="contson"]//text()')).replace(' ', '').replace('\n', '') if row.xpath('div[@class="cont"]/div[@class="contson"]//text()') else ''

tag = ','.join(row.xpath('div[@class="tag"]/a/text()')) if row.xpath('div[@class="tag"]/a/text()') else ''

self.db.add_new_row('shigeSpider', { 'title': title, 'dynasty': dynasty, 'author': author, 'content': content, 'tag': tag, 'createTime': str(date.today()) })

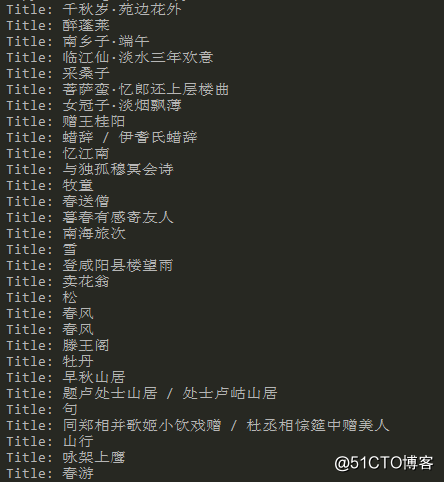

print 'Title: {}'.format(title)

if response.xpath('//div[@class="pages"]/a/@href'):

self.download('http://' + self.domain + response.xpath('//div[@class="pages"]/a/@href')[-1])

if __name__ == '__main__':

sys.setrecursionlimit(100000)

url = 'http://so.gushiwen.org/type.aspx?p=501'

do = shigeSpider()

do.download(url)执行效果:
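The script follows the pager by having `parse()` call `download()` again, so every page adds stack frames and the recursion limit has to be raised. One alternative is a plain loop that keeps asking for the next URL until none is left. The sketch below illustrates that idea in isolation and is not part of the original script: `crawl`, `fetch`, `extract_next`, `max_pages` and the fake `PAGES` table are hypothetical names introduced for the example, and `urljoin` stands in for the manual `'http://' + domain + href` concatenation.

```python
from urllib.parse import urljoin


def crawl(start_url, fetch, extract_next, max_pages=1000):
    """Visit pages one after another without recursion.

    fetch(url) returns the page (or None on failure); extract_next(page)
    returns the href of the next page or None. Both come from the caller.
    """
    visited = []
    seen = set()
    url = start_url
    while url and url not in seen and len(visited) < max_pages:
        seen.add(url)
        page = fetch(url)
        if not page:
            break
        visited.append(url)
        href = extract_next(page)
        # urljoin resolves relative and absolute hrefs against the current URL
        url = urljoin(url, href) if href else None
    return visited


# Fake three-page site standing in for the real pager (hypothetical data).
PAGES = {
    'http://example.com/type.aspx?p=1': {'next': '/type.aspx?p=2'},
    'http://example.com/type.aspx?p=2': {'next': '/type.aspx?p=3'},
    'http://example.com/type.aspx?p=3': {'next': None},
}

if __name__ == '__main__':
    print(crawl('http://example.com/type.aspx?p=1',
                fetch=PAGES.get,
                extract_next=lambda page: page['next']))
```

The `seen` set and `max_pages` are there as a safeguard in case a pager's last link points back to a page that was already visited, which would otherwise keep the loop running.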

Original article: http://blog.51cto.com/8456082/2052864
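The XPath expressions from the page analysis can be tried offline before pointing the script at the site. The sketch below runs them against a small hand-written HTML fragment. The fragment only imitates the class names the expressions rely on (`left`, `sons`, `cont`, `source`, `contson`, `tag`); it is an assumption about the page layout, not a copy of the real markup, and `parse_rows` and `SAMPLE` are hypothetical names.

```python
from lxml import etree

# Hand-written fragment that mimics the structure the XPath expects.
SAMPLE = '''
<div class="left">
  <div class="sons">
    <div class="cont">
      <p><a href="#"><b>静夜思</b></a></p>
      <p class="source"><a href="#">唐代</a><span>:</span><a href="#">李白</a></p>
      <div class="contson">床前明月光,<br/>疑是地上霜。</div>
    </div>
    <div class="tag"><a href="#">思乡</a><a href="#">月亮</a></div>
  </div>
</div>
'''


def parse_rows(html):
    """Return one dict per poem block, using the post's XPath expressions."""
    rows = []
    for row in etree.HTML(html).xpath('//div[@class="left"]/div[@class="sons"]'):
        title = row.xpath('div[@class="cont"]/p/a/b/text()')
        source = row.xpath('div[@class="cont"]/p[@class="source"]//text()')
        body = row.xpath('div[@class="cont"]/div[@class="contson"]//text()')
        rows.append({
            'title': title[0] if title else '',
            # First text node is taken as the dynasty, last as the poet
            'dynasty': source[0] if source else '',
            'author': source[-1] if source else '',
            'content': ''.join(body).replace(' ', '').replace('\n', ''),
            'tag': ','.join(row.xpath('div[@class="tag"]/a/text()')),
        })
    return rows


if __name__ == '__main__':
    for poem in parse_rows(SAMPLE):
        print(poem)
```

On the real site the same function could be fed the HTML returned by the downloader, with each dict passed on to the database layer.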