1. Use nginx, haproxy, or apache (mod_proxy_ajp, mod_proxy_http, mod_jk) to reverse-proxy user requests to Tomcat;

2. Use nginx, haproxy, or apache (mod_proxy_ajp, mod_proxy_http, mod_jk) to load-balance user requests across Tomcat servers, additionally implementing session sticky;

3. Tomcat session cluster;

4. Tomcat session server (msm);

Plan:

172.16.1.2 apache reverse proxy server

172.16.1.10 Tomcat A

172.16.1.3 Tomcat B

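Notes:

The task allows any one of nginx, haproxy, or apache to reverse-proxy user requests to Tomcat. Because Apache httpd and Tomcat are both Apache Software Foundation projects and work well together, apache is used here, and the requirements are implemented three ways: with mod_proxy_http, mod_proxy_ajp, and mod_jk.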

1. Option 1: mod_proxy_http

vim /etc/httpd/conf.d/lb_tomcat.conf

Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

<Proxy balancer://tcsrvs>

BalancerMember http://172.16.1.10:8080/testapp route=TomcatA loadfactor=1

BalancerMember http://172.16.1.3:8080/testapp route=TomcatB loadfactor=2

ProxySet lbmethod=byrequests

ProxySet stickysession=ROUTEID

</Proxy>

<VirtualHost *:80>

ServerName lb.zrs.com

ProxyVia On

ProxyRequests Off

<Proxy *>

Require all granted

</Proxy>

ProxyPass / balancer://tcsrvs/

<Location />

Require all granted

</Location>

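</VirtualHost>

In the Engine element of each Tomcat's server.xml, add a jvmRoute attribute. Keep its value the same as the route= value of the corresponding BalancerMember above, so that the route suffix Tomcat appends to session IDs names the same backend the balancer does.

First Tomcat (TomcatA): <Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatA">

Second Tomcat (TomcatB): <Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatB">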
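How the route ties a client to one backend can be modelled in a few lines. The Python below is a simplified sketch, not httpd's code. It assumes, as the mod_proxy_balancer documentation describes, that the route is the part of the sticky value after the first ".", so the ROUTEID cookie ".TomcatA" set by the Header line above (or a JSESSIONID ending in ".TomcatA", if stickysession=JSESSIONID were used instead) selects the member with route=TomcatA. The Member class and the extract_route and pick_member functions are hypothetical names, and the fallback simply takes the first live member instead of running the real lbmethod.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Member:
    url: str
    route: str
    alive: bool = True


def extract_route(cookie_value: str) -> Optional[str]:
    """Return the part after the first '.' ('.TomcatA' -> 'TomcatA'), else None."""
    _, dot, route = cookie_value.partition(".")
    return route if dot and route else None


def pick_member(members: list[Member], cookie_value: Optional[str]) -> Member:
    """Prefer the live member whose route matches the sticky value."""
    if cookie_value:
        route = extract_route(cookie_value)
        for m in members:
            if m.route == route and m.alive:
                return m
    # Simplified fallback (hypothetical): httpd would apply lbmethod here.
    for m in members:
        if m.alive:
            return m
    raise RuntimeError("no live balancer members")


members = [
    Member("http://172.16.1.10:8080/testapp", "TomcatA"),
    Member("http://172.16.1.3:8080/testapp", "TomcatB"),
]
print(pick_member(members, ".TomcatA").route)        # ROUTEID cookie -> TomcatA
print(pick_member(members, "5F1C2B.TomcatB").route)  # jvmRoute suffix -> TomcatB
members[0].alive = False                             # simulate stopping TomcatA
print(pick_member(members, ".TomcatA").route)        # -> TomcatB
```

The last call mirrors the failover test in this section: once TomcatA is unavailable, its route can no longer be honoured and the request goes to TomcatB.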

Create a test page for testapp on each of the two Tomcat servers:

tomcatA:

# mkdir -pv /usr/share/tomcat/webapps/testapp/{WEB-INF,classes,lib}

# vim /usr/share/tomcat/webapps/testapp/index.jsp

Add the following content:

<%@ page language="java" %>

<html>

<head><title>TomcatA</title></head>

<body>

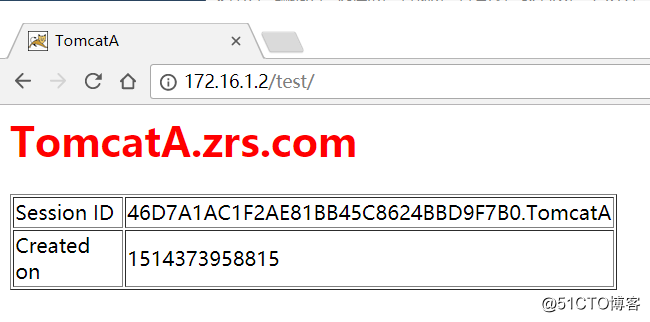

<h1><font color="red">TomcatA.zrs.com</font></h1>

<table align="centre" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("zrs.com","zrs.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

</html>tomcatB:

# mkdir -pv /usr/share/tomcat/webapps/testapp/{WEB-INF,classes,lib}

# vim /usr/share/tomcat/webapps/testapp/index.jsp

Add the following content:

<%@ page language="java" %>

<html>

<head><title>TomcatB</title></head>

<body>

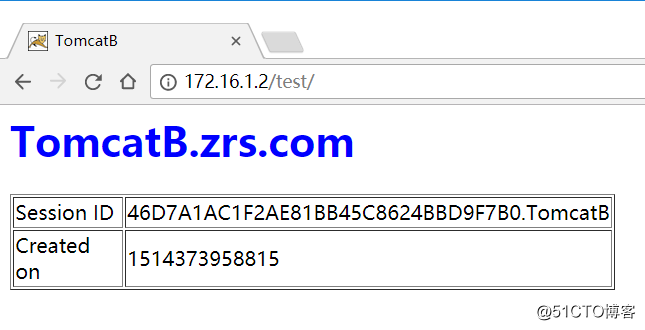

<h1><font color="blue">TomcatB.zrs.com</font></h1>

<table align="centre" border="1">

<tr>

<td>Session ID</td>

<% session.setAttribute("zrs.com","zrs.com"); %>

<td><%= session.getId() %></td>

</tr>

<tr>

<td>Created on</td>

<td><%= session.getCreationTime() %></td>

</tr>

</table>

</body>

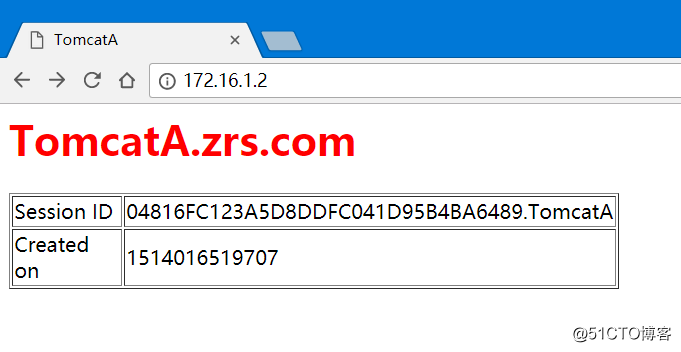

</html>客户端测试,因为做了session sticky,会一直调度到后端一台服务器上,如我的一直是TomcatA

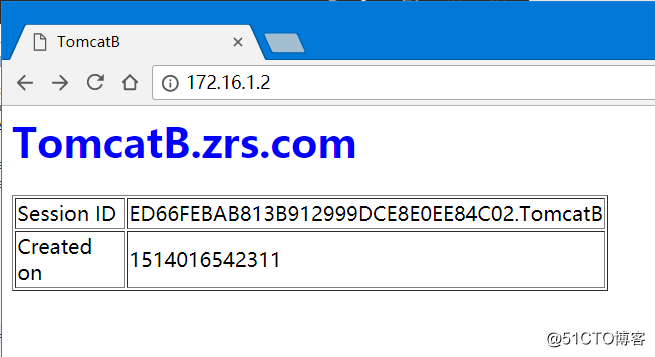

After TomcatA is shut down, requests are dispatched to TomcatB.
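The client test can also be scripted instead of refreshing a browser. This is a minimal Python sketch using only the standard library. It assumes lb.zrs.com resolves to the proxy (for example via /etc/hosts) and that the page is the index.jsp above; probe and parse_page are hypothetical helper names. A cookie jar keeps whatever cookies the proxy and Tomcat set (ROUTEID, JSESSIONID) between requests, as a browser would.

```python
import http.cookiejar
import re
import sys
import urllib.request

TITLE_RE = re.compile(r"<title>(.*?)</title>", re.S)
# index.jsp prints the session id in the cell right after "Session ID".
SESSION_RE = re.compile(r"<td>Session ID</td>.*?<td>(.*?)</td>", re.S)


def parse_page(html: str) -> tuple[str, str]:
    """Return (page title, session id) from the test page, '?' if missing."""
    title = TITLE_RE.search(html)
    session = SESSION_RE.search(html)
    return (title.group(1).strip() if title else "?",
            session.group(1).strip() if session else "?")


def probe(url: str, count: int = 5) -> None:
    """Fetch url count times, reusing cookies, and print which backend answered."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    for i in range(count):
        with opener.open(url, timeout=5) as resp:
            html = resp.read().decode("utf-8", errors="replace")
        title, sid = parse_page(html)
        print(f"{i + 1}: {title}  session={sid}")


if __name__ == "__main__" and len(sys.argv) > 1:
    probe(sys.argv[1])  # e.g. http://lb.zrs.com/
```

Run it as "python3 probe.py http://lb.zrs.com/". With stickiness on, every line should show the same title; stopping TomcatA between runs should switch it to TomcatB.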

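2. Option 2: mod_proxy_ajp

To use mod_proxy_ajp, just change http to ajp and port 8080 to 8009 in the mod_proxy_http configuration. Note that because the frontend now connects over AJP, you can comment out the 8080 Connector in each backend Tomcat's server.xml so that it no longer listens, which improves security.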
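The change from mod_proxy_http to mod_proxy_ajp is purely mechanical. As a small illustrative sketch (to_ajp is a hypothetical function, and it only rewrites lines of the form "BalancerMember http://HOST:8080..."), this is the transformation applied to each BalancerMember line:

```python
import re

# Captures: leading "BalancerMember ", host, path after the port, and the rest.
MEMBER_RE = re.compile(r"^(\s*BalancerMember\s+)http://([^:/\s]+):8080(\S*)(.*)$")


def to_ajp(line: str) -> str:
    """Rewrite an http/8080 BalancerMember line to ajp/8009; leave others alone."""
    m = MEMBER_RE.match(line)
    if not m:
        return line
    prefix, host, path, rest = m.groups()
    return f"{prefix}ajp://{host}:8009{path}{rest}"


print(to_ajp("BalancerMember http://172.16.1.10:8080/testapp route=TomcatA loadfactor=1"))
# -> BalancerMember ajp://172.16.1.10:8009/testapp route=TomcatA loadfactor=1
```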

vim /etc/httpd/conf.d/lb_tomcat.conf

Header add Set-Cookie "ROUTEID=.%{BALANCER_WORKER_ROUTE}e; path=/" env=BALANCER_ROUTE_CHANGED

<Proxy balancer://tcsrvs>

BalancerMember ajp://172.16.1.10:8009/testapp route=TomcatA loadfactor=1

BalancerMember ajp://172.16.1.3:8009/testapp route=TomcatB loadfactor=2

ProxySet lbmethod=byrequests

ProxySet stickysession=ROUTEID

</Proxy>

<VirtualHost *:80>

ServerName lb.qhdlink.com

ProxyVia On

ProxyRequests Off

<Proxy *>

Require all granted

</Proxy>

ProxyPass / balancer://tcsrvs/

<Location />

Require all granted

</Location>

</VirtualHost>

Then disable session stickiness (remove the ProxySet stickysession=ROUTEID line) and check the results from the client.

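3. Option 3: mod_jk

This option requires the mod_jk module.

mod_jk is not bundled with httpd; build and install it from the tomcat-connectors-VERSION.tar.gz source package.

Configuration files: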

vim /etc/httpd/conf.d/mod_jk_lb.conf

LoadModule jk_module modules/mod_jk.so
JkWorkersFile /etc/httpd/conf.d/workers.properties
JkLogFile logs/mod_jk.log
JkLogLevel debug
JkMount /* tcsrvs
JkMount /jk-status Stat

vim /etc/httpd/conf.d/workers.properties

worker.list=tcsrvs,Stat
worker.TomcatA.host=172.16.1.10
worker.TomcatA.port=8009
worker.TomcatA.type=ajp13
worker.TomcatA.lbfactor=1
worker.TomcatB.host=172.16.1.3
worker.TomcatB.port=8009
worker.TomcatB.type=ajp13
worker.TomcatB.lbfactor=2
worker.tcsrvs.type=lb
worker.tcsrvs.balance_workers=TomcatA,TomcatB
worker.tcsrvs.sticky_session=1
worker.Stat.type=status
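workers.properties follows a regular pattern: one ajp13 worker per backend, an lb worker that lists them in balance_workers, and a status worker. mod_jk uses the worker name as its route unless a route is set explicitly, so the names TomcatA and TomcatB should match each Tomcat's jvmRoute for sticky sessions to work. The Python sketch below renders such a file from a dictionary; the workers_properties function and its parameters are hypothetical, not part of mod_jk.

```python
def workers_properties(backends: dict[str, tuple[str, int, int]],
                       lb_name: str = "tcsrvs",
                       status_name: str = "Stat",
                       sticky: bool = True) -> str:
    """Render a mod_jk workers.properties for one lb worker over ajp13 backends.

    backends maps worker name (which should equal the jvmRoute) to
    (host, ajp_port, lbfactor).
    """
    lines = [f"worker.list={lb_name},{status_name}"]
    for name, (host, port, lbfactor) in backends.items():
        lines += [
            f"worker.{name}.host={host}",
            f"worker.{name}.port={port}",
            f"worker.{name}.type=ajp13",
            f"worker.{name}.lbfactor={lbfactor}",
        ]
    lines += [
        f"worker.{lb_name}.type=lb",
        f"worker.{lb_name}.balance_workers={','.join(backends)}",
        f"worker.{lb_name}.sticky_session={1 if sticky else 0}",
        f"worker.{status_name}.type=status",
    ]
    return "\n".join(lines) + "\n"


print(workers_properties({
    "TomcatA": ("172.16.1.10", 8009, 1),
    "TomcatB": ("172.16.1.3", 8009, 2),
}))
```

With sticky=False it produces the sticky_session=0 variant used in the session cluster section.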

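4. Tomcat session cluster

Enable clustering in the server.xml of both Tomcats.

Add the following configuration (for example inside the Engine element), and comment out the 8080 connector.

Note: in the configuration below, change address="auto" to this host's own IP address.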

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"

channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager"

expireSessionsOnShutdown="false"

notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService"

address="228.100.0.4"

port="45564"

frequency="500"

dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"

address="auto"

port="4000"

autoBind="100"

selectorTimeout="5000"

maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve"

filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"

tempDir="/tmp/war-temp/"

deployDir="/tmp/war-deploy/"

watchDir="/tmp/war-listen/"

watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>
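In this Cluster configuration, McastService sends a heartbeat to the multicast group 228.100.0.4:45564 every frequency=500 ms, and a member that has not been heard from for dropTime=3000 ms is removed from the cluster. Below is a toy Python model of that timeout rule only, not Tribes' actual implementation; the Membership class and the "IP:4000" member names are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Membership:
    """Toy heartbeat membership: drop a member after drop_time_ms of silence."""
    drop_time_ms: int = 3000  # dropTime="3000"
    last_seen: dict[str, int] = field(default_factory=dict)

    def heartbeat(self, member: str, now_ms: int) -> None:
        self.last_seen[member] = now_ms

    def alive(self, now_ms: int) -> list[str]:
        return sorted(m for m, t in self.last_seen.items()
                      if now_ms - t <= self.drop_time_ms)


FREQUENCY_MS = 500  # frequency="500": heartbeat interval

ms = Membership()
for t in range(0, 2001, FREQUENCY_MS):     # both nodes send heartbeats until t=2000
    ms.heartbeat("172.16.1.10:4000", t)
    ms.heartbeat("172.16.1.3:4000", t)
for t in range(2500, 5501, FREQUENCY_MS):  # then 172.16.1.3 goes silent
    ms.heartbeat("172.16.1.10:4000", t)
print(ms.alive(5500))  # -> ['172.16.1.10:4000']
```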

Then add a Context element inside the Host element:

<Host name="localhost" appBase="webapps"

unpackWARs="true" autoDeploy="true">

<Context path="/test" docBase="/myapps/webapps" reloadable="true"/>

Create the directories:

[root@zrs tomcat]# mkdir -pv /myapps/webapps/{classes,lib,WEB-INF}

Create the test pages

As above, create a different page on A and on B:

[root@zrs tomcat]# vim /myapps/webapps/index.jsp

Copy web.xml into the application's WEB-INF directory:

[root@zrs tomcat]# cp web.xml /myapps/webapps/WEB-INF/

Edit WEB-INF/web.xml

Add <distributable/> as a direct child of <web-app> (anywhere, as long as it is not nested inside another element):

[root@zrs tomcat]# vim /myapps/webapps/WEB-INF/web.xml
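Adding <distributable/> can also be scripted. This is a minimal sketch with Python's xml.etree.ElementTree; add_distributable is a hypothetical helper. Be aware that ElementTree drops XML comments and the XML declaration when it rewrites the file, so keep a copy of the original web.xml.

```python
import sys
import xml.etree.ElementTree as ET


def add_distributable(web_xml: str) -> str:
    """Return web_xml with <distributable/> as a child of <web-app> (idempotent)."""
    root = ET.fromstring(web_xml)
    # With a default namespace the root tag looks like "{namespace}web-app".
    ns = root.tag[1:].partition("}")[0] if root.tag.startswith("{") else ""
    tag = f"{{{ns}}}distributable" if ns else "distributable"
    if ns:
        ET.register_namespace("", ns)  # serialize without an "ns0:" prefix
    if root.find(tag) is None:
        elem = ET.Element(tag)
        elem.tail = "\n"
        root.insert(0, elem)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__" and len(sys.argv) > 1:
    path = sys.argv[1]  # e.g. /myapps/webapps/WEB-INF/web.xml
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(add_distributable(text))
```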

Reverse proxy server configuration:

[root@director conf.d]# vim mod_jk_proxy.conf

LoadModule jk_module modules/mod_jk.so
JkWorkersFile /etc/httpd/conf.d/workers.properties
JkLogFile logs/mod_jk.log
JkLogLevel debug
JkMount /* tcsrvs
JkMount /jk-status Stat

[root@director conf.d]# vim workers.properties

worker.list=tcsrvs,Stat
worker.TomcatA.host=172.16.1.10
worker.TomcatA.port=8009
worker.TomcatA.type=ajp13
worker.TomcatA.lbfactor=1
worker.TomcatB.host=172.16.1.3
worker.TomcatB.port=8009
worker.TomcatB.type=ajp13
worker.TomcatB.lbfactor=2
worker.tcsrvs.type=lb
worker.tcsrvs.balance_workers=TomcatA,TomcatB
worker.tcsrvs.sticky_session=0
worker.Stat.type=status

Start the httpd service on the proxy and the tomcat service on both backend hosts.

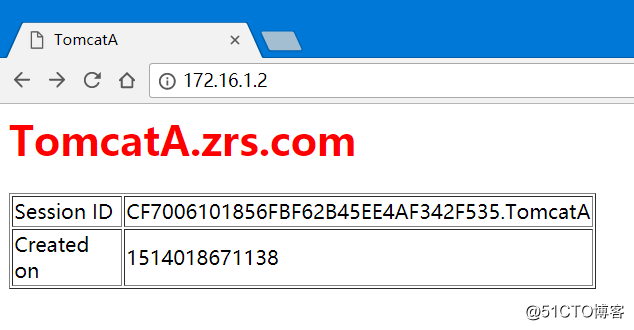

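Client test

The reverse proxy dispatches requests to the two backend Tomcat servers in the order A, B, B, yet the session ID stays the same, which shows that the session cluster is working.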
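The A, B, B pattern reflects the weights lbfactor=1 (TomcatA) and lbfactor=2 (TomcatB). For comparison, here is a Python simulation of the request-counting algorithm that the httpd documentation gives for mod_proxy_balancer's lbmethod=byrequests (used in options 1 and 2). mod_jk has its own balancing code, so the exact order it produces may differ, but in both cases TomcatB should receive twice as many requests as TomcatA. The byrequests function name is illustrative.

```python
def byrequests(weights: dict[str, int], n: int) -> list[str]:
    """Simulate weighted request counting: each worker gains its lbfactor per
    request, the highest lbstatus wins, and the winner pays back the total."""
    lbstatus = {w: 0 for w in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        candidate = None
        for worker, factor in weights.items():
            lbstatus[worker] += factor
            if candidate is None or lbstatus[worker] > lbstatus[candidate]:
                candidate = worker
        lbstatus[candidate] -= total
        picks.append(candidate)
    return picks


print(byrequests({"TomcatA": 1, "TomcatB": 2}, 6))
# -> ['TomcatB', 'TomcatA', 'TomcatB', 'TomcatB', 'TomcatA', 'TomcatB']
```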

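5. Tomcat session server (msm)

Continuing with the environment above. The idea of this approach is to keep all sessions in one shared session store outside Tomcat's own memory: memcached, which in this setup runs on the backend Tomcat hosts. If the node holding the sessions goes down, the whole setup can no longer work correctly, so the session store also needs high availability; that is what the failoverNodes setting below provides.

This approach uses memcached as a side (look-aside) cache server, so install and start memcached beforehand on both Tomcat hosts (172.16.1.10 and 172.16.1.3, matching memcachedNodes below).

Stop both Tomcat services and restore server.xml (remove the Cluster configuration added in the previous section).

Comment out the 8080 connector and add jvmRoute to the Engine element, as follows: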

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatA" >

or

<Engine name="Catalina" defaultHost="localhost" jvmRoute="TomcatB" >

Add the following configuration inside the Host element:

<Context path="/test" docBase="/myapps/webapps" reloadable="true">

<Manager className="de.javakaffee.web.msm.MemcachedBackupSessionManager"

memcachedNodes="n1:172.16.1.10:11211,n2:172.16.1.3:11211"

failoverNodes="n1"

requestUriIgnorePattern=".*\.(ico|png|gif|jpg|css|js|html|htm)$"

transcoderFactoryClass="de.javakaffee.web.msm.serializer.javolution.JavolutionTranscoderFactory"

/>

</Context>
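Two settings in this Manager are easy to misread. memcachedNodes lists node ids with host:port, and failoverNodes="n1" marks n1 as a node msm should use only when the other nodes are unavailable, so sessions are normally backed up to n2 (172.16.1.3). requestUriIgnorePattern excludes static resources from session backup. The Python below is a simplified sketch of both rules, assuming the pattern is matched against the whole request URI; parse_nodes, choose_node and backed_up are hypothetical names, and real msm spreads sessions across all regular nodes rather than always picking the first one.

```python
import re
from typing import Collection

# Same expression as requestUriIgnorePattern above (the syntax also works in Python's re).
IGNORE = re.compile(r".*\.(ico|png|gif|jpg|css|js|html|htm)$")


def parse_nodes(spec: str) -> dict[str, tuple[str, int]]:
    """'n1:172.16.1.10:11211,n2:...' -> {'n1': ('172.16.1.10', 11211), ...}"""
    nodes = {}
    for item in spec.split(","):
        node_id, host, port = item.strip().split(":")
        nodes[node_id] = (host, int(port))
    return nodes


def choose_node(spec: str, failover: str, down: Collection[str] = ()) -> str:
    """Prefer regular nodes; use failover nodes only if no regular node is up."""
    nodes = parse_nodes(spec)
    failover_ids = set(re.split(r"[\s,]+", failover.strip()))
    regular = [n for n in nodes if n not in failover_ids and n not in down]
    backup = [n for n in nodes if n in failover_ids and n not in down]
    if regular:
        return regular[0]
    if backup:
        return backup[0]
    raise RuntimeError("no memcached node available")


def backed_up(uri: str) -> bool:
    """True if the session would be backed up for this request URI."""
    return IGNORE.fullmatch(uri) is None


SPEC = "n1:172.16.1.10:11211,n2:172.16.1.3:11211"
print(choose_node(SPEC, "n1"))               # -> n2
print(choose_node(SPEC, "n1", down={"n2"}))  # -> n1
print(backed_up("/test/index.jsp"), backed_up("/test/logo.png"))  # -> True False
```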

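Add the class libraries

memcached-session-manager project page: http://code.google.com/p/memcached-session-manager/ (Google Code has since shut down; the project is now hosted on GitHub as magro/memcached-session-manager)

Download the following jar files into the lib directory under each Tomcat node's installation directory. Replace ${version} with the version you need, and replace tc${6,7,8} with the variant matching your Tomcat version (tc6, tc7, or tc8).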

memcached-session-manager-${version}.jar

memcached-session-manager-tc${6,7,8}-${version}.jar

spymemcached-${version}.jar

msm-javolution-serializer-${version}.jar

javolution-${version}.jar

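Put all of these jars in /usr/share/java/tomcat (with the CentOS/RHEL tomcat package, /usr/share/tomcat/lib is a symlink to this directory).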
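Since the jar names carry versions, a quick check that each of the five jars is present can save a failed Tomcat start. A hedged Python sketch: missing_jars is a hypothetical helper, the regular expressions only approximate the file names listed above, and the directory is passed on the command line.

```python
import os
import re
import sys

# One pattern per jar from the list above; version numbers are matched loosely.
REQUIRED = {
    "memcached-session-manager": r"memcached-session-manager-\d[\w.\-]*\.jar",
    "memcached-session-manager-tcN": r"memcached-session-manager-tc\d+-\d[\w.\-]*\.jar",
    "spymemcached": r"spymemcached-\d[\w.\-]*\.jar",
    "msm-javolution-serializer": r"msm-javolution-serializer-\d[\w.\-]*\.jar",
    "javolution": r"javolution-\d[\w.\-]*\.jar",
}


def missing_jars(filenames: list[str]) -> list[str]:
    """Return the jar families from REQUIRED with no matching file name."""
    return [name for name, pattern in REQUIRED.items()
            if not any(re.fullmatch(pattern, f) for f in filenames)]


if __name__ == "__main__" and len(sys.argv) > 1:
    lib_dir = sys.argv[1]  # e.g. /usr/share/java/tomcat
    missing = missing_jars(os.listdir(lib_dir))
    print("missing: " + (", ".join(missing) if missing else "none"))
```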

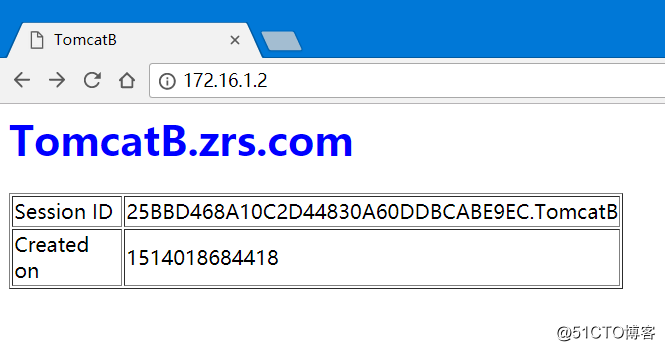

Start the Tomcat services. The reverse proxy now dispatches requests to the two backend Tomcat servers in A, B order, while the session ID stays the same.

Apache: implementing proxying, load balancing, and session binding for Tomcat with three approaches

Original article: http://blog.51cto.com/12667170/2055667