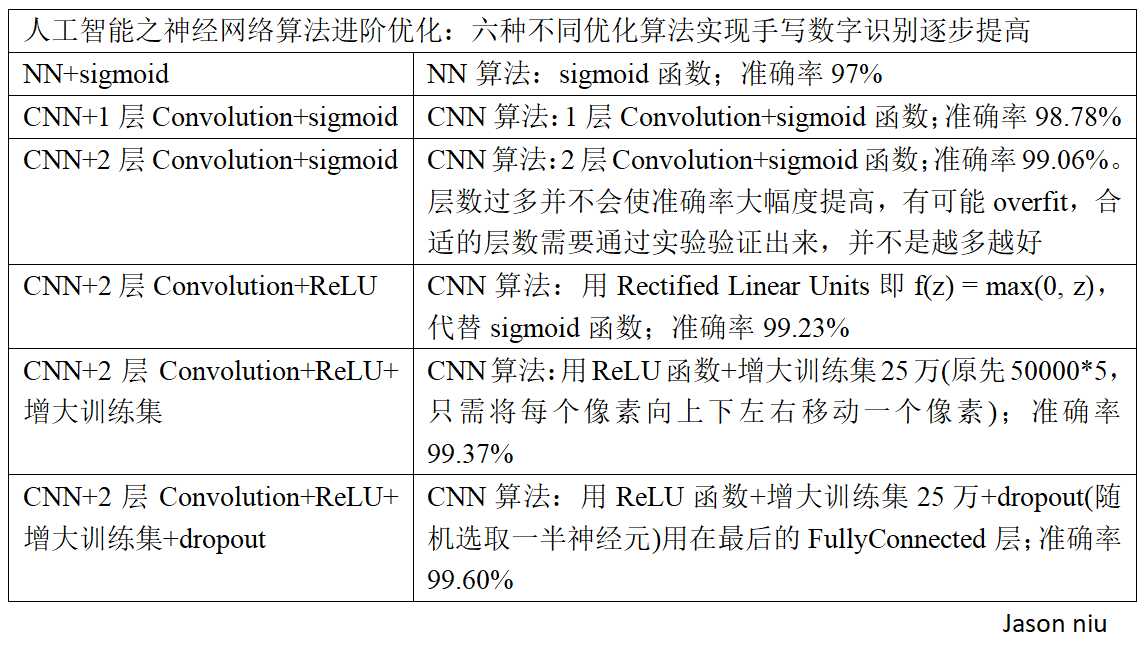

import mnist_loader from network3 import Network from network3 import ConvPoolLayer, FullyConnectedLayer, SoftmaxLayer training_data, validation_data, test_data = mnist_loader.load_data_wrapper() mini_batch_size = 10 #NN算法:sigmoid函数;准确率97% net = Network([ FullyConnectedLayer(n_in=784, n_out=100), SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size) net.SGD(training_data, 60, mini_batch_size, 0.1, validation_data, test_data) #CNN算法:1层Convolution+sigmoid函数;准确率98.78% net = Network([ ConvPoolLayer(image_shape=(mini_batch_size, 1, 28, 28), filter_shape=(20, 1, 5, 5), poolsize=(2, 2)), FullyConnectedLayer(n_in=20*12*12, n_out=100), SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size) #CNN算法:2层Convolution+sigmoid函数;准确率99.06%。层数过多并不会使准确率大幅度提高,有可能overfit,合适的层数需要通过实验验证出来,并不是越多越好 net = Network([ ConvPoolLayer(image_shape=(mini_batch_size, 1, 28, 28), filter_shape=(20, 1, 5, 5), poolsize=(2, 2)), ConvPoolLayer(image_shape=(mini_batch_size, 20, 12, 12), filter_shape=(40, 20, 5, 5), poolsize=(2, 2)), FullyConnectedLayer(n_in=40*4*4, n_out=100), SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size) #CNN算法:用Rectified Linear Units即f(z) = max(0, z),代替sigmoid函数;准确率99.23% net = Network([ ConvPoolLayer(image_shape=(mini_batch_size, 1, 28, 28), filter_shape=(20, 1, 5, 5), poolsize=(2, 2), activation_fn=ReLU), #激活函数采用ReLU函数 ConvPoolLayer(image_shape=(mini_batch_size, 20, 12, 12), filter_shape=(40, 20, 5, 5), poolsize=(2, 2), activation_fn=ReLU), FullyConnectedLayer(n_in=40*4*4, n_out=100, activation_fn=ReLU), SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size) #CNN算法:用ReLU函数+增大训练集25万(原先50000*5,只需将每个像素向上下左右移动一个像素);准确率99.37% $ python expand_mnist.py #将图片像素向上下左右移动 expanded_training_data, _, _ = network3.load_data_shared("../data/mnist_expanded.pkl.gz") net = Network([ ConvPoolLayer(image_shape=(mini_batch_size, 1, 28, 28), filter_shape=(20, 1, 5, 5), poolsize=(2, 2), activation_fn=ReLU), ConvPoolLayer(image_shape=(mini_batch_size, 20, 12, 12), filter_shape=(40, 20, 5, 5), poolsize=(2, 2), activation_fn=ReLU), FullyConnectedLayer(n_in=40*4*4, n_out=100, activation_fn=ReLU), SoftmaxLayer(n_in=100, n_out=10)], mini_batch_size) net.SGD(expanded_training_data, 60, mini_batch_size, 0.03,validation_data, test_data, lmbda=0.1) #CNN算法:用ReLU函数+增大训练集25万+dropout(随机选取一半神经元)用在最后的FullyConnected层;准确率99.60% expanded_training_data, _, _ = network3.load_data_shared("../data/mnist_expanded.pkl.gz") net = Network([ ConvPoolLayer(image_shape=(mini_batch_size, 1, 28, 28), filter_shape=(20, 1, 5, 5), poolsize=(2, 2), activation_fn=ReLU), ConvPoolLayer(image_shape=(mini_batch_size, 20, 12, 12), filter_shape=(40, 20, 5, 5), poolsize=(2, 2), activation_fn=ReLU), FullyConnectedLayer( n_in=40*4*4, n_out=1000, activation_fn=ReLU, p_dropout=0.5), FullyConnectedLayer( n_in=1000, n_out=1000, activation_fn=ReLU, p_dropout=0.5), SoftmaxLayer(n_in=1000, n_out=10, p_dropout=0.5)], mini_batch_size) net.SGD(expanded_training_data, 40, mini_batch_size, 0.03,validation_data, test_data)