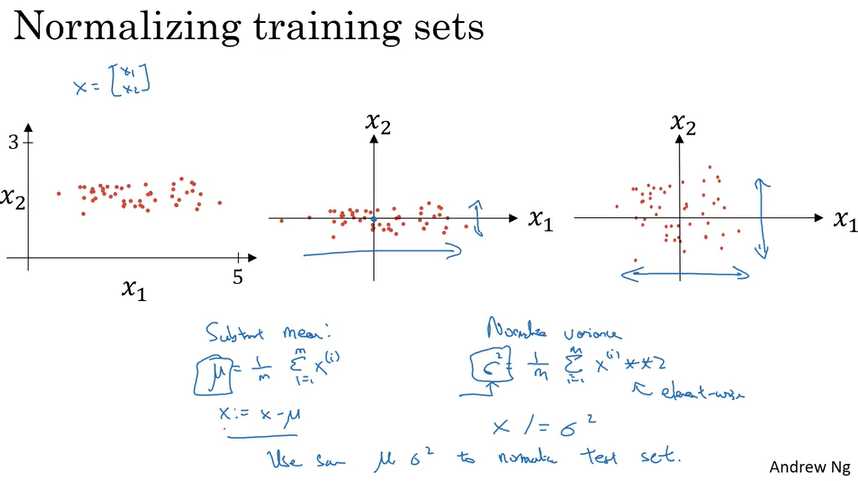

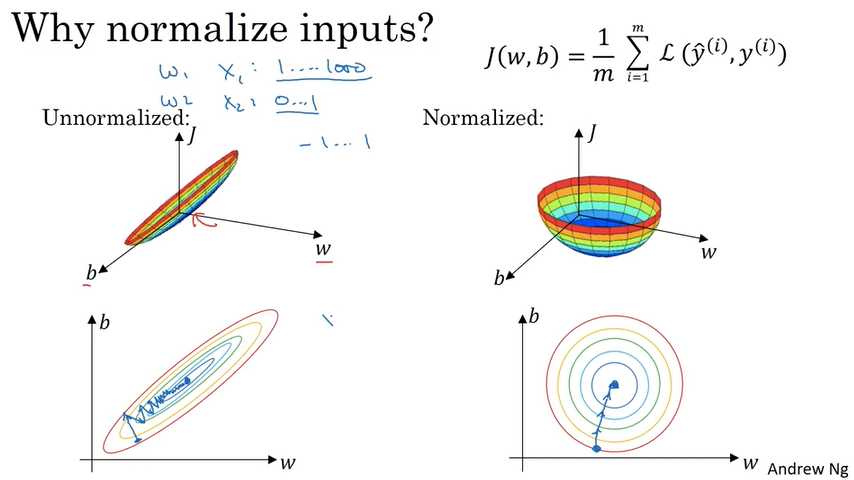

Normalizing input

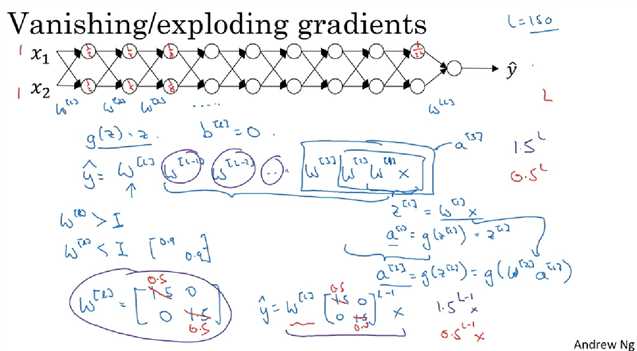
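A minimal NumPy sketch of the usual input-normalization recipe: subtract the per-feature mean, then divide by the per-feature standard deviation. The statistics come from the training set and are reused on the test set. The function name `normalize_inputs`, the small `eps` guard, and the `(n_features, m_examples)` column-per-example layout are assumptions made for illustration.

```python
import numpy as np

def normalize_inputs(X_train, X_test, eps=1e-8):
    """Zero-center each feature and scale it to unit variance.

    Assumes X has shape (n_features, m_examples), one column per example.
    """
    mu = X_train.mean(axis=1, keepdims=True)      # per-feature mean
    sigma2 = X_train.var(axis=1, keepdims=True)   # per-feature variance
    X_train_norm = (X_train - mu) / np.sqrt(sigma2 + eps)
    # Reuse the training-set mu and sigma2 so train and test share one transform
    X_test_norm = (X_test - mu) / np.sqrt(sigma2 + eps)
    return X_train_norm, X_test_norm, mu, sigma2

# Two features on very different scales (synthetic data)
rng = np.random.default_rng(0)
X_train = rng.normal(loc=[[5.0], [-3.0]], scale=[[10.0], [0.1]], size=(2, 1000))
X_test = rng.normal(loc=[[5.0], [-3.0]], scale=[[10.0], [0.1]], size=(2, 200))
Xtr, Xte, mu, sigma2 = normalize_inputs(X_train, X_test)
print("train means:", Xtr.mean(axis=1))
print("train stds: ", Xtr.std(axis=1))
```

After normalization both features have roughly zero mean and unit variance on the training set. This makes the cost surface less elongated, so gradient descent can use a larger learning rate.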

Vanishing/Exploding gradients

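Deep neural networks suffer from these issues. In a very deep network, activations and gradients can become exponentially large or exponentially small as the number of layers grows, which is a huge barrier to training.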

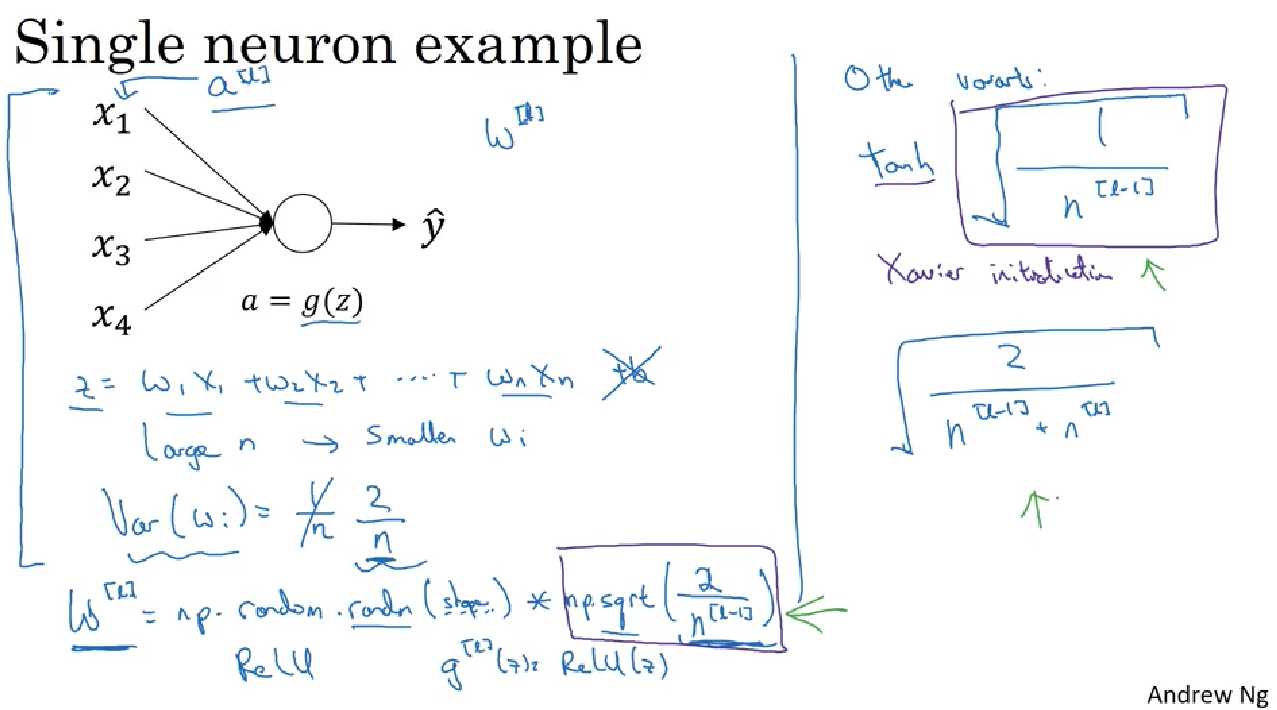

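A careful choice of how to initialize the weights is only a partial solution to this problem, but it helps a lot. The main goal is to keep each weight matrix W[l] from being much larger or much smaller than 1. Across many layers the weights are effectively multiplied together, which works like raising them to an exponential power, so keeping them close to 1 prevents vanishing and exploding.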
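The exponential behaviour can be made concrete with a worked example. The simplifying assumptions here are for illustration only and are not a realistic network: a linear activation $g(z) = z$, zero biases, and every hidden-layer matrix equal to a scaled identity $W^{[l]} = aI$ for $l = 1, \dots, L-1$. Under these assumptions:

$$
\hat{y} = W^{[L]} W^{[L-1]} \cdots W^{[2]} W^{[1]} x = W^{[L]} \, a^{L-1} x
$$

With $a = 1.5$ and $L - 1 = 50$ hidden layers, the factor is $1.5^{50} \approx 6.4 \times 10^{8}$. With $a = 0.5$ it is $0.5^{50} \approx 8.9 \times 10^{-16}$. Backpropagated gradients involve products of the same matrices, so they can grow or shrink exponentially in the same way.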

Weight Initialization for Deep Networks
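One common way to keep each layer's output on the same scale as its input is to scale randomly drawn weights by the layer's fan-in $n^{[l-1]}$:

- For ReLU, use $\mathrm{Var}(w) = 2/n^{[l-1]}$ (He initialization).
- For tanh, use $\mathrm{Var}(w) = 1/n^{[l-1]}$ (LeCun-style, often loosely called Xavier).

The sketch below compares unscaled $N(0, 1)$ weights with He scaling through a stack of ReLU layers. The helper names `init_weights` and `forward_rms`, the width of 256, and the depth of 20 layers are hypothetical choices for this demo.

```python
import numpy as np

def init_weights(layer_dims, scheme="he", seed=0):
    """Return {"W1", "b1", ...}; W[l] has shape (n[l], n[l-1])."""
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(layer_dims)):
        n_prev, n_cur = layer_dims[l - 1], layer_dims[l]
        if scheme == "he":        # Var(w) = 2 / n_prev, commonly paired with ReLU
            scale = np.sqrt(2.0 / n_prev)
        elif scheme == "lecun":   # Var(w) = 1 / n_prev, commonly paired with tanh
            scale = np.sqrt(1.0 / n_prev)
        elif scheme == "naive":   # unscaled standard normal, for comparison only
            scale = 1.0
        else:
            raise ValueError(f"unknown scheme: {scheme}")
        params[f"W{l}"] = rng.standard_normal((n_cur, n_prev)) * scale
        params[f"b{l}"] = np.zeros((n_cur, 1))
    return params

def forward_rms(params, X):
    """Run X through every layer with ReLU and return the RMS of the final activations."""
    A = X
    n_layers = len(params) // 2
    for l in range(1, n_layers + 1):
        Z = params[f"W{l}"] @ A + params[f"b{l}"]
        A = np.maximum(Z, 0.0)  # ReLU
    return float(np.sqrt(np.mean(A ** 2)))

layer_dims = [256] * 21  # input layer + 20 layers, all of width 256
X = np.random.default_rng(1).standard_normal((256, 100))  # 100 random examples

results = {}
for scheme in ("naive", "he"):
    params = init_weights(layer_dims, scheme)
    results[scheme] = forward_rms(params, X)
    print(f"{scheme:>5}: RMS of final activations = {results[scheme]:.3g}")
```

With unscaled weights, each ReLU layer multiplies the mean squared activation by roughly $n/2 = 128$, so the output explodes. With He scaling, that factor is about 1, so the output stays on the order of the input. Scaling by fan-in does not remove vanishing/exploding gradients entirely, but it keeps each $W^{[l]}$ from being systematically too large or too small.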

Ref:

1. Coursera