之前写过一篇 C# SqlBulkCopy 大量数据导入到数据库 的文章介绍了大量数据导入到数据库的高效方法。

这篇文章与之有些关联,在这之前所想的是做全量插入,每次run这个job就会清空然后插入,但是面对大量的数据,每次产生的流量是很大的,尤其是数据来自一些付费的API时,无疑增大了很多开销。所以我们只获取7天内更新的数据然后再选择未插入的数据插入到表中,对表作增量操作,由此达到控制成本的目的。

在此之前看过多篇博客都介绍了解决这个问题的方法,用到了一些零时表和触发器的知识,本人对此不胜了解,项目也比较紧,未研究,遂放弃,在此介绍一个相对简单的方法。

介绍其他人的正规解决方案一例,SqlBulkCopy与触发器,批量插入表(存在则更新,不存在则插入),有兴趣的可以研究下

//////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////////

想法:

我们将获取到的需要插入的数据先存放到一个临时表A_tem中(这个临时表是我每次执行就创建,结尾删除的表)

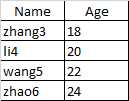

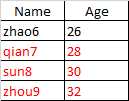

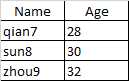

表A 表A_tem

创建一个存储过程

select * from A_tem except select * from A

由此得到需要插入的在表A中不存在的数据

result

-------------------------------------------------------------------------------------------

Code Sample:

string connString = ConfigurationManager.ConnectionStrings["connString"].ToString(); SqlConnection conn = new SqlConnection(connString); conn.Open(); Logger.LogMessage(string.Format("Createing Temporary table CsvFileVCQData_Tem......")); //Creater Temporary Table string sql = "CREATE TABLE CsvFileVCQData_Tem( [StringFormatted] [nvarchar](max) NULL, [ProjectName] [nvarchar](256) NULL, [ResID_MD4Hash] [nvarchar](max) NULL, [Wordcount] [numeric](18, 0) NULL, [CharacterCount] [numeric](18, 0) NULL, [SentanceCount] [numeric](18, 0) NULL, [SingleWord] [bit] NULL, [TwoWordsOnly] [bit] NULL, [HasPunctuation] [bit] NULL,[ContainsProductName] [bit]NULL, [HasPlaceholder] [bit] NULL, [EndsInColon] [bit] NULL, [HasVCQ] [bit] NULL,[HasDevComment] [bit] NULL, [HasLocComment] [bit] NULL,[HasScreenshot] [bit] NULL,[IsMobile] [bit] NULL,[IsDesktop] [bit] NULL,[IsWeb] [bit] NULL,[IsConsumer] [bit] NULL,[IsBusiness] [bit] NULL,[TotalSteelheadResults] [nvarchar](256) NULL,[SteelheadPasses] [numeric](18, 0) NULL,[SteelheadFailures] [numeric](18, 0) NULL, [S_Pass_Over_Total] [nvarchar](256) NULL, [S_Failed_Over_Total] [nvarchar](256) NULL,[ScoredLabels] [bit] NULL,[ScoredProbabilities] [nvarchar](256) NULL) ON[PRIMARY] TEXTIMAGE_ON[PRIMARY]"; Execute_Sql(sql); SqlBulkCopy sqlbulkcopy = new SqlBulkCopy(conn); sqlbulkcopy.DestinationTableName = "CsvFileVCQData_Tem"; //sqlbulkcopy.DestinationTableName = itemTable.TableName;//数据库中的表名 sqlbulkcopy.BulkCopyTimeout = 300; Logger.LogMessage(string.Format("Finding " + itemTable.Rows.Count + " pieces of data")); Logger.LogMessage(string.Format("Importing into the temporary table......")); DataTable datNew = itemTable.DefaultView.ToTable(false, new string[] { "StringFormatted","ProjectName","ResID_MD4Hash","Wordcount","CharacterCount", "SentanceCount","SingleWord","TwoWordsOnly","HasPunctuation","ContainsProductName", "HasPlaceholder","EndsInColon","HasVCQ","HasDevComment","HasLocComment", "HasScreenshot","IsMobile","IsDesktop","IsWeb", "IsConsumer","IsBusiness","Total Steelhead Results","SteelheadPasses","SteelheadFailures", "S_Pass_Over_Total","S_Failed_Over_Total","Scored Labels","Scored Probabilities" }); sqlbulkcopy.WriteToServer(datNew); DbHelper DBH = new DbHelper(); DataTable result = DBH.ExecuteDataTable("get_NewData");//调用存储过程 Logger.LogMessage(string.Format("Finding "+result.Rows.Count+" pieces of new data......")); sqlbulkcopy.DestinationTableName = "CsvFileVCQData"; sqlbulkcopy.WriteToServer(result); Logger.LogMessage(string.Format("Droping temporary table CsvFileVCQData_Tem......")); string sql_ = "drop table CsvFileVCQData_Tem"; Execute_Sql(sql_); conn.Close(); Logger.LogMessage(string.Format("Operation Done!"));