Weakly Supervised Deep Detection Networks, Hakan Bilen, Andrea Vedaldi

Highlights

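- Frames weakly supervised detection as a proposal-ranking problem: comparing the class scores of all proposals yields a reasonably correct ranking, which is consistent with how detection evaluation metrics are computed.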

Related work

The MIL strategy results in a non-convex optimization problem; in practice, solvers tend to get stuck in local optima, such that the quality of the solution strongly depends on the initialization.

- Several methods focus on developing various initialization strategies [19, 5, 32, 4].

- [19] propose a self-paced learning strategy

- [5] initialize object locations based on the objectness score.

- [4] propose a multi-fold split of the training data to escape local optima.

- Other methods focus on regularizing the optimization problem [31, 1].

- [31] apply Nesterov’s smoothing technique to the latent SVM formulation

- [1] propose a smoothed version of MIL that softly labels object instances instead of choosing the highest scoring ones.

- Another line of research in WSD is based on the idea of identifying the similarity between image parts.

- [31] propose a discriminative graph-based algorithm that selects a subset of windows such that each window is connected to its nearest neighbors in positive images.

- [32] extend this method to discover multiple co-occurring part configurations.

- [36] propose an iterative technique that applies latent semantic clustering via probabilistic Latent Semantic Analysis (pLSA).

- [2] propose a formulation that jointly learns a discriminative model and enforces the similarity of the selected object regions via a discriminative convex clustering algorithm.

Method

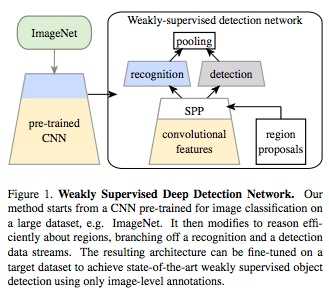

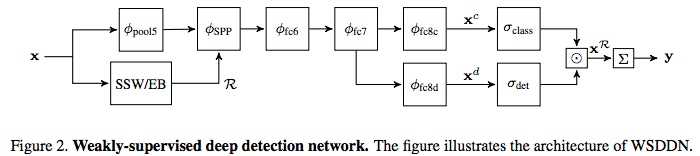

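The method is simple and easy to follow. It has three main steps (region feature extraction, a classification stream, and a detection stream), followed by the way the two streams' scores are combined: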

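- Feed the feature maps and the region proposals into a spatial pyramid pooling layer to extract a feature vector for each region, then pass these vectors through two fc layers.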

- Classification: the fc output passes through a softmax classifier, which predicts the class of each region.

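- Detection: the fc output also passes through a softmax, but unlike the classification stream, the normalization runs over the scores of all regions rather than over classes. Comparing regions against each other finds the region that carries the most information about a given class.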

- The score of region r for class c is the product of the classification and detection terms above.

- The image-level score for a class is the sum of that class's scores over all regions.
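The two-stream scoring described in the list above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names `softmax` and `wsddn_scores`, the `(R, C)` array layout (R regions, C classes), and the random logits standing in for the outputs of the two fc branches are all assumptions made for the example.

```python
import numpy as np

def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def wsddn_scores(cls_logits, det_logits):
    """Combine the two streams (hypothetical helper).

    cls_logits, det_logits: arrays of shape (R, C), one row per region
    and one column per class.
    """
    # Classification stream: normalize over classes, per region.
    sigma_class = softmax(cls_logits, axis=1)
    # Detection stream: normalize over regions, per class.
    sigma_det = softmax(det_logits, axis=0)
    # Region-level score: element-wise product of the two streams.
    region_scores = sigma_class * sigma_det
    # Image-level score: sum of region scores for each class.
    image_scores = region_scores.sum(axis=0)
    return region_scores, image_scores

# Toy input: 5 regions, 3 classes (random stand-ins for fc outputs).
rng = np.random.default_rng(0)
cls_logits = rng.normal(size=(5, 3))
det_logits = rng.normal(size=(5, 3))
region_scores, image_scores = wsddn_scores(cls_logits, det_logits)
```

Because the detection softmax sums to 1 over regions and every classification probability is below 1, each image-level score lies strictly between 0 and 1, so it can be read as a per-class probability and trained with image-level labels only.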

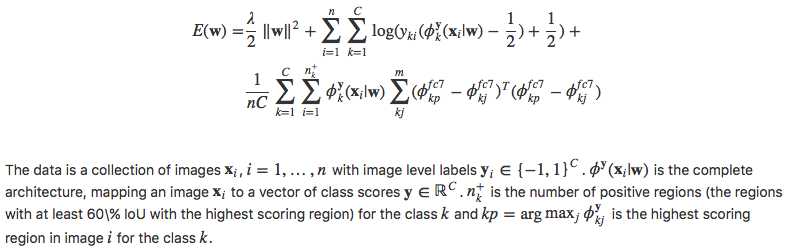

The training loss function is as follows:

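The last term is a regularisation term (I changed it slightly according to my own understanding, since the paper's notation seems a little off). Its purpose is to enforce smoothness of the solution by pulling features closer together, i.e. proposals close to the correct solution should also receive high scores.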
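One plausible reading of the two loss ingredients is sketched below: a binary log-loss on the image-level class scores plus the smoothness term on region features. Everything specific here is an assumption for illustration rather than something stated in these notes: the helper names, labels encoded as 0/1, the `(x1, y1, x2, y2)` box format, the IoU threshold of 0.6 for "nearby" boxes, and the weighting by the squared top score.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def image_level_loss(image_scores, labels, eps=1e-8):
    """Binary log-loss over classes; labels are 0/1 (assumed encoding)."""
    p = np.clip(image_scores, eps, 1 - eps)
    return -np.sum(labels * np.log(p) + (1 - labels) * np.log(1 - p))

def spatial_regulariser(region_scores, features, boxes, labels, iou_thresh=0.6):
    """Pull features of boxes near the top-scoring box towards its features.

    Only the single highest-scoring region per positive class is used,
    which mirrors the one-instance-per-class limitation of this term.
    iou_thresh=0.6 is an assumed value.
    """
    total = 0.0
    for c in np.flatnonzero(labels):
        top = np.argmax(region_scores[:, c])
        near = iou(boxes[top], boxes) >= iou_thresh
        diff = features[near] - features[top]
        total += 0.5 * region_scores[top, c] ** 2 * np.sum(diff ** 2)
    return total

# Toy example: 3 regions, 1 class, 2-D features.
boxes = np.array([[0, 0, 10, 10], [1, 1, 10, 10], [20, 20, 30, 30]], dtype=float)
features = np.array([[1.0, 0.0], [0.0, 0.0], [5.0, 5.0]])
region_scores = np.array([[0.6], [0.3], [0.1]])
labels = np.array([1])

cls_loss = image_level_loss(region_scores.sum(axis=0), labels)
reg = spatial_regulariser(region_scores, features, boxes, labels)
```

In the toy example the second box overlaps the top-scoring box with IoU 0.81, so its feature is pulled towards the top box's feature, while the distant third box is ignored.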

Experimental results

The paper reports four models that differ in their base network: S (VGG-F), M (VGG-M-1024), L (VGG-VD16), and Ens. (an ensemble of the first three).

- Ablation:

- Object proposal

- Baseline mAP: Selective Search S 31.1%, M 30.9%, L 24.3%, Ens. 33.3%

- Edge Box: +0~1.2%

- Edge Box + Edge Box Score: +1.8~5.9%

- Spatial regulariser (compared with Edge Box + Edge Box Score): mAP +1.2~4.4%

- VOC2007

- mAP on test: S +2.9%, M +3.3%, L +3.2%, Ens. +7.7% compared with [36] + context

- CorLoc on trainval: S +5.7%, M +7.6%, L +5%, Ens. +9.5% compared with [36]

- Classification AP on test: S +7.9% compared with VGG-F, M +6.5% compared with VGG-M-1024, L +0.4% compared with VGG-VD16, Ens. -0.3% compared with VGG-VD16

- VOC2010

- mAP on test: +8.8% compared with [4]

- CorLoc on trainval: +4.5% compared with [4]

Weaknesses

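An obvious weakness of this paper is that it only considers the case where an object class appears once per image (the regulariser constrains only the highest-scoring box and the boxes around it). This also shows up in the failure cases given in the paper.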