Highlights

- A good title gives readers a reason to start reading.

- The localization approach, global max pooling over sliding-window scores, is worth borrowing.

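Method

The goal of the paper is to design a weakly supervised classification network; note that the main aim is to improve classification. Because the paper dates from 2015, the method is fairly simple and basic.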

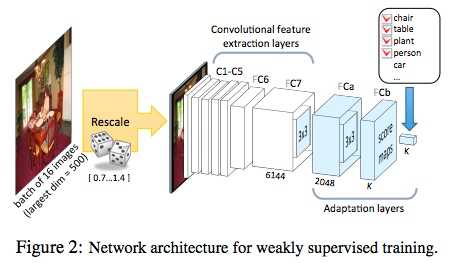

The paper makes the following three modifications to a classification network.

- Treat the fully connected layers as convolutions, which allows the network to handle nearly arbitrary-sized images as input.

  - The aim is to apply the network to bigger images in a sliding-window manner, thus extending its output to n × m × K, where n and m denote the number of sliding-window positions in the x- and y-direction of the image, respectively.

  - 3×h×w → convs → K×m×n (K: number of classes)

- Explicitly search for the highest-scoring object position in the image by adding a single global max-pooling layer at the output.

  - K×m×n → K×1×1

  - The max-pooling operation hypothesizes the location of the object in the image at the position with the maximum score.

- Use a cost function that can explicitly model multiple objects present in the image.
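The first two modifications can be illustrated with a minimal, pure-Python sketch. It is not the authors' implementation: the single-channel feature map, the window size `win`, and the helper names `sliding_fc_scores` and `global_max_pool` are assumptions made for illustration. It shows how applying a "fully connected" layer at every window position yields a K×m×n score map, and how global max pooling reduces it to K scores while also giving a hypothesized location per class.

```python
def sliding_fc_scores(feature_map, weights, biases, win):
    """Apply one 'fully connected' layer at every win x win window.

    feature_map: 2-D list (H x W), a single-channel stand-in for conv features.
    weights: list of K flattened win*win weight vectors, one per class.
    biases: list of K floats.
    Returns a K x m x n list of per-class score maps, where m and n are the
    numbers of window positions in the y- and x-direction.
    """
    height, width = len(feature_map), len(feature_map[0])
    m, n = height - win + 1, width - win + 1
    score_maps = []
    for w_k, b_k in zip(weights, biases):
        rows = []
        for i in range(m):
            row = []
            for j in range(n):
                # Flatten the window in the same order as the weight vector.
                window = [feature_map[i + di][j + dj]
                          for di in range(win) for dj in range(win)]
                row.append(sum(w * x for w, x in zip(w_k, window)) + b_k)
            rows.append(row)
        score_maps.append(rows)
    return score_maps


def global_max_pool(score_maps):
    """Reduce K x m x n score maps to K scores plus their argmax positions."""
    scores, positions = [], []
    for class_map in score_maps:
        best_score, best_pos = max(
            (value, (i, j))
            for i, row in enumerate(class_map)
            for j, value in enumerate(row)
        )
        scores.append(best_score)
        positions.append(best_pos)
    return scores, positions


# Toy example: a 3 x 4 feature map, K = 2 classes, 2 x 2 windows.
feature_map = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 2.0, 0.0],
    [0.0, 3.0, 4.0, 0.0],
]
weights = [[1.0, 1.0, 1.0, 1.0], [-1.0, 0.0, 0.0, 0.0]]
biases = [0.0, 0.5]
score_maps = sliding_fc_scores(feature_map, weights, biases, win=2)
scores, positions = global_max_pool(score_maps)
```

In this toy setup the window position with the largest response for the first class doubles as its location hypothesis, which is the sense in which localization comes out of a classification-only objective.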

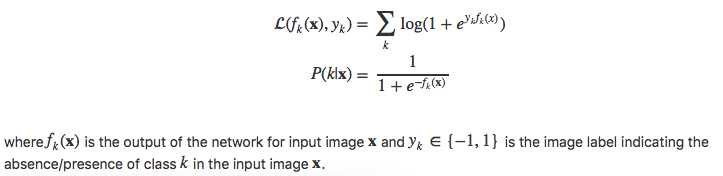

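Because an image may contain many objects, a multi-class classification loss is not suitable. The authors treat the task as multiple binary classification problems; the loss function and the classification scores are as follows.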
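As a rough illustration of the "multiple binary classification problems" formulation, the sketch below sums K independent binary logistic losses over the per-class scores. It assumes the standard logistic loss with labels encoded as -1/+1 and a sigmoid to turn each score into an independent probability; the function names are hypothetical, and the exact expressions in the paper's figure are not reproduced here.

```python
import math


def multilabel_logistic_loss(scores, labels):
    """Sum of K binary logistic losses: sum_k log(1 + exp(-y_k * f_k)).

    scores: list of K real-valued class scores f_k (e.g. after max pooling).
    labels: list of K labels y_k in {-1, +1} (assumed encoding).
    """
    total = 0.0
    for f_k, y_k in zip(scores, labels):
        z = -y_k * f_k
        # Numerically stable evaluation of log(1 + exp(z)).
        total += max(z, 0.0) + math.log1p(math.exp(-abs(z)))
    return total


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def class_probabilities(scores):
    """Map each class score to an independent probability in (0, 1)."""
    return [sigmoid(f_k) for f_k in scores]
```

Unlike a softmax, the per-class probabilities here do not need to sum to one, so several classes can be reported as present in the same image.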

Training

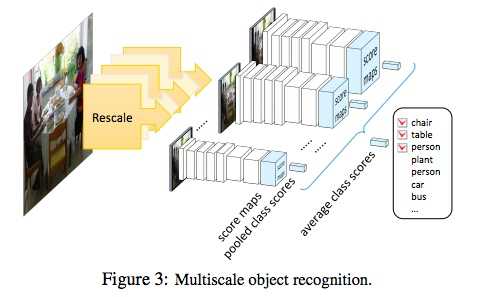

Multi-scale test

Experiments

Classification

- mAP on VOC 2012 test: +3.1% compared with [56]

- mAP on VOC 2012 test: +7.6% compared with K×1×1 output and single-scale training

- mAP on VOC: +2.6% compared with RCNN

- mAP on COCO: 62.8%

Localisation

- Metric: if the maximal response across scales falls within the ground truth bounding box of an object of the same class within 18 pixels tolerance, we label the predicted location as correct. If not, then we count the response as a false positive (it hit the background), and we also increment the false negative count (no object was found).

- Metric on VOC 2012 val: -0.3% compared with RCNN

- mAP on COCO: 41.2%
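The localization metric described above can be sketched as follows. This is an interpretation rather than the authors' evaluation code: "within 18 pixels tolerance" is assumed to mean that each ground-truth box is enlarged by 18 pixels on every side, and the function names and the `counts` dictionary are hypothetical.

```python
def is_correct_localization(point, boxes, tolerance=18):
    """Return True if point lies inside any box enlarged by `tolerance` pixels.

    point: (x, y) location of the maximal response across scales for a class.
    boxes: list of (x_min, y_min, x_max, y_max) ground-truth boxes of that class.
    """
    x, y = point
    return any(
        x_min - tolerance <= x <= x_max + tolerance
        and y_min - tolerance <= y <= y_max + tolerance
        for x_min, y_min, x_max, y_max in boxes
    )


def update_counts(counts, point, boxes, tolerance=18):
    """Update true-positive / false-positive / false-negative counts in place."""
    if is_correct_localization(point, boxes, tolerance):
        counts["tp"] += 1
    else:
        counts["fp"] += 1  # the response hit the background
        counts["fn"] += 1  # and no object was found
    return counts


# Toy usage: one ground-truth box and two predicted locations.
counts = {"tp": 0, "fp": 0, "fn": 0}
gt_boxes = [(20, 20, 100, 100)]
update_counts(counts, (110, 50), gt_boxes)  # 10 px outside the box: accepted
update_counts(counts, (120, 50), gt_boxes)  # 20 px outside the box: rejected
```

Because a miss increments both the false-positive and the false-negative count, a single misplaced response lowers precision and recall at the same time.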

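Drawbacks

- The metric used for the localization evaluation is not authoritative.

- Would replacing max pooling with average pooling work better when an image contains multiple instances?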