package it.xuegod.hadoop.hdfs;

import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.net.URLConnection;

import org.apache.hadoop.fs.FsUrlStreamHandlerFactory;
import org.junit.Test;

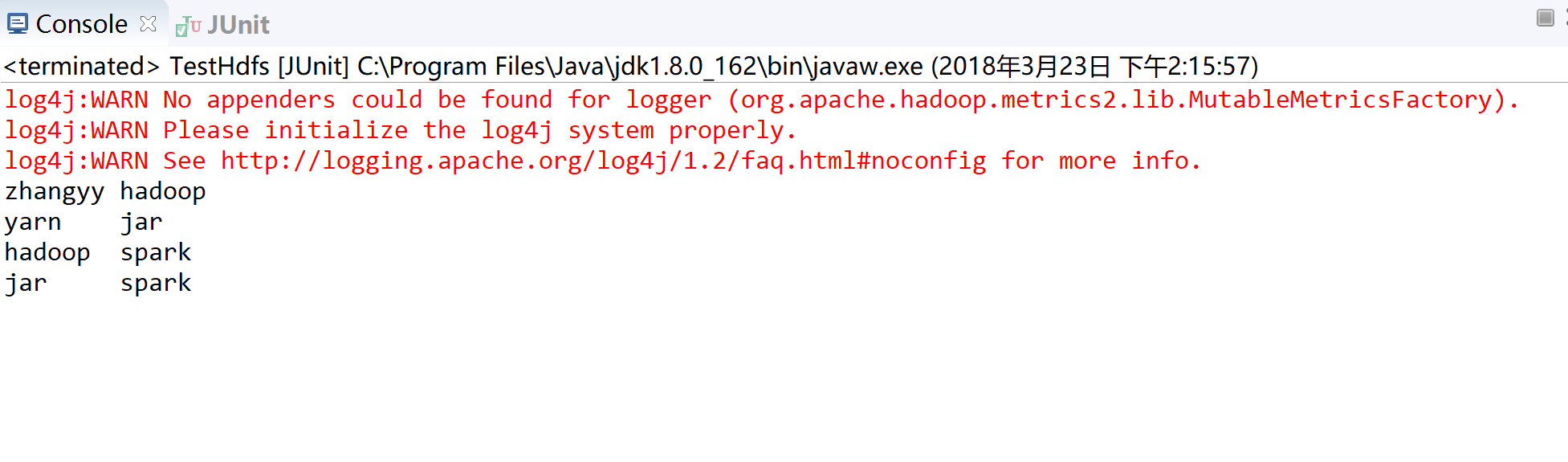

public class TestHdfs {

    @Test
    public void readFile() throws IOException {
        // Register the hdfs:// scheme with java.net.URL; this may be called only once per JVM.
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
        URL url = new URL("hdfs://172.17.100.11:8020/input/file1");
        URLConnection conn = url.openConnection();
        InputStream is = conn.getInputStream();
        // available() is only an estimate, so read in a loop until end of stream.
        byte[] buf = new byte[1024];
        int len;
        while ((len = is.read(buf)) != -1) {
            System.out.write(buf, 0, len);
        }
        is.close();
        System.out.flush();
    }
}
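
When a stream is read into memory, `InputStream.available()` is only an estimate of how many bytes can be read without blocking, and a single `read(buf)` call may return fewer bytes than the buffer holds. The following is a minimal sketch of draining a stream with a loop. It uses only the JDK, with an in-memory stream standing in for HDFS; the class `ReadFullySketch` and the helpers `readFully` and `trickle` are hypothetical names introduced for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;

public class ReadFullySketch {

    /** Hypothetical helper: drains a stream using a fixed-size buffer. */
    static byte[] readFully(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[1024];
        int len;
        // read() returns the number of bytes actually read, or -1 at end of stream.
        while ((len = in.read(buf)) != -1) {
            out.write(buf, 0, len);
        }
        return out.toByteArray();
    }

    /**
     * Hypothetical test stream: returns at most 3 bytes per read and reports
     * available() == 0, mimicking a source that delivers data in small pieces.
     */
    static InputStream trickle(byte[] data) {
        return new ByteArrayInputStream(data) {
            @Override
            public synchronized int read(byte[] b, int off, int len) {
                return super.read(b, off, Math.min(len, 3));
            }

            @Override
            public synchronized int available() {
                return 0;
            }
        };
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        try (InputStream in = trickle(data)) {
            // Prints "hello hdfs" even though available() reported 0.
            System.out.println(new String(readFully(in), StandardCharsets.UTF_8));
        }
    }
}
```

A buffer sized from `available()` would be empty for this stream, whereas the loop recovers the whole content.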

package it.xuegod.hadoop.hdfs;

import java.io.ByteArrayOutputStream;
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

/**
 * Read a file.
 *
 * @author zhangyy
 */

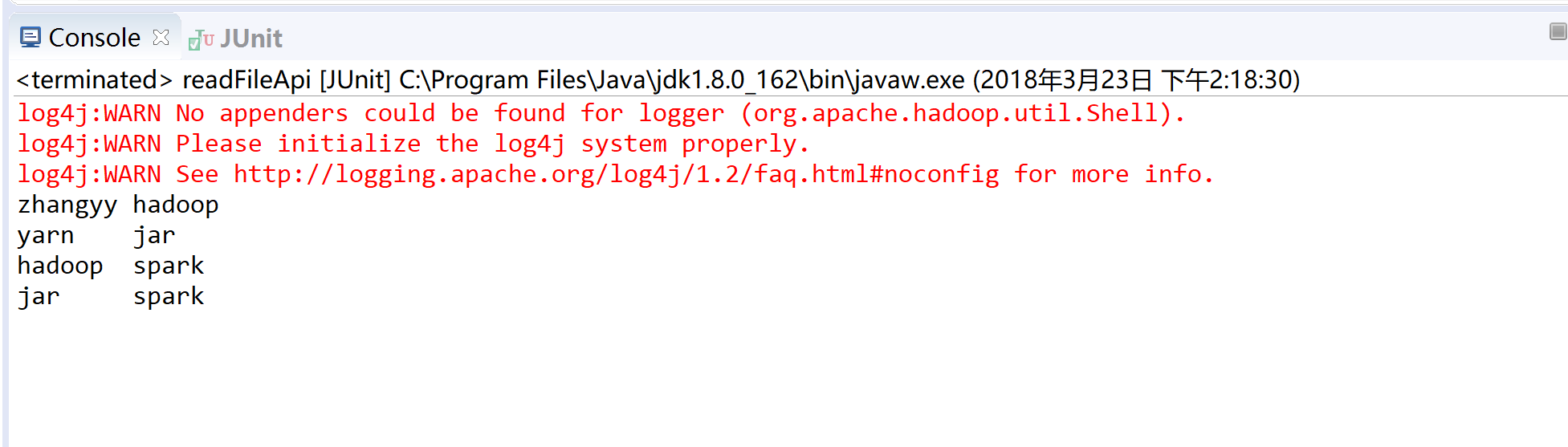

public class readFileApi {

    @Test
    public void readFileByApi() throws IOException {
        Configuration conf = new Configuration();
        // Point the client at the NameNode.
        conf.set("fs.defaultFS", "hdfs://172.17.100.11:8020");
        FileSystem fs = FileSystem.get(conf);
        Path p = new Path("/input/file1");
        FSDataInputStream fis = fs.open(p);
        byte[] buf = new byte[1024];
        int len = -1;
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        // Copy the file into memory one buffer at a time.
        while ((len = fis.read(buf)) != -1) {
            baos.write(buf, 0, len);
        }
        fis.close();
        baos.close();
        System.out.println(new String(baos.toByteArray()));
    }
}

package it.xuegod.hadoop.hdfs;

import java.io.ByteArrayOutputStream;
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.junit.Test;

/**
 * Read a file.
 *
 * @author zhangyy
 */

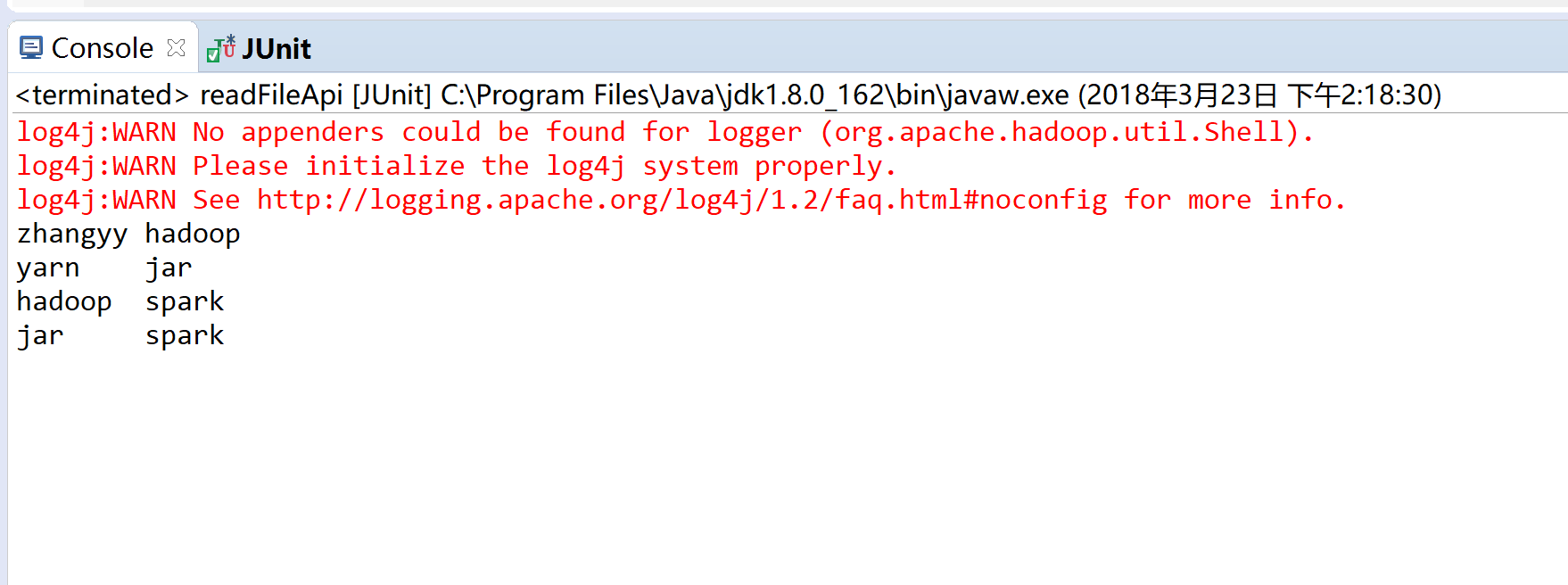

public class readFileApi2 {

    @Test
    public void readFileByApi() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://172.17.100.11:8020");
        FileSystem fs = FileSystem.get(conf);
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        Path p = new Path("/input/file1");
        FSDataInputStream fis = fs.open(p);
        // copyBytes with a buffer size does not close the streams, so close the input explicitly.
        IOUtils.copyBytes(fis, baos, 1024);
        IOUtils.closeStream(fis);
        System.out.println(new String(baos.toByteArray()));
    }
}

package it.xuegod.hadoop.hdfs;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

/**
 * Create a directory.
 *
 * @author zhangyy
 */

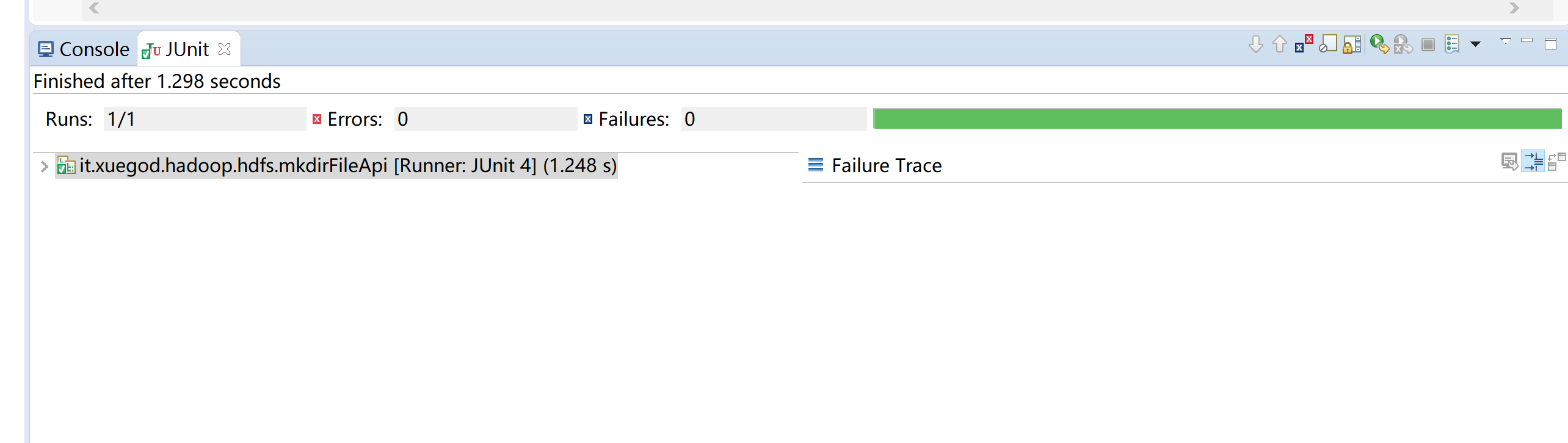

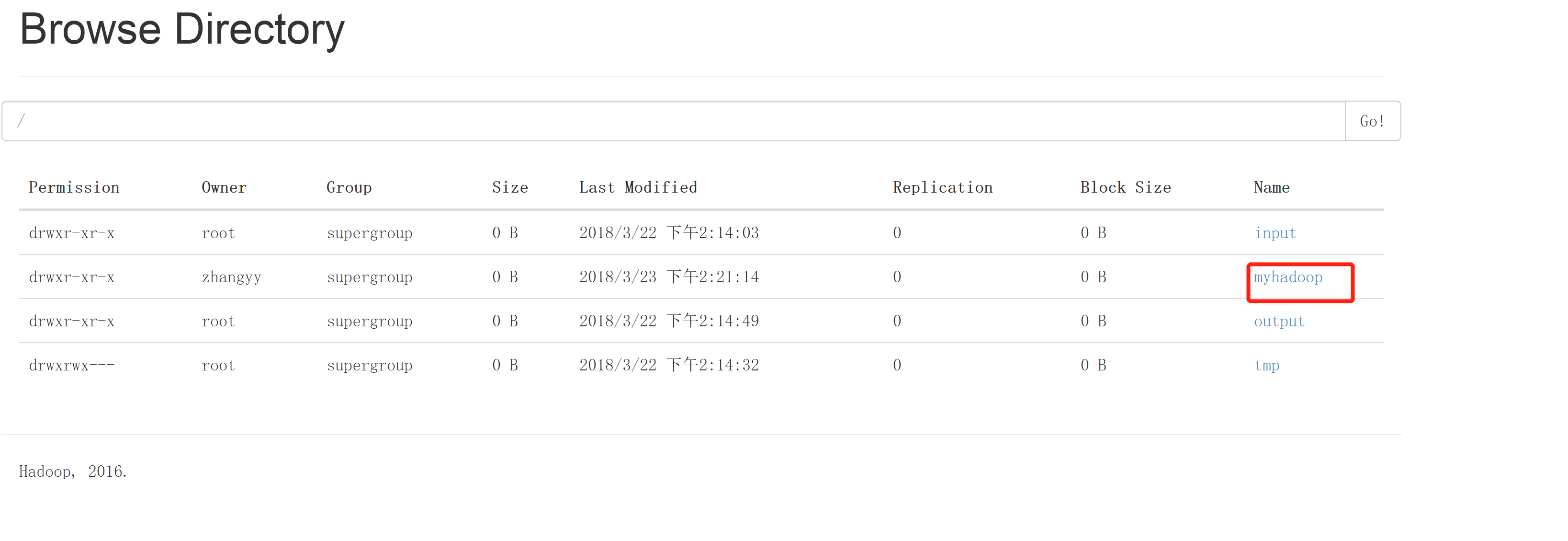

public class mkdirFileApi {

    @Test
    public void mkdirFileByApi() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://172.17.100.11:8020");
        FileSystem fs = FileSystem.get(conf);
        fs.mkdirs(new Path("/myhadoop"));
    }
}

### 1.4: Writing a file (put)

package it.xuegod.hadoop.hdfs;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

/**
 * Write a file.
 *
 * @author zhangyy
 */

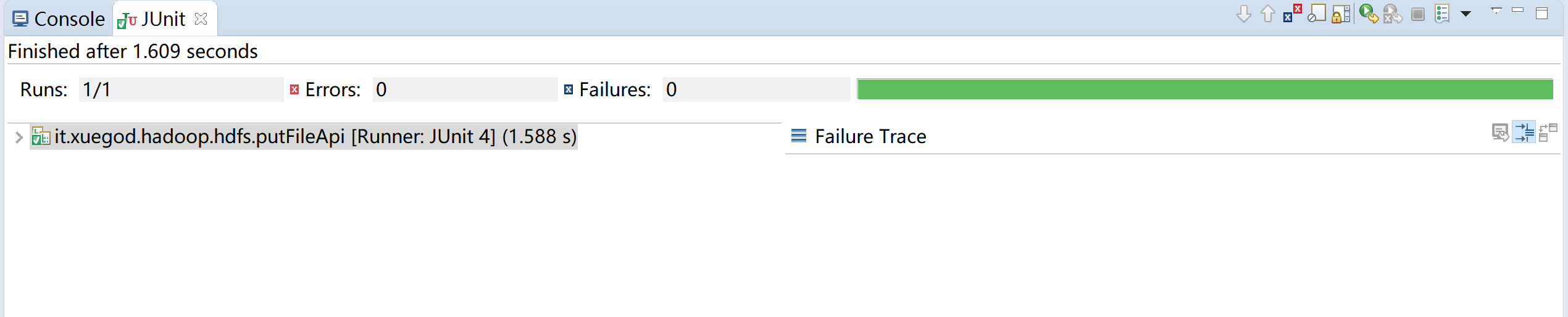

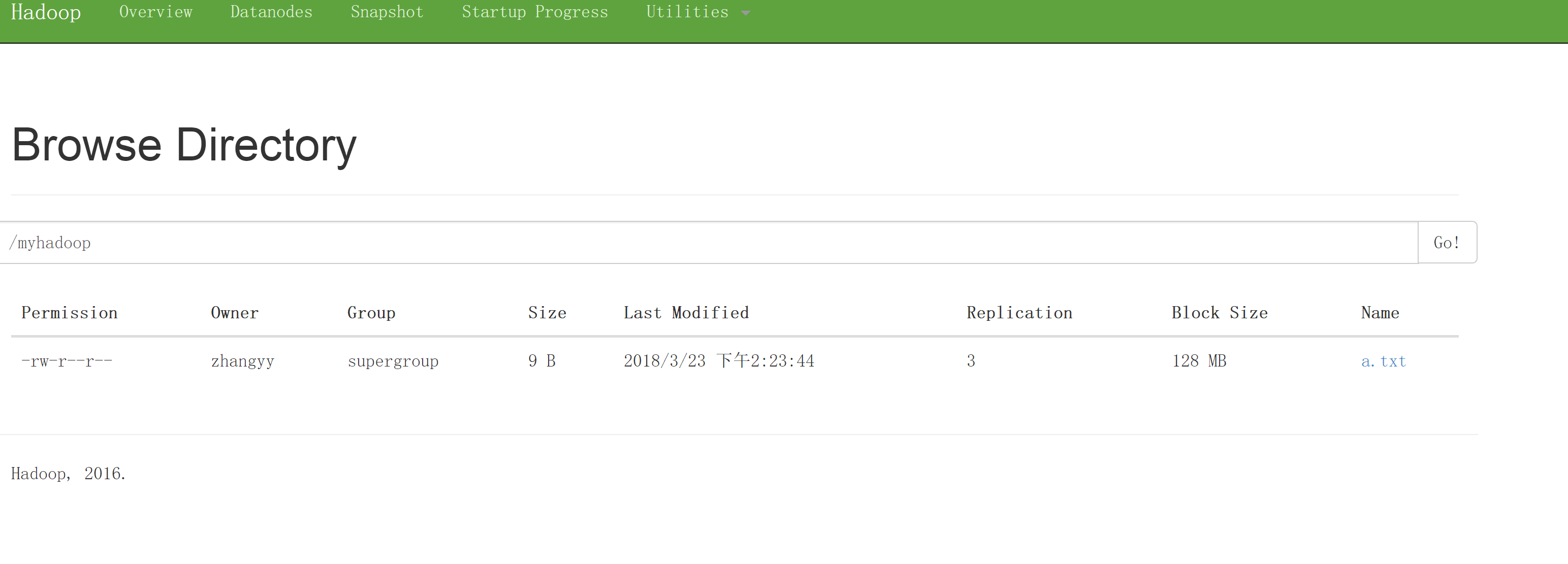

public class putFileApi {

    @Test
    public void putFileByApi() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://172.17.100.11:8020");
        FileSystem fs = FileSystem.get(conf);
        FSDataOutputStream out = fs.create(new Path("/myhadoop/a.txt"));
        out.write("helloword".getBytes());
        out.close();
    }
}
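
One detail worth noting when writing text to a file: `String.getBytes()` with no argument encodes with the JVM's default charset, which can differ between the client machine and whatever later reads the file. A minimal JDK-only sketch of naming the charset explicitly on both sides follows; the class `CharsetSketch` and its `encode`/`decode` helpers are hypothetical names, and the choice of UTF-8 is an assumption rather than something the original code specifies.

```java
import java.nio.charset.StandardCharsets;

public class CharsetSketch {

    /** Hypothetical helper: encode text with an explicit charset (UTF-8 assumed). */
    static byte[] encode(String text) {
        return text.getBytes(StandardCharsets.UTF_8);
    }

    /** Hypothetical helper: decode with the same charset that was used to encode. */
    static String decode(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Same payload as the put example; these bytes are what would be passed to out.write(...).
        byte[] payload = encode("helloword");
        System.out.println(payload.length + " bytes -> " + decode(payload));
    }
}
```

The same pairing applies on the read side, where `new String(bytes)` would otherwise also fall back to the default charset.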

package it.xuegod.hadoop.hdfs;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

/**
 * Remove a file or directory.
 *
 * @author zhangyy
 */

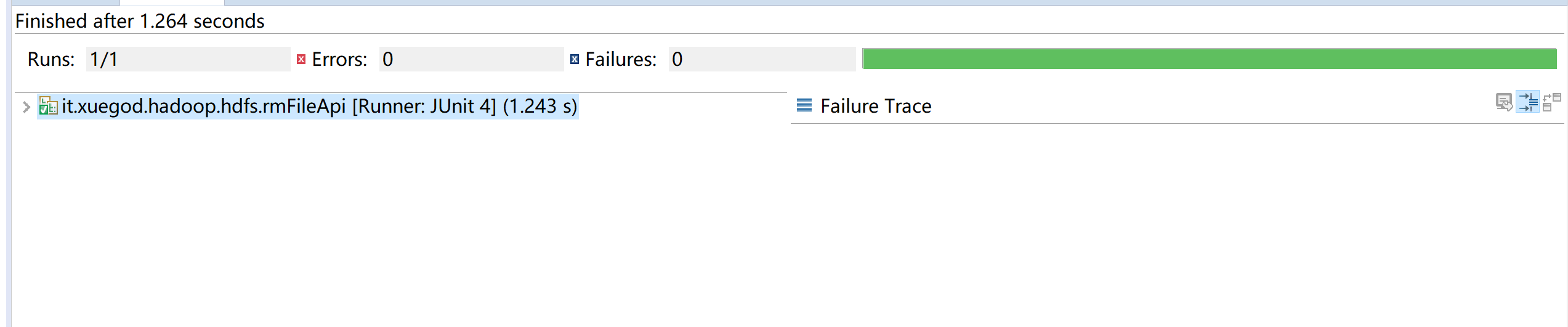

public class rmFileApi {

    @Test
    public void rmFileByApi() throws IOException {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://172.17.100.11:8020");
        FileSystem fs = FileSystem.get(conf);
        Path p = new Path("/myhadoop");
        // The second argument enables recursive deletion of the directory's contents.
        fs.delete(p, true);
    }
}

Original article: http://blog.51cto.com/flyfish225/2096394