
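Lucene.net is the .NET port of Lucene. It was for years a popular open-source toolkit for building full-text search. It is not a complete search engine but a search-engine framework that provides a complete query engine and indexing engine.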

Component versions used in the example

Lucene.Net: 3.0.3.0

PanGu segmenter (盘古分词): 2.4.0.0

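Lucene.net ships with built-in analyzers, all of which derive from the Analyzer class. For Chinese text, however, they split the input into single characters, which is clearly not good enough for everyday use, so a third-party segmenter such as PanGu is commonly used instead.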
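The character-by-character behaviour can be checked with a small console sketch. It assumes Lucene.Net 3.0.3 is referenced and uses StandardAnalyzer as the built-in analyzer; the class name `AnalyzerDemo` and the helper `Tokenize` are made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using Lucene.Net.Analysis;
using Lucene.Net.Analysis.Standard;
using Lucene.Net.Analysis.Tokenattributes;

public static class AnalyzerDemo
{
    // Runs the text through the given analyzer and collects the tokens it emits.
    public static List<string> Tokenize(Analyzer analyzer, string text)
    {
        var tokens = new List<string>();
        using (TokenStream stream = analyzer.TokenStream("", new StringReader(text)))
        {
            ITermAttribute term = stream.AddAttribute<ITermAttribute>();
            while (stream.IncrementToken())
            {
                tokens.Add(term.Term);
            }
        }
        return tokens;
    }

    public static void Main()
    {
        // StandardAnalyzer is one of the analyzers bundled with Lucene.Net.
        using (var analyzer = new StandardAnalyzer(Lucene.Net.Util.Version.LUCENE_30))
        {
            List<string> tokens = Tokenize(analyzer, "三国演义");
            Console.WriteLine(string.Join("|", tokens.ToArray()));
        }
    }
}
```

With StandardAnalyzer each Chinese character is expected to come back as its own token. Passing a `PanGuAnalyzer` to the same `Tokenize` helper should return dictionary words instead, provided the PanGu dictionaries are available.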

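The PanGu download contains the following files:

PanGu.dll: the core component.

PanGu.Lucene.Analyzer.dll: the adapter that plugs PanGu into Lucene.net. A dictionary appears to be embedded in this DLL: if no dictionary is configured, the embedded one is used; otherwise the configured dictionary is loaded.

PanGu.HighLight.dll: the highlighting component.

PanGu.xml: the XML configuration file. Its DictionaryPath setting names the directory that holds the dictionary files and may be either a relative or an absolute path.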

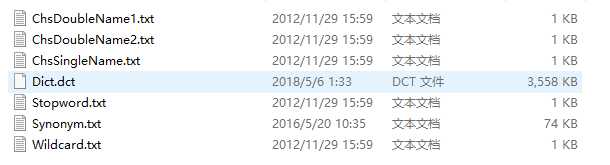

Required dictionary files:

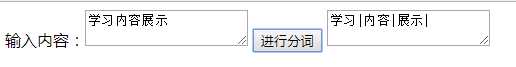

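For simple segmentation, referencing PanGu.Lucene.Analyzer.dll is enough. For example:

    // Namespaces used: System.IO, System.Text, Lucene.Net.Analysis,
    // Lucene.Net.Analysis.Tokenattributes, Lucene.Net.Analysis.PanGu
    [HttpPost]
    public ActionResult Cut_2(string str)
    {
        // Segment the input with PanGu and join the tokens with "|"
        StringBuilder sb = new StringBuilder();
        Analyzer analyzer = new PanGuAnalyzer();
        TokenStream tokenStream = analyzer.TokenStream("", new StringReader(str));
        ITermAttribute item = tokenStream.GetAttribute<ITermAttribute>();
        while (tokenStream.IncrementToken())
        {
            sb.Append(item.Term + "|");
        }
        tokenStream.Dispose(); // release the token stream
        analyzer.Close();
        return Content(sb.ToString());
    }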

The result looks like this:

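Project setup:

1. Initialize PanGu

    // Load the configuration from an explicit path
    PanGu.Segment.Init(@"D:\seo_lucene.net_demo\bin\PanGu\PanGu.xml");
    // or use the default configuration
    PanGu.Segment.Init();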

2. Create the index

    // Index location
    string indexPath = @"D:\学习代码\Seo-Lucene.Net\seo_lucene.net_demo\bin\LuceneIndex";

    /// <summary>
    /// Index directory
    /// </summary>
    public Lucene.Net.Store.Directory directory
    {
        get
        {
            // Create the index folder if it does not exist yet
            if (!System.IO.Directory.Exists(indexPath))
            {
                System.IO.Directory.CreateDirectory(indexPath);
            }
            // Note: every access opens a new FSDirectory, so read the property once
            // per operation and dispose that same instance when done
            FSDirectory directory = FSDirectory.Open(new DirectoryInfo(indexPath), new NativeFSLockFactory());
            return directory;
        }
    }

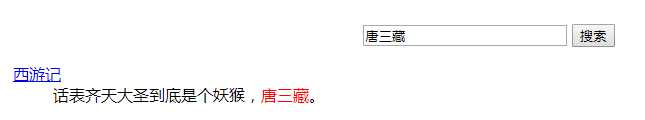

    /// <summary>
    /// Create the index
    /// </summary>
    private void CreateIndex()
    {
        // Read the property once so the writer and the final Dispose() share one instance
        Lucene.Net.Store.Directory dir = directory;
        bool isExists = IndexReader.IndexExists(dir); // does the index already exist?
        if (isExists)
        {
            // If an abnormal exit left the index directory locked, unlock it first.
            // Lucene.Net locks the index automatically before each write and unlocks it on close.
            // This only clears a stale lock; it is not safe while another thread is still writing.
            if (IndexWriter.IsLocked(dir))
            {
                IndexWriter.Unlock(dir);
            }
        }
        // Third IndexWriter argument: true recreates the index, false appends to the existing one.
        // Pass true the first time the index is built; afterwards appending is enough.
        IndexWriter writer = new IndexWriter(dir, new PanGuAnalyzer(), !isExists, Lucene.Net.Index.IndexWriter.MaxFieldLength.UNLIMITED);
        // Field.Store.YES: store the original value so it can be read back from a hit
        // Field.Index.ANALYZED: index the value after running it through the analyzer
        // Field.Index.NOT_ANALYZED: index the value as a single term, without analysis
        // One Document corresponds to one record
        // All custom field values are strings
        try
        {
            // The statements below delete any document that already has the given id,
            // which prevents duplicates; if none exists, zero documents are deleted
            //writer.DeleteDocuments(new Term("id", "1"));
            //writer.DeleteDocuments(new Term("id", "2"));
            //writer.DeleteDocuments(new Term("id", "3"));
            // Or delete every document in the index
            writer.DeleteAll();
            writer.Commit();
            // HasDeletions() reports whether the index holds documents marked as deleted
            var IsSuccess = writer.HasDeletions();

            Document doc = new Document();
            doc.Add(new Field("id", "1", Field.Store.YES, Field.Index.NOT_ANALYZED));
            doc.Add(new Field("title", "三国演义", Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
            doc.Add(new Field("Content", "刘备、云长、翼德点精兵三千,往北海郡进发。", Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
            writer.AddDocument(doc);

            doc = new Document();
            doc.Add(new Field("id", "2", Field.Store.YES, Field.Index.NOT_ANALYZED));
            doc.Add(new Field("title", "西游记", Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
            doc.Add(new Field("Content", "话表齐天大圣到底是个妖猴,唐三藏。", Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
            writer.AddDocument(doc);

            doc = new Document();
            doc.Add(new Field("id", "3", Field.Store.YES, Field.Index.NOT_ANALYZED));
            doc.Add(new Field("title", "水浒传", Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
            doc.Add(new Field("Content", "梁山泊义士尊晁盖 郓城县月夜走刘唐。", Field.Store.YES, Field.Index.ANALYZED, Lucene.Net.Documents.Field.TermVector.WITH_POSITIONS_OFFSETS));
            writer.AddDocument(doc);
        }
        finally
        {
            // Exceptions propagate unchanged, so their stack traces are preserved
            writer.Optimize();
            writer.Dispose();
            dir.Dispose();
        }
    }
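The commented-out `DeleteDocuments` calls above implement "delete by id, then add". As a possible alternative, `IndexWriter.UpdateDocument` does both in one call. The following is a minimal sketch only: it uses an in-memory `RAMDirectory` and `StandardAnalyzer` so that it needs neither a disk path nor the PanGu dictionaries, and the names `UpsertDemo` and `MakeDoc` are invented for illustration.

```csharp
using System;
using Lucene.Net.Analysis.Standard;
using Lucene.Net.Documents;
using Lucene.Net.Index;
using Lucene.Net.Store;

public static class UpsertDemo
{
    // Builds a document with the same id/title layout as the example above.
    static Document MakeDoc(string id, string title)
    {
        Document doc = new Document();
        doc.Add(new Field("id", id, Field.Store.YES, Field.Index.NOT_ANALYZED));
        doc.Add(new Field("title", title, Field.Store.YES, Field.Index.ANALYZED));
        return doc;
    }

    public static void Main()
    {
        // Assumption: in-memory index and StandardAnalyzer stand in for FSDirectory and PanGuAnalyzer.
        var dir = new RAMDirectory();
        var analyzer = new StandardAnalyzer(Lucene.Net.Util.Version.LUCENE_30);
        using (var writer = new IndexWriter(dir, analyzer, true, IndexWriter.MaxFieldLength.UNLIMITED))
        {
            // UpdateDocument deletes every document matching the term, then adds the new one.
            writer.UpdateDocument(new Term("id", "1"), MakeDoc("1", "三国演义"));
            writer.UpdateDocument(new Term("id", "1"), MakeDoc("1", "三国演义"));
            writer.Commit();
        }
        using (IndexReader reader = IndexReader.Open(dir, true))
        {
            // Number of live (non-deleted) documents in the index.
            Console.WriteLine(reader.NumDocs());
        }
    }
}
```

Because the second call replaces the document written by the first, the reader should report a single live document. This only works when the id field is indexed with `Field.Index.NOT_ANALYZED`, as it is in the example above, so that the term matches the stored id exactly.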

3. Search

    /// <summary>
    /// Search
    /// </summary>
    /// <param name="txtSearch">Search string</param>
    /// <param name="id">Current page number</param>
    /// <returns></returns>
    [HttpGet]
    public ActionResult Search(string txtSearch, int id = 1)
    {
        int pageNum = 1; // number of results per page
        int currentPageNo = id;
        IndexSearcher search = new IndexSearcher(directory, true);
        BooleanQuery bQuery = new BooleanQuery();
        List<Article> list = new List<Article>();
        // Total number of hits
        int recCount = 0;
        // Pre-process the search keywords
        txtSearch = LuceneHelper.GetKeyWordsSplitBySpace(txtSearch);
        // Query several fields at once: title and Content
        MultiFieldQueryParser parser = new MultiFieldQueryParser(Lucene.Net.Util.Version.LUCENE_30, new string[] { "title", "Content" }, new PanGuAnalyzer());
        Query query = parser.Parse(txtSearch);
        // Occur.SHOULD means OR, Occur.MUST means AND
        bQuery.Add(query, Occur.MUST);
        if (bQuery != null && bQuery.GetClauses().Length > 0)
        {
            // Container for the search results
            TopScoreDocCollector collector = TopScoreDocCollector.Create(1000, true);
            // Run the query and put the hits into the collector
            search.Search(bQuery, null, collector);
            recCount = collector.TotalHits;
            // Take the slice of hits that belongs to the current page
            ScoreDoc[] docs = collector.TopDocs((currentPageNo - 1) * pageNum, pageNum).ScoreDocs;
            // Walk through the hits
            for (int i = 0; i < docs.Length; i++)
            {
                // Only fields stored with Field.Store.YES can be read back with Get
                Document doc = search.Doc(docs[i].Doc);
                list.Add(new Article()
                {
                    Id = doc.Get("id"),
                    Title = LuceneHelper.CreateHightLight(txtSearch, doc.Get("title")), // highlighted
                    Content = LuceneHelper.CreateHightLight(txtSearch, doc.Get("Content")) // highlighted
                });
            }
        }
        search.Dispose(); // release the searcher and the reader it opened
        // Paging
        PagedList<Article> plist = new PagedList<Article>(list, currentPageNo, pageNum, recCount);
        plist.TotalItemCount = recCount;
        plist.CurrentPageIndex = currentPageNo;
        return View("Index", plist);
    }
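The `Search` action relies on two `LuceneHelper` methods that the post does not list. The following is a hypothetical reconstruction, not the author's code: it assumes the `PanGu.Segment.DoSegment` and `PanGu.HighLight.Highlighter` APIs from PanGu 2.4 and that `PanGu.Segment.Init` has already been called. The red `<font>` markup and the fragment size of 50 are arbitrary choices.

```csharp
using System.Collections.Generic;
using System.Text;
using PanGu;
using PanGu.HighLight;

// Hypothetical helper: the method names match the calls in Search, the bodies are assumptions.
public static class LuceneHelper
{
    // Segments the raw input with PanGu and joins the words with spaces,
    // so that the query parser sees one term per word.
    public static string GetKeyWordsSplitBySpace(string keywords)
    {
        StringBuilder result = new StringBuilder();
        ICollection<WordInfo> words = new Segment().DoSegment(keywords);
        foreach (WordInfo word in words)
        {
            if (word == null)
            {
                continue;
            }
            result.Append(word.Word).Append(' ');
        }
        return result.ToString().Trim();
    }

    // Wraps the keywords found in content with an HTML tag for display.
    public static string CreateHightLight(string keywords, string content)
    {
        SimpleHTMLFormatter formatter = new SimpleHTMLFormatter("<font color=\"red\">", "</font>");
        Highlighter highlighter = new Highlighter(formatter, new Segment());
        highlighter.FragmentSize = 50; // assumed summary length, in characters
        string fragment = highlighter.GetBestFragment(keywords, content);
        // Fall back to the original text when nothing was highlighted.
        return string.IsNullOrEmpty(fragment) ? content : fragment;
    }
}
```

Joining the segmented words with spaces hands the query parser one term per word. User input containing query-syntax characters such as `:` or `*` would still need escaping, for example with `QueryParser.Escape`, before it is parsed.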

The result looks like this:


Original post: https://www.cnblogs.com/qiuguochao/p/9017154.html