Tags: windows, result, problem, tor, cti, nat, cache, official documentation, callbacks

Environment: Spark 2.1.0, Hadoop 2.7.5. The code runs on Windows 7.

When my Spark program wrote its output to files with `saveAsTextFile`, I got this error:

```text
18/06/12 20:13:34 ERROR Utils: Aborting task
java.lang.UnsatisfiedLinkError: org.apache.hadoop.util.NativeCrc32.nativeComputeChunkedSumsByteArray(II[BI[BIILjava/lang/String;JZ)V
	at org.apache.hadoop.util.NativeCrc32.nativeComputeChunkedSumsByteArray(Native Method)
	at org.apache.hadoop.util.NativeCrc32.calculateChunkedSumsByteArray(NativeCrc32.java:86)
	at org.apache.hadoop.util.DataChecksum.calculateChunkedSums(DataChecksum.java:430)
	at org.apache.hadoop.fs.FSOutputSummer.writeChecksumChunks(FSOutputSummer.java:202)
	at org.apache.hadoop.fs.FSOutputSummer.flushBuffer(FSOutputSummer.java:163)
	at org.apache.hadoop.fs.FSOutputSummer.flushBuffer(FSOutputSummer.java:144)
	at org.apache.hadoop.fs.FSOutputSummer.write1(FSOutputSummer.java:135)
	at org.apache.hadoop.fs.FSOutputSummer.write(FSOutputSummer.java:110)
	at org.apache.hadoop.fs.FSDataOutputStream$PositionCache.write(FSDataOutputStream.java:58)
	at java.io.DataOutputStream.write(DataOutputStream.java:107)
	at org.apache.hadoop.mapred.TextOutputFormat$LineRecordWriter.writeObject(TextOutputFormat.java:81)
	at org.apache.hadoop.mapred.TextOutputFormat$LineRecordWriter.write(TextOutputFormat.java:102)
	at org.apache.spark.internal.io.SparkHadoopWriter.write(SparkHadoopWriter.scala:94)
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$saveAsHadoopDataset$1$$anonfun$12$$anonfun$apply$4.apply$mcV$sp(PairRDDFunctions.scala:1139)
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$saveAsHadoopDataset$1$$anonfun$12$$anonfun$apply$4.apply(PairRDDFunctions.scala:1137)
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$saveAsHadoopDataset$1$$anonfun$12$$anonfun$apply$4.apply(PairRDDFunctions.scala:1137)
	at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1371)
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$saveAsHadoopDataset$1$$anonfun$12.apply(PairRDDFunctions.scala:1145)
	at org.apache.spark.rdd.PairRDDFunctions$$anonfun$saveAsHadoopDataset$1$$anonfun$12.apply(PairRDDFunctions.scala:1125)
	at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)
	at org.apache.spark.scheduler.Task.run(Task.scala:108)
	at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:338)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
18/06/12 20:13:34 ERROR Executor: Exception in task 0.0 in stage 11.0 (TID 16)
```

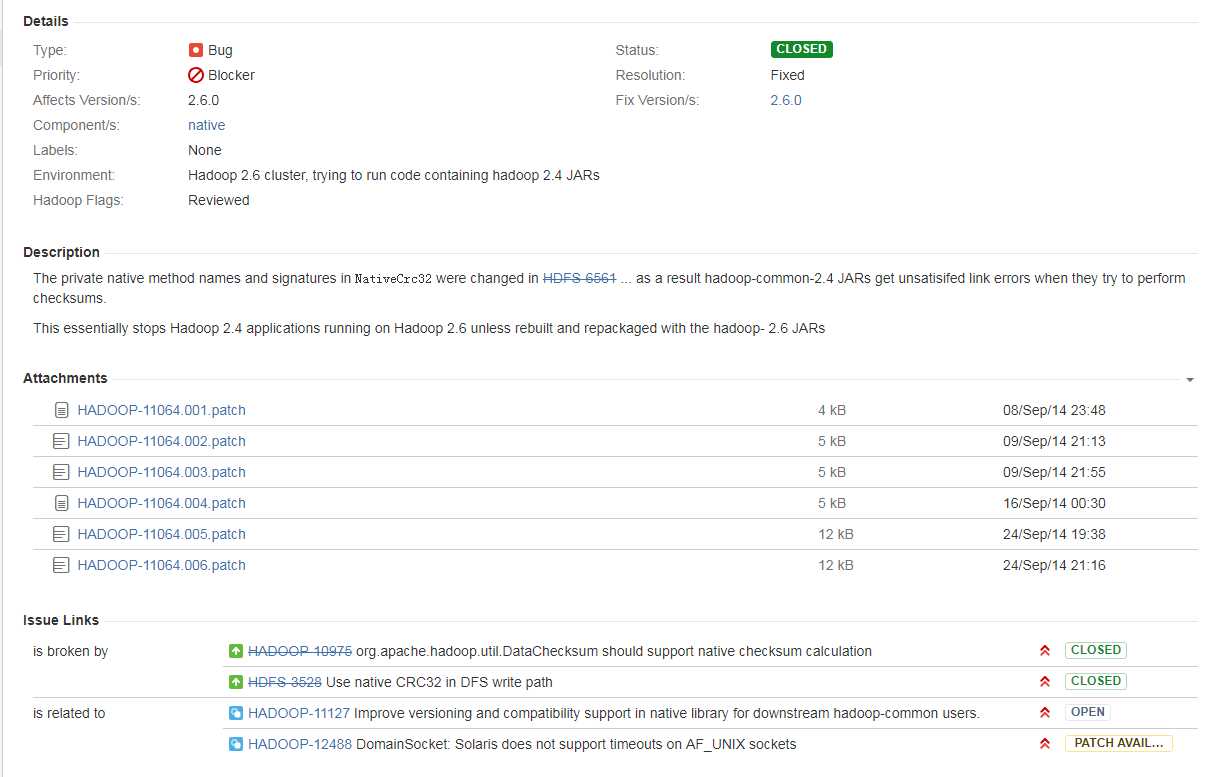

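Searching mostly turned up posts about errors when accessing Hadoop remotely from Windows, which did not match my case. In the end I found the official Hadoop issue HADOOP-11064.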

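Later I found the solution online: the error is caused by a hadoop.dll version mismatch. Hadoop versions before 2.4 and after 2.4 need different hadoop.dll builds, so you have to pick the right one (matching both the Hadoop version and the operating system's bitness) and replace the copy in Hadoop/bin with it.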
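Replacing the DLL only helps if the JVM actually loads the new copy; an old copy left elsewhere can still be picked up. A minimal diagnostic sketch, assuming Hadoop reads its home from the `hadoop.home.dir` system property or the `HADOOP_HOME` environment variable, and that native libraries are resolved through `java.library.path` (which on Windows normally includes the `PATH` directories). The class name `FindHadoopDll` is hypothetical. It lists every `hadoop.dll` found in `HADOOP_HOME\bin` and on `java.library.path`.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.InvalidPathException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class FindHadoopDll {

    // Returns the path of `fileName` in every directory of `dirs` that contains it,
    // in the order of `dirs`. Blank, duplicate, or invalid entries are skipped.
    static List<Path> findCopies(List<String> dirs, String fileName) {
        Set<String> seen = new HashSet<>();
        List<Path> hits = new ArrayList<>();
        for (String dir : dirs) {
            if (dir == null || dir.trim().isEmpty() || !seen.add(dir)) {
                continue;
            }
            try {
                Path candidate = Paths.get(dir, fileName);
                if (Files.isRegularFile(candidate)) {
                    hits.add(candidate);
                }
            } catch (InvalidPathException e) {
                // e.g. a PATH entry containing quotes; ignore it
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        List<String> dirs = new ArrayList<>();
        // Hadoop 2.x takes its home from -Dhadoop.home.dir, else from HADOOP_HOME.
        String home = System.getProperty("hadoop.home.dir");
        if (home == null) {
            home = System.getenv("HADOOP_HOME");
        }
        if (home != null) {
            dirs.add(Paths.get(home, "bin").toString());
        }
        // Directories searched by System.loadLibrary; on Windows this normally includes PATH.
        for (String d : System.getProperty("java.library.path", "").split(File.pathSeparator)) {
            dirs.add(d);
        }
        List<Path> hits = findCopies(dirs, "hadoop.dll");
        if (hits.isEmpty()) {
            System.out.println("hadoop.dll not found in HADOOP_HOME\\bin or java.library.path");
        }
        for (Path p : hits) {
            System.out.println("found: " + p);
        }
    }
}
```

Run it with the same JDK and the same `PATH` as the Spark job. If several copies show up, the one in the earliest `java.library.path` directory is the one `System.loadLibrary("hadoop")` would normally resolve, so stale copies there should be removed or replaced too.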

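My Hadoop is 2.7.5, but I had been using a hadoop.dll from Hadoop 1.2. Following the advice online I swapped in a 2.6 hadoop.dll, and it still failed: the 2.6 build I had found was for a 32-bit operating system. Eventually I was lucky enough to find a 64-bit hadoop.dll for 2.6.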
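Rather than discovering a 32-bit/64-bit mismatch by trial and error, the DLL's architecture can be read from its header and compared with the JVM's. A minimal sketch, assuming the standard PE/COFF layout: the little-endian int at offset 0x3C points to the `PE\0\0` signature, followed by a 2-byte Machine field (0x8664 for x64, 0x014C for x86). The class name `DllBitness` and the default path `C:\hadoop-2.7.5\bin\hadoop.dll` are hypothetical; `sun.arch.data.model` is HotSpot-specific, so the code falls back to `os.arch`.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.file.Files;
import java.nio.file.Paths;

public class DllBitness {

    // Returns "x64", "x86", or "unknown (0x....)" from the COFF Machine field of a PE file.
    // PE layout: the little-endian int at 0x3C (e_lfanew) points to the "PE\0\0"
    // signature, and the 2-byte Machine field follows it directly.
    static String machineOf(byte[] pe) {
        if (pe.length < 0x40 || pe[0] != 'M' || pe[1] != 'Z') {
            throw new IllegalArgumentException("not an MZ/PE file");
        }
        ByteBuffer buf = ByteBuffer.wrap(pe).order(ByteOrder.LITTLE_ENDIAN);
        int peOffset = buf.getInt(0x3C);
        // 0x00004550 is "PE\0\0" read as a little-endian int
        if (peOffset < 0 || peOffset > pe.length - 6 || buf.getInt(peOffset) != 0x00004550) {
            throw new IllegalArgumentException("missing PE signature");
        }
        int machine = buf.getShort(peOffset + 4) & 0xFFFF;
        switch (machine) {
            case 0x8664:
                return "x64";
            case 0x014C:
                return "x86";
            default:
                return String.format("unknown (0x%04X)", machine);
        }
    }

    // Architecture of the running JVM, which must match the DLL it loads.
    // Assumes an x86/x64 machine. sun.arch.data.model is HotSpot-specific;
    // os.arch (e.g. "amd64", "x86") is the fallback.
    static String jvmArch() {
        String model = System.getProperty("sun.arch.data.model");
        if (model == null) {
            model = System.getProperty("os.arch", "").contains("64") ? "64" : "32";
        }
        return "64".equals(model) ? "x64" : "x86";
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical default location; pass the real path to hadoop.dll as the first argument.
        String path = args.length > 0 ? args[0] : "C:\\hadoop-2.7.5\\bin\\hadoop.dll";
        String dll = machineOf(Files.readAllBytes(Paths.get(path)));
        String jvm = jvmArch();
        System.out.println("hadoop.dll: " + dll + ", JVM: " + jvm);
        if (!dll.equals(jvm)) {
            System.out.println("Architecture mismatch: a " + jvm + " JVM cannot load this DLL.");
        }
    }
}
```

Run it with the same `java.exe` that launches the Spark job, for example `java DllBitness C:\path\to\hadoop.dll`. Matching bitness is necessary but not sufficient: as noted above, the DLL must also come from a Hadoop version compatible with the Hadoop JARs in use.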

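Running Hadoop on Windows requires Windows-specific native support (hadoop.dll). The version I first used was too old; replacing it with a newer DLL of the right bitness fixed the problem.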


Original post: https://www.cnblogs.com/itboys/p/9176116.html