
https://www.cnblogs.com/ejiyuan/p/5591613.html

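HBase, which began as a subproject of Apache Hadoop, is an open-source, distributed, multi-version, column-oriented, non-relational (NoSQL), scalable data store that uses Hadoop's HDFS as its underlying storage layer. Its server architecture follows a simple master/slave design, made up of a group of HRegionServers and an HMaster server. The HMaster manages all HRegionServers, and every server in HBase relies on ZooKeeper for distributed information sharing and task coordination. The HMaster itself stores none of HBase's data. A logical HBase table may be split into multiple Regions, which are stored across the HRegionServers; the HRegionServers answer user I/O requests and read and write data in HDFS. What the HMaster keeps track of is the mapping from data (Regions) to HRegionServers.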

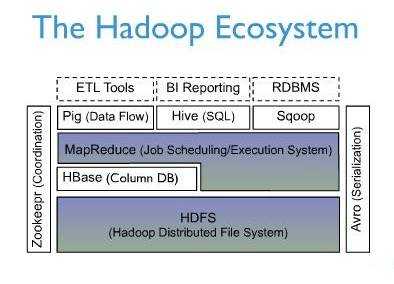

The figure shows where HBase sits in the Hadoop ecosystem.

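The figure above depicts the layers of the Hadoop ecosystem. HBase sits in the structured-storage layer: Hadoop HDFS gives HBase highly reliable underlying storage, Hadoop MapReduce gives it high-performance computation, and ZooKeeper gives it stable coordination and a failover mechanism. In addition, Pig and Hive provide high-level language support for HBase, which makes statistical processing of HBase data very simple, and Sqoop provides convenient import from RDBMSs, which makes migrating data from traditional databases into HBase straightforward.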

Unpack the release tarball and move it to /home/hbase:

    cd /usr/local
    tar -zxvf hbase-1.2.1-bin.tar.gz
    mv hbase-1.2.1 /home/hbase    # the archive unpacks to hbase-1.2.1
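The unpack-and-move step can also be wrapped in a small function that refuses to overwrite an existing install. This is a minimal sketch: `install_hbase` is a hypothetical helper name, not part of HBase, and it assumes the archive has a single top-level directory, which `--strip-components=1` (supported by GNU tar and bsdtar) drops during extraction.

```shell
# install_hbase TARBALL DEST (hypothetical helper, not part of HBase):
# unpack TARBALL so that the contents of its top-level directory land in DEST.
install_hbase() {
  local tarball="$1" dest="$2"
  if [ ! -f "$tarball" ]; then
    echo "tarball not found: $tarball" >&2
    return 1
  fi
  if [ -e "$dest" ]; then
    echo "destination already exists: $dest" >&2
    return 1
  fi
  mkdir -p "$dest" || return 1
  # --strip-components=1 drops the leading "hbase-x.y.z/" path element.
  tar -zxf "$tarball" -C "$dest" --strip-components=1
}
```

With the paths used in this post, the call would be `install_hbase /usr/local/hbase-1.2.1-bin.tar.gz /home/hbase`.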

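The configuration steps are as follows. The environment settings go in conf/hbase-env.sh:

    export JAVA_HOME=/usr/local/jdk1.8
    export HBASE_PID_DIR=/home/hbase/pid    # create it first with: mkdir /home/hbase/pid
    export HBASE_MANAGES_ZK=false           # do not use the bundled ZooKeeper; use the one we installed ourselves

With HBASE_MANAGES_ZK=false, HBase does not start ZooKeeper itself; it connects to the ensemble named by hbase.zookeeper.quorum in hbase-site.xml. The ZK_HOME variable in /etc/profile only tells the shell where the separately installed ZooKeeper lives.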

The settings in conf/hbase-site.xml:

    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://master:9000/hbase</value>
        <description>Directory where HBase stores its data, here on Hadoop HDFS. The URI must match the value of fs.default.name (fs.defaultFS in Hadoop 2 and later) in Hadoop's core-site.xml, followed by a subdirectory of your choice; hbase is used here.</description>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
        <description>Run HBase in distributed mode.</description>
      </property>
      <property>
        <name>hbase.master</name>
        <value>master</value>
        <description>The master node of the HBase cluster.</description>
      </property>
      <property>
        <name>hbase.tmp.dir</name>
        <value>/home/user/tmp/hbase</value>
        <description>Directory for HBase's temporary files.</description>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>master,slave1,slave2</value>
        <description>Hostnames of the ZooKeeper ensemble nodes; ZooKeeper reaches its decisions by quorum voting among them.</description>
      </property>
      <property>
        <name>hbase.zookeeper.property.clientPort</name>
        <value>2181</value>
        <description>Port used to connect to ZooKeeper; the default is 2181.</description>
      </property>
    </configuration>
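One way to catch a mismatch between hbase.rootdir and the Hadoop filesystem URI before starting the cluster is to read the value back out of hbase-site.xml. The sketch below is only an illustration: `get_prop` and `rootdir_matches_fs` are hypothetical helper names, and the awk parsing assumes the simple `<name>`/`<value>` layout used in this file, not arbitrary XML.

```shell
# get_prop FILE NAME (hypothetical helper): print the <value> belonging to
# the <property> whose <name> is NAME. Handles only simple, unnested layouts.
get_prop() {
  awk -v want="$2" '
    /<name>/ {
      name = $0
      sub(/.*<name>/, "", name)
      sub(/<\/name>.*/, "", name)
    }
    /<value>/ && name == want {
      value = $0
      sub(/.*<value>/, "", value)
      sub(/<\/value>.*/, "", value)
      print value
      exit
    }
  ' "$1"
}

# rootdir_matches_fs FILE FS_URI (hypothetical helper): succeed when
# hbase.rootdir in FILE is a path underneath FS_URI.
rootdir_matches_fs() {
  case "$(get_prop "$1" hbase.rootdir)" in
    "$2"/*) return 0 ;;
    *)      return 1 ;;
  esac
}
```

For the configuration in this post, `rootdir_matches_fs /home/hbase/conf/hbase-site.xml hdfs://master:9000` would succeed, while a different port in core-site.xml would make it fail.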

The RegionServer host list (conf/regionservers), one hostname per line:

    master
    slave1
    slave2

Copy the installation directory to the other nodes (scp needs -r to copy a directory):

    scp -r /home/hbase root@slave1:/home/
    scp -r /home/hbase root@slave2:/home/
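With more than a couple of nodes, the copy can be driven from a host list instead of typing one `scp` per node. A minimal sketch, assuming a plain-text file with one hostname per line; `plan_copy` is a hypothetical helper that only prints the commands (a dry run), and `-r` is what makes `scp` copy a directory recursively.

```shell
# plan_copy HOSTS_FILE LOCAL_HOST (hypothetical helper): print one scp command
# for every host in HOSTS_FILE except LOCAL_HOST. Nothing is copied here.
plan_copy() {
  local host
  while IFS= read -r host || [ -n "$host" ]; do
    # Skip blank lines and comment lines.
    case "$host" in
      ''|'#'*) continue ;;
    esac
    # Skip the machine we are copying from.
    if [ "$host" = "$2" ]; then
      continue
    fi
    printf 'scp -r /home/hbase root@%s:/home/\n' "$host"
  done < "$1"
}
```

Running `plan_copy /home/hbase/conf/regionservers master` prints one command each for slave1 and slave2; piping the output to `sh` would execute them.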

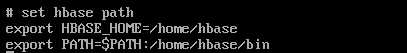

When the copy finishes, run vi /etc/profile on each node to set that node's environment variables.

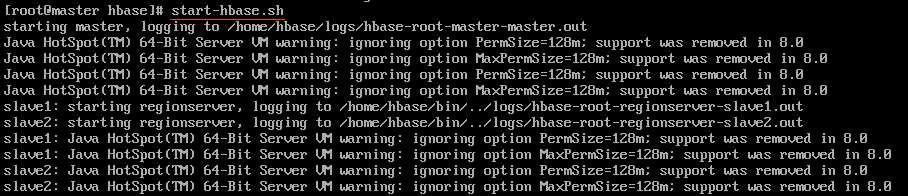

Before starting HBase, make sure Hadoop and ZooKeeper are already running. Then change to the $HBASE_HOME/bin directory and run start-hbase.sh.
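This precondition can be checked from `jps` output before calling start-hbase.sh. A minimal sketch: `missing_daemons` is a hypothetical helper, and it assumes the usual process names `NameNode` (HDFS) and `QuorumPeerMain` (a standalone ZooKeeper server), which appear in the second column of `jps` output.

```shell
# missing_daemons NAME... (hypothetical helper): read `jps` output on stdin
# and print each NAME that does not appear as a process name.
missing_daemons() {
  local listing name
  listing="$(cat)"
  for name in "$@"; do
    # jps prints "<pid> <main class>"; compare against the second column.
    if ! printf '%s\n' "$listing" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}
```

On the master node, `jps | missing_daemons NameNode QuorumPeerMain` prints nothing when both daemons are up and otherwise names the one that is missing.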

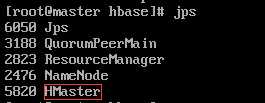

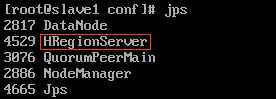

Run jps to check the running processes.

On the other nodes:

The startup log is written to /home/hbase/logs/hbase-root-master-master.log; check it to track down any errors.
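Because the log is verbose, filtering it for error lines is usually the quickest first pass. A small sketch, assuming that the lines of interest contain ERROR, FATAL, or an exception class name; `log_errors` is a hypothetical helper name.

```shell
# log_errors FILE [N] (hypothetical helper): print the last N (default 20)
# lines of FILE that mention ERROR, FATAL, or an exception.
log_errors() {
  grep -E 'ERROR|FATAL|Exception' "$1" | tail -n "${2:-20}"
}
```

For the master log in this setup, the call would be `log_errors /home/hbase/logs/hbase-root-master-master.log`.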

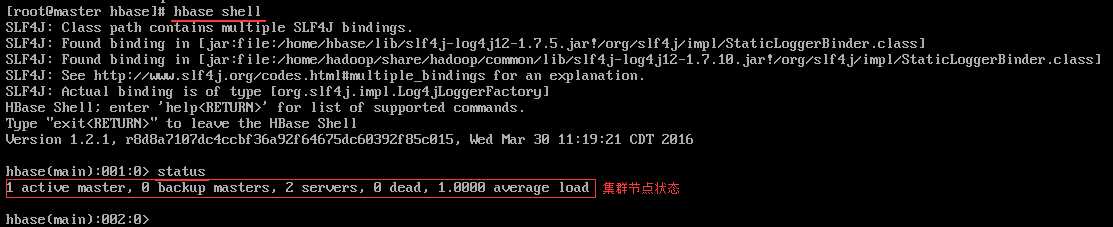

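Once startup has finished, run hbase shell to enter the HBase shell, then use the status command to check the state of the cluster nodes.

From here the HBase shell can be used for database operations; for details see http://www.cnblogs.com/nexiyi/p/hbase_shell.html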

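Alternatively, open http://192.168.137.122:16010/master-status in a browser to view the cluster state, where 192.168.137.122 is the IP of the node running the master and 16010 is the default port of the HBase master web UI (60010 in older versions).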

The main errors encountered during this installation test were the following:

    org.apache.hadoop.hbase.ClockOutOfSyncException: org.apache.hadoop.hbase.ClockOutOfSyncException: Server hadoopslave2,60020,1372320861420 has been rejected; Reported time is too far out of sync with master. Time difference of 143732ms > max allowed of 30000ms
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:525)
        at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:95)
        at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:79)
        at org.apache.hadoop.hbase.regionserver.HRegionServer.reportForDuty(HRegionServer.java:2093)
        at org.apache.hadoop.hbase.regionserver.HRegionServer.run(HRegionServer.java:744)
        at java.lang.Thread.run(Thread.java:722)
    Caused by: org.apache.hadoop.ipc.RemoteException: org.apache.hadoop.hbase.ClockOutOfSyncException: Server hadoopslave2,60020,1372320861420 has been rejected; Reported time is too far out of sync with master. Time difference of 143732ms > max allowed of 30000ms

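Add the following to hbase-site.xml on every node to raise the allowed clock skew (in milliseconds). This only relaxes the check; keeping the nodes' clocks synchronised, for example with NTP, removes the cause.

    <property>
      <name>hbase.master.maxclockskew</name>
      <value>200000</value>
    </property>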
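The check behind this error is a simple comparison: the master rejects a region server when the difference between their clocks exceeds `hbase.master.maxclockskew`. The sketch below reproduces that arithmetic with the numbers from the log; `skew_ok` is a hypothetical helper, and the two timestamps are illustrative values chosen to differ by 143732 ms.

```shell
# skew_ok MASTER_MS SERVER_MS MAX_MS (hypothetical helper): succeed when the
# two clocks differ by at most MAX_MS milliseconds.
skew_ok() {
  local diff=$(( $1 - $2 ))
  if [ "$diff" -lt 0 ]; then
    diff=$(( 0 - diff ))
  fi
  [ "$diff" -le "$3" ]
}

# Illustrative timestamps 143732 ms apart, matching the reported difference.
master_ms=1372320861420
server_ms=1372320717688

for limit in 30000 200000; do
  if skew_ok "$master_ms" "$server_ms" "$limit"; then
    echo "limit ${limit} ms: accepted"
  else
    echo "limit ${limit} ms: rejected"
  fi
done
```

With the default limit of 30000 ms the server is rejected, and with the raised limit of 200000 ms it is accepted, which is why the configuration change makes the error go away without fixing the clocks.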

    org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.fs.PathIsNotEmptyDirectoryException): `/hbase/WALs/slave1,16000,1446046595488-splitting is non empty': Directory is not empty
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.deleteInternal(FSNamesystem.java:3524)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.deleteInt(FSNamesystem.java:3479)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.delete(FSNamesystem.java:3463)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.delete(NameNodeRpcServer.java:751)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.delete(ClientNamenodeProtocolServerSideTranslatorPB.java:562)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:585)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:928)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1614)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007)
        at org.apache.hadoop.ipc.Client.call(Client.java:1411)
        at org.apache.hadoop.ipc.Client.call(Client.java:1364)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)
        at com.sun.proxy.$Proxy15.delete(Unknown Source)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.delete(ClientNamenodeProtocolTranslatorPB.java:490)
        at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)
        at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
        at com.sun.proxy.$Proxy16.delete(Unknown Source)
        at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:279)
        at com.sun.proxy.$Proxy17.delete(Unknown Source)
        at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.hbase.fs.HFileSystem$1.invoke(HFileSystem.java:279)
        at com.sun.proxy.$Proxy17.delete(Unknown Source)
        at org.apache.hadoop.hdfs.DFSClient.delete(DFSClient.java:1726)
        at org.apache.hadoop.hdfs.DistributedFileSystem$11.doCall(DistributedFileSystem.java:588)
        at org.apache.hadoop.hdfs.DistributedFileSystem$11.doCall(DistributedFileSystem.java:584)
        at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
        at org.apache.hadoop.hdfs.DistributedFileSystem.delete(DistributedFileSystem.java:584)
        at org.apache.hadoop.hbase.master.SplitLogManager.splitLogDistributed(SplitLogManager.java:297)
        at org.apache.hadoop.hbase.master.MasterFileSystem.splitLog(MasterFileSystem.java:400)
        at org.apache.hadoop.hbase.master.MasterFileSystem.splitLog(MasterFileSystem.java:373)
        at org.apache.hadoop.hbase.master.MasterFileSystem.splitLog(MasterFileSystem.java:295)
        at org.apache.hadoop.hbase.master.procedure.ServerCrashProcedure.splitLogs(ServerCrashProcedure.java:388)
        at org.apache.hadoop.hbase.master.procedure.ServerCrashProcedure.executeFromState(ServerCrashProcedure.java:228)
        at org.apache.hadoop.hbase.master.procedure.ServerCrashProcedure.executeFromState(ServerCrashProcedure.java:72)
        at org.apache.hadoop.hbase.procedure2.StateMachineProcedure.execute(StateMachineProcedure.java:119)
        at org.apache.hadoop.hbase.procedure2.Procedure.doExecute(Procedure.java:452)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.execProcedure(ProcedureExecutor.java:1050)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.execLoop(ProcedureExecutor.java:841)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.execLoop(ProcedureExecutor.java:794)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor.access$400(ProcedureExecutor.java:75)
        at org.apache.hadoop.hbase.procedure2.ProcedureExecutor$2.run(ProcedureExecutor.java:479)

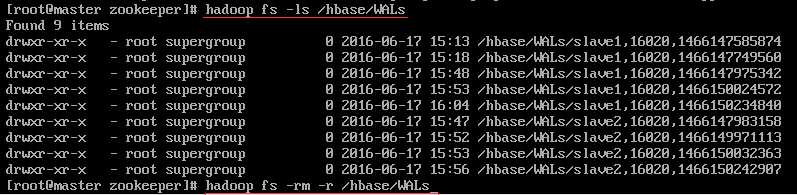

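See https://issues.apache.org/jira/browse/HBASE-14729. The fix is to go into the Hadoop file system (HDFS) and delete the directory named in the error, or the entire WALs directory. Deleting WALs discards any edits that have not yet been flushed, so this suits a fresh test installation like this one.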
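The directory to remove can be read straight off the exception message. A minimal sketch, assuming the message names a path of the form `/hbase/WALs/...-splitting` as in the stack trace; `rm_cmd_from_error` is a hypothetical helper that only prints the `hdfs dfs -rm -r` command so it can be reviewed before it is run.

```shell
# rm_cmd_from_error (hypothetical helper): read an error message on stdin and
# print the HDFS delete command for the "-splitting" WAL directory it names.
rm_cmd_from_error() {
  local dir
  dir="$(grep -o '/hbase/WALs/[^ ]*-splitting' | head -n 1)"
  if [ -z "$dir" ]; then
    echo "no -splitting directory found in input" >&2
    return 1
  fi
  printf 'hdfs dfs -rm -r %s\n' "$dir"
}
```

Feeding it the first line of the exception prints `hdfs dfs -rm -r /hbase/WALs/slave1,16000,1446046595488-splitting`, which can then be run by hand on a node with the Hadoop client installed.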

    zookeeper.MetaTableLocator: Failed verification of hbase:meta,,1 at address=slave1,16020,1428456823337, exception=org.apache.hadoop.hbase.NotServingRegionException: Region hbase:meta,,1 is not online on worker05,16020,1428461295266
        at org.apache.hadoop.hbase.regionserver.HRegionServer.getRegionByEncodedName(HRegionServer.java:2740)
        at org.apache.hadoop.hbase.regionserver.RSRpcServices.getRegion(RSRpcServices.java:859)
        at org.apache.hadoop.hbase.regionserver.RSRpcServices.getRegionInfo(RSRpcServices.java:1137)
        at org.apache.hadoop.hbase.protobuf.generated.AdminProtos$AdminService$2.callBlockingMethod(AdminProtos.java:20862)
        at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2031)
        at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:107)
        at org.apache.hadoop.hbase.ipc.RpcExecutor.consumerLoop(RpcExecutor.java:130)
        at org.apache.hadoop.hbase.ipc.RpcExecutor$1.run(RpcExecutor.java:107)
        at java.lang.Thread.run(Thread.java:745)

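The HMaster dies on its own shortly after starting (or restarts abnormally), and the master log contains "TableExistsException: hbase:namespace".

The likely cause is that ZooKeeper still holds the state of the previous HBase installation after the HBase version was changed, which produces a conflict.

Deleting HBase's data in ZooKeeper and restarting resolved the problem: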

    # sh zkCli.sh -server slave1:2181
    [zk: slave1:2181(CONNECTED) 0] ls /
    [zk: slave1:2181(CONNECTED) 0] rmr /hbase
    [zk: slave1:2181(CONNECTED) 0] quit


Original post: https://www.cnblogs.com/qqflying/p/9186381.html