Tags: red ddr 3.x https pad provider deployment-and-installation mic break

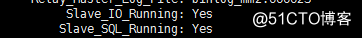

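A couple of days ago, while browsing blogs, I came across an article on MMM by an expert and couldn't wait to try it myself. The author made it look effortless and I expected the same, but in practice I was clumsy and hit plenty of pitfalls. If that article is "building a tower on open ground", this one is "turning a bungalow into a villa": my production environment already runs two MySQL servers in dual-master replication, so this article covers how to upgrade an existing setup in place. Environment: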

Deployment

Part 1: MMM deployment and installation

I. Install MySQL 5.6.34 (on both masters and both slaves)

1.yum -y install gcc perl lua-devel pcre-devel openssl-devel gd-devel gcc-c++ ncurses-devel libaio autoconf

2.tar xvf mysql-5.6.34-linux-glibc2.5-x86_64.tar.gz

3.useradd mysql

4.mv mysql-5.6.34-linux-glibc2.5-x86_64 /data/mysql && chown -R mysql.mysql /data/mysql/

5. vim my.cnf (server_id must be different on each of the 4 servers)

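[mysqld]
socket = /data/mysql/mysql.sock
pid-file = /data/mysql/mysql.pid
basedir = /data/mysql
datadir = /data/mysql/data
tmpdir = /data/mysql/data
log_error = error.log
relay_log = relay.log
# one database per line: a comma-separated value is read as a single database name
binlog-ignore-db = mysql
binlog-ignore-db = information_schema
character_set_server = utf8
log_bin = mysql_bin
server_id = 1
log_slave_updates = true
sync_binlog = 1
auto_increment_increment = 2
# use auto_increment_offset = 2 on master2 so the two masters never generate the same IDs
auto_increment_offset = 1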
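Because both masters accept writes, their auto-increment values must not overlap. MySQL hands out values from the series auto_increment_offset + N × auto_increment_increment, so with an increment of 2 the offset alone decides which IDs each master uses. The small bash sketch below prints the first few values for a given offset and increment; the function name `auto_inc_ids` is hypothetical and only illustrates the arithmetic.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first COUNT values of the series
# offset + N * increment (N = 0, 1, 2, ...), i.e. the auto-increment IDs
# a fresh table would receive on a server with these settings.
auto_inc_ids() {
  local offset=$1 increment=$2 count=$3 i
  local ids=()
  for (( i = 0; i < count; i++ )); do
    ids+=( $(( offset + i * increment )) )
  done
  echo "${ids[*]}"
}

echo "master1 (offset 1): $(auto_inc_ids 1 2 5)"   # 1 3 5 7 9
echo "master2 (offset 2): $(auto_inc_ids 2 2 5)"   # 2 4 6 8 10
```

If both masters kept offset 1, both would generate 1, 3, 5, ... and concurrent inserts on the two masters would collide on the primary key.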

6.cd /data/mysql/ && scripts/mysql_install_db --user=mysql --basedir=/data/mysql --datadir=/data/mysql/data/

7. vim /root/.bash_profile and change PATH=$PATH:$HOME/bin to:

PATH=$PATH:$HOME/bin:/data/mysql/bin:/data/mysql/lib

8.source /root/.bash_profile

9.cp support-files/mysql.server /etc/init.d/mysql

10.service mysql start

II. Set up dual-master replication

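My environment already runs dual-master replication, so only the commands are listed here. The master_log_file and master_log_pos values in each change master to statement come from show master status on the other master.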

1. Run on 192.168.6.30 (master1):

grant replication slave on *.* to 'replication'@'192.168.6.%' identified by '123456';
show master status;
change master to master_host='192.168.6.31',master_user='replication',master_password='123456',master_log_file='mysql_bin.000001',master_log_pos=330;
start slave;
show slave status\G

2. Run on 192.168.6.31 (master2):

grant replication slave on *.* to 'replication'@'192.168.6.%' identified by '123456';
show master status;
change master to master_host='192.168.6.30',master_user='replication',master_password='123456',master_log_file='mysql_bin.000001',master_log_pos=334;
start slave;
show slave status\G

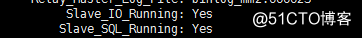

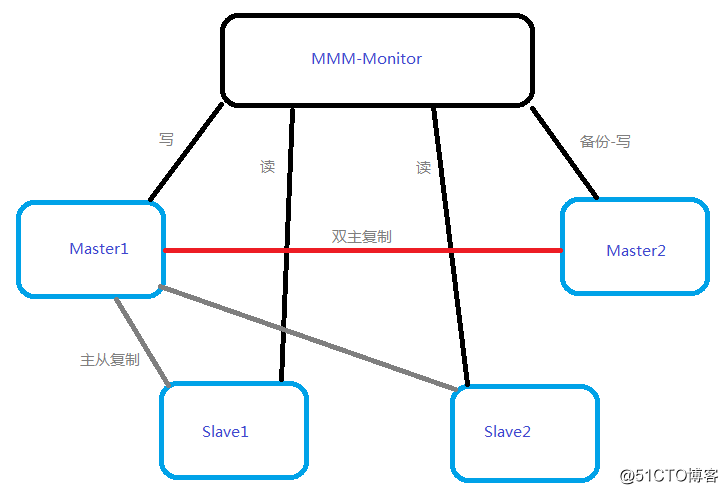

Check that Slave_IO_Running and Slave_SQL_Running both show Yes; if they do, dual-master replication is working.
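Checking the two Yes values by eye works, but the same check can be scripted. A minimal bash sketch, assuming it is fed the text output of SHOW SLAVE STATUS\G; the function name `replication_ok` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "SHOW SLAVE STATUS\G" output on stdin, print the
# two replication thread states, and exit 0 only if both are "Yes".
# An empty input (server is not a slave) counts as a failure.
replication_ok() {
  awk -F': ' '
    $1 ~ /Slave_IO_Running$/  { io  = $2 }
    $1 ~ /Slave_SQL_Running$/ { sql = $2 }
    END {
      printf "Slave_IO_Running=%s Slave_SQL_Running=%s\n", io, sql
      exit !(io == "Yes" && sql == "Yes")
    }'
}
```

Usage: `mysql -e 'SHOW SLAVE STATUS\G' | replication_ok && echo "replication OK"`. The pattern is anchored at the end of the field name, so the Slave_SQL_Running_State line that MySQL 5.6 also prints is not mistaken for the SQL thread state. The same check applies to the slaves added in the next section.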


III. Adding slaves to a running dual-master replication setup (without stopping service)

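In practice, ops engineers rarely get to build a MySQL cluster from scratch. Usually they upgrade an existing environment that already holds data and serves critical workloads that cannot be stopped. During my experiment I assumed I could simply attach the slaves, but the binlog position changes every second, so that does not work. After some research I found a way to add them without stopping service.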

1. Dump all databases, writing the binlog file and position into the dump

mysqldump --skip-lock-tables --single-transaction --master-data=2 -A > ~/dump.sql

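--master-data: defaults to 1 when given without a value, which writes the binlog file and position at the start of the dump into the output as a CHANGE MASTER TO statement; with 2 the statement is written as a comment instead. -A dumps all databases.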

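--single-transaction: at the start of the dump, sets the transaction isolation level and starts a transaction with a consistent snapshot, then runs UNLOCK TABLES (together with --master-data, a global read lock is held only briefly to read the binlog position). The snapshot is consistent only for transactional tables such as InnoDB. --lock-tables, by contrast, locks the tables of each database against writes while they are dumped; --skip-lock-tables turns that off.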

2. Find the binlog file and position recorded in the dump

head -n 80 ~/dump.sql | grep "MASTER_LOG_POS"

-- CHANGE MASTER TO MASTER_LOG_FILE='mysql_bin.000149', MASTER_LOG_POS=120;
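Copying the file name and position by hand is error-prone, so the statement for the slaves can be built from the dump itself. A minimal bash sketch, assuming a dump taken with --master-data=2 as above; `change_master_from_dump`, `REPL_USER` and `REPL_PASS` are hypothetical names, and the defaults are the replication account used in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the start of a dump taken with --master-data=2
# on stdin and print a CHANGE MASTER statement for the master given as $1.
change_master_from_dump() {
  local host=$1
  local user=${REPL_USER:-replication} pass=${REPL_PASS:-123456}
  local line file pos
  # The coordinates are written near the top of the dump as a "--" comment
  line=$(head -n 80 | grep -m1 'CHANGE MASTER TO') || return 1
  file=$(sed -n "s/.*MASTER_LOG_FILE='\([^']*\)'.*/\1/p" <<<"$line")
  pos=$(sed -n 's/.*MASTER_LOG_POS=\([0-9]*\).*/\1/p' <<<"$line")
  [ -n "$file" ] && [ -n "$pos" ] || return 1
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" \
    "$host" "$user" "$pass" "$file" "$pos"
}
```

For example, `change_master_from_dump 192.168.6.30 < ~/dump.sql` prints the statement to review before running it on a slave.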

3. Compress the file

gzip ~/dump.sql

4. Copy the file to both slaves, then decompress and import it on each

scp ~/dump.sql.gz 192.168.6.107:/root
scp ~/dump.sql.gz 192.168.6.108:/root

Then on each slave:

gunzip dump.sql.gz
mysql < dump.sql
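With more than a couple of slaves, the copy-and-import step can be looped. A minimal bash sketch, assuming key-based ssh/scp access to each slave and a mysql client on the slaves that can log in without extra options (for example through ~/.my.cnf); `push_dump` and `RUN` are hypothetical names. Note that gzip ~/dump.sql produces ~/dump.sql.gz.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy a gzipped dump to each host given after it,
# then decompress and import it there over ssh.
# Set RUN=echo to print the commands instead of running them.
push_dump() {
  local dump=$1; shift
  local name sql host
  name=$(basename "$dump")      # e.g. dump.sql.gz
  sql=${name%.gz}               # e.g. dump.sql
  for host in "$@"; do
    ${RUN:-} scp "$dump" "$host:/root/" || return 1
    ${RUN:-} ssh "$host" "gunzip -f /root/$name && mysql < /root/$sql" || return 1
  done
}
```

A dry run first, then the real thing: `RUN=echo push_dump ~/dump.sql.gz 192.168.6.107 192.168.6.108`, followed by the same command without `RUN=echo`.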

5. Attach the two slaves

On 192.168.6.107 (slave1), run:

change master to master_host='192.168.6.30',master_user='replication',master_password='123456',master_log_file='mysql_bin.000149',master_log_pos=120;
start slave;
show slave status\G

On 192.168.6.108 (slave2), run:

change master to master_host='192.168.6.30',master_user='replication',master_password='123456',master_log_file='mysql_bin.000149',master_log_pos=120;
start slave;
show slave status\G

Check that Slave_IO_Running and Slave_SQL_Running both show Yes; if they do, master-slave replication is working.


IV. Install and deploy MMM

1. Install the MMM packages on all 4 servers (the mysql-mmm packages come from the EPEL repository)

yum -y install mysql-mmm*   # CentOS 7 and CentOS 6 install a different number of packages; this can be ignored for now

2. On the monitor, edit the mysql-mmm configuration file

vim /etc/mysql-mmm/mmm_common.conf

active_master_role writer

<host default>
    # network interface; on CentOS 6 it is eth0
    cluster_interface ens160
    # on CentOS 6 the path is /var/run/mysql-mmm-agent.pid
    pid_path /run/mysql-mmm-agent.pid
    bin_path /usr/libexec/mysql-mmm/
    replication_user replication
    replication_password 123456
    agent_user mmm_agent
    agent_password RepAgent
</host>

# db1..db4 are the names the agents use to identify themselves
<host db1>
    ip 192.168.6.30
    # role of this host
    mode master
    # each master's peer is the other master
    peer db2
</host>

<host db2>
    ip 192.168.6.31
    mode master
    peer db1
</host>

<host db3>
    ip 192.168.6.107
    mode slave
</host>

<host db4>
    ip 192.168.6.108
    mode slave
</host>

<role writer>
    hosts db1, db2
    # writer VIP
    ips 192.168.6.35
    mode exclusive
</role>

<role reader>
    hosts db3, db4
    ips 192.168.6.36,192.168.6.37
    # load balanced across the readers
    mode balanced
</role>
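A wrong peer line (for example a master naming itself as its own peer) is easy to miss in this file. A minimal bash/awk sketch that checks every host with mode master names the other master as its peer and that the pairing is mutual; the function name `check_mmm_peers` is hypothetical, and it assumes one setting per line, as in a normal mmm_common.conf.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read mmm_common.conf on stdin and check that each
# "mode master" host has a peer that is a different host whose own peer
# points back at it. Prints each problem and exits non-zero if any exist.
check_mmm_peers() {
  awk '
    /^[ \t]*<host[ \t][^>]*>/ {
      h = $0
      sub(/^[ \t]*<host[ \t]+/, "", h)
      sub(/>.*$/, "", h)
      next
    }
    /^[ \t]*<\/host>/ { h = ""; next }
    h != "" && $1 == "mode" { mode[h] = $2 }
    h != "" && $1 == "peer" { peer[h] = $2 }
    END {
      bad = 0
      for (x in mode) {
        if (mode[x] != "master") continue
        p = (x in peer) ? peer[x] : ""
        if (p == "" || p == x || !(p in peer) || peer[p] != x) {
          printf "host %s: peer is \"%s\", expected the other master\n", x, p
          bad = 1
        }
      }
      exit bad
    }'
}
```

Usage: `check_mmm_peers < /etc/mysql-mmm/mmm_common.conf && echo "peers OK"`, run before copying the file to the other servers.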

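3. Copy this file to the same path on all 4 servers, and on each server adjust the values marked in the comments above (such as the CentOS 6 interface name and pid path) where needed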

scp /etc/mysql-mmm/mmm_common.conf 192.168.6.30:/etc/mysql-mmm/mmm_common.conf
scp /etc/mysql-mmm/mmm_common.conf 192.168.6.31:/etc/mysql-mmm/mmm_common.conf
scp /etc/mysql-mmm/mmm_common.conf 192.168.6.107:/etc/mysql-mmm/mmm_common.conf
scp /etc/mysql-mmm/mmm_common.conf 192.168.6.108:/etc/mysql-mmm/mmm_common.conf

4. On the monitor, edit mmm_mon.conf

vim /etc/mysql-mmm/mmm_mon.conf

include mmm_common.conf

<monitor>
    ip 127.0.0.1
    # the monitor runs CentOS 7, so this path needs no change
    pid_path /run/mysql-mmm-monitor.pid
    bin_path /usr/libexec/mysql-mmm
    status_path /var/lib/mysql-mmm/mmm_mond.status
    # IPs of all 4 database servers
    ping_ips 192.168.6.30,192.168.6.31,192.168.6.107,192.168.6.108
    # a recovered host (AWAITING_RECOVERY) is set back ONLINE automatically after 10 seconds
    auto_set_online 10
</monitor>

<host default>
    monitor_user mmm_monitor
    monitor_password RepMonitor
</host>

debug 0

5. Start the monitor

systemctl start mysql-mmm-monitor

V. Configure the mysql-mmm agent on the four MySQL servers

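1. The monitor and agent users defined in the configuration must be granted in MySQL. Because the servers replicate from each other, running the grants on a master is enough.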

grant super, replication client, process on *.* to 'mmm_agent'@'192.168.6.%' identified by 'RepAgent';
grant replication client on *.* to 'mmm_monitor'@'192.168.6.%' identified by 'RepMonitor';
flush privileges;

2. Edit mmm_agent.conf on each of the 4 servers

vim /etc/mysql-mmm/mmm_agent.conf

this db3

Set this to the host name in mmm_common.conf that corresponds to the current server (db3 on slave1 in this example).
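Because the this value differs on every server, it can be looked up from mmm_common.conf by IP address instead of typed by hand. A minimal bash/awk sketch; the function name `mmm_host_for_ip` is hypothetical, and it assumes one setting per line, as in a normal mmm_common.conf.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read mmm_common.conf on stdin and print the name of
# the <host> block whose "ip" equals $1. Exits non-zero if none matches.
mmm_host_for_ip() {
  awk -v want="$1" '
    /^[ \t]*<host[ \t][^>]*>/ {
      h = $0
      sub(/^[ \t]*<host[ \t]+/, "", h)
      sub(/>.*$/, "", h)
      next
    }
    /^[ \t]*<\/host>/ { h = ""; next }
    h != "" && $1 == "ip" && $2 == want { print h; found = 1 }
    END { exit !found }'
}
```

On slave1, `mmm_host_for_ip 192.168.6.107 < /etc/mysql-mmm/mmm_common.conf` would print db3, the value to put after this.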

3. Open the firewall port

iptables -I INPUT -p tcp --dport 9989 -j ACCEPT   # port of the agent service, which the monitor connects to

4. Start the agent service

systemctl start mysql-mmm-agent    # CentOS 7
service mysql-mmm-agent start      # CentOS 6

VI. Check the MySQL cluster status and test failover

1. On the monitor, run mmm_control show:

db1(192.168.6.30) master/ONLINE. Roles: writer(192.168.6.35)

db2(192.168.6.31) master/ONLINE. Roles:

db3(192.168.6.107) slave/ONLINE. Roles: reader(192.168.6.36)

db4(192.168.6.108) slave/ONLINE. Roles: reader(192.168.6.37)

Output like the above means the configuration is correct.
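To see from a script which host currently holds the writer role (for example before and after the failover test below), the mmm_control show output can be parsed. A minimal bash/awk sketch; `mmm_writer` is a hypothetical name, and it assumes the host(ip) mode/STATE. Roles: ... line format shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "mmm_control show" output on stdin and print
# the host name and VIP of the host holding the writer role.
mmm_writer() {
  awk '/writer\(/ {
    name = $1
    sub(/\(.*/, "", name)         # "db1(192.168.6.30)" -> "db1"
    vip = $0
    sub(/.*writer\(/, "", vip)    # drop everything up to "writer("
    sub(/\).*/, "", vip)          # drop ")" and anything after it
    print name, vip
  }'
}
```

For the status above, `mmm_control show | mmm_writer` prints db1 192.168.6.35.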

2. Failover tests

① On master1 (db1), run service mysql stop

db1(192.168.6.30) master/HARD_OFFLINE. Roles:

db2(192.168.6.31) master/ONLINE. Roles: writer(192.168.6.35)

db3(192.168.6.107) slave/ONLINE. Roles: reader(192.168.6.36)

db4(192.168.6.108) slave/ONLINE. Roles: reader(192.168.6.37)

The writer VIP moved successfully.

② On a slave (db4), run service mysql stop

db1(192.168.6.30) master/HARD_OFFLINE. Roles:

db2(192.168.6.31) master/ONLINE. Roles: writer(192.168.6.35)

db3(192.168.6.107) slave/ONLINE. Roles: reader(192.168.6.36),reader(192.168.6.37)

db4(192.168.6.108) slave/HARD_OFFLINE. Roles:

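The reader VIP moved successfully. When the MySQL service is started again, the host returns to ONLINE (it passes through AWAITING_RECOVERY first; with auto_set_online 10 this happens automatically). This completes the MMM-based MySQL cluster.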
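During failover tests it helps to list the hosts that are not ONLINE. Another small bash/awk sketch over mmm_control show output; `mmm_not_online` is a hypothetical name, and it relies on the host(ip) mode/STATE. Roles: ... line format shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "mmm_control show" output on stdin and print
# "host STATE" for every host whose state is not ONLINE.
mmm_not_online() {
  awk '$2 ~ /\// {
    name = $1
    sub(/\(.*/, "", name)         # "db4(192.168.6.108)" -> "db4"
    state = $2
    sub(/^[^\/]*\//, "", state)   # "slave/HARD_OFFLINE." -> "HARD_OFFLINE."
    sub(/\.$/, "", state)         # drop the trailing period
    if (state != "ONLINE") print name, state
  }'
}
```

Usage: `mmm_control show | mmm_not_online`; it prints nothing when every host is ONLINE.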

Part 2: A single access point with Amoeba

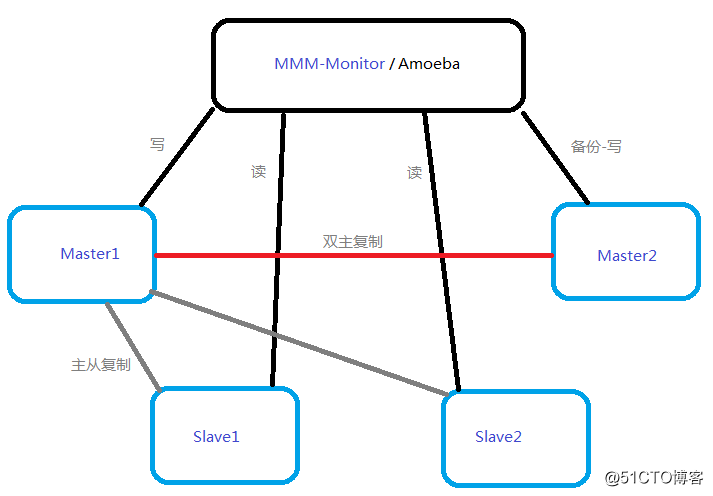

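MMM exposes three entry points (one writer VIP and two reader VIPs), so an application that connects to it directly would have to split its own traffic into reads and writes. Middleware can instead put the whole architecture behind a single endpoint.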
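What such middleware does can be pictured with a toy router: plain reads go to the reader pool in round-robin order, everything else goes to the writer. The bash sketch below is a simplified illustration, not Amoeba's actual SQL parser; the pool names mirror the Amoeba configuration later in this article (writedb, slave1, slave2), and `route_sql`, `ROUTE` and `rr_next` are hypothetical names. It stores its answer in a global variable rather than printing it, so the round-robin counter survives between calls.

```bash
#!/usr/bin/env bash
# Toy read/write splitter (illustration only, not Amoeba's implementation).
# Plain SELECTs are spread over READ_POOL in round-robin order; everything
# else, including locking reads, goes to WRITE_POOL. The chosen pool is
# stored in the global ROUTE so the counter is kept between calls.
READ_POOL=(slave1 slave2)
WRITE_POOL=writedb
rr_next=0
ROUTE=

route_sql() {
  local sql=$1 first upper
  read -r first _ <<<"$sql"     # first keyword, leading spaces stripped
  first=${first^^}
  upper=${sql^^}
  if [[ $first == SELECT && $upper != *"FOR UPDATE"* && $upper != *"LOCK IN SHARE MODE"* ]]; then
    ROUTE=${READ_POOL[rr_next]}
    rr_next=$(( (rr_next + 1) % ${#READ_POOL[@]} ))
  else
    ROUTE=$WRITE_POOL
  fi
}

for q in "SELECT * FROM t" "INSERT INTO t VALUES (1)" "select 2" "SELECT 3"; do
  route_sql "$q"
  echo "$ROUTE <- $q"
done
```

The loop prints slave1, writedb, slave2 and slave1 for the four sample statements. Locking reads are sent to the writer in this sketch because they must see, and lock, the master's current rows.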

What is Amoeba?

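Amoeba is an open-source project that began releasing Amoeba for MySQL in 2008. The software is a front-end proxy layer for distributed MySQL databases: it acts as an SQL router when applications access MySQL, and the project focuses on developing a database proxy. It sits between the client and the DB server(s) and is transparent to the client. It provides load balancing, high availability, SQL filtering, read/write splitting, routing of queries to the relevant target databases, and concurrent requests to multiple databases with merged results. With Amoeba you can achieve high availability, load balancing and data sharding across multiple data sources, and it is already used in production at many companies.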

Deployment

1. Download Amoeba

wget https://sourceforge.net/projects/amoeba/files/Amoeba%20for%20mysql/3.x/amoeba-mysql-3.0.5-RC-distribution.zip

2. Install

unzip amoeba-mysql-3.0.5-RC-distribution.zip
mv amoeba-mysql-3.0.5-RC /usr/local/amoeba/

3. Install the Java environment

tar xvf jdk-8u111-linux-x64.tar.gz
mv jdk1.8.0_111/ /usr/local/jdk

vim /etc/profile

export JAVA_HOME=/usr/local/jdk

export PATH=${PATH}:${JAVA_HOME}/bin

export CLASSPATH=${CLASSPATH}:${JAVA_HOME}/lib

source /etc/profile

4. Configure Amoeba

vim /usr/local/amoeba/conf/dbServers.xml

<?xml version="1.0" encoding="gbk"?>

<!DOCTYPE amoeba:dbServers SYSTEM "dbserver.dtd">

<amoeba:dbServers xmlns:amoeba="http://amoeba.meidusa.com/">

<!--

Each dbServer needs to be configured into a Pool,

If you need to configure multiple dbServer with load balancing that can be simplified by the following configuration:

add attribute with name virtual = "true" in dbServer, but the configuration does not allow the element with name factoryConfig

such as 'multiPool' dbServer

-->

<dbServer name="abstractServer" abstractive="true">

<factoryConfig class="com.meidusa.amoeba.mysql.net.MysqlServerConnectionFactory">

<property name="connectionManager">${defaultManager}</property>

<property name="sendBufferSize">64</property>

<property name="receiveBufferSize">128</property>

<!-- mysql port -->

<property name="port">3306</property> <!--MySQL port that Amoeba connects to; default 3306-->

<!-- mysql schema -->

<property name="schema">amoeba</property> <!--default database-->

<!-- mysql user -->

<property name="user">amoeba</property> <!--account and password Amoeba uses to connect to the backend MySQL servers; must be granted in MySQL-->

<property name="password">12345</property>

</factoryConfig>

<poolConfig class="com.meidusa.toolkit.common.poolable.PoolableObjectPool">

<property name="maxActive">500</property>

<property name="maxIdle">500</property>

<property name="minIdle">1</property>

<property name="minEvictableIdleTimeMillis">600000</property>

<property name="timeBetweenEvictionRunsMillis">600000</property>

<property name="testOnBorrow">true</property>

<property name="testOnReturn">true</property>

<property name="testWhileIdle">true</property>

</poolConfig>

</dbServer>

<dbServer name="writedb" parent="abstractServer"> <!--name of the writer server-->

<factoryConfig>

<!-- mysql ip -->

<property name="ipAddress">192.168.6.35</property> <!--writer VIP-->

</factoryConfig>

</dbServer>

<dbServer name="slave1" parent="abstractServer"> <!--name of a reader server-->

<factoryConfig>

<!-- mysql ip -->

<property name="ipAddress">192.168.6.36</property> <!--reader VIP-->

</factoryConfig>

</dbServer>

<dbServer name="slave2" parent="abstractServer">

<factoryConfig>

<!-- mysql ip -->

<property name="ipAddress">192.168.6.37</property>

</factoryConfig>

</dbServer>

<dbServer name="myslaves" virtual="true"> <!--name of the reader pool-->

<poolConfig class="com.meidusa.amoeba.server.MultipleServerPool">

<!-- Load balancing strategy: 1=ROUNDROBIN , 2=WEIGHTBASED , 3=HA-->

<property name="loadbalance">1</property> <!--use round-robin scheduling-->

<!-- Separated by commas,such as: server1,server2,server1 -->

<property name="poolNames">slave1,slave2</property> <!--servers in the reader pool-->

</poolConfig>

</dbServer>

</amoeba:dbServers>

vim /usr/local/amoeba/conf/amoeba.xml

<?xml version="1.0" encoding="gbk"?>

<!DOCTYPE amoeba:configuration SYSTEM "amoeba.dtd">

<amoeba:configuration xmlns:amoeba="http://amoeba.meidusa.com/">

<proxy>

<!-- service class must implements com.meidusa.amoeba.service.Service -->

<service name="Amoeba for Mysql" class="com.meidusa.amoeba.mysql.server.MySQLService">

<!-- port -->

<property name="port">8066</property> <!--port clients use to log in to Amoeba-->

<!-- bind ipAddress: the IP clients use to log in to Amoeba (commented out in this setup) -->

<!--

<property name="ipAddress">127.0.0.1</property>

-->

<property name="connectionFactory">

<bean class="com.meidusa.amoeba.mysql.net.MysqlClientConnectionFactory">

<property name="sendBufferSize">128</property>

<property name="receiveBufferSize">64</property>

</bean>

</property>

<property name="authenticateProvider">

<bean class="com.meidusa.amoeba.mysql.server.MysqlClientAuthenticator">

<property name="user">root</property> <!--username clients use when connecting to Amoeba-->

<property name="password">123456</property> <!--password clients use when connecting to Amoeba-->

<property name="filter">

<bean class="com.meidusa.toolkit.net.authenticate.server.IPAccessController">

<property name="ipFile">${amoeba.home}/conf/access_list.conf</property>

</bean>

</property>

</bean>

</property>

</service>

<runtime class="com.meidusa.amoeba.mysql.context.MysqlRuntimeContext">

<!-- proxy server client process thread size -->

<property name="executeThreadSize">128</property>

<!-- per connection cache prepared statement size -->

<property name="statementCacheSize">500</property>

<!-- default charset -->

<property name="serverCharset">utf8</property>

<!-- query timeout( default: 60 second , TimeUnit:second) -->

<property name="queryTimeout">60</property>

</runtime>

</proxy>

<!--

Each ConnectionManager will start as thread

manager responsible for the Connection IO read , Death Detection

-->

<connectionManagerList>

<connectionManager name="defaultManager" class="com.meidusa.toolkit.net.MultiConnectionManagerWrapper">

<property name="subManagerClassName">com.meidusa.toolkit.net.AuthingableConnectionManager</property>

</connectionManager>

</connectionManagerList>

<!-- default using file loader -->

<dbServerLoader class="com.meidusa.amoeba.context.DBServerConfigFileLoader">

<property name="configFile">${amoeba.home}/conf/dbServers.xml</property>

</dbServerLoader>

<queryRouter class="com.meidusa.amoeba.mysql.parser.MysqlQueryRouter">

<property name="ruleLoader">

<bean class="com.meidusa.amoeba.route.TableRuleFileLoader">

<property name="ruleFile">${amoeba.home}/conf/rule.xml</property>

<property name="functionFile">${amoeba.home}/conf/ruleFunctionMap.xml</property>

</bean>

</property>

<property name="sqlFunctionFile">${amoeba.home}/conf/functionMap.xml</property>

<property name="LRUMapSize">1500</property>

<property name="defaultPool">writedb</property> <!--server pool used by default-->

<property name="writePool">writedb</property> <!--name of the writer pool-->

<property name="readPool">myslaves</property> <!--name of the reader pool-->

<property name="needParse">true</property>

</queryRouter>

</amoeba:configuration>

5. Grant privileges to the amoeba user

On master1, run the following (the password must match the one set in dbServers.xml):

create database amoeba;
grant all on *.* to 'amoeba'@'192.168.6.109' identified by '12345';
flush privileges;

6. Adjust the JVM settings

vim /usr/local/amoeba/jvm.properties

JVM_OPTIONS="-server -Xms256m -Xmx1024m -Xss256k"

Set -Xss to 256k or more; otherwise the service will not start.
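Since the service refuses to start with a stack size below this, the -Xss value can be checked before launching. A minimal bash sketch; `xss_ok` is a hypothetical name, the 256k threshold comes from the note above, and it assumes the options sit on a single JVM_OPTIONS= line as in jvm.properties.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read jvm.properties on stdin, take the last -Xss
# option on the JVM_OPTIONS line, and succeed only if it is at least
# MIN_KB kilobytes ($1, default 256). A value without a unit is in bytes.
xss_ok() {
  local min_kb=${1:-256} line opt num unit kb
  line=$(grep -m1 '^JVM_OPTIONS=') || return 1
  opt=$(grep -oE -- '-Xss[0-9]+[kKmMgG]?' <<<"$line" | tail -n1)
  [ -n "$opt" ] || return 1
  num=${opt#-Xss}
  unit=${num##*[0-9]}           # "k", "m", "g" or empty
  num=${num%"$unit"}
  case $unit in
    k|K) kb=$num ;;
    m|M) kb=$(( num * 1024 )) ;;
    g|G) kb=$(( num * 1024 * 1024 )) ;;
    *)   kb=$(( num / 1024 )) ;;
  esac
  printf 'Xss = %sk (minimum %sk)\n' "$kb" "$min_kb"
  [ "$kb" -ge "$min_kb" ]
}
```

Usage: run `xss_ok < /usr/local/amoeba/jvm.properties` before starting the launcher.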

7. Start the service

/usr/local/amoeba/bin/launcher &

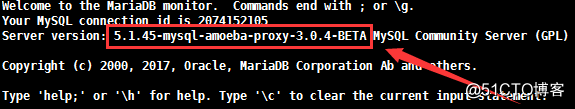

8. Log in to Amoeba from the monitor

mysql -uroot -p123456 -h127.0.0.1 -P8066

If you see output like the above, the login succeeded.

The final architecture diagram:

MySQL high availability and read/write splitting with MMM (advanced edition, including Amoeba)


Original article: http://blog.51cto.com/forall/2147017