Tags: generator minutes tcp IP address mib running sele logs openjdk

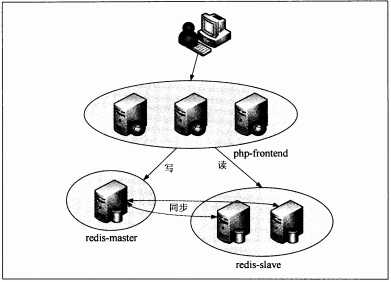

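We start with an example, Guestbook, to get a feel for the basic Kubernetes operations on containerized applications. The example is built from Pod, RC (ReplicationController), and Service resource objects. Architecture diagram:

①. Create the RC and Service for redis-master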

    [root@k8s_master php-redis]# cat redis-master-controller-RC.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: redis-master          # name of the RC
      labels:
        name: redis-master        # label of the RC
    spec:
      replicas: 1                 # number of pod replicas
      selector:
        name: redis-master        # label used to select the pods this RC manages
      template:
        metadata:
          labels:
            name: redis-master    # pod label; must match the selector above
        spec:                     # container settings of the pod
          containers:
          - name: master
            image: docker.io/kubeguide/redis-master
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 6379

    [root@k8s_master php-redis]# cat redis-master-controller-Service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: redis-master
      labels:
        name: redis-master
    spec:
      ports:
      - port: 6379                # port the Service exposes (freely chosen)
        targetPort: 6379          # port the container in the pod listens on
      selector:
        name: redis-master        # label of the pods to route to

Run the following command to create them:

kubectl create -f redis-master-controller-RC.yaml -f redis-master-controller-Service.yaml

②. Create the redis-slave RC and Service

    [root@k8s_master php-redis]# cat redis-slave-controller-RC.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: redis-slave
      labels:
        name: redis-slave
    spec:
      replicas: 2
      selector:
        name: redis-slave
      template:
        metadata:
          labels:
            name: redis-slave
        spec:
          containers:
          - name: slave
            image: docker.io/kubeguide/guestbook-redis-slave
            imagePullPolicy: IfNotPresent
            env:                  # read the redis-master Service IP from the pod's environment variables
            - name: GET_HOSTS_FROM
              value: env
            ports:
            - containerPort: 6379

    [root@k8s_master php-redis]# cat redis-slave-controller-Service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: redis-slave
      labels:
        name: redis-slave
    spec:
      ports:
      - port: 6379
      selector:
        name: redis-slave

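Note:

When a redis-slave pod is created, Kubernetes automatically injects environment variables for the Services that already exist, including redis-master, into its containers. The redis-server process in redis-slave can therefore read the IP address of the redis-master Service directly from the REDIS_MASTER_SERVICE_HOST environment variable. If this env entry is not set, redis-slave reaches the master through the Service name "redis-master" instead. That path uses DNS-based service discovery and requires the skydns service to be configured.

The environment variables of a slave pod look like this: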

    [root@k8s_master php-redis]# kubectl get pods
    NAME                 READY     STATUS    RESTARTS   AGE
    frontend-7kkwx       1/1       Running   0          1h
    frontend-9hbct       1/1       Running   0          1h
    frontend-vpq8c       1/1       Running   0          1h
    redis-master-0k0m2   1/1       Running   0          2h
    redis-slave-c5nlk    1/1       Running   0          1h
    redis-slave-qr0pn    1/1       Running   0          1h

    [root@k8s_master php-redis]# kubectl exec redis-slave-c5nlk env
    PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
    HOSTNAME=redis-slave-c5nlk
    GET_HOSTS_FROM=env
    KUBERNETES_PORT=tcp://10.254.0.1:443
    KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1
    REDIS_MASTER_SERVICE_HOST=10.254.85.180
    KUBERNETES_SERVICE_PORT=443
    REDIS_MASTER_PORT_6379_TCP_PROTO=tcp
    KUBERNETES_SERVICE_PORT_HTTPS=443
    KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443
    REDIS_MASTER_PORT_6379_TCP=tcp://10.254.85.180:6379
    REDIS_MASTER_PORT_6379_TCP_PORT=6379
    REDIS_MASTER_PORT_6379_TCP_ADDR=10.254.85.180
    KUBERNETES_SERVICE_HOST=10.254.0.1
    KUBERNETES_PORT_443_TCP_PROTO=tcp
    KUBERNETES_PORT_443_TCP_PORT=443
    REDIS_MASTER_SERVICE_PORT=6379
    REDIS_MASTER_PORT=tcp://10.254.85.180:6379
    REDIS_VERSION=3.0.3
    REDIS_DOWNLOAD_URL=http://download.redis.io/releases/redis-3.0.3.tar.gz
    REDIS_DOWNLOAD_SHA1=0e2d7707327986ae652df717059354b358b83358
    HOME=/root
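The Service variables in this listing follow a fixed naming pattern: the Service name is upper-cased, dashes become underscores, and suffixes such as `_SERVICE_HOST` and `_SERVICE_PORT` are appended. As a minimal sketch of that mapping (the helper name `svc_env_prefix` is made up for illustration and is not part of Kubernetes):

```shell
# Hypothetical helper: derive the environment-variable prefix used for a Service name.
svc_env_prefix() {
  # upper-case the letters and turn '-' into '_'
  printf '%s' "$1" | tr 'a-z-' 'A-Z_'
}

# Inside a pod, the redis-master address would be looked up under these names.
# The variables only exist if the Service was created before the pod started.
prefix=$(svc_env_prefix redis-master)
echo "${prefix}_SERVICE_HOST"
echo "${prefix}_SERVICE_PORT"
```

For `redis-master` this yields the same `REDIS_MASTER_SERVICE_HOST` and `REDIS_MASTER_SERVICE_PORT` names that appear in the `kubectl exec ... env` output.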

Create them:

kubectl create -f redis-slave-controller-RC.yaml -f redis-slave-controller-Service.yaml

③. Create the frontend RC and Service

    [root@k8s_master php-redis]# cat frontend-controller.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: frontend
      labels:
        name: frontend
    spec:
      replicas: 3
      selector:
        name: frontend
      template:
        metadata:
          labels:
            name: frontend
        spec:
          containers:
          - name: frontend
            image: docker.io/kubeguide/guestbook-php-frontend
            imagePullPolicy: IfNotPresent
            env:
            - name: GET_HOSTS_FROM
              value: env
            ports:
            - containerPort: 80

    [root@k8s_master php-redis]# cat frontend-Service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
      labels:
        name: frontend
    spec:
      type: NodePort
      ports:
      - port: 80
        nodePort: 30001
      selector:
        name: frontend

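Note:

The env entry in the container spec tells the application to obtain the IP addresses of the redis-master and redis-slave Services from environment variables.

Create them: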

kubectl create -f frontend-controller.yaml -f frontend-Service.yaml

View all resources:

    [root@k8s_master ~]# kubectl get pods -o wide
    NAME                 READY     STATUS    RESTARTS   AGE       IP          NODE
    frontend-7kkwx       1/1       Running   0          2h        10.1.34.3   192.168.132.136
    frontend-9hbct       1/1       Running   0          2h        10.1.20.4   192.168.132.149
    frontend-vpq8c       1/1       Running   0          2h        10.1.34.4   192.168.132.136
    redis-master-0k0m2   1/1       Running   0          3h        10.1.20.2   192.168.132.149
    redis-slave-c5nlk    1/1       Running   0          2h        10.1.34.2   192.168.132.136
    redis-slave-qr0pn    1/1       Running   0          2h        10.1.20.3   192.168.132.149

    [root@k8s_master ~]# kubectl get rc -o wide
    NAME           DESIRED   CURRENT   READY   AGE   CONTAINER(S)   IMAGE(S)                                     SELECTOR
    frontend       3         3         3       2h    frontend       docker.io/kubeguide/guestbook-php-frontend   name=frontend
    redis-master   1         1         1       3h    master         docker.io/kubeguide/redis-master             name=redis-master
    redis-slave    2         2         2       2h    slave          docker.io/kubeguide/guestbook-redis-slave    name=redis-slave

    [root@k8s_master ~]# kubectl get service -o wide
    NAME           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE    SELECTOR
    frontend       10.254.51.228   <nodes>       80:30001/TCP   2h     name=frontend
    kubernetes     10.254.0.1      <none>        443/TCP        272d   <none>
    redis-master   10.254.85.180   <none>        6379/TCP       3h     name=redis-master
    redis-slave    10.254.70.40    <none>        6379/TCP       2h     name=redis-slave

    [root@k8s_master ~]# kubectl describe service redis-master redis-slave frontend
    Name:              redis-master
    Namespace:         default
    Labels:            name=redis-master
    Selector:          name=redis-master
    Type:              ClusterIP
    IP:                10.254.85.180
    Port:              <unset> 6379/TCP
    Endpoints:         10.1.20.2:6379
    Session Affinity:  None
    No events.

    Name:              redis-slave
    Namespace:         default
    Labels:            name=redis-slave
    Selector:          name=redis-slave
    Type:              ClusterIP
    IP:                10.254.70.40
    Port:              <unset> 6379/TCP
    Endpoints:         10.1.20.3:6379,10.1.34.2:6379
    Session Affinity:  None
    No events.

    Name:              frontend
    Namespace:         default
    Labels:            name=frontend
    Selector:          name=frontend
    Type:              NodePort
    IP:                10.254.51.228
    Port:              <unset> 80/TCP
    NodePort:          <unset> 30001/TCP
    Endpoints:         10.1.20.4:80,10.1.34.3:80,10.1.34.4:80
    Session Affinity:  None
    No events.

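Open port 30001 on a node to see the Guestbook page. Entries are written to and read from Redis and show up in real time.

Full contents of a pod definition file in YAML format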

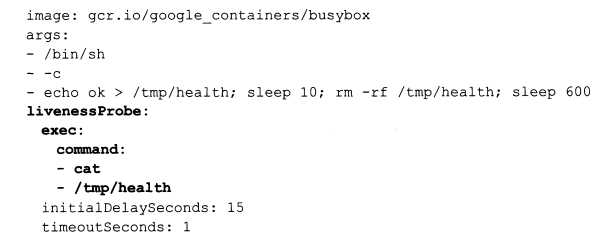

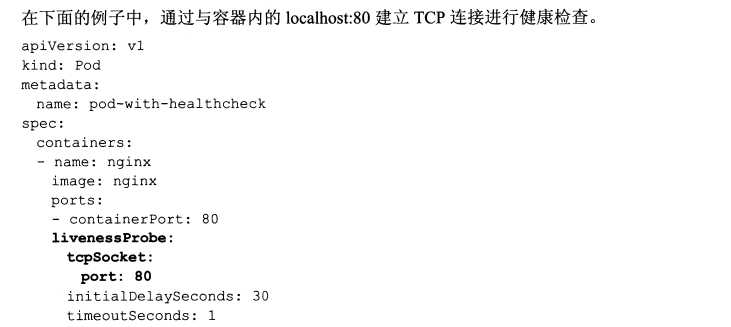

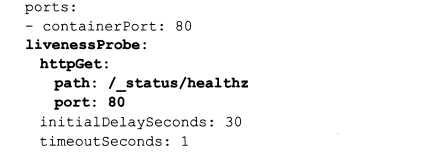

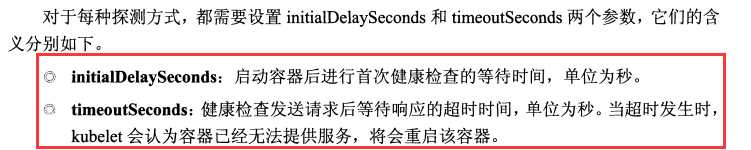

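    apiVersion: v1                # required; API version, e.g. v1; must be listed by `kubectl api-versions`
    kind: Pod                     # required; Pod
    metadata:                     # required; metadata
      name: string                # required; pod name
      namespace: string           # optional; namespace of the pod, defaults to "default"
      labels:                     # custom labels, as key: value pairs
        name: string
      annotations:                # custom annotations, as key: value pairs
        name: string
    spec:                         # required; detailed definition of the pod
      containers:                 # required; list of containers in the pod
      - name: string              # required; container name, must conform to RFC 1035
        image: string             # required; image name or image address
        imagePullPolicy: [ Always | Never | IfNotPresent ]  # image pull policy: Always always downloads the image, IfNotPresent prefers a local image and downloads otherwise, Never uses local images only
        command: [string]         # startup command list; if omitted, the command baked into the image is used
        args: [string]            # arguments for the startup command
        workingDir: string        # working directory of the container
        volumeMounts:             # volumes mounted inside the container
        - name: string            # name of a shared volume defined by the pod; must match a name in the volumes[] section
          mountPath: string       # absolute mount path inside the container, should be shorter than 512 characters
          readOnly: boolean       # whether the mount is read-only
        ports:                    # list of ports to expose
        - name: string            # port name
          containerPort: int      # port the container listens on
          hostPort: int           # port the host listens on, defaults to the same as containerPort
          protocol: string        # port protocol, TCP or UDP, defaults to TCP
        env:                      # environment variables set before the container runs
        - name: string            # variable name
          value: string           # variable value
        resources:                # resource limits and requests
          limits:                 # resource limits
            cpu: string           # CPU limit in cores; passed to the docker run --cpu-shares parameter
            memory: string        # memory limit, in MiB/GiB; passed to the docker run --memory parameter
          requests:               # resource requests
            cpu: string           # CPU request, the amount initially available when the container starts
            memory: string        # memory request, the amount initially available when the container starts
        livenessProbe:            # health check for the containers in the pod; after several failed probes the container is restarted automatically. Available methods are exec, httpGet and tcpSocket; set only one of them per container
          exec:                   # use the exec method
            command: [string]     # command or script to run for the exec method
          httpGet:                # use the HTTP GET method; path and port must be specified
            path: string
            port: number
            host: string
            scheme: string
            httpHeaders:
            - name: string
              value: string
          tcpSocket:              # use the tcpSocket method
            port: number
          initialDelaySeconds: 0  # delay before the first probe after the container has started, in seconds
          timeoutSeconds: 0       # how long a probe waits for a response, in seconds, default 1
          periodSeconds: 0        # interval between probes, in seconds, default 10
          successThreshold: 0
          failureThreshold: 0
        securityContext:
          privileged: false
      restartPolicy: [ Always | Never | OnFailure ]  # pod restart policy: with Always the kubelet restarts the container however it terminated, with OnFailure only when it exits with a non-zero code, with Never it is not restarted
      nodeSelector: object        # schedule the pod onto nodes carrying this label, given as key: value
      imagePullSecrets:           # name of the secret used when pulling images
      - name: string
      hostNetwork: false          # whether to use host networking, default false; true means the pod uses the host's network
      volumes:                    # shared volumes defined on this pod
      - name: string              # volume name (there are many volume types)
        emptyDir: {}              # emptyDir volume: a temporary directory with the same lifetime as the pod; the value is empty
        hostPath:                 # hostPath volume: mounts a directory of the host the pod runs on
          path: string            # directory on the host that is mounted into the container
        secret:                   # secret volume: mounts a predefined Secret object into the container
          secretName: string
          items:
          - key: string
            path: string
        configMap:                # configMap volume: mounts a predefined ConfigMap object into the container
          name: string
          items:
          - key: string
            path: string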

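A Docker container runs as a single foreground command. Some applications cannot be changed to run in the foreground; in that case the open-source tool supervisor can be used to provide the foreground process.

supervisor can start several background applications while supervisor itself stays in the foreground, which satisfies the Kubernetes requirement for how a container starts.

A pod consists of one or more containers. In the example above, the redis container and the frontend container could be placed in a single pod, as follows: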

    [root@k8s_master php-redis]# cat frontend-localredis-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: redis-php
      namespace: kube-system
      labels:
        name: redis-php
    spec:
      containers:
      - name: frontend
        image: docker.io/kubeguide/guestbook-php-frontend
        ports:
        - containerPort: 80
      - name: redis
        image: docker.io/kubeguide/redis-master
        ports:
        - containerPort: 6379

    [root@k8s_master php-redis]# kubectl create -f frontend-localredis-pod.yaml

    [root@k8s_master php-redis]# kubectl get pod --namespace=kube-system -o wide
    NAME                 READY     STATUS    RESTARTS   AGE       IP          NODE
    kube-dns-v11-dtfxq   4/4       Running   30         7d        10.1.34.5   192.168.132.136
    redis-php            2/2       Running   0          5m        10.1.20.5   192.168.132.149

    [root@k8s_master php-redis]# kubectl describe pod redis-php --namespace=kube-system
    Name:         redis-php
    Namespace:    kube-system
    Node:         192.168.132.149/192.168.132.149
    Start Time:   Wed, 18 Jul 2018 20:13:01 +0800
    Labels:       name=redis-php
    Status:       Running
    IP:           10.1.20.5
    Controllers:  <none>
    Containers:
      frontend:
        Container ID:           docker://0796e31721992a15dc465de5cbd2eb52674593564525982442996f8bfa484261
        Image:                  docker.io/kubeguide/guestbook-php-frontend
        Image ID:               docker-pullable://docker.io/kubeguide/guestbook-php-frontend@sha256:195181e0263bcee4ae0c3e79352bbd3487224c0042f1b9ca8543b788962188ce
        Port:                   80/TCP
        State:                  Running
          Started:              Wed, 18 Jul 2018 20:13:30 +0800
        Ready:                  True
        Restart Count:          0
        Volume Mounts:          <none>
        Environment Variables:  <none>
      redis:
        Container ID:           docker://7faf99750a540f7f13e2464d41a0d56059935cdf49692434396deba03b82bb56
        Image:                  docker.io/kubeguide/redis-master
        Image ID:               docker-pullable://docker.io/kubeguide/redis-master@sha256:e11eae36476b02a195693689f88a325b30540f5c15adbf531caaecceb65f5b4d
        Port:                   6379/TCP
        State:                  Running
          Started:              Wed, 18 Jul 2018 20:13:42 +0800
        Ready:                  True
        Restart Count:          0
        Volume Mounts:          <none>
        Environment Variables:  <none>
    Conditions:
      Type          Status
      Initialized   True
      Ready         True
      PodScheduled  True
    No volumes.
    QoS Class:    BestEffort
    Tolerations:  <none>
    Events:
      FirstSeen  LastSeen  Count  From                       SubObjectPath              Type    Reason     Message
      ---------  --------  -----  ----                       -------------              ----    ------     -------
      5m         5m        1      {default-scheduler }                                  Normal  Scheduled  Successfully assigned redis-php to 192.168.132.149
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{frontend}  Normal  Pulling    pulling image "docker.io/kubeguide/guestbook-php-frontend"
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{frontend}  Normal  Pulled     Successfully pulled image "docker.io/kubeguide/guestbook-php-frontend"
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{frontend}  Normal  Created    Created container with docker id 0796e3172199; Security:[seccomp=unconfined]
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{frontend}  Normal  Started    Started container with docker id 0796e3172199
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{redis}     Normal  Pulling    pulling image "docker.io/kubeguide/redis-master"
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{redis}     Normal  Pulled     Successfully pulled image "docker.io/kubeguide/redis-master"
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{redis}     Normal  Created    Created container with docker id 7faf99750a54; Security:[seccomp=unconfined]
      3m         3m        1      {kubelet 192.168.132.149}  spec.containers{redis}     Normal  Started    Started container with docker id 7faf99750a54

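Because they are in the same pod, the two containers can reach each other through localhost (this can be verified by entering the pod and testing with telnet or curl).

A static pod is a pod that is managed by the kubelet and exists only on a specific node. Static pods cannot be managed through the API server, cannot be associated with an RC, Service, or Deployment, and the kubelet cannot health-check them. A static pod is always created by the kubelet and always runs on the node where that kubelet runs.

There are two ways to create a static pod: a configuration file or HTTP.

Creating a static pod from a configuration file

First set the kubelet startup parameter "--config" to the directory that holds the configuration files to watch. The kubelet scans this directory periodically and creates pods from the YAML or JSON files it finds there.

For example: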

    # Edit the configuration file
    [root@k8s_node1 kubernetes]# cat kubelet |grep KUBELET_ARGS
    KUBELET_ARGS="--config=/etc/kubelet.d/ --cluster_dns=10.254.10.2 --pod_infra_container_image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

    # Restart the service
    [root@k8s_node1 kubernetes]# systemctl restart kubelet

    # Pod manifest
    [root@k8s_node1 kubernetes]# cat /etc/kubelet.d/static-web.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: static-web
      namespace: kube-system
      labels:
        name: static-web
    spec:
      containers:
      - name: static-web
        image: nginx
        ports:
        - name: web
          containerPort: 80

    # After a short wait the container is created automatically
    [root@k8s_node1 ~]# docker ps
    CONTAINER ID   IMAGE   COMMAND                 CREATED         STATUS         PORTS   NAMES
    a420210bcaf1   nginx   "nginx -g 'daemon off"  8 minutes ago   Up 8 minutes           k8s_static-web.68ee0075_static-web-192.168.132.136_kube-system_11ce1c67a043c4209fb23dfb082b23da_14a4ab0e

    # Check from the master node
    [root@k8s_master etc]# kubectl get pods --namespace=kube-system
    NAME                         READY     STATUS    RESTARTS   AGE
    kube-dns-v11-dtfxq           4/4       Running   30         7d
    redis-php                    2/2       Running   0          42m
    static-web-192.168.132.136   1/1       Running   0          2m

    # Note: deleting the YAML file from the kubelet.d directory automatically removes the container it created

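Sharing a volume between containers in the same pod

Example: create a pod with two containers. The pod defines a volume named app-logs; tomcat writes its logs to it and busybox reads them.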

    [root@k8s_master pod_test]# cat pod-volume-applogs.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: volume-pod
      namespace: kube-system
      labels:
        name: volume-pod
    spec:
      containers:
      - name: tomcat
        image: tomcat
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: app-logs
          mountPath: /usr/local/tomcat/logs/
      - name: busybox
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh","-c","tail -f /logs/catalina*.log"]
        volumeMounts:
        - name: app-logs
          mountPath: /logs
      volumes:
      - name: app-logs
        emptyDir: {}

    # The volume is named app-logs and has type emptyDir. It is mounted at /usr/local/tomcat/logs in the tomcat container
    # and at /logs in the busybox container. Tomcat writes files into this directory when it starts.

    # The startup command of the busybox container tails the tomcat log

View the logs and related files

    # View the logs
    [root@k8s_master pod_test]# kubectl logs volume-pod -c busybox --namespace=kube-system
    18-Jul-2018 13:29:48.498 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server version: Apache Tomcat/8.5.32
    18-Jul-2018 13:29:48.500 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server built: Jun 20 2018 19:50:35 UTC
    18-Jul-2018 13:29:48.500 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Server number: 8.5.32.0
    18-Jul-2018 13:29:48.501 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Name: Linux
    18-Jul-2018 13:29:48.501 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log OS Version: 3.10.0-514.26.2.el7.x86_64
    18-Jul-2018 13:29:48.501 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Architecture: amd64
    18-Jul-2018 13:29:48.501 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Java Home: /usr/lib/jvm/java-8-openjdk-amd64/jre
    18-Jul-2018 13:29:48.501 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log JVM Version: 1.8.0_171-8u171-b11-1~deb9u1-b11
    18-Jul-2018 13:29:48.515 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log JVM Vendor: Oracle Corporation
    18-Jul-2018 13:29:48.515 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_BASE: /usr/local/tomcat
    18-Jul-2018 13:29:48.515 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log CATALINA_HOME: /usr/local/tomcat
    18-Jul-2018 13:29:48.519 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.util.logging.config.file=/usr/local/tomcat/conf/logging.properties
    18-Jul-2018 13:29:48.526 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.util.logging.manager=org.apache.juli.ClassLoaderLogManager
    18-Jul-2018 13:29:48.526 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djdk.tls.ephemeralDHKeySize=2048
    18-Jul-2018 13:29:48.530 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.protocol.handler.pkgs=org.apache.catalina.webresources
    18-Jul-2018 13:29:48.530 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dorg.apache.catalina.security.SecurityListener.UMASK=0027
    18-Jul-2018 13:29:48.530 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dignore.endorsed.dirs=
    18-Jul-2018 13:29:48.531 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dcatalina.base=/usr/local/tomcat
    18-Jul-2018 13:29:48.532 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Dcatalina.home=/usr/local/tomcat
    18-Jul-2018 13:29:48.532 INFO [main] org.apache.catalina.startup.VersionLoggerListener.log Command line argument: -Djava.io.tmpdir=/usr/local/tomcat/temp
    18-Jul-2018 13:29:48.532 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent Loaded APR based Apache Tomcat Native library [1.2.17] using APR version [1.5.2].
    18-Jul-2018 13:29:48.533 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent APR capabilities: IPv6 [true], sendfile [true], accept filters [false], random [true].
    18-Jul-2018 13:29:48.533 INFO [main] org.apache.catalina.core.AprLifecycleListener.lifecycleEvent APR/OpenSSL configuration: useAprConnector [false], useOpenSSL [true]
    18-Jul-2018 13:29:48.547 INFO [main] org.apache.catalina.core.AprLifecycleListener.initializeSSL OpenSSL successfully initialized [OpenSSL 1.1.0f 25 May 2017]
    18-Jul-2018 13:29:50.419 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["http-nio-8080"]
    18-Jul-2018 13:29:50.818 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read
    18-Jul-2018 13:29:50.856 INFO [main] org.apache.coyote.AbstractProtocol.init Initializing ProtocolHandler ["ajp-nio-8009"]
    18-Jul-2018 13:29:50.867 INFO [main] org.apache.tomcat.util.net.NioSelectorPool.getSharedSelector Using a shared selector for servlet write/read
    18-Jul-2018 13:29:50.872 INFO [main] org.apache.catalina.startup.Catalina.load Initialization processed in 10254 ms
    18-Jul-2018 13:29:50.952 INFO [main] org.apache.catalina.core.StandardService.startInternal Starting service [Catalina]
    18-Jul-2018 13:29:50.952 INFO [main] org.apache.catalina.core.StandardEngine.startInternal Starting Servlet Engine: Apache Tomcat/8.5.32
    18-Jul-2018 13:29:50.975 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/ROOT]
    18-Jul-2018 13:29:55.132 WARNING [localhost-startStop-1] org.apache.catalina.util.SessionIdGeneratorBase.createSecureRandom Creation of SecureRandom instance for session ID generation using [SHA1PRNG] took [1,458] milliseconds.
    18-Jul-2018 13:29:55.291 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/ROOT] has finished in [4,315] ms
    18-Jul-2018 13:29:55.291 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/docs]
    18-Jul-2018 13:29:55.367 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/docs] has finished in [76] ms
    18-Jul-2018 13:29:55.367 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/examples]
    18-Jul-2018 13:29:56.747 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/examples] has finished in [1,380] ms
    18-Jul-2018 13:29:56.747 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/host-manager]
    18-Jul-2018 13:29:57.305 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/host-manager] has finished in [558] ms
    18-Jul-2018 13:29:57.317 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/manager]
    18-Jul-2018 13:29:57.381 INFO [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/manager] has finished in [75] ms
    18-Jul-2018 13:29:57.401 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["http-nio-8080"]
    18-Jul-2018 13:29:57.419 INFO [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["ajp-nio-8009"]
    18-Jul-2018 13:29:57.560 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 6687 ms

    # List the log files
    [root@k8s_master pod_test]# kubectl exec -it volume-pod -c tomcat --namespace=kube-system -- ls /usr/local/tomcat/logs
    catalina.2018-07-18.log  host-manager.2018-07-18.log  localhost.2018-07-18.log  localhost_access_log.2018-07-18.txt  manager.2018-07-18.log

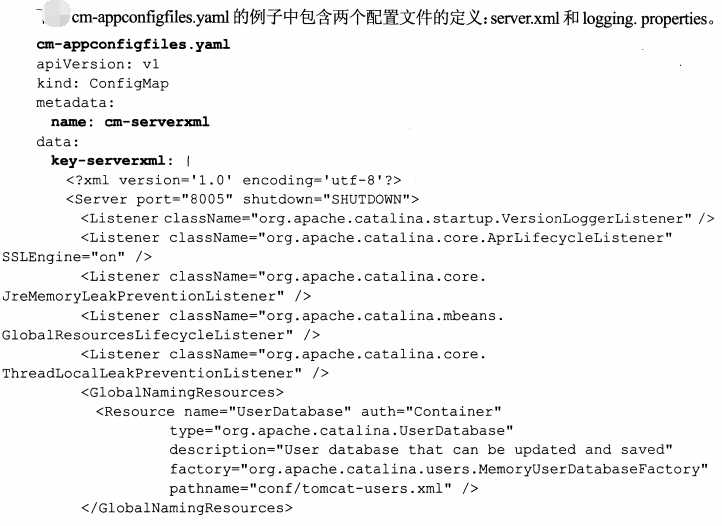

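Separating an application's configuration from the program itself makes the program easier to reuse. Kubernetes provides a unified, cluster-wide configuration mechanism for this: ConfigMap.

ConfigMap usage

A ConfigMap is stored in Kubernetes as one or more key: value pairs for applications to consume. A value can represent a single variable or the entire contents of a configuration file. A ConfigMap can be created from a YAML file or directly on the command line with kubectl create configmap.

YAML file method

Create a YAML file and then run create: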

    [root@k8s_master configmap]# cat cm.appvars.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: cm-appvars
    data:
      apploglevel: info       # a value may also hold the entire contents of a config file (e.g. a Tomcat config file)
      appdatadir: /var/data/

    [root@k8s_master configmap]# kubectl create -f cm.appvars.yaml
    configmap "cm-appvars" created

    [root@k8s_master configmap]# kubectl get configmap
    NAME         DATA      AGE
    cm-appvars   2         14s

    [root@k8s_master configmap]# kubectl describe configmap cm-appvars
    Name:         cm-appvars
    Namespace:    default
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    appdatadir:   10 bytes
    apploglevel:  4 bytes

Creating a ConfigMap from the command line

Use the --from-file or --from-literal parameter; several of them can be given in one command. Examples:

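    # Create from files with --from-file; the key name can be specified
    kubectl create configmap NAME --from-file=[key=]source --from-file=[key=]source

    # Create from a directory with --from-file; each file name in the directory becomes a key and the file's content becomes the value
    kubectl create configmap NAME --from-file=config-files-dir

    # Create from literals with --from-literal; each key=value pair given on the command line becomes one entry
    kubectl create configmap NAME --from-literal=key1=value1 --from-literal=key2=value2

    # Equivalent of the YAML file shown earlier:
    kubectl create configmap cm-appvars --from-literal=apploglevel=info --from-literal=appdatadir=/var/data/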
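To make the directory form of `--from-file` more concrete, here is a small sketch that previews the keys such a directory would produce, one per regular file, named after the file. The function name `preview_configmap_keys` and the throwaway directory are assumptions for illustration; the script does not call kubectl and does not check whether the file names are valid ConfigMap keys.

```shell
# Hypothetical preview: list "key (size)" for every regular file in a directory,
# mirroring how --from-file=<dir> turns file names into keys and contents into values.
preview_configmap_keys() {
  for f in "$1"/*; do
    if [ -f "$f" ]; then
      printf '%s (%s bytes)\n' "$(basename "$f")" "$(wc -c < "$f" | tr -d ' ')"
    fi
  done
}

# Demo with a temporary directory holding the two values used in this article.
demo_dir=$(mktemp -d)
printf 'info' > "$demo_dir/apploglevel"
printf '/var/data/' > "$demo_dir/appdatadir"
preview_configmap_keys "$demo_dir"
```

The sizes printed for the two demo files agree with the `10 bytes` and `4 bytes` reported by `kubectl describe configmap cm-appvars`.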

How containerized applications consume a ConfigMap

Through environment variables

    # The ConfigMap
    [root@k8s_master configmap]# cat cm.appvars.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: cm-appvars            # name of the ConfigMap once created
    data:
      apploglevel: info           # keys and values stored in the ConfigMap
      appdatadir: /var/data/

    # Create a pod that prints its environment variables and then exits
    [root@k8s_master configmap]# cat cm-testpod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: cm-test-pod
    spec:
      containers:
      - name: cm-test
        image: busybox
        command: ["/bin/sh", "-c", "env|grep APP"]
        env:
        - name: APPLOGLEVEL       # name of the environment variable
          valueFrom:              # where the value for key "apploglevel" comes from
            configMapKeyRef:
              name: cm-appvars    # the ConfigMap's metadata.name (not the file name); it must already exist in the same namespace as this pod
              key: apploglevel    # the key is apploglevel
        - name: APPDATADIR
          valueFrom:
            configMapKeyRef:
              name: cm-appvars
              key: appdatadir
      restartPolicy: Never        # restart policy (never restart)

Check the result

    # cm-test-pod is the name of the pod created above
    [root@k8s_master configmap]# kubectl logs cm-test-pod
    APPDATADIR=/var/data/
    APPLOGLEVEL=info

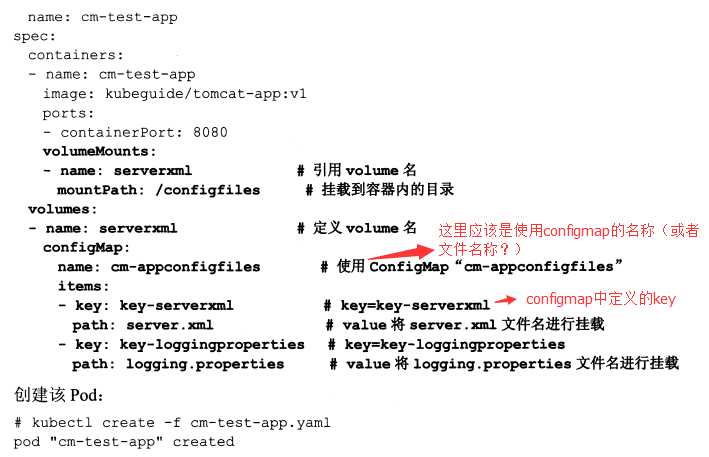

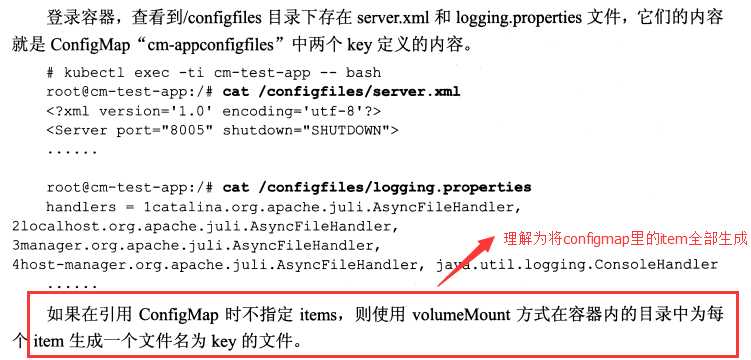

Through a volume mount

ConfigMap

Pod YAML file

Test

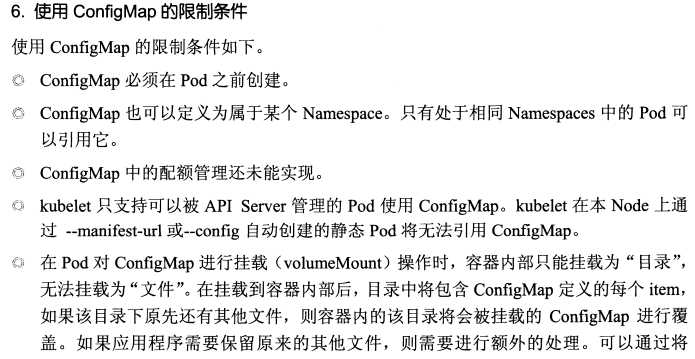
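The listings for the volume-mount method are not reproduced in the text here. As a hedged sketch of what such a pod could look like, assuming the `cm-appvars` ConfigMap from the environment-variable example already exists in the same namespace, the snippet below writes a manifest to disk. The pod name `cm-test-volume-pod`, the volume name `appvars`, and the mount path `/configfiles` are illustrative choices, not values from the original article.

```shell
# Write a pod manifest that mounts the cm-appvars ConfigMap as files.
# Pod name, volume name and mount path are assumptions made for this sketch.
cat > cm-test-volume-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: cm-test-volume-pod
spec:
  containers:
  - name: cm-test
    image: busybox
    # Each key of the ConfigMap appears as one file under the mount path.
    command: ["/bin/sh", "-c", "ls /configfiles && cat /configfiles/apploglevel"]
    volumeMounts:
    - name: appvars
      mountPath: /configfiles
  volumes:
  - name: appvars
    configMap:
      name: cm-appvars
  restartPolicy: Never
EOF
```

The pod could then be created with `kubectl create -f cm-test-volume-pod.yaml` and inspected with `kubectl logs cm-test-volume-pod`, which would be expected to list one file per ConfigMap key.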

Restrictions

Three ways to implement a LivenessProbe
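The pod template earlier in this article names the three probe methods: exec, httpGet, and tcpSocket. A minimal sketch showing all three side by side follows. The pod name `liveness-demo`, the container names, the probed file `/tmp/health`, and the timing values are assumptions for illustration; only the field names come from the template.

```shell
# Write a manifest with one container per probe method (all names are illustrative).
cat > liveness-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: liveness-demo
spec:
  containers:
  - name: exec-probe              # healthy while the command exits with code 0
    image: busybox
    command: ["/bin/sh", "-c", "touch /tmp/health; sleep 3600"]
    livenessProbe:
      exec:
        command: ["cat", "/tmp/health"]
      initialDelaySeconds: 15
      timeoutSeconds: 1
  - name: http-probe              # healthy while GET / returns a success status
    image: nginx
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /
        port: 80
      initialDelaySeconds: 15
      timeoutSeconds: 1
  - name: tcp-probe               # healthy while a TCP connection can be opened
    image: docker.io/kubeguide/redis-master
    ports:
    - containerPort: 6379
    livenessProbe:
      tcpSocket:
        port: 6379
      initialDelaySeconds: 15
      timeoutSeconds: 1
EOF
```

Each container sets exactly one probe method, in line with the template's remark that only one method should be configured per container.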

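Required conditions

When pods are created from an RC or Deployment, you can add settings to the YAML file at creation time so that the pods are scheduled onto specific nodes.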

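    # First attach labels to the nodes; the syntax is
    kubectl label nodes <node-name> <label-key>=<label-value>

    # Example:
    kubectl label nodes 192.168.132.149 labels=node-149

    # Then add a nodeSelector to the pod definition in the RC or Deployment YAML file, for example
    spec:
      containers:
      - name: nginx
        image: docker.io/nginx
        ports:
        - containerPort: 80
      nodeSelector:
        labels: node-149      # the label created above

If several nodes carry the same label, the scheduler picks one available node among them according to its scheduling algorithm.

If a pod specifies a nodeSelector and no node in the cluster carries the matching label, the pod cannot be scheduled, even if other usable nodes exist.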
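The matching rule can be pictured as a simple check: a node qualifies only if it carries every key=value pair named in the nodeSelector. The function below is a toy model of that rule in plain shell, not scheduler code; the name `node_matches` and the extra `zone=a` label are made up for the example.

```shell
# Toy model: succeed only if every selector pair is present in the node's labels.
# Both arguments are comma-separated key=value lists.
node_matches() {
  node_labels=",$1,"
  old_ifs=$IFS
  IFS=,
  for pair in $2; do
    case "$node_labels" in
      *",$pair,"*) ;;                       # this pair is present, keep checking
      *) IFS=$old_ifs; return 1 ;;          # one missing pair rules the node out
    esac
  done
  IFS=$old_ifs
  return 0
}

# The node labelled in the example above satisfies the selector ...
if node_matches "labels=node-149,zone=a" "labels=node-149"; then
  echo "192.168.132.149: eligible"
fi
# ... while a node without that label does not.
if ! node_matches "zone=a" "labels=node-149"; then
  echo "other node: not eligible"
fi
```

If no node passes this check, nothing is left for the scheduler to choose from, which is the situation described in the last paragraph above.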

Scaling pods up or down with the RC scale mechanism

    # Change the number of pods controlled by the redis-slave RC from 2 to 3
    kubectl scale rc redis-slave --replicas=3

HPA autoscaling

    # Take a web application as an example; only a CPU request of 200m is set here, with no upper limit
    [root@k8s_master php-redis]# cat frontend-controller.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: frontend
      labels:
        name: frontend
    spec:
      replicas: 3
      selector:
        name: frontend
      template:
        metadata:
          labels:
            name: frontend
        spec:
          containers:
          - name: frontend
            image: docker.io/kubeguide/guestbook-php-frontend
            imagePullPolicy: IfNotPresent
            env:
            - name: GET_HOSTS_FROM
              value: env
            resources:
              requests:
                cpu: 200m
            ports:
            - containerPort: 80

    # Create the RC
    [root@k8s_master php-redis]# kubectl create -f frontend-controller.yaml

    # Create the Service
    [root@k8s_master php-redis]# cat frontend-Service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
      labels:
        name: frontend
    spec:
      type: NodePort
      ports:
      - port: 80
        nodePort: 30001
      selector:
        name: frontend

    # Next, create an HPA controller for the RC
    # Method ①: autoscale the RC named frontend
    kubectl autoscale rc frontend --min=1 --max=10 --cpu-percent=50

    # Method ②
    [root@k8s_master php-redis]# cat frontend-hpa.yaml
    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: frontend
    spec:
      scaleTargetRef:
        apiVersion: v1
        kind: ReplicationController
        name: frontend
      minReplicas: 1
      maxReplicas: 10
      targetCPUUtilizationPercentage: 50

View the created HPA

kubectl get hpa

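Then create a pod to generate load

    # cat busybox-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
    spec:
      containers:
      - name: busybox
        image: busybox
        command: ["sleep","3600"]

    # Create the pod
    kubectl create -f busybox-pod.yaml

    # Log in to the container and run an endless wget loop against the web service
    # (busybox ships /bin/sh rather than bash; replace <frontend-cluster-ip> with the Service's cluster IP)
    kubectl exec -ti busybox -- /bin/sh
    # while true; do wget -q -O- http://<frontend-cluster-ip> > /dev/null; done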

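Wait a while, then watch the pod utilization collected by the HPA controller

    # View the HPA
    kubectl get hpa
    # Watch how the number of frontend replicas under the RC changes
    kubectl get rc

    # Then stop the loop, wait a while, and check the number of pod replicas again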
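The replica count the HPA aims for can be approximated with the formula given in the Kubernetes HPA documentation: desired = ceil(current replicas × current utilization / target utilization), clamped to the min/max range. The sketch below reproduces only that arithmetic; the function name `hpa_desired_replicas` is hypothetical, and the real controller additionally applies a tolerance band and waits between scaling decisions, both of which are ignored here.

```shell
# Hypothetical helper: hpa_desired_replicas <current> <cpu%> <target%> <min> <max>
# Computes ceil(current * cpu / target) with integer arithmetic, then clamps the result.
hpa_desired_replicas() {
  current=$1; util=$2; target=$3; min=$4; max=$5
  desired=$(( (current * util + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

# 3 frontend pods at 100% of their CPU request, target 50%, limits 1..10
hpa_desired_replicas 3 100 50 1 10
# after the load stops: 6 pods at 10% utilization
hpa_desired_replicas 6 10 50 1 10
```

With the settings used above (`--min=1 --max=10 --cpu-percent=50`), the first call models the scale-up during the wget loop and the second the scale-down after it stops.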

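A rolling update is done in one step with the kubectl rolling-update command. The command creates a new RC, then automatically reduces the number of pod replicas in the old RC step by step to 0 while increasing the replicas in the new RC from 0 to the target value, which upgrades the pods.

Note that the new RC and the old RC must be in the same namespace.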
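The hand-over between the two RCs can be pictured with a small simulation. This is a simplified model, assuming the new RC grows by one pod before the old RC shrinks by one in each step; the real command additionally enforces availability bounds and waits for pods to become ready. The function name `simulate_rolling_update` is made up for this sketch.

```shell
# Simplified model of a rolling update: raise the new RC by one pod,
# then lower the old RC by one pod, until the old RC is empty.
simulate_rolling_update() {
  old=$1      # current replicas of the old RC
  target=$2   # desired replicas of the new RC
  new=0
  while [ "$new" -lt "$target" ] || [ "$old" -gt 0 ]; do
    if [ "$new" -lt "$target" ]; then new=$((new + 1)); fi
    if [ "$old" -gt 0 ]; then old=$((old - 1)); fi
    echo "old=$old new=$new"
  done
}

# Three replicas moving from the old RC to the new one
simulate_rolling_update 3 3
```

Each printed line is one step of the transition; the loop ends when the old RC has no replicas left and the new RC has reached its target.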

Example: a rolling update based on the redis-master RC above

redis-master-controller-RC-v2.yaml

    [root@k8s_master php-redis]# cat redis-master-controller-RC-v2.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: redis-master-v2       # note: the RC name must differ from the old RC's name
      labels:
        name: redis-master
        version: v2
    spec:
      replicas: 1
      selector:
        name: redis-master-v2     # at least one selector label must differ from the old RC's labels, to mark this as the new RC
        version: v2
      template:
        metadata:
          labels:
            name: redis-master-v2
            version: v2
        spec:
          containers:
          - name: master
            image: docker.io/kubeguide/redis-master:2.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 6379

Run the rolling update

    [root@k8s_master php-redis]# kubectl rolling-update redis-master -f redis-master-controller-RC-v2.yaml

    # Rolling update without a configuration file
    kubectl rolling-update redis-master --image=redis-master:latest

Check the result

    [root@k8s_master php-redis]# kubectl rolling-update redis-master -f redis-master-controller-RC-v2.yaml
    Created redis-master-v2
    Scaling up redis-master-v2 from 0 to 1, scaling down redis-master from 1 to 0 (keep 1 pods available, don't exceed 2 pods)
    Scaling redis-master-v2 up to 1
    Scaling redis-master down to 0
    Update succeeded. Deleting redis-master
    replicationcontroller "redis-master" rolling updated to "redis-master-v2"

    # View the RCs
    [root@k8s_master php-redis]# kubectl get rc -o wide
    NAME              DESIRED   CURRENT   READY   AGE   CONTAINER(S)   IMAGE(S)                                     SELECTOR
    frontend          3         3         3       13h   frontend       docker.io/kubeguide/guestbook-php-frontend   name=frontend
    redis-master-v2   1         1         1       3m    master         docker.io/kubeguide/redis-master:latest      name=redis-master-v2,version=v2

Rollback

    # Rollback. This form failed when executed; see `kubectl rolling-update -h` for the usage
    kubectl rolling-update redis-master --image=redis-master --rollback

    # In this example, roll back as follows
    kubectl rolling-update redis-master redis-master-v2 --rollback

Check the result

    [root@k8s_master php-redis]# kubectl rolling-update redis-master redis-master-v2 --rollback
    Setting "redis-master" replicas to 1
    Continuing update with existing controller redis-master.
    Scaling up redis-master from 1 to 1, scaling down redis-master-v2 from 1 to 0 (keep 1 pods available, don't exceed 2 pods)
    Scaling redis-master-v2 down to 0
    Update succeeded. Deleting redis-master-v2
    replicationcontroller "redis-master" rolling updated to "redis-master-v2"

    [root@k8s_master php-redis]# kubectl get rc -o wide
    NAME           DESIRED   CURRENT   READY   AGE   CONTAINER(S)   IMAGE(S)                                     SELECTOR
    frontend       3         3         3       14h   frontend       docker.io/kubeguide/guestbook-php-frontend   name=frontend
    redis-master   1         1         1       29m   master         docker.io/kubeguide/redis-master             name=redis-master

    [root@k8s_master php-redis]# kubectl get pods
    NAME                 READY     STATUS    RESTARTS   AGE
    redis-master-cgbjd   1/1       Running   0          29m


Original article: https://www.cnblogs.com/FRESHMANS/p/9330806.html