Tags: lsb done kubenetes using img mod network efault view

1. Nodes

| Role | Hostname | IP |
| --- | --- | --- |
| master | k8s-55 | 10.2.49.55 |
| cluster-1 | k8s-54 | 10.2.49.54 |
| cluster-2 | k8s-53 | 10.2.49.53 |
| cluster-3 | k8s-52 | 10.2.49.52 |

2. Configure the network and /etc/hosts (skipped here).
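The /etc/hosts step is skipped above, so here is a minimal sketch of it. It assumes the four IP/hostname pairs from the node list in section 1. `missing_host_entries` is a hypothetical helper that prints only the entries a hosts file does not already contain.

```shell
#!/bin/sh
# IP/hostname pairs taken from the node list; adjust them for your network.
NODES="10.2.49.55 k8s-55
10.2.49.54 k8s-54
10.2.49.53 k8s-53
10.2.49.52 k8s-52"

# Hypothetical helper: print "IP<TAB>hostname" for every node whose pair is
# not yet present in the given hosts file (default /etc/hosts).
missing_host_entries() {
  hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$NODES" | while read -r ip name; do
    # awk exits 0 if some line starts with this IP and lists this hostname
    awk -v ip="$ip" -v n="$name" \
      '$1 == ip { for (i = 2; i <= NF; i++) if ($i == n) f = 1 } END { exit !f }' \
      "$hosts_file" || printf '%s\t%s\n' "$ip" "$name"
  done
}

# Usage on each node:
#   missing_host_entries | sudo tee -a /etc/hosts
```

Checking before appending keeps the step safe to re-run on a node that already has some of the entries.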

3. Install Kubernetes

    sudo apt-get update && sudo apt-get install -y apt-transport-https
    curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
    # "sudo cat <<EOF >file" fails unless the shell is already root: the
    # redirection is done by the unprivileged shell, so write through "sudo tee"
    cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
    deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
    EOF
    sudo apt-get update

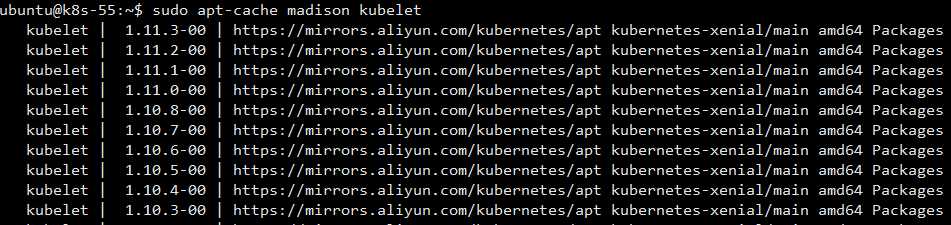

List the versions available in the repository:

    apt-cache madison kubelet

As a rule, install neither the newest nor the oldest release. This article installs 1.11.2:

    sudo apt install kubelet=1.11.2-00 kubeadm=1.11.2-00 kubectl=1.11.2-00
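Before pinning a version, it can help to confirm that the repository actually offers it. Below is a minimal sketch. It assumes `apt-cache madison` prints rows of the form `package | version | source`. `version_available` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the wanted version appears in
# `apt-cache madison` output read from stdin (2nd "|"-separated field).
version_available() {
  want="$1"
  awk -F'|' '{ gsub(/ /, "", $2); print $2 }' | grep -qxF "$want"
}

# Usage:
#   apt-cache madison kubelet | version_available 1.11.2-00 && echo "1.11.2-00 is available"
```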

At this point the Kubernetes binaries are installed.

3. Install Docker

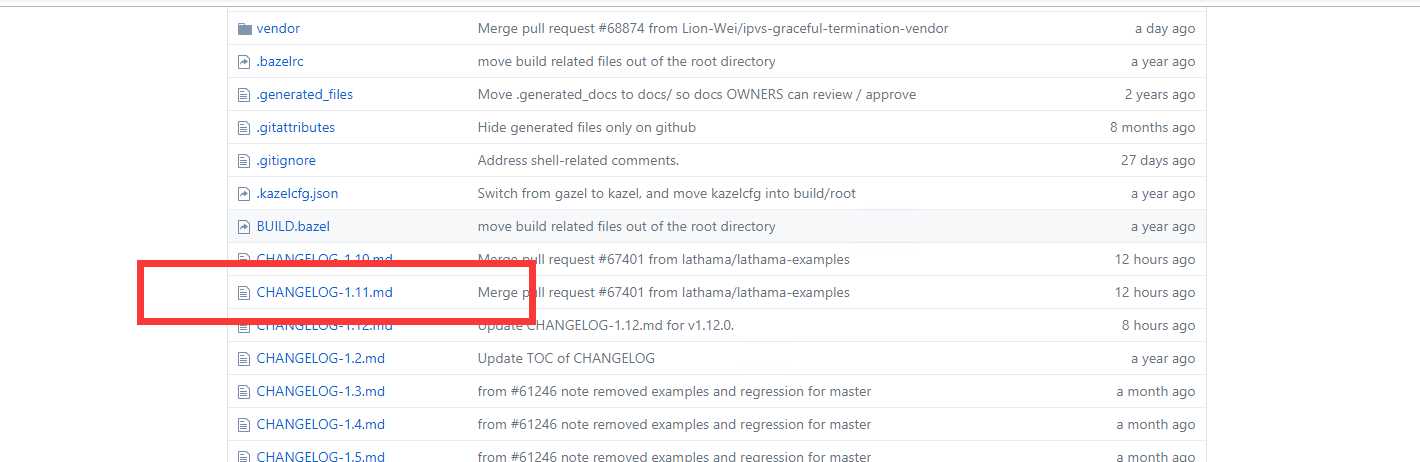

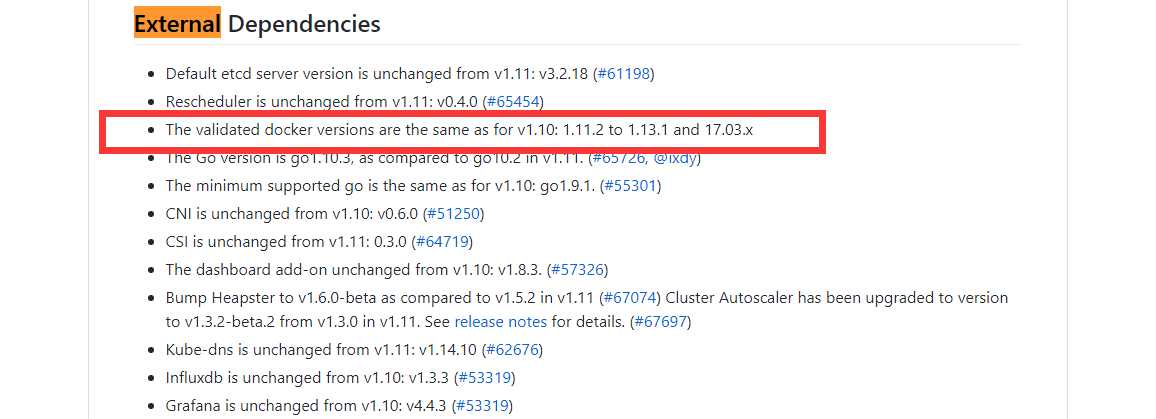

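Because Kubernetes manages Docker, the Docker version must be compatible with the Kubernetes version. The compatibility list is in the repository at https://github.com/kubernetes/kubernetes. Check the CHANGELOG for the version you installed, which in this article is 1.11.2.

This shows that the highest compatible Docker version is 17.03.x. Within that limit, install the newest Docker you can.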

Install Docker. First add the Docker CE repository (Alibaba Cloud mirror):

    sudo apt-get update
    sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common
    curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
    sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    sudo apt-get -y update

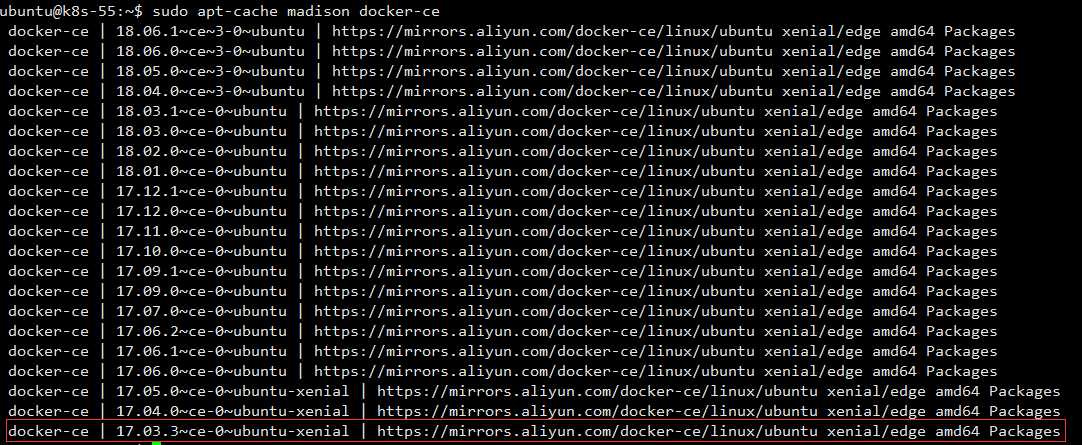

Check the available Docker versions (see the image above). Then install 17.03.3 and enable the service at boot:

    sudo apt install docker-ce=17.03.3~ce-0~ubuntu-xenial
    sudo systemctl enable docker
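To double-check that the installed Docker falls within the series the changelog validates, here is a small sketch. `docker_series_ok` is a hypothetical helper, and `17.03` is the series this article targets.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a Docker version string belongs to a series.
# $1: version, e.g. "17.03.3-ce"; $2: series, e.g. "17.03"
docker_series_ok() {
  case "$1" in
    "$2".*) return 0 ;;   # literal "17.03." prefix followed by anything
    *) return 1 ;;
  esac
}

# Usage after installation:
#   v=$(sudo docker version --format '{{.Server.Version}}')
#   docker_series_ok "$v" 17.03 || echo "Docker $v is outside the 17.03.x series"
```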

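To configure a registry mirror (accelerator), edit /etc/systemd/system/multi-user.target.wants/docker.service. Then run sudo systemctl daemon-reload and sudo systemctl restart docker.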
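That path is a symlink created by systemctl enable, so editing it changes the packaged unit file itself. A package upgrade may then overwrite the change. An alternative, not the author's method, is Docker's /etc/docker/daemon.json with the `registry-mirrors` key.

The minimal sketch below makes several assumptions:
- `write_daemon_json` is a hypothetical helper.
- The mirror URL is a placeholder for the address your provider gives you.
- The helper overwrites any existing daemon.json.

Set the mirror in only one place: dockerd refuses to start when an option is given both as a flag and in daemon.json.

```shell
#!/bin/sh
# Hypothetical helper: write a daemon.json that only sets a registry mirror.
# WARNING: overwrites the target file if it already exists.
write_daemon_json() {
  mirror="$1"
  out="${2:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$out")"
  printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror" > "$out"
}

# Usage (as root; the URL is a placeholder):
#   write_daemon_json "https://<your-mirror-address>"
#   systemctl restart docker
```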

4. Pull the images Kubernetes needs for initialization

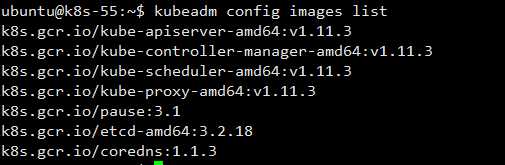

Check which images kubeadm requires:

    kubeadm config images list
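After pulling, you can compare that list against what Docker actually has locally. Below is a minimal sketch. It assumes both lists are saved to files with one `repository:tag` per line. `missing_images` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print every line of the "required" file that does not
# appear verbatim in the "present" file. Prints nothing if all are present.
missing_images() {
  required="$1"
  present="$2"
  # -F fixed strings, -x whole-line match, -v keep only non-matching lines;
  # "|| true" because grep exits 1 when nothing is missing
  grep -Fxv -f "$present" "$required" || true
}

# Usage (on a node with kubeadm and docker installed):
#   kubeadm config images list > required.txt
#   docker images --format '{{.Repository}}:{{.Tag}}' > present.txt
#   missing_images required.txt present.txt
```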

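Google's registry cannot be reached directly from mainland China. You can either use images someone else has mirrored or build your own mirror, for example through Alibaba Cloud. This article uses the mirror published by anjia0532; the script is: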

    #!/bin/bash
    # Image versions; KUBE_VERSION must match --kubernetes-version passed to kubeadm init
    KUBE_VERSION=v1.11.3
    KUBE_PAUSE_VERSION=3.1
    ETCD_VERSION=3.2.18
    DNS_VERSION=1.1.3
    # Docker Hub account that mirrors the k8s.gcr.io images
    username=anjia0532

    images="google-containers.kube-proxy-amd64:${KUBE_VERSION}
    google-containers.kube-scheduler-amd64:${KUBE_VERSION}
    google-containers.kube-controller-manager-amd64:${KUBE_VERSION}
    google-containers.kube-apiserver-amd64:${KUBE_VERSION}
    pause:${KUBE_PAUSE_VERSION}
    etcd-amd64:${ETCD_VERSION}
    coredns:${DNS_VERSION}
    "

    # Pull each image from the mirror, retag it under k8s.gcr.io, drop the mirror tag
    for image in $images
    do
        docker pull ${username}/${image}
        docker tag ${username}/${image} k8s.gcr.io/${image}
        #docker tag ${username}/${image} gcr.io/google_containers/${image}
        docker rmi ${username}/${image}
    done

    # Clean up helper variables
    unset ARCH version images username

    # The control-plane images are now tagged k8s.gcr.io/google-containers.<name>;
    # rename them to the k8s.gcr.io/<name> form that kubeadm expects
    docker tag k8s.gcr.io/google-containers.kube-apiserver-amd64:${KUBE_VERSION} k8s.gcr.io/kube-apiserver-amd64:${KUBE_VERSION}
    docker rmi k8s.gcr.io/google-containers.kube-apiserver-amd64:${KUBE_VERSION}
    docker tag k8s.gcr.io/google-containers.kube-controller-manager-amd64:${KUBE_VERSION} k8s.gcr.io/kube-controller-manager-amd64:${KUBE_VERSION}
    docker rmi k8s.gcr.io/google-containers.kube-controller-manager-amd64:${KUBE_VERSION}
    docker tag k8s.gcr.io/google-containers.kube-scheduler-amd64:${KUBE_VERSION} k8s.gcr.io/kube-scheduler-amd64:${KUBE_VERSION}
    docker rmi k8s.gcr.io/google-containers.kube-scheduler-amd64:${KUBE_VERSION}
    docker tag k8s.gcr.io/google-containers.kube-proxy-amd64:${KUBE_VERSION} k8s.gcr.io/kube-proxy-amd64:${KUBE_VERSION}
    docker rmi k8s.gcr.io/google-containers.kube-proxy-amd64:${KUBE_VERSION}

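Save the script as pull.sh and run sh pull.sh, either as root or as a user allowed to run docker. It pulls all the required images automatically.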
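The tag-then-rename steps in pull.sh can be collapsed into one pass by computing the final k8s.gcr.io name up front. Below is a sketch of that variant, not the author's script. `to_gcr_name`, `run`, `pull_and_retag` and the `DRY_RUN` switch are hypothetical names introduced here. The mirror account is the same anjia0532.

```shell
#!/bin/sh
MIRROR_USER=anjia0532

# Map a mirror image name to the name kubeadm expects:
#   google-containers.kube-proxy-amd64:v1.11.3 -> k8s.gcr.io/kube-proxy-amd64:v1.11.3
#   pause:3.1                                   -> k8s.gcr.io/pause:3.1
to_gcr_name() {
  echo "k8s.gcr.io/${1#google-containers.}"
}

# Run a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Pull each image from the mirror, tag it with its final name, drop the mirror tag
pull_and_retag() {
  for image in "$@"; do
    run docker pull "${MIRROR_USER}/${image}"
    run docker tag "${MIRROR_USER}/${image}" "$(to_gcr_name "$image")"
    run docker rmi "${MIRROR_USER}/${image}"
  done
}

# Usage:
#   DRY_RUN=1 pull_and_retag pause:3.1 coredns:1.1.3    # only print the commands
#   pull_and_retag google-containers.kube-apiserver-amd64:v1.11.3 pause:3.1
```

A dry run is a cheap way to review the exact names before touching the local image store.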

5. Initialize Kubernetes

    sudo kubeadm init --kubernetes-version=v1.11.3 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address 10.2.49.55

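--kubernetes-version specifies the Kubernetes version to deploy.

--pod-network-cidr sets the Pod network range. It is set here because flannel will be used as the network add-on later, and 10.244.0.0/16 matches the Network value in flannel's kube-flannel.yml.

--apiserver-advertise-address sets the address the API server advertises. It can be omitted if the machine has only one network interface.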
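On a multi-NIC master it is worth confirming that the advertise address is really configured locally before running init. Below is a minimal sketch. It assumes `ip -4 -o addr show` output, where the fourth field is `ADDR/PREFIX`. `has_local_ipv4` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given IPv4 address appears in
# `ip -4 -o addr show` output read from stdin.
has_local_ipv4() {
  want="$1"
  # field 4 looks like "10.2.49.55/24"; strip the prefix length
  awk '{ split($4, a, "/"); print a[1] }' | grep -qxF "$want"
}

# Usage on the master:
#   ip -4 -o addr show | has_local_ipv4 10.2.49.55 && echo "address is local"
```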

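Output of a successful initialization. The log below uses placeholders such as vX.Y.Z, <token> and <hash>; your own output contains the real values.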

    [init] Using Kubernetes version: vX.Y.Z
    [preflight] Running pre-flight checks
    [kubeadm] WARNING: starting in 1.8, tokens expire after 24 hours by default (if you require a non-expiring token use --token-ttl 0)
    [certificates] Generated ca certificate and key.
    [certificates] Generated apiserver certificate and key.
    [certificates] apiserver serving cert is signed for DNS names [kubeadm-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.138.0.4]
    [certificates] Generated apiserver-kubelet-client certificate and key.
    [certificates] Generated sa key and public key.
    [certificates] Generated front-proxy-ca certificate and key.
    [certificates] Generated front-proxy-client certificate and key.
    [certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"
    [kubeconfig] Wrote KubeConfig file to disk: "admin.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "kubelet.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "controller-manager.conf"
    [kubeconfig] Wrote KubeConfig file to disk: "scheduler.conf"
    [controlplane] Wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
    [controlplane] Wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
    [controlplane] Wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
    [etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"
    [init] Waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
    [init] This often takes around a minute; or longer if the control plane images have to be pulled.
    [apiclient] All control plane components are healthy after 39.511972 seconds
    [uploadconfig] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [markmaster] Will mark node master as master by adding a label and a taint
    [markmaster] Master master tainted and labelled with key/value: node-role.kubernetes.io/master=""
    [bootstraptoken] Using token: <token>
    [bootstraptoken] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstraptoken] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstraptoken] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes master has initialized successfully!

    To start using your cluster, you need to run (as a regular user):

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the addon options listed at:
      http://kubernetes.io/docs/admin/addons/

    You can now join any number of machines by running the following on each node
    as root:

      kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>

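As the log instructs, run the following as a regular user so that kubectl can use the cluster's admin credentials:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config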

6. Install the flannel network add-on

    wget https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml
    kubectl apply -f kube-flannel.yml

kube-flannel.yml uses the image quay.io/coreos/flannel:v0.10.0-amd64.

If this step keeps hanging, you can pull that image yourself.
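Before applying the manifest, you can list the images it references (to pull them by hand) and check that its pod network matches the --pod-network-cidr given to kubeadm init. Below is a minimal sketch. It assumes the manifest has plain `image:` lines and a `"Network": "..."` entry in net-conf.json. `manifest_images` and `flannel_network` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helper: list the distinct images referenced by a manifest.
manifest_images() {
  # accept both "image: x" and "- image: x" lines
  sed -n 's/^[[:space:]-]*image:[[:space:]]*//p' "$1" | sort -u
}

# Hypothetical helper: print the "Network" value from flannel's net-conf.json.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1"
}

# Usage:
#   for img in $(manifest_images kube-flannel.yml); do docker pull "$img"; done
#   [ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] || echo "pod CIDR mismatch"
```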

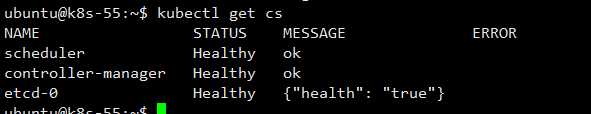

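Once the add-on is installed, the result normally looks like the image above. If there are errors, the usual cause is that some images failed to download.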

7. Set up the worker (client) nodes

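Load the IPVS kernel modules:

    # -a loads every module named; without it, modprobe treats the extra
    # names as parameters for ip_vs and loads only ip_vs
    sudo modprobe -a ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh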
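To verify the modules actually loaded, you can compare `lsmod` output against the list. Below is a minimal sketch. It assumes `lsmod`'s layout, where the first line is a header and the first column is the module name. `missing_modules` is a hypothetical helper name.

```shell
#!/bin/sh
IPVS_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh"

# Hypothetical helper: print each IPVS module that is absent from `lsmod`
# output read on stdin.
missing_modules() {
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in $IPVS_MODULES; do
    printf '%s\n' "$loaded" | grep -qxF "$m" || echo "$m"
  done
}

# Usage:
#   lsmod | missing_modules
# modprobe does not persist across reboots; systemd loads the modules listed
# (one per line) in /etc/modules-load.d/*.conf at boot, e.g.:
#   printf '%s\n' $IPVS_MODULES | sudo tee /etc/modules-load.d/ipvs.conf
```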

Join the Kubernetes cluster by running the join command, including the token, that kubeadm init printed at the end of its output:

    sudo kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>
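The <token> and <hash> values must come from your own init output. If the hash has been lost, it can be recomputed from the cluster CA certificate on the master. Below is a minimal sketch based on the openssl pipeline shown in the kubeadm documentation. It assumes an RSA CA key, and `ca_cert_hash` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the SHA-256 of a CA certificate's public key
# (DER-encoded), the value used after "sha256:" in kubeadm join.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'    # keep only the hex digest after "...= "
}

# Usage on the master:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
# The token itself expires after 24 hours by default (see the init log);
# `kubeadm token create --print-join-command` on the master prints a fresh join command.
```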

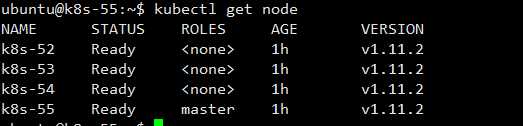

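On the master node, run kubectl get node.

A node in the Ready state is healthy. If a node is not Ready, the usual cause is that some images failed to download. The pause image is often the hardest to pull; you can fetch it with the pull.sh approach described earlier.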
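With several workers, it is convenient to print only the nodes that need attention. Below is a minimal sketch. It assumes the default `kubectl get nodes` columns (NAME STATUS ...) with a header line. `not_ready_nodes` is a hypothetical helper name. A status such as `Ready,SchedulingDisabled` is also reported, because it is not exactly `Ready`.

```shell
#!/bin/sh
# Hypothetical helper: print names of nodes whose STATUS is not exactly "Ready".
# Reads `kubectl get nodes` output on stdin; the first line is the header.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get nodes | not_ready_nodes
```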

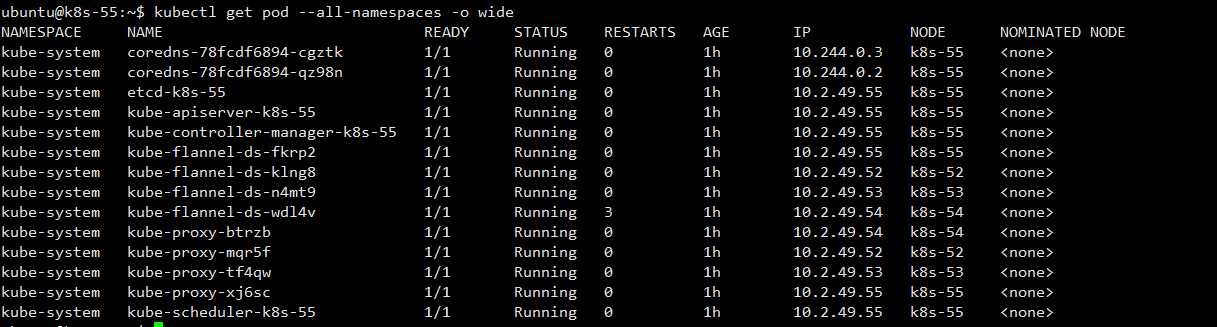

On the master node, run kubectl get pod --all-namespaces -o wide.

As the image above shows, the cluster has been created and is running normally.
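The same check can be applied to pods: anything not Running with all containers ready is worth a closer look, and often means an image pull failed. Below is a minimal sketch. It assumes the `--all-namespaces` column order NAMESPACE NAME READY STATUS and skips pods in `Completed` state. `unhealthy_pods` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print "namespace/name ready status" for pods that are
# not Running or whose READY count is incomplete (e.g. 0/1).
unhealthy_pods() {
  awk 'NR > 1 {
         split($3, r, "/")    # READY column is "ready/total"
         if ($4 != "Completed" && ($4 != "Running" || r[1] != r[2]))
           print $1 "/" $2 " " $3 " " $4
       }'
}

# Usage on the master:
#   kubectl get pod --all-namespaces -o wide | unhealthy_pods
```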

Creating CA certificates and related topics will be covered in a later post.

Setting up a Kubernetes v1.11.2 cluster on Ubuntu 16.04


Original article: https://www.cnblogs.com/sumoning/p/9718854.html