
I. Introduction

Compared with the traditional master-slave architecture, a replica set fits more use cases and adds automatic failover.

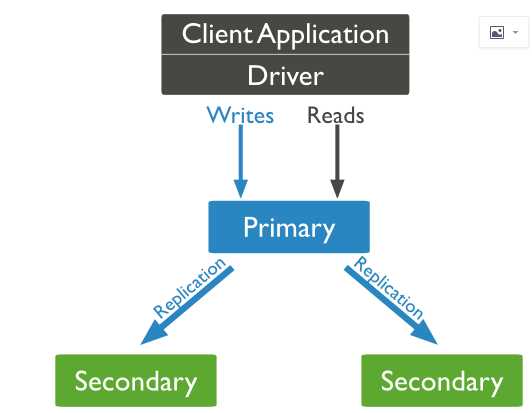

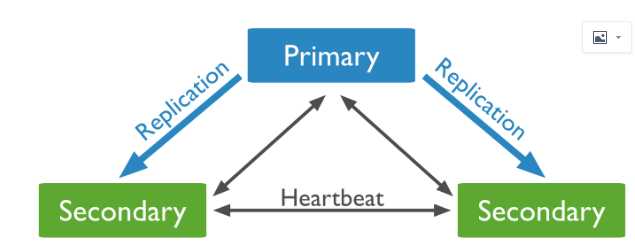

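II. Replica set architecture (reference: https://www.linuxidc.com/Linux/2017-03/142379.htm)

The structure is similar to a MySQL setup with one master, two slaves, and mha_manager.

Replication works by replaying operations recorded in the oplog.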

III. Replica set member roles


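Data-bearing members are either Primary or Secondary (Secondaries).

The Primary handles all write requests and records them in the oplog (operation log).

Each Secondary copies the oplog and applies those writes to its own data set (somewhat like MySQL replication).

Every data-bearing member can serve reads, but reading from a Secondary has to be enabled explicitly.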
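To make the oplog flow concrete, here is a toy model in plain JavaScript. It is only a sketch of the idea described above: `ToyPrimary` and `ToySecondary` are hypothetical names, and nothing here reflects how mongod is actually implemented.

```javascript
// Toy model of oplog-based replication (illustrative only; not MongoDB internals).
class ToyPrimary {
  constructor() {
    this.data = new Map(); // the primary's own data set
    this.oplog = [];       // ordered log of the writes it has applied
  }

  // Apply a write locally, then record it in the oplog.
  insert(id, doc) {
    this.data.set(id, doc);
    this.oplog.push({ op: "i", id, doc });
  }
}

class ToySecondary {
  constructor() {
    this.data = new Map();
    this.applied = 0; // number of oplog entries already replayed
  }

  // Copy the oplog entries not seen yet and replay them in order.
  syncFrom(primary) {
    for (const entry of primary.oplog.slice(this.applied)) {
      if (entry.op === "i") this.data.set(entry.id, entry.doc);
      this.applied += 1;
    }
  }
}

const primary = new ToyPrimary();
const secondary = new ToySecondary();
primary.insert(1, { id: 1 });
primary.insert(2, { id: 2 });
secondary.syncFrom(primary);
console.log(secondary.data.size, secondary.applied);
```

Because the secondary remembers how many entries it has replayed, calling `syncFrom` again only applies writes made since the previous call.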

The recommended minimal replica set has three members:

1 Primary + 2 Secondaries

or

1 Primary + 1 Secondary + 1 Arbiter

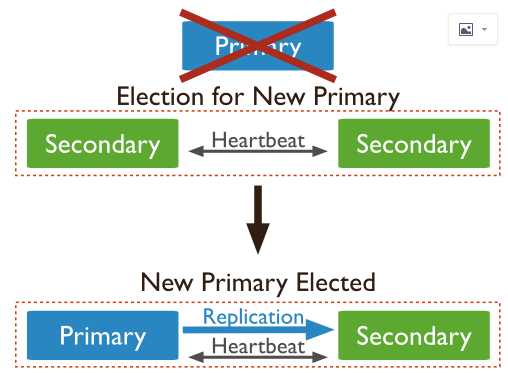

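IV. Arbiter and elections

An Arbiter can be part of a replica set, but it holds no copy of the data, so it can never become Primary.

When the Primary becomes unavailable, the Arbiter takes part in the election only as a voter.

If there is no Arbiter and the Primary goes down, the two remaining Secondaries still hold an election between themselves.

With 1 Primary + 2 Secondaries, failover works as follows: when the Primary becomes unavailable, the two Secondaries hold an election and one of them becomes the new Primary.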
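An election succeeds only when a candidate collects votes from a majority of the voting members, which is why three members is the practical minimum. The arithmetic can be sketched as follows; `majority` and `faultTolerance` are hypothetical helper names used only for illustration.

```javascript
// Votes needed to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voting members that can be down while a majority still exists.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [2, 3, 4, 5]) {
  console.log(`${n} members: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

Going from three to four members raises the majority from 2 to 3 but still tolerates only one failure, so an even member count adds no extra availability.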

V. Setup

1. Test environment

192.168.1.34(Primary)

192.168.1.35(Secondary1)

192.168.1.36(Secondary2)

192.168.1.37(Arbiter)

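2. Install and start MongoDB

Install MongoDB on the three data-bearing machines, and stop the firewall first:

    service iptables stop

Create the data directory on each of the three machines and start mongod:

    # mkdir -p /data/mongo_replset

    # ./mongod --dbpath=/data/mongo_replset --logpath=/data/mongo_replset/mongo.log --fork --replSet=first_replset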

3. Configure the replica set

Log in to the mongo shell on 192.168.1.34 (Primary):

    # ./mongo
    > use admin
    switched to db admin

Enter:

    > cnf = {_id:"first_replset", members:[
    ... {_id:1, host:"192.168.1.34:27017"},
    ... {_id:2, host:"192.168.1.35:27017"},
    ... {_id:3, host:"192.168.1.36:27017"},
    ... ]
    ... }

Output:

    {
        "_id" : "first_replset",
        "members" : [
            {
                "_id" : 1,
                "host" : "192.168.1.34:27017"
            },
            {
                "_id" : 2,
                "host" : "192.168.1.35:27017"
            },
            {
                "_id" : 3,
                "host" : "192.168.1.36:27017"
            }
        ]
    }
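The config document is a plain JavaScript object, so it can also be generated from a host list instead of typed by hand. The sketch below assumes a hypothetical helper named `buildReplSetConfig`; it is not part of the mongo shell.

```javascript
// Build a replica set config document from a set name and a list of hosts.
// buildReplSetConfig is a hypothetical helper, not a mongo shell function.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName,
    // Member _id values start at 1, matching the hand-written config.
    members: hosts.map((host, i) => ({ _id: i + 1, host })),
  };
}

const cnf = buildReplSetConfig("first_replset", [
  "192.168.1.34:27017",
  "192.168.1.35:27017",
  "192.168.1.36:27017",
]);
console.log(JSON.stringify(cnf, null, 2));
```

Defined inside the mongo shell, the resulting `cnf` object has the same shape as the one entered manually and could be passed to `rs.initiate(cnf)` in the same way.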

Initialize the replica set with this configuration:

    > rs.initiate(cnf);
    { "ok" : 1 }
    first_replset:OTHER>
    first_replset:PRIMARY>
    first_replset:PRIMARY>

The prompt shows that this mongod instance has become PRIMARY.

Check the state of each member of the replica set:

    first_replset:PRIMARY> rs.status();
    {
        "set" : "first_replset",
        "date" : ISODate("2018-01-03T06:37:10.559Z"),
        "myState" : 1,
        "members" : [
            {
                "_id" : 1,
                "name" : "192.168.1.34:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 599,
                "optime" : Timestamp(1514961357, 1),
                "optimeDate" : ISODate("2018-01-03T06:35:57Z"),
                "electionTime" : Timestamp(1514961361, 1),
                "electionDate" : ISODate("2018-01-03T06:36:01Z"),
                "configVersion" : 1,
                "self" : true
            },
            {
                "_id" : 2,
                "name" : "192.168.1.35:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 73,
                "optime" : Timestamp(1514961357, 1),
                "optimeDate" : ISODate("2018-01-03T06:35:57Z"),
                "lastHeartbeat" : ISODate("2018-01-03T06:37:09.308Z"),
                "lastHeartbeatRecv" : ISODate("2018-01-03T06:37:09.316Z"),
                "pingMs" : 1,
                "lastHeartbeatMessage" : "could not find member to sync from",
                "configVersion" : 1
            },
            {
                "_id" : 3,
                "name" : "192.168.1.36:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 73,
                "optime" : Timestamp(1514961357, 1),
                "optimeDate" : ISODate("2018-01-03T06:35:57Z"),
                "lastHeartbeat" : ISODate("2018-01-03T06:37:09.306Z"),
                "lastHeartbeatRecv" : ISODate("2018-01-03T06:37:09.313Z"),
                "pingMs" : 1,
                "lastHeartbeatMessage" : "could not find member to sync from",
                "configVersion" : 1
            }
        ],
        "ok" : 1
    }
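The status document is long, and usually only a few fields matter: who is PRIMARY and whether every member is healthy. A minimal sketch of pulling those out is shown below. `summarizeStatus` is a hypothetical helper, and `sample` is a hand-trimmed copy of the output above that keeps only the fields the helper reads.

```javascript
// Reduce an rs.status()-shaped document to the fields checked most often.
// summarizeStatus is a hypothetical helper, not a mongo shell function.
function summarizeStatus(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  return {
    set: status.set,
    primary: primary ? primary.name : null,
    unhealthy: status.members.filter((m) => m.health !== 1).map((m) => m.name),
    states: status.members.map((m) => `${m.name} ${m.stateStr}`),
  };
}

// Trimmed copy of the status output shown above.
const sample = {
  set: "first_replset",
  members: [
    { name: "192.168.1.34:27017", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.1.35:27017", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.1.36:27017", health: 1, stateStr: "SECONDARY" },
  ],
};
console.log(summarizeStatus(sample));
```

In the mongo shell the same function could be called as `summarizeStatus(rs.status())`, since the helper only reads `set`, `name`, `health`, and `stateStr`.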

4. Testing replication

Insert a document on the PRIMARY:

    # ./mongo 127.0.0.1/test
    first_replset:PRIMARY> db.test_table.insert({"id": 1})
    WriteResult({ "nInserted" : 1 })
    first_replset:PRIMARY> db.test_table.find()
    { "_id" : ObjectId("5a4c7b44ecb269292f0de224"), "id" : 1 }

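Query it on either SECONDARY (35 or 36):

As in a master-slave setup, a SECONDARY is not readable by default; run rs.slaveOk() first. (Newer shell versions rename this helper to rs.secondaryOk().)

    first_replset:SECONDARY> rs.slaveOk()
    first_replset:SECONDARY> db.test_table.find()
    { "_id" : ObjectId("5a4c7b44ecb269292f0de224"), "id" : 1 }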
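rs.slaveOk() only affects the current shell session. Applications that connect through a driver usually express the same intent in the connection string, using the standard `replicaSet` and `readPreference` URI options. The sketch below just assembles such a string; `buildReplicaSetUri` is a hypothetical helper, and the choice of `secondaryPreferred` is an assumption made for illustration.

```javascript
// Read preference modes accepted in a MongoDB connection string.
const READ_PREFERENCES = [
  "primary",
  "primaryPreferred",
  "secondary",
  "secondaryPreferred",
  "nearest",
];

// Assemble a replica set connection string.
// buildReplicaSetUri is a hypothetical helper, not a driver API.
function buildReplicaSetUri(hosts, db, setName, readPreference) {
  if (!READ_PREFERENCES.includes(readPreference)) {
    throw new Error(`unknown read preference: ${readPreference}`);
  }
  const query = `replicaSet=${encodeURIComponent(setName)}&readPreference=${readPreference}`;
  return `mongodb://${hosts.join(",")}/${db}?${query}`;
}

const uri = buildReplicaSetUri(
  ["192.168.1.34:27017", "192.168.1.35:27017", "192.168.1.36:27017"],
  "test",
  "first_replset",
  "secondaryPreferred"
);
console.log(uri);
```

Listing all three hosts lets a client find the current PRIMARY even after a failover changes which machine holds that role.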

5. Failover test

Current state:

192.168.1.34(Primary)

192.168.1.35(Secondary)

192.168.1.36(Secondary)

Shut down the PRIMARY:

    first_replset:PRIMARY> use admin
    switched to db admin
    first_replset:PRIMARY> db.shutdownServer()
    2018-01-03T14:46:14.048+0800 I NETWORK DBClientCursor::init call() failed
    server should be down...
    2018-01-03T14:46:14.052+0800 I NETWORK trying reconnect to 127.0.0.1:27017 (127.0.0.1) failed
    2018-01-03T14:46:14.052+0800 I NETWORK reconnect 127.0.0.1:27017 (127.0.0.1) ok
    2018-01-03T14:46:14.053+0800 I NETWORK DBClientCursor::init call() failed
    2018-01-03T14:46:14.056+0800 I NETWORK trying reconnect to 127.0.0.1:27017 (127.0.0.1) failed
    2018-01-03T14:46:14.056+0800 I NETWORK reconnect 127.0.0.1:27017 (127.0.0.1) ok
    2018-01-03T14:46:14.397+0800 I NETWORK Socket recv() errno:104 Connection reset by peer 127.0.0.1:27017
    2018-01-03T14:46:14.397+0800 I NETWORK SocketException: remote: 127.0.0.1:27017 error: 9001 socket exception [RECV_ERROR] server [127.0.0.1:27017]
    2018-01-03T14:46:14.397+0800 I NETWORK DBClientCursor::init call() failed

On 35 (formerly a SECONDARY), the prompt and status show that 35 is now the PRIMARY:

    first_replset:SECONDARY>
    first_replset:PRIMARY> rs.status()
    {
        "set" : "first_replset",
        "date" : ISODate("2018-01-03T06:47:14.289Z"),
        "myState" : 1,
        "members" : [
            {
                "_id" : 1,
                "name" : "192.168.1.34:27017",
                "health" : 0,
                "state" : 8,
                "stateStr" : "(not reachable/healthy)",
                "uptime" : 0,
                "optime" : Timestamp(0, 0),
                "optimeDate" : ISODate("1970-01-01T00:00:00Z"),
                "lastHeartbeat" : ISODate("2018-01-03T06:47:14.034Z"),
                "lastHeartbeatRecv" : ISODate("2018-01-03T06:46:13.934Z"),
                "pingMs" : 0,
                "lastHeartbeatMessage" : "Failed attempt to connect to 192.168.1.34:27017; couldn't connect to server 192.168.1.34:27017 (192.168.1.34), connection attempt failed",
                "configVersion" : -1
            },
            {
                "_id" : 2,
                "name" : "192.168.1.35:27017",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 1180,
                "optime" : Timestamp(1514961732, 2),
                "optimeDate" : ISODate("2018-01-03T06:42:12Z"),
                "electionTime" : Timestamp(1514961976, 1),
                "electionDate" : ISODate("2018-01-03T06:46:16Z"),
                "configVersion" : 1,
                "self" : true
            },
            {
                "_id" : 3,
                "name" : "192.168.1.36:27017",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 676,
                "optime" : Timestamp(1514961732, 2),
                "optimeDate" : ISODate("2018-01-03T06:42:12Z"),
                "lastHeartbeat" : ISODate("2018-01-03T06:47:13.997Z"),
                "lastHeartbeatRecv" : ISODate("2018-01-03T06:47:13.995Z"),
                "pingMs" : 0,
                "configVersion" : 1
            }
        ],
        "ok" : 1
    }

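6. Add an Arbiter member to the replica set

This step is for practice only. In a real deployment, adding an Arbiter to a Secondary-Primary-Secondary set is not recommended, because it makes the member count even.

Start a mongod instance on 192.168.1.37:

    # mkdir -p /data/arb

    # ./mongod --dbpath=/data/arb/ --logpath=/data/arb/mongo.log --fork --replSet=first_replset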

On the PRIMARY elected after the failover, add the Arbiter (only the new member's entry of the status output is shown):

    first_replset:PRIMARY> rs.addArb("192.168.1.37:27017")
    { "ok" : 1 }
    first_replset:PRIMARY> rs.status()
            {
                "_id" : 4,
                "name" : "192.168.1.37:27017",
                "health" : 1,
                "state" : 7,
                "stateStr" : "ARBITER",
                "uptime" : 82,
                "lastHeartbeat" : ISODate("2018-01-03T07:16:14.872Z"),
                "lastHeartbeatRecv" : ISODate("2018-01-03T07:16:15.497Z"),
                "pingMs" : 1,
                "configVersion" : 2
            }
        ],
        "ok" : 1
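In terms of the config document, adding an arbiter amounts to appending a member flagged `arbiterOnly: true` and bumping the config version, which matches the `"_id" : 4` and `"configVersion" : 2` seen above. The sketch below shows that transformation on a plain object; `addArbiter` is a hypothetical helper, and the starting `version: 1` is an assumption based on the earlier status output.

```javascript
// Return a new config document with an arbiter member appended.
// addArbiter is a hypothetical helper; it only illustrates the config change.
function addArbiter(config, host) {
  const nextId = Math.max(...config.members.map((m) => m._id)) + 1;
  return {
    ...config,
    version: (config.version || 1) + 1, // every reconfiguration bumps the version
    members: [...config.members, { _id: nextId, host, arbiterOnly: true }],
  };
}

const before = {
  _id: "first_replset",
  version: 1, // assumed from "configVersion" : 1 in the earlier status output
  members: [
    { _id: 1, host: "192.168.1.34:27017" },
    { _id: 2, host: "192.168.1.35:27017" },
    { _id: 3, host: "192.168.1.36:27017" },
  ],
};
const after = addArbiter(before, "192.168.1.37:27017");
console.log(JSON.stringify(after.members[3]), after.version);
```

The helper returns a new object and leaves `before` untouched, so the old and new configurations can be compared side by side.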

Back in the arbiter's mongo shell, we can see that, as the documentation says, an arbiter holds none of the replica set's data:

    first_replset:ARBITER> rs.slaveOk()
    first_replset:ARBITER> use test;
    switched to db test
    first_replset:ARBITER> show tables;
    first_replset:ARBITER> db.test_table.find();
    Error: error: { "$err" : "not master or secondary; cannot currently read from this replSet member", "code" : 13436 }


Original article: https://www.cnblogs.com/guantou1992/p/9729616.html