Tags: tech-sharing news fork worker return tgid sys real exit

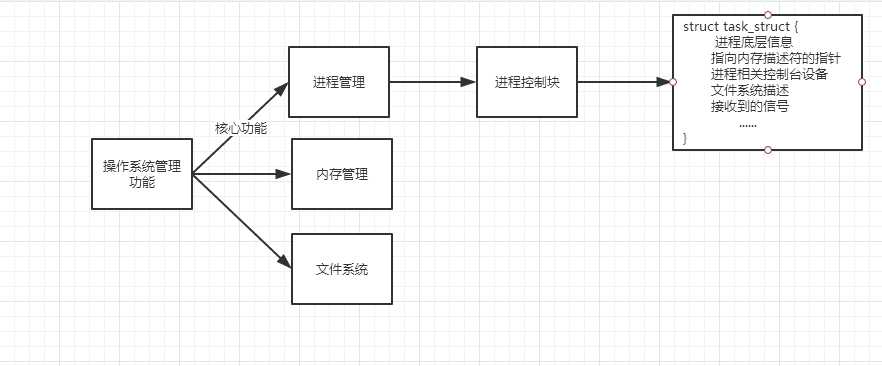

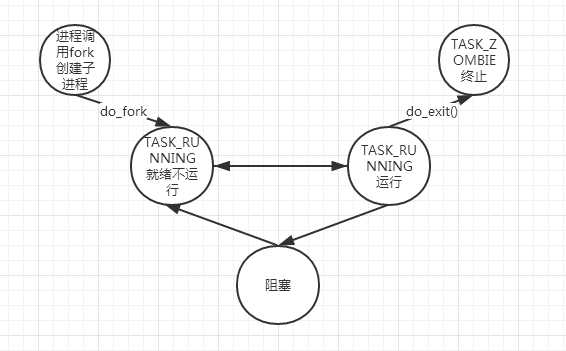

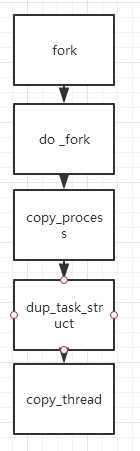

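fork creates a new process. After a successful call there are two processes: the original parent and a newly created child. The child is a copy of the parent. It receives a copy of the parent's entire address space, and only a few attributes are its own, such as its process ID, its pending signals and some timers and resource-usage counters. On Linux the memory is not physically copied up front: the page tables are duplicated and the pages are shared copy-on-write until one of the processes writes to them. Even so, fork has to duplicate a good deal of kernel state (the task_struct, page tables, file descriptor table and so on), which makes process creation relatively expensive. fork is called once but returns twice: in the parent it returns the PID of the new child, and in the child it returns 0. The process-creation entry points in kernel/fork.c are shown below.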
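A minimal userspace sketch of the two return values and of the child's private copy of memory, assuming Linux with glibc; `fork_demo` and `counter` are illustrative names, not part of any API. The child adds 41 to its copy of a global and exits with the result, while the parent waits for it and then reads its own, untouched copy.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Illustrative global: after fork both processes see it, copy-on-write. */
static int counter = 1;

/*
 * Forks once. In the child fork() returns 0; the child modifies its own
 * copy of `counter` and exits with it. In the parent fork() returns the
 * child's PID; the parent reaps the child, stores the child's exit status
 * in *child_status and returns its own value of `counter` (or -1 on error).
 */
int fork_demo(int *child_status)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;                  /* fork failed, errno is set */
    if (pid == 0) {
        counter += 41;              /* the write gives the child a private page */
        _exit(counter);             /* exit status 42; _exit skips stdio flushing */
    }
    int status;                     /* parent: pid > 0 is the child's PID */
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    *child_status = WEXITSTATUS(status);
    return counter;                 /* still 1: the child's write was private */
}
```

With this sketch, `fork_demo(&st)` is expected to return 1 and set `st` to 42.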

```c
/*
 * Create a kernel thread.
 */
pid_t kernel_thread(int (*fn)(void *), void *arg, unsigned long flags)
{
	return do_fork(flags|CLONE_VM|CLONE_UNTRACED, (unsigned long)fn,
		(unsigned long)arg, NULL, NULL);
}

#ifdef __ARCH_WANT_SYS_FORK
SYSCALL_DEFINE0(fork)
{
#ifdef CONFIG_MMU
	return do_fork(SIGCHLD, 0, 0, NULL, NULL);
#else
	/* can not support in nommu mode */
	return -EINVAL;
#endif
}
#endif

#ifdef __ARCH_WANT_SYS_VFORK
SYSCALL_DEFINE0(vfork)
{
	return do_fork(CLONE_VFORK | CLONE_VM | SIGCHLD, 0,
			0, NULL, NULL);
}
#endif

#ifdef __ARCH_WANT_SYS_CLONE
#ifdef CONFIG_CLONE_BACKWARDS
SYSCALL_DEFINE5(clone, unsigned long, clone_flags, unsigned long, newsp,
		 int __user *, parent_tidptr,
		 int, tls_val,
		 int __user *, child_tidptr)
#elif defined(CONFIG_CLONE_BACKWARDS2)
SYSCALL_DEFINE5(clone, unsigned long, newsp, unsigned long, clone_flags,
		 int __user *, parent_tidptr,
		 int __user *, child_tidptr,
		 int, tls_val)
#elif defined(CONFIG_CLONE_BACKWARDS3)
SYSCALL_DEFINE6(clone, unsigned long, clone_flags, unsigned long, newsp,
		int, stack_size,
		int __user *, parent_tidptr,
		int __user *, child_tidptr,
		int, tls_val)
#else
SYSCALL_DEFINE5(clone, unsigned long, clone_flags, unsigned long, newsp,
		 int __user *, parent_tidptr,
		 int __user *, child_tidptr,
		 int, tls_val)
#endif
{
	return do_fork(clone_flags, newsp, 0, parent_tidptr, child_tidptr);
}
#endif
```

The several clone definitions differ only in the order (and, for CONFIG_CLONE_BACKWARDS3, the number) of their parameters, which each architecture selects. All three system calls (fork, vfork and clone), as well as kernel_thread, are thin wrappers that differ only in the flags and arguments they pass, and all of them end up calling do_fork. The analysis therefore continues with the source of do_fork.
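Since sys_fork is just do_fork(SIGCHLD, 0, 0, NULL, NULL), a raw clone system call with clone_flags set to SIGCHLD and no new stack should behave exactly like fork. The sketch below checks this from user space through syscall(2). It assumes Linux with glibc and an architecture where clone's first two arguments are clone_flags and newsp (the default layout and CONFIG_CLONE_BACKWARDS, e.g. x86-64 and 32-bit x86, but not CONFIG_CLONE_BACKWARDS2); `raw_clone_like_fork` is a hypothetical helper name.

```c
#define _GNU_SOURCE
#include <signal.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Calls clone(SIGCHLD, 0, NULL, NULL, 0) directly, i.e. the same flags that
 * sys_fork hands to do_fork. ASSUMPTION: clone_flags is the first argument
 * and newsp the second on this architecture; the remaining arguments are
 * all zero, so their order does not matter here.
 * Returns the child's exit status as seen by the parent, or -1 on error.
 */
int raw_clone_like_fork(void)
{
    long ret = syscall(SYS_clone, (unsigned long)SIGCHLD, 0UL, NULL, NULL, 0UL);
    if (ret < 0)
        return -1;
    if (ret == 0)
        _exit(7);               /* child: clone returned 0, just as fork does */
    int status;                 /* parent: ret is the child's PID */
    if (waitpid((pid_t)ret, &status, 0) != (pid_t)ret || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

Because the exit signal is SIGCHLD, the parent can reap the child with an ordinary waitpid, which is what makes this child indistinguishable from a forked one.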

```c
long do_fork(unsigned long clone_flags,
	      unsigned long stack_start,
	      unsigned long stack_size,
	      int __user *parent_tidptr,
	      int __user *child_tidptr)
{
	struct task_struct *p;
	int trace = 0;
	long nr;

	/*
	 * Determine whether and which event to report to ptracer. When
	 * called from kernel_thread or CLONE_UNTRACED is explicitly
	 * requested, no event is reported; otherwise, report if the event
	 * for the type of forking is enabled.
	 */
	if (!(clone_flags & CLONE_UNTRACED)) {
		if (clone_flags & CLONE_VFORK)
			trace = PTRACE_EVENT_VFORK;
		else if ((clone_flags & CSIGNAL) != SIGCHLD)
			trace = PTRACE_EVENT_CLONE;
		else
			trace = PTRACE_EVENT_FORK;

		if (likely(!ptrace_event_enabled(current, trace)))
			trace = 0;
	}

	p = copy_process(clone_flags, stack_start, stack_size,
			 child_tidptr, NULL, trace);
	/*
	 * Do this prior waking up the new thread - the thread pointer
	 * might get invalid after that point, if the thread exits quickly.
	 */
	if (!IS_ERR(p)) {
		struct completion vfork;
		struct pid *pid;

		trace_sched_process_fork(current, p);

		pid = get_task_pid(p, PIDTYPE_PID);
		nr = pid_vnr(pid);

		if (clone_flags & CLONE_PARENT_SETTID)
			put_user(nr, parent_tidptr);

		if (clone_flags & CLONE_VFORK) {
			p->vfork_done = &vfork;
			init_completion(&vfork);
			get_task_struct(p);
		}

		wake_up_new_task(p);

		/* forking complete and child started to run, tell ptracer */
		if (unlikely(trace))
			ptrace_event_pid(trace, pid);

		if (clone_flags & CLONE_VFORK) {
			if (!wait_for_vfork_done(p, &vfork))
				ptrace_event_pid(PTRACE_EVENT_VFORK_DONE, pid);
		}

		put_pid(pid);
	} else {
		nr = PTR_ERR(p);
	}
	return nr;
}
```

The core of do_fork is the call to copy_process, which creates the child as a copy of the parent. If that succeeds, do_fork looks up the child's PID as seen from the caller's PID namespace (pid_vnr), stores it at parent_tidptr when CLONE_PARENT_SETTID is set, wakes the child with wake_up_new_task and, for CLONE_VFORK, blocks in wait_for_vfork_done until the child execs or exits. The PID it returns becomes the parent's return value from fork. The source of copy_process follows.
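The CLONE_VFORK path can be observed from user space. The sketch below passes the same flags as sys_vfork (CLONE_VFORK | CLONE_VM | SIGCHLD) through glibc's clone(3) wrapper, but gives the child its own malloc'd stack so it may legitimately write to memory, which under CLONE_VM is the parent's memory. It assumes Linux, glibc and a downward-growing stack (x86, arm64); `vfork_like_demo`, `child_fn` and `shared_value` are illustrative names.

```c
#define _GNU_SOURCE
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>

#define CHILD_STACK_SIZE (64 * 1024)

/* Written by the child; with CLONE_VM the parent reads the same memory. */
static volatile int shared_value = 0;

static int child_fn(void *arg)
{
    shared_value = *(int *)arg;   /* lands in the parent's address space */
    return 0;                     /* exiting releases the waiting parent */
}

/*
 * Because of CLONE_VFORK, clone() does not return in the parent until the
 * child has released the mm (here: by exiting), mirroring do_fork's
 * wait_for_vfork_done(). Returns the value the parent then observes in
 * shared_value, or -1 on error.
 */
int vfork_like_demo(int value)
{
    char *stack = malloc(CHILD_STACK_SIZE);
    if (stack == NULL)
        return -1;
    /* ASSUMPTION: the stack grows downwards, so pass the top of the block. */
    pid_t pid = clone(child_fn, stack + CHILD_STACK_SIZE,
                      CLONE_VM | CLONE_VFORK | SIGCHLD, &value);
    if (pid < 0) {
        free(stack);
        return -1;
    }
    int observed = shared_value;  /* the child has already written it */
    waitpid(pid, NULL, 0);        /* reap the child (exit signal is SIGCHLD) */
    free(stack);
    return observed;
}
```

`vfork_like_demo(5)` is expected to return 5. With the real vfork() the child should only call execve or _exit, because it runs on the parent's stack; giving the child a separate stack through clone() is what makes the write above well defined.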

```c
static struct task_struct *copy_process(unsigned long clone_flags,
					unsigned long stack_start,
					unsigned long stack_size,
					int __user *child_tidptr,
					struct pid *pid,
					int trace)
{
	int retval;
	struct task_struct *p;

	if ((clone_flags & (CLONE_NEWNS|CLONE_FS)) == (CLONE_NEWNS|CLONE_FS))
		return ERR_PTR(-EINVAL);

	if ((clone_flags & (CLONE_NEWUSER|CLONE_FS)) == (CLONE_NEWUSER|CLONE_FS))
		return ERR_PTR(-EINVAL);

	/*
	 * Thread groups must share signals as well, and detached threads
	 * can only be started up within the thread group.
	 */
	if ((clone_flags & CLONE_THREAD) && !(clone_flags & CLONE_SIGHAND))
		return ERR_PTR(-EINVAL);

	/*
	 * Shared signal handlers imply shared VM. By way of the above,
	 * thread groups also imply shared VM. Blocking this case allows
	 * for various simplifications in other code.
	 */
	if ((clone_flags & CLONE_SIGHAND) && !(clone_flags & CLONE_VM))
		return ERR_PTR(-EINVAL);

	/*
	 * Siblings of global init remain as zombies on exit since they are
	 * not reaped by their parent (swapper). To solve this and to avoid
	 * multi-rooted process trees, prevent global and container-inits
	 * from creating siblings.
	 */
	if ((clone_flags & CLONE_PARENT) &&
				current->signal->flags & SIGNAL_UNKILLABLE)
		return ERR_PTR(-EINVAL);

	/*
	 * If the new process will be in a different pid or user namespace
	 * do not allow it to share a thread group or signal handlers or
	 * parent with the forking task.
	 */
	if (clone_flags & CLONE_SIGHAND) {
		if ((clone_flags & (CLONE_NEWUSER | CLONE_NEWPID)) ||
		    (task_active_pid_ns(current) !=
				current->nsproxy->pid_ns_for_children))
			return ERR_PTR(-EINVAL);
	}

	retval = security_task_create(clone_flags);
	if (retval)
		goto fork_out;

	retval = -ENOMEM;
	p = dup_task_struct(current);
	if (!p)
		goto fork_out;

	ftrace_graph_init_task(p);

	rt_mutex_init_task(p);

#ifdef CONFIG_PROVE_LOCKING
	DEBUG_LOCKS_WARN_ON(!p->hardirqs_enabled);
	DEBUG_LOCKS_WARN_ON(!p->softirqs_enabled);
#endif
	retval = -EAGAIN;
	if (atomic_read(&p->real_cred->user->processes) >=
			task_rlimit(p, RLIMIT_NPROC)) {
		if (p->real_cred->user != INIT_USER &&
		    !capable(CAP_SYS_RESOURCE) && !capable(CAP_SYS_ADMIN))
			goto bad_fork_free;
	}
	current->flags &= ~PF_NPROC_EXCEEDED;

	retval = copy_creds(p, clone_flags);
	if (retval < 0)
		goto bad_fork_free;

	/*
	 * If multiple threads are within copy_process(), then this check
	 * triggers too late. This doesn't hurt, the check is only there
	 * to stop root fork bombs.
	 */
	retval = -EAGAIN;
	if (nr_threads >= max_threads)
		goto bad_fork_cleanup_count;

	if (!try_module_get(task_thread_info(p)->exec_domain->module))
		goto bad_fork_cleanup_count;

	delayacct_tsk_init(p);	/* Must remain after dup_task_struct() */
	p->flags &= ~(PF_SUPERPRIV | PF_WQ_WORKER);
	p->flags |= PF_FORKNOEXEC;
	INIT_LIST_HEAD(&p->children);
	INIT_LIST_HEAD(&p->sibling);
	rcu_copy_process(p);
	p->vfork_done = NULL;
	spin_lock_init(&p->alloc_lock);

	init_sigpending(&p->pending);

	p->utime = p->stime = p->gtime = 0;
	p->utimescaled = p->stimescaled = 0;
#ifndef CONFIG_VIRT_CPU_ACCOUNTING_NATIVE
	p->prev_cputime.utime = p->prev_cputime.stime = 0;
#endif
#ifdef CONFIG_VIRT_CPU_ACCOUNTING_GEN
	seqlock_init(&p->vtime_seqlock);
	p->vtime_snap = 0;
	p->vtime_snap_whence = VTIME_SLEEPING;
#endif

#if defined(SPLIT_RSS_COUNTING)
	memset(&p->rss_stat, 0, sizeof(p->rss_stat));
#endif

	p->default_timer_slack_ns = current->timer_slack_ns;

	task_io_accounting_init(&p->ioac);
	acct_clear_integrals(p);

	posix_cpu_timers_init(p);

	p->start_time = ktime_get_ns();
	p->real_start_time = ktime_get_boot_ns();
	p->io_context = NULL;
	p->audit_context = NULL;
	if (clone_flags & CLONE_THREAD)
		threadgroup_change_begin(current);
	cgroup_fork(p);
#ifdef CONFIG_NUMA
	p->mempolicy = mpol_dup(p->mempolicy);
	if (IS_ERR(p->mempolicy)) {
		retval = PTR_ERR(p->mempolicy);
		p->mempolicy = NULL;
		goto bad_fork_cleanup_threadgroup_lock;
	}
#endif
#ifdef CONFIG_CPUSETS
	p->cpuset_mem_spread_rotor = NUMA_NO_NODE;
	p->cpuset_slab_spread_rotor = NUMA_NO_NODE;
	seqcount_init(&p->mems_allowed_seq);
#endif
#ifdef CONFIG_TRACE_IRQFLAGS
	p->irq_events = 0;
	p->hardirqs_enabled = 0;
	p->hardirq_enable_ip = 0;
	p->hardirq_enable_event = 0;
	p->hardirq_disable_ip = _THIS_IP_;
	p->hardirq_disable_event = 0;
	p->softirqs_enabled = 1;
	p->softirq_enable_ip = _THIS_IP_;
	p->softirq_enable_event = 0;
	p->softirq_disable_ip = 0;
	p->softirq_disable_event = 0;
	p->hardirq_context = 0;
	p->softirq_context = 0;
#endif
#ifdef CONFIG_LOCKDEP
	p->lockdep_depth = 0; /* no locks held yet */
	p->curr_chain_key = 0;
	p->lockdep_recursion = 0;
#endif

#ifdef CONFIG_DEBUG_MUTEXES
	p->blocked_on = NULL; /* not blocked yet */
#endif
#ifdef CONFIG_BCACHE
	p->sequential_io = 0;
	p->sequential_io_avg = 0;
#endif

	/* Perform scheduler related setup. Assign this task to a CPU. */
	retval = sched_fork(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_policy;

	retval = perf_event_init_task(p);
	if (retval)
		goto bad_fork_cleanup_policy;
	retval = audit_alloc(p);
	if (retval)
		goto bad_fork_cleanup_perf;
	/* copy all the process information */
	shm_init_task(p);
	retval = copy_semundo(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_audit;
	retval = copy_files(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_semundo;
	retval = copy_fs(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_files;
	retval = copy_sighand(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_fs;
	retval = copy_signal(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_sighand;
	retval = copy_mm(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_signal;
	retval = copy_namespaces(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_mm;
	retval = copy_io(clone_flags, p);
	if (retval)
		goto bad_fork_cleanup_namespaces;
	retval = copy_thread(clone_flags, stack_start, stack_size, p);
	if (retval)
		goto bad_fork_cleanup_io;

	if (pid != &init_struct_pid) {
		retval = -ENOMEM;
		pid = alloc_pid(p->nsproxy->pid_ns_for_children);
		if (!pid)
			goto bad_fork_cleanup_io;
	}

	p->set_child_tid = (clone_flags & CLONE_CHILD_SETTID) ? child_tidptr : NULL;
	/*
	 * Clear TID on mm_release()?
	 */
	p->clear_child_tid = (clone_flags & CLONE_CHILD_CLEARTID) ? child_tidptr : NULL;
#ifdef CONFIG_BLOCK
	p->plug = NULL;
#endif
#ifdef CONFIG_FUTEX
	p->robust_list = NULL;
#ifdef CONFIG_COMPAT
	p->compat_robust_list = NULL;
#endif
	INIT_LIST_HEAD(&p->pi_state_list);
	p->pi_state_cache = NULL;
#endif
	/*
	 * sigaltstack should be cleared when sharing the same VM
	 */
	if ((clone_flags & (CLONE_VM|CLONE_VFORK)) == CLONE_VM)
		p->sas_ss_sp = p->sas_ss_size = 0;

	/*
	 * Syscall tracing and stepping should be turned off in the
	 * child regardless of CLONE_PTRACE.
	 */
	user_disable_single_step(p);
	clear_tsk_thread_flag(p, TIF_SYSCALL_TRACE);
#ifdef TIF_SYSCALL_EMU
	clear_tsk_thread_flag(p, TIF_SYSCALL_EMU);
#endif
	clear_all_latency_tracing(p);

	/* ok, now we should be set up.. */
	p->pid = pid_nr(pid);
	if (clone_flags & CLONE_THREAD) {
		p->exit_signal = -1;
		p->group_leader = current->group_leader;
		p->tgid = current->tgid;
	} else {
		if (clone_flags & CLONE_PARENT)
			p->exit_signal = current->group_leader->exit_signal;
		else
			p->exit_signal = (clone_flags & CSIGNAL);
		p->group_leader = p;
		p->tgid = p->pid;
	}

	p->nr_dirtied = 0;
	p->nr_dirtied_pause = 128 >> (PAGE_SHIFT - 10);
	p->dirty_paused_when = 0;

	p->pdeath_signal = 0;
	INIT_LIST_HEAD(&p->thread_group);
	p->task_works = NULL;

	/*
	 * Make it visible to the rest of the system, but dont wake it up yet.
	 * Need tasklist lock for parent etc handling!
	 */
	write_lock_irq(&tasklist_lock);

	/* CLONE_PARENT re-uses the old parent */
	if (clone_flags & (CLONE_PARENT|CLONE_THREAD)) {
		p->real_parent = current->real_parent;
		p->parent_exec_id = current->parent_exec_id;
	} else {
		p->real_parent = current;
		p->parent_exec_id = current->self_exec_id;
	}

	spin_lock(&current->sighand->siglock);

	/*
	 * Copy seccomp details explicitly here, in case they were changed
	 * before holding sighand lock.
	 */
	copy_seccomp(p);

	/*
	 * Process group and session signals need to be delivered to just the
	 * parent before the fork or both the parent and the child after the
	 * fork. Restart if a signal comes in before we add the new process to
	 * it's process group.
	 * A fatal signal pending means that current will exit, so the new
	 * thread can't slip out of an OOM kill (or normal SIGKILL).
	 */
	recalc_sigpending();
	if (signal_pending(current)) {
		spin_unlock(&current->sighand->siglock);
		write_unlock_irq(&tasklist_lock);
		retval = -ERESTARTNOINTR;
		goto bad_fork_free_pid;
	}

	if (likely(p->pid)) {
		ptrace_init_task(p, (clone_flags & CLONE_PTRACE) || trace);

		init_task_pid(p, PIDTYPE_PID, pid);
		if (thread_group_leader(p)) {
			init_task_pid(p, PIDTYPE_PGID, task_pgrp(current));
			init_task_pid(p, PIDTYPE_SID, task_session(current));

			if (is_child_reaper(pid)) {
				ns_of_pid(pid)->child_reaper = p;
				p->signal->flags |= SIGNAL_UNKILLABLE;
			}

			p->signal->leader_pid = pid;
			p->signal->tty = tty_kref_get(current->signal->tty);
			list_add_tail(&p->sibling, &p->real_parent->children);
			list_add_tail_rcu(&p->tasks, &init_task.tasks);
			attach_pid(p, PIDTYPE_PGID);
			attach_pid(p, PIDTYPE_SID);
			__this_cpu_inc(process_counts);
		} else {
			current->signal->nr_threads++;
			atomic_inc(&current->signal->live);
			atomic_inc(&current->signal->sigcnt);
			list_add_tail_rcu(&p->thread_group,
					  &p->group_leader->thread_group);
			list_add_tail_rcu(&p->thread_node,
					  &p->signal->thread_head);
		}
		attach_pid(p, PIDTYPE_PID);
		nr_threads++;
	}

	total_forks++;
	spin_unlock(&current->sighand->siglock);
	syscall_tracepoint_update(p);
	write_unlock_irq(&tasklist_lock);

	proc_fork_connector(p);
	cgroup_post_fork(p);
	if (clone_flags & CLONE_THREAD)
		threadgroup_change_end(current);
	perf_event_fork(p);

	trace_task_newtask(p, clone_flags);
	uprobe_copy_process(p, clone_flags);

	return p;

bad_fork_free_pid:
	if (pid != &init_struct_pid)
		free_pid(pid);
bad_fork_cleanup_io:
	if (p->io_context)
		exit_io_context(p);
bad_fork_cleanup_namespaces:
	exit_task_namespaces(p);
bad_fork_cleanup_mm:
	if (p->mm)
		mmput(p->mm);
bad_fork_cleanup_signal:
	if (!(clone_flags & CLONE_THREAD))
		free_signal_struct(p->signal);
bad_fork_cleanup_sighand:
	__cleanup_sighand(p->sighand);
bad_fork_cleanup_fs:
	exit_fs(p); /* blocking */
bad_fork_cleanup_files:
	exit_files(p); /* blocking */
bad_fork_cleanup_semundo:
	exit_sem(p);
bad_fork_cleanup_audit:
	audit_free(p);
bad_fork_cleanup_perf:
	perf_event_free_task(p);
bad_fork_cleanup_policy:
#ifdef CONFIG_NUMA
	mpol_put(p->mempolicy);
bad_fork_cleanup_threadgroup_lock:
#endif
	if (clone_flags & CLONE_THREAD)
		threadgroup_change_end(current);
	delayacct_tsk_free(p);
	module_put(task_thread_info(p)->exec_domain->module);
bad_fork_cleanup_count:
	atomic_dec(&p->cred->user->processes);
	exit_creds(p);
bad_fork_free:
	free_task(p);
fork_out:
	return ERR_PTR(retval);
}
```

copy_process first rejects inconsistent flag combinations (for example CLONE_THREAD without CLONE_SIGHAND, or CLONE_SIGHAND without CLONE_VM) and enforces the RLIMIT_NPROC and max_threads limits. It then calls dup_task_struct to duplicate the parent's process descriptor and allocate the child's kernel stack, resets the fields that must not be inherited (CPU times, I/O accounting, pending signals, timers), and copies or shares the remaining resources according to clone_flags through copy_semundo, copy_files, copy_fs, copy_sighand, copy_signal, copy_mm, copy_namespaces and copy_io. copy_thread prepares the child's saved registers, alloc_pid allocates the new PID, and finally, while holding tasklist_lock, the task is linked into the process tree and attached to its PID. The source of dup_task_struct follows.
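The sanity checks at the top of copy_process can be observed from user space, because they fail with EINVAL before any task is allocated. The sketch below issues raw clone calls with two combinations that copy_process rejects. It assumes Linux with glibc and the same argument layout as above (clone_flags first, newsp second, e.g. x86-64); `clone_errno`, `thread_without_sighand` and `sighand_without_vm` are hypothetical helper names.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <sched.h>
#include <signal.h>
#include <sys/syscall.h>
#include <unistd.h>

/*
 * Issues clone(flags, 0, NULL, NULL, 0) and returns the errno it failed
 * with, or 0 if it unexpectedly succeeded. Only flag combinations that
 * copy_process() rejects up front are passed, so no child is created.
 */
static int clone_errno(unsigned long flags)
{
    long ret = syscall(SYS_clone, flags, 0UL, NULL, NULL, 0UL);
    if (ret == 0)
        _exit(0);              /* defensive: a child should never get here */
    if (ret > 0)
        return 0;
    return errno;
}

/* "Thread groups must share signals": CLONE_THREAD needs CLONE_SIGHAND. */
int thread_without_sighand(void)
{
    return clone_errno(CLONE_THREAD | CLONE_VM);
}

/* "Shared signal handlers imply shared VM": CLONE_SIGHAND needs CLONE_VM. */
int sighand_without_vm(void)
{
    return clone_errno(CLONE_SIGHAND | SIGCHLD);
}
```

Each function is expected to return EINVAL, matching the `return ERR_PTR(-EINVAL);` branches in the listing.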

```c
static struct task_struct *dup_task_struct(struct task_struct *orig)
{
	struct task_struct *tsk;
	struct thread_info *ti;
	int node = tsk_fork_get_node(orig);
	int err;

	tsk = alloc_task_struct_node(node);
	if (!tsk)
		return NULL;

	ti = alloc_thread_info_node(tsk, node);
	if (!ti)
		goto free_tsk;

	err = arch_dup_task_struct(tsk, orig);
	if (err)
		goto free_ti;

	tsk->stack = ti;
#ifdef CONFIG_SECCOMP
	/*
	 * We must handle setting up seccomp filters once we're under
	 * the sighand lock in case orig has changed between now and
	 * then. Until then, filter must be NULL to avoid messing up
	 * the usage counts on the error path calling free_task.
	 */
	tsk->seccomp.filter = NULL;
#endif

	setup_thread_stack(tsk, orig);
	clear_user_return_notifier(tsk);
	clear_tsk_need_resched(tsk);
	set_task_stack_end_magic(tsk);

#ifdef CONFIG_CC_STACKPROTECTOR
	tsk->stack_canary = get_random_int();
#endif

	/*
	 * One for us, one for whoever does the "release_task()" (usually
	 * parent)
	 */
	atomic_set(&tsk->usage, 2);
#ifdef CONFIG_BLK_DEV_IO_TRACE
	tsk->btrace_seq = 0;
#endif
	tsk->splice_pipe = NULL;
	tsk->task_frag.page = NULL;

	account_kernel_stack(ti, 1);

	return tsk;

free_ti:
	free_thread_info(ti);
free_tsk:
	free_task_struct(tsk);
	return NULL;
}
```
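The CLONE_THREAD branch near the end of copy_process is also visible from user space: every task gets its own p->pid, but a thread copies current->tgid and current->group_leader. getpid() reports the thread-group ID while the gettid system call reports the task's own ID. The sketch below assumes Linux with glibc, whose pthread_create creates threads with CLONE_THREAD among its clone flags (compile with -pthread on older glibc); `struct ids`, `record_ids` and `collect_ids` are illustrative names.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

/* IDs of one task as reported by the kernel. */
struct ids {
    pid_t pid;   /* getpid(): the thread-group ID (p->tgid) */
    pid_t tid;   /* gettid: this task's own ID (p->pid) */
};

/* Records the calling task's IDs; also usable as a pthread start routine. */
static void *record_ids(void *arg)
{
    struct ids *out = arg;
    out->pid = getpid();
    out->tid = (pid_t)syscall(SYS_gettid);   /* raw syscall: older glibc lacks gettid() */
    return NULL;
}

/* Fills in the IDs of the main thread and of one new thread; 0 on success. */
int collect_ids(struct ids *main_ids, struct ids *thread_ids)
{
    pthread_t thread;
    record_ids(main_ids);                    /* main thread: the group leader */
    if (pthread_create(&thread, NULL, record_ids, thread_ids) != 0)
        return -1;
    pthread_join(thread, NULL);
    return 0;
}
```

Both tasks should report the same `pid`, while their `tid` values differ, and for the group leader `tid` equals `pid`, mirroring `p->tgid = p->pid` in the non-thread branch.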

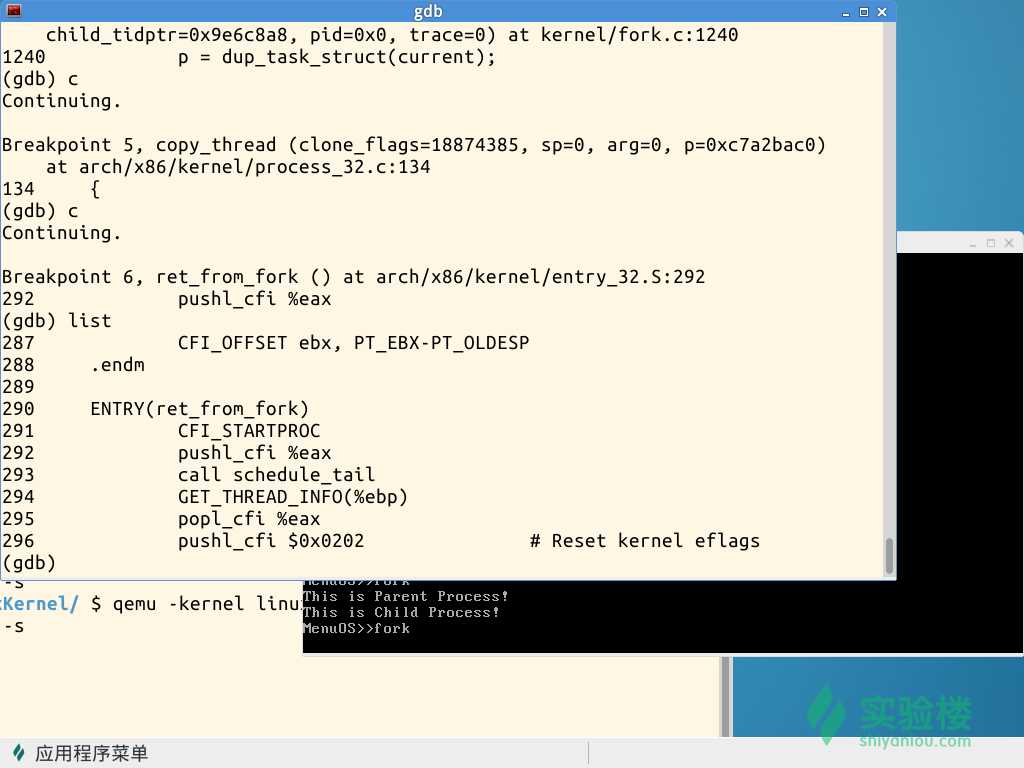

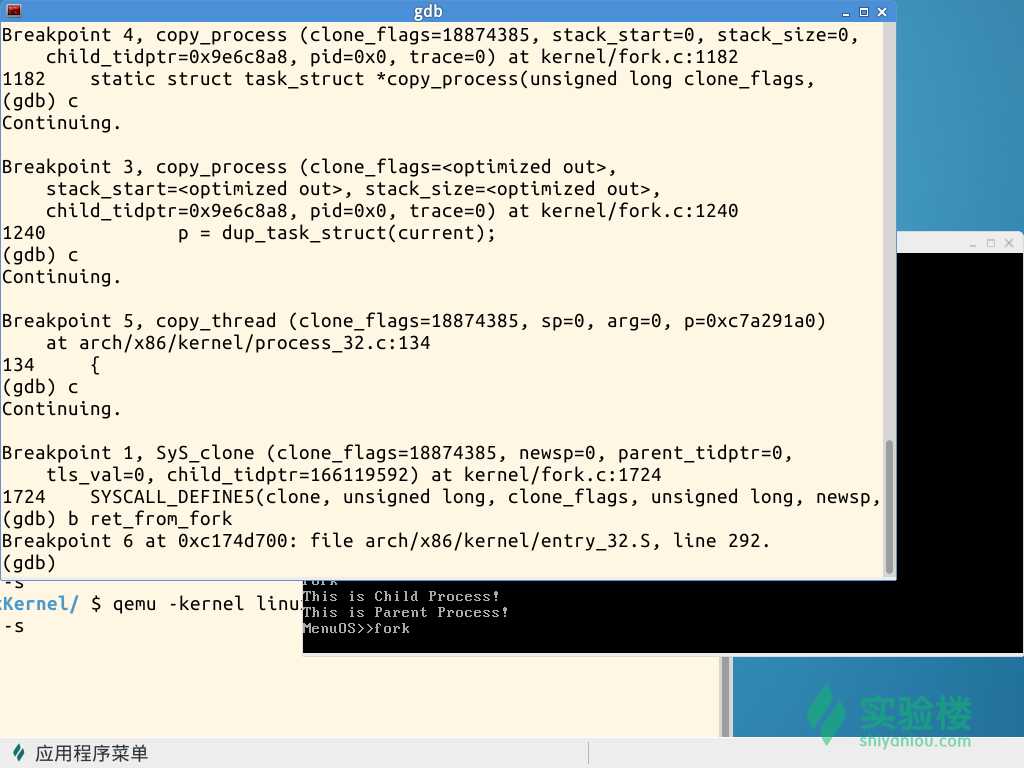

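copy_thread initializes the key state at the top of the child's kernel stack. For an ordinary fork it copies the parent's saved user-mode registers (struct pt_regs) there, sets ax, the register that carries the system-call return value, to 0, and points thread.ip at ret_from_fork, where the child starts executing when it is first scheduled. For a kernel thread it instead zeroes the registers, stores the thread function and its argument (which kernel_thread passed in the stack_start and stack_size slots) in bx and bp, and sets thread.ip to ret_from_kernel_thread. The 32-bit x86 version (arch/x86/kernel/process_32.c) is: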

```c
int copy_thread(unsigned long clone_flags, unsigned long sp,
	unsigned long arg, struct task_struct *p)
{
	struct pt_regs *childregs = task_pt_regs(p);
	struct task_struct *tsk;
	int err;

	/* The child's kernel stack pointer starts at its saved pt_regs. */
	p->thread.sp = (unsigned long) childregs;
	p->thread.sp0 = (unsigned long) (childregs+1);
	memset(p->thread.ptrace_bps, 0, sizeof(p->thread.ptrace_bps));

	if (unlikely(p->flags & PF_KTHREAD)) {
		/* kernel thread */
		memset(childregs, 0, sizeof(struct pt_regs));
		p->thread.ip = (unsigned long) ret_from_kernel_thread;
		task_user_gs(p) = __KERNEL_STACK_CANARY;
		childregs->ds = __USER_DS;
		childregs->es = __USER_DS;
		childregs->fs = __KERNEL_PERCPU;
		childregs->bx = sp;	/* function */
		childregs->bp = arg;
		childregs->orig_ax = -1;
		childregs->cs = __KERNEL_CS | get_kernel_rpl();
		childregs->flags = X86_EFLAGS_IF | X86_EFLAGS_FIXED;
		p->thread.io_bitmap_ptr = NULL;
		return 0;
	}
	*childregs = *current_pt_regs();	/* same user-mode registers as the parent... */
	childregs->ax = 0;			/* ...except the return value: fork() returns 0 in the child */
	if (sp)
		childregs->sp = sp;		/* new user stack only if one was given (clone) */

	p->thread.ip = (unsigned long) ret_from_fork;	/* first code the child runs */
	task_user_gs(p) = get_user_gs(current_pt_regs());

	p->thread.io_bitmap_ptr = NULL;
	tsk = current;
	err = -ENOMEM;

	if (unlikely(test_tsk_thread_flag(tsk, TIF_IO_BITMAP))) {
		p->thread.io_bitmap_ptr = kmemdup(tsk->thread.io_bitmap_ptr,
						IO_BITMAP_BYTES, GFP_KERNEL);
		if (!p->thread.io_bitmap_ptr) {
			p->thread.io_bitmap_max = 0;
			return -ENOMEM;
		}
		set_tsk_thread_flag(p, TIF_IO_BITMAP);
	}

	err = 0;

	/*
	 * Set a new TLS for the child thread?
	 */
	if (clone_flags & CLONE_SETTLS)
		err = do_set_thread_area(p, -1,
			(struct user_desc __user *)childregs->si, 0);

	if (err && p->thread.io_bitmap_ptr) {
		kfree(p->thread.io_bitmap_ptr);
		p->thread.io_bitmap_max = 0;
	}
	return err;
}
```
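Because copy_thread copies the parent's pt_regs and changes only ax (and sp when a new stack is supplied), a forked child resumes in the same function, on its own copy-on-write copy of the same user stack, at the same virtual addresses. The sketch below checks this by having the child send the address of a local variable through a pipe. It assumes Linux and POSIX pipes; `same_stack_address` is an illustrative name.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * Returns 1 if the child saw the same address for `local` as the parent,
 * 0 if it did not, and -1 on error.
 */
int same_stack_address(void)
{
    int local = 0;                 /* lives in this function's stack frame */
    int fds[2];
    if (pipe(fds) != 0)
        return -1;
    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {
        /* Child: resumed right after fork() with the parent's registers. */
        uintptr_t addr = (uintptr_t)&local;
        close(fds[0]);
        ssize_t n = write(fds[1], &addr, sizeof addr);
        _exit(n == (ssize_t)sizeof addr ? 0 : 1);
    }
    /* Parent: compare the child's view of &local with its own. */
    close(fds[1]);
    uintptr_t child_addr = 0;
    ssize_t n = read(fds[0], &child_addr, sizeof child_addr);
    close(fds[0]);
    waitpid(pid, NULL, 0);
    if (n != (ssize_t)sizeof child_addr)
        return -1;
    return child_addr == (uintptr_t)&local;
}
```

The addresses match even though the two processes write to physically different pages after the fork; only the page tables, not the virtual layout, diverge.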

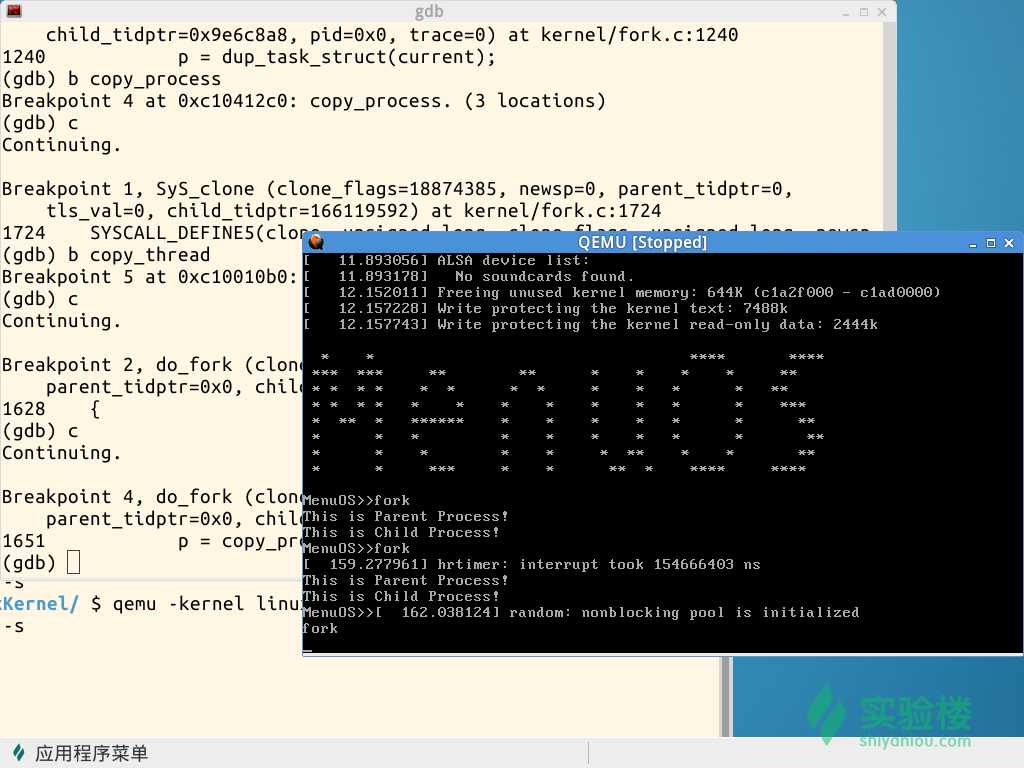

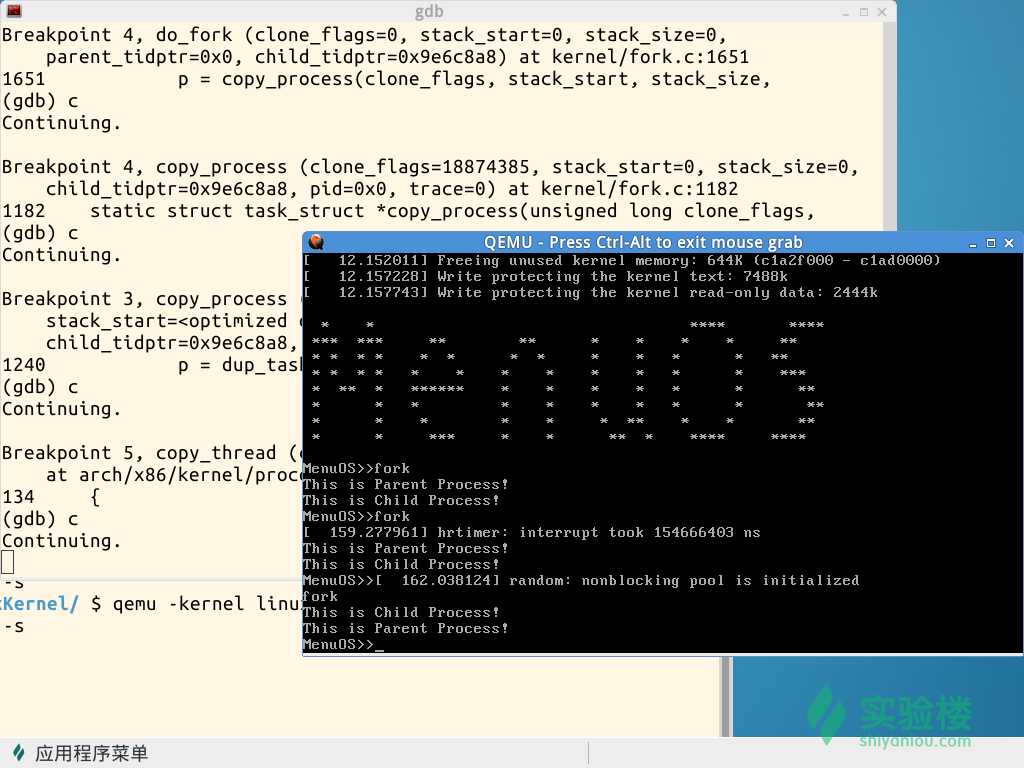

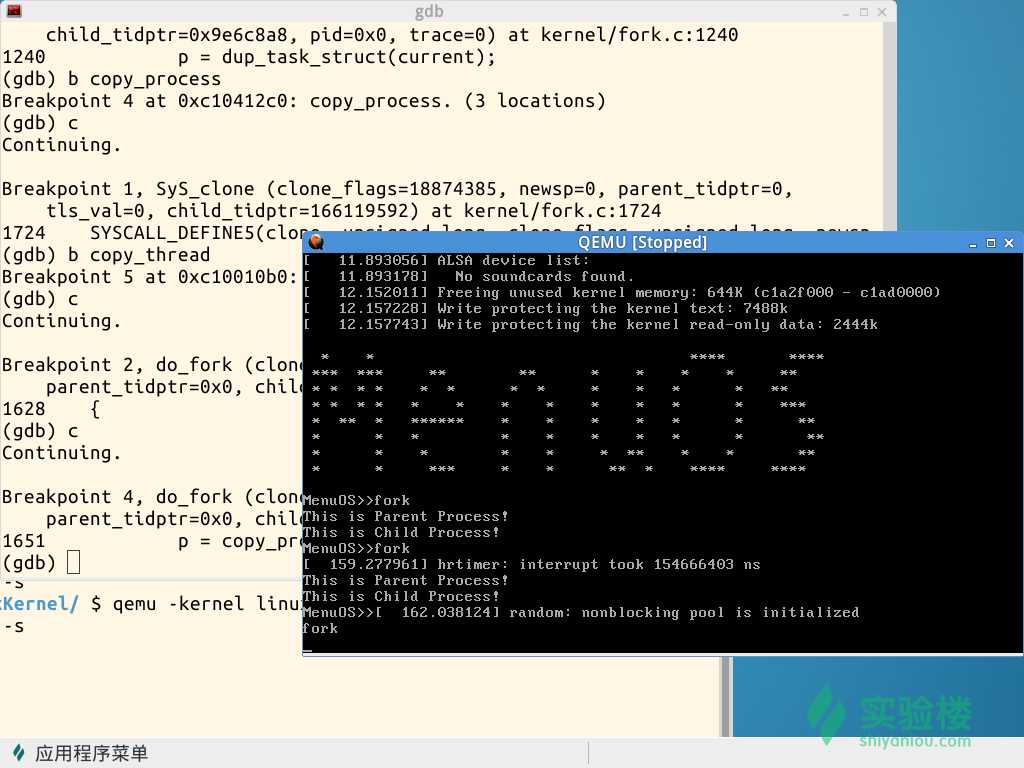

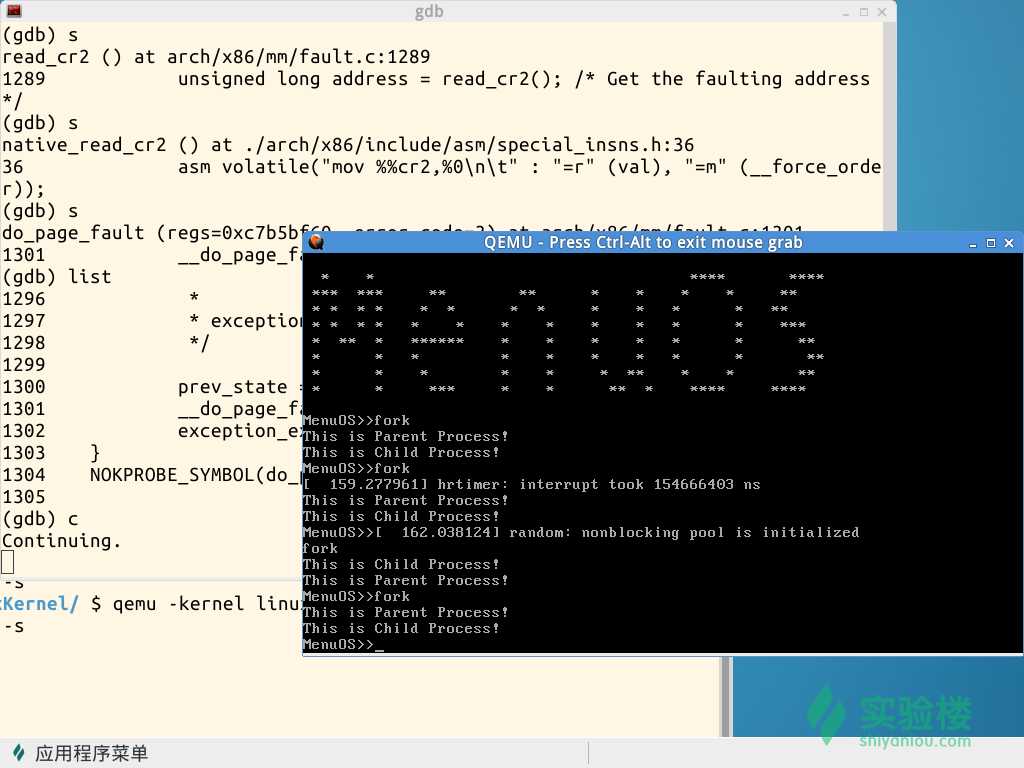

Experiment procedure:


Original post: https://www.cnblogs.com/20189224sxy/p/10017376.html