
Implement a BP (back-propagation) neural network in C++ and use it to solve the mite classification problem:

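Midge classification problem: distinguish two species of midge, A and B, by their antenna length and wing length. Measurements are known for 9 Af midges (species A) and 6 Apf midges (species B), each given as (antenna length, wing length):

- A: (1.24, 1.27), (1.36, 1.74), (1.38, 1.64), (1.38, 1.82), (1.38, 1.90), (1.40, 1.70), (1.48, 1.82), (1.54, 1.82), (1.56, 2.08)
- B: (1.14, 1.82), (1.18, 1.96), (1.20, 1.86), (1.26, 2.00), (1.28, 2.00), (1.30, 1.96)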
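The program reads these samples from two plain text files, trainin.txt (one "antenna wing" pair per line) and trainout.txt (target 1 for Af, 0 for Apf). The following is a minimal sketch, not part of the original program, that stores the 15 samples in arrays and writes both files in that whitespace-separated format. The names `kFeatures`, `label` and `writeTrainingFiles` are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <string>

// The 15 samples: column 0 = antenna length, column 1 = wing length.
// Rows 0..8 are species A (Af), rows 9..14 are species B (Apf).
const int kSamples = 15;
const double kFeatures[kSamples][2] = {
    {1.24, 1.27}, {1.36, 1.74}, {1.38, 1.64}, {1.38, 1.82}, {1.38, 1.90},
    {1.40, 1.70}, {1.48, 1.82}, {1.54, 1.82}, {1.56, 2.08},
    {1.14, 1.82}, {1.18, 1.96}, {1.20, 1.86}, {1.26, 2.00}, {1.28, 2.00},
    {1.30, 1.96}};

// Target output of sample m: 1 for Af, 0 for Apf.
inline int label(int m) {
    assert(m >= 0 && m < kSamples);
    return m < 9 ? 1 : 0;
}

// Writes one sample per line to inPath and one target per line to outPath.
// Returns false if either file could not be opened or written.
bool writeTrainingFiles(const std::string& inPath, const std::string& outPath) {
    std::ofstream in(inPath), out(outPath);
    if (!in || !out) return false;
    for (int m = 0; m < kSamples; ++m) {
        in << kFeatures[m][0] << ' ' << kFeatures[m][1] << '\n';
        out << label(m) << '\n';
    }
    return in && out;  // streams are flushed and closed by their destructors
}
```

Run it once in the directory from which the program is started. It produces files with the same content as trainin.txt and trainout.txt shown at the end of this post.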

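Requirements: (1) explain the structure and the mathematical principles of a BP neural network;

(2) implement a BP neural network in C++;

(3) use the BP neural network to classify the mites.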
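For requirement (1), the network built in bp.cpp has INnum = 2 inputs, HN = 15 sigmoid hidden neurons and ONnum = 1 sigmoid output neuron, with no bias (threshold) terms. The equations below are read off from that code. The symbol mapping is $p = $ `P`, $t = $ `T`, $h = $ `hidenLayerOutput`, $o = $ `OO`, $\delta = $ `d_err`, $e = $ `e_err` and $\eta = $ `studyRate`. They describe this particular implementation rather than every BP variant.

$$
\begin{aligned}
h_j &= \sigma\Big(\sum_{i=1}^{2} W_{ji}\,p_i\Big), \qquad
o_k = \sigma\Big(\sum_{j=1}^{15} V_{kj}\,h_j\Big), \qquad
\sigma(x) = \frac{1}{1+e^{-x}},\\
E_m &= \frac{1}{2}\sum_{k} (t_k - o_k)^2, \qquad E = \sum_{m=1}^{15} E_m,\\
\delta_k &= (t_k - o_k)\,o_k(1 - o_k), \qquad
e_j = h_j(1 - h_j)\sum_{k} \delta_k V_{kj},\\
V_{kj} &\leftarrow V_{kj} + \eta\,\delta_k h_j + b\,\big(V_{kj} - V^{\mathrm{old}}_{kj}\big),\\
W_{ji} &\leftarrow W_{ji} + \eta\,e_j p_i + b\,\big(W_{ji} - W^{\mathrm{old}}_{ji}\big).
\end{aligned}
$$

Because $\sigma'(x) = \sigma(x)(1-\sigma(x))$, $\delta_k$ is the negative derivative of $E_m$ with respect to the net input of output neuron $k$. $e_j$ is the same quantity for hidden neuron $j$, computed with $V$ before it is updated. The $\eta$ terms are therefore plain gradient descent on $E_m$. The $b$ terms are only applied from the second epoch on. $V^{\mathrm{old}}$ and $W^{\mathrm{old}}$ are the snapshots that `saveWV()` stores at the end of each epoch.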

Header file necessary.h:

```cpp
#ifndef NECESSARY_H
#define NECESSARY_H

#include <iostream>
#include <fstream>
#include <iomanip>
#include <cstdlib>
#include <cmath>
#include <ctime>

#define SampleCount 15 // number of training samples
#define INnum 2        // number of input-layer neurons
#define HN 15          // number of hidden-layer neurons
#define ONnum 1        // number of output-layer neurons

using namespace std;

#endif
```

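Header file bp.h:

```cpp
#ifndef BP_H
#define BP_H

#include "necessary.h"

class Back_propagation
{
public:
    Back_propagation();

    double W[HN][INnum];           // weights, input layer -> hidden layer
    double V[ONnum][HN];           // weights, hidden layer -> output layer
    double P[INnum];               // inputs of the current sample
    double T[ONnum];               // desired (teacher) output of the current sample

    double OLD_W[HN][INnum];       // snapshot of W taken by saveWV()
    double OLD_V[ONnum][HN];       // snapshot of V taken by saveWV()
    double HI[HN];                 // net input of the hidden layer
    double OI[ONnum];              // net input of the output layer
    double hidenLayerOutput[HN];   // output of the hidden layer
    double OO[ONnum];              // output of the output layer
    double err_m[SampleCount];     // error of sample m: 0.5 * sum_k (T[k] - OO[k])^2
    double studyRate;              // learning rate
    double b;                      // "step size", used as a momentum coefficient
    double e_err[HN];              // generalized error (delta) of each hidden neuron
    double d_err[ONnum];           // generalized error (delta) of each output neuron

    void input_p(int m);           // load the inputs of sample m into P
    void input_t(int m);           // load the teacher signal of sample m into T
    void H_I_O();                  // forward pass through the hidden layer
    void O_I_O();                  // forward pass through the output layer
    void Err_Output_Hidden(int m); // output deltas and err_m[m]
    void Err_Hidden_Input();       // hidden deltas
    void Adjust_O_H(int m, int n); // update V (n = current epoch)
    void Adjust_H_I(int m, int n); // update W (n = current epoch)
    void saveWV();                 // copy W and V into OLD_W and OLD_V

    struct
    {
        double input[INnum];
        double teach[ONnum];
    } Study_Data[SampleCount];     // all training samples
};

#endif
```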

Source file necessary.cpp:

```cpp
#include "necessary.h"
```

Source file bp.cpp:

```cpp
#include "bp.h"

Back_propagation::Back_propagation()
{
    srand((unsigned)time(NULL));

    for (int i = 0; i < HN; i++)
    {
        for (int j = 0; j < INnum; j++)
        {
            W[i][j] = double(rand() % 100) / 100;   // input->hidden weight in [0, 0.99]
        }
    }
    for (int ii = 0; ii < ONnum; ii++)
    {
        for (int jj = 0; jj < HN; jj++)
        {
            V[ii][jj] = double(rand() % 100) / 100; // hidden->output weight in [0, 0.99]
        }
    }
}

// Copy the inputs of sample m into P.
void Back_propagation::input_p(int m)
{
    for (int i = 0; i < INnum; i++)
    {
        P[i] = Study_Data[m].input[i];
    }
}

// Copy the teacher signal of sample m into T.
void Back_propagation::input_t(int m)
{
    for (int k = 0; k < ONnum; k++)
    {
        T[k] = Study_Data[m].teach[k];
    }
}

// Hidden layer: HI[j] = sum_i W[j][i] * P[i], output = sigmoid(HI[j]).
void Back_propagation::H_I_O()
{
    for (int j = 0; j < HN; j++)
    {
        double net = 0;
        for (int i = 0; i < INnum; i++)
        {
            net += W[j][i] * P[i];
        }
        HI[j] = net;                                      // no threshold (bias) term
        hidenLayerOutput[j] = 1.0 / (1.0 + exp(-HI[j]));
    }
}

// Output layer: OI[k] = sum_j V[k][j] * hidenLayerOutput[j], output = sigmoid(OI[k]).
void Back_propagation::O_I_O()
{
    for (int k = 0; k < ONnum; k++)
    {
        double net = 0;
        for (int j = 0; j < HN; j++)
        {
            net += V[k][j] * hidenLayerOutput[j];
        }
        OI[k] = net;
        OO[k] = 1.0 / (1.0 + exp(-OI[k]));
    }
}

// Output-layer generalized errors d_err[k] and the error err_m[m] of sample m.
void Back_propagation::Err_Output_Hidden(int m)
{
    double sqr_err = 0;                            // sum of squared errors
    for (int k = 0; k < ONnum; k++)
    {
        double abs_err = T[k] - OO[k];             // error of output neuron k
        sqr_err += abs_err * abs_err;
        d_err[k] = abs_err * OO[k] * (1.0 - OO[k]);
    }
    err_m[m] = sqr_err / 2;                        // half the squared error (course slides 1.5-3)
}

// Hidden-layer generalized errors e_err[j]; must run before V is updated.
void Back_propagation::Err_Hidden_Input()
{
    for (int j = 0; j < HN; j++)
    {
        double sigma = 0.0;
        for (int k = 0; k < ONnum; k++)
        {
            sigma += d_err[k] * V[k][j];
        }
        e_err[j] = sigma * hidenLayerOutput[j] * (1 - hidenLayerOutput[j]);
    }
}

// Update the hidden->output weights V (m is unused). From the second epoch
// (n > 1) on, b * (V - OLD_V) is added, where OLD_V is the snapshot that
// saveWV() took at the end of the previous epoch.
void Back_propagation::Adjust_O_H(int m, int n)
{
    for (int k = 0; k < ONnum; k++)
    {
        for (int j = 0; j < HN; j++)
        {
            double step = studyRate * d_err[k] * hidenLayerOutput[j];
            if (n > 1)
                step += b * (V[k][j] - OLD_V[k][j]);
            V[k][j] += step;
        }
    }
}

// Update the input->hidden weights W in the same way (m is unused).
void Back_propagation::Adjust_H_I(int m, int n)
{
    for (int j = 0; j < HN; j++)
    {
        for (int i = 0; i < INnum; i++)
        {
            double step = studyRate * e_err[j] * P[i];
            if (n > 1)
                step += b * (W[j][i] - OLD_W[j][i]);
            W[j][i] += step;
        }
    }
}

// Store the current weights as the "old" weights for the b term.
void Back_propagation::saveWV()
{
    for (int i = 0; i < HN; i++)
    {
        for (int j = 0; j < INnum; j++)
        {
            OLD_W[i][j] = W[i][j];
        }
    }

    for (int ii = 0; ii < ONnum; ii++)
    {
        for (int jj = 0; jj < HN; jj++)
        {
            OLD_V[ii][jj] = V[ii][jj];
        }
    }
}
```
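The generalized errors in `Err_Output_Hidden()` and `Err_Hidden_Input()` are correct only if they equal minus the gradient of the per-sample error `err_m`. A common way to verify such formulas is a central finite-difference test. The sketch below applies one to a small network of the same shape: sigmoid layers, no biases and a single output. `TinyNet`, `sampleError` and `maxGradientMismatch` are hypothetical names, not part of the original program.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Weights of a single-output network without biases (ONnum = 1 in bp.h).
struct TinyNet {
    std::vector<std::vector<double>> W;  // W[j][i]: input i -> hidden j
    std::vector<double> V;               // V[j]:    hidden j -> output
};

static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Forward pass as in H_I_O() and O_I_O(): fills h and returns the output o.
static double forward(const TinyNet& net, const std::vector<double>& p,
                      std::vector<double>& h) {
    assert(net.V.size() == net.W.size());
    h.assign(net.W.size(), 0.0);
    double outNet = 0.0;
    for (std::size_t j = 0; j < net.W.size(); ++j) {
        double s = 0.0;
        for (std::size_t i = 0; i < p.size(); ++i) s += net.W[j][i] * p[i];
        h[j] = sigmoid(s);
        outNet += net.V[j] * h[j];
    }
    return sigmoid(outNet);
}

// Per-sample error 0.5 * (t - o)^2, the quantity stored in err_m[m].
static double sampleError(const TinyNet& net, const std::vector<double>& p, double t) {
    std::vector<double> h;
    const double o = forward(net, p, h);
    return 0.5 * (t - o) * (t - o);
}

// Largest gap, over all weights, between the analytic gradient implied by
// d_err / e_err (dE/dV[j] = -d * h[j], dE/dW[j][i] = -e[j] * p[i]) and a
// central finite-difference estimate.
double maxGradientMismatch(TinyNet net, const std::vector<double>& p, double t) {
    std::vector<double> h;
    const double o = forward(net, p, h);
    const double d = (t - o) * o * (1.0 - o);                // d_err[0]
    const double eps = 1e-6;
    double worst = 0.0;
    for (std::size_t j = 0; j < net.V.size(); ++j) {
        const double e = d * net.V[j] * h[j] * (1.0 - h[j]);  // e_err[j], V not yet changed
        double saved = net.V[j];
        net.V[j] = saved + eps;
        double ePlus = sampleError(net, p, t);
        net.V[j] = saved - eps;
        double eMinus = sampleError(net, p, t);
        net.V[j] = saved;
        worst = std::fmax(worst, std::fabs((ePlus - eMinus) / (2 * eps) + d * h[j]));
        for (std::size_t i = 0; i < p.size(); ++i) {
            saved = net.W[j][i];
            net.W[j][i] = saved + eps;
            ePlus = sampleError(net, p, t);
            net.W[j][i] = saved - eps;
            eMinus = sampleError(net, p, t);
            net.W[j][i] = saved;
            worst = std::fmax(worst, std::fabs((ePlus - eMinus) / (2 * eps) + e * p[i]));
        }
    }
    return worst;
}
```

A result close to zero means the analytic rule matches the numerical gradient. A sign or index mistake in `d_err` or `e_err` would instead produce a mismatch of the same order as the gradient itself.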

Source file main.cpp:

```cpp
#include "bp.h"

// Save the trained weights to w.txt and v.txt (defined, but not called below).
void savequan(const Back_propagation& bp)
{
    ofstream outW("w.txt");
    ofstream outV("v.txt");

    for (int i = 0; i < HN; i++)
    {
        for (int j = 0; j < INnum; j++)
        {
            outW << bp.W[i][j] << " ";
        }
        outW << "\n";
    }

    for (int ii = 0; ii < ONnum; ii++)
    {
        for (int jj = 0; jj < HN; jj++)
        {
            outV << bp.V[ii][jj] << " ";
        }
        outV << "\n";
    }

    outW.close();
    outV.close();
}

// Global error: the per-sample errors err_m[m] summed over all samples.
double Err_Sum(const Back_propagation& bp)
{
    double total_err = 0;
    for (int m = 0; m < SampleCount; m++)
    {
        total_err += bp.err_m[m];
    }
    return total_err;
}

int main()
{
    double sum_err = 0;
    int study = 0;
    int m;
    double check_in, check_out;
    ifstream Train_in("trainin.txt", ios::in);
    ifstream Train_out("trainout.txt", ios::in);

    if (Train_in.fail() || Train_out.fail())
    {
        cerr << "Cannot open trainin.txt or trainout.txt!" << endl;
        return 1;
    }

    Back_propagation bp;

    cout << "Enter the learning rate: studyRate = ";
    cin >> bp.studyRate;

    cout << "\nEnter the step size (momentum coefficient): b = ";
    cin >> bp.b;

    double Pre_error;   // target global error
    cout << "\nEnter the target error: Pre_error = ";
    cin >> Pre_error;

    int Pre_times;      // maximum number of training epochs
    cout << "\nEnter the maximum number of epochs: Pre_times = ";
    cin >> Pre_times;

    // read the training inputs and the teacher signals
    for (m = 0; m < SampleCount; m++)
        for (int i = 0; i < INnum; i++)
            Train_in >> bp.Study_Data[m].input[i];

    for (m = 0; m < SampleCount; m++)
        for (int k = 0; k < ONnum; k++)
            Train_out >> bp.Study_Data[m].teach[k];

    cout << endl;

    do
    {
        ++study;
        if (study > Pre_times)
        {
            cout << "Training failed!" << endl;
            break;
        }

        for (m = 0; m < SampleCount; m++)
        {
            bp.input_p(m);            // (2) load the inputs of sample m
            bp.input_t(m);            // (3) load the teacher signal of sample m
            bp.H_I_O();               // (4) hidden-layer inputs and outputs
            bp.O_I_O();               // output-layer inputs and outputs
            bp.Err_Output_Hidden(m);  // (6) output-layer generalized errors
            bp.Err_Hidden_Input();    // (7) hidden-layer generalized errors
            bp.Adjust_O_H(m, study);  // update hidden->output weights V
            bp.Adjust_H_I(m, study);  // update input->hidden weights W
            if (m == 0)               // trace two weights once per epoch
            {
                cout << bp.V[0][0] << " " << bp.V[0][1] << endl;
            }
        }   // all samples processed
        sum_err = Err_Sum(bp);        // (10) global error over all samples
        bp.saveWV();
    } while (sum_err > Pre_error);

    if (study <= Pre_times && sum_err <= Pre_error)
    {
        cout << "Training finished!" << endl;
        cout << "Epochs used: " << study << endl;
    }

    // classify new midges until input ends or is invalid
    while (true)
    {
        cout << "Enter the antenna length and wing length of the midge: ";
        if (!(cin >> check_in >> check_out))
            break;
        bp.P[0] = check_in;
        bp.P[1] = check_out;
        bp.H_I_O();
        bp.O_I_O();
        if (bp.OO[0] > 0.5)
            cout << "This midge is Af!" << endl;
        else
            cout << "This midge is Apf!" << endl;
    }
    return 0;
}
```
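For experimenting without the interactive prompts, the same flow can be compressed into one self-contained file: random weights, per-sample forward and backward passes, the global error and the "> 0.5 means Af" rule. This is a sketch under two stated assumptions, not the author's code. First, weights come from `std::mt19937` with a fixed seed instead of `srand`/`rand`. Second, the `b` term is classical momentum, where each weight's new increment adds b times its previous increment. The original instead adds `b * (W - OLD_W)` using the end-of-epoch snapshot from `saveWV()`. All names in `namespace sketch` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <random>

namespace sketch {

const int IN = 2, HID = 15, N = 15;  // mirrors INnum, HN, SampleCount

// The 15 samples (antenna, wing) and their targets: 1 = Af, 0 = Apf.
const double X[N][IN] = {
    {1.24, 1.27}, {1.36, 1.74}, {1.38, 1.64}, {1.38, 1.82}, {1.38, 1.90},
    {1.40, 1.70}, {1.48, 1.82}, {1.54, 1.82}, {1.56, 2.08},
    {1.14, 1.82}, {1.18, 1.96}, {1.20, 1.86}, {1.26, 2.00}, {1.28, 2.00},
    {1.30, 1.96}};
const double T[N] = {1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0};

struct Net {
    double W[HID][IN], V[HID];    // weights, as in bp.h with ONnum = 1
    double dW[HID][IN], dV[HID];  // previous increments for the momentum term
};

inline double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Forward pass: fills the hidden outputs h and returns the network output.
inline double forward(const Net& n, const double* p, double* h) {
    double s = 0.0;
    for (int j = 0; j < HID; ++j) {
        double a = 0.0;
        for (int i = 0; i < IN; ++i) a += n.W[j][i] * p[i];
        h[j] = sigmoid(a);
        s += n.V[j] * h[j];
    }
    return sigmoid(s);
}

// Global error: sum over samples of 0.5 * (t - o)^2, like Err_Sum().
inline double totalError(const Net& n) {
    double h[HID], err = 0.0;
    for (int m = 0; m < N; ++m) {
        const double o = forward(n, X[m], h);
        err += 0.5 * (T[m] - o) * (T[m] - o);
    }
    return err;
}

// Weights drawn uniformly from [0, 1); momentum history starts at zero.
inline Net randomNet(unsigned seed) {
    std::mt19937 gen(seed);
    std::uniform_real_distribution<double> u(0.0, 1.0);
    Net n{};
    for (int j = 0; j < HID; ++j) {
        for (int i = 0; i < IN; ++i) n.W[j][i] = u(gen);
        n.V[j] = u(gen);
    }
    return n;
}

// One epoch of per-sample updates; returns the global error afterwards.
inline double trainEpoch(Net& n, double eta, double b) {
    assert(eta > 0.0 && b >= 0.0 && b < 1.0);
    double h[HID];
    for (int m = 0; m < N; ++m) {
        const double o = forward(n, X[m], h);
        const double d = (T[m] - o) * o * (1.0 - o);            // output delta
        for (int j = 0; j < HID; ++j) {
            const double e = d * n.V[j] * h[j] * (1.0 - h[j]);  // hidden delta, old V
            n.dV[j] = eta * d * h[j] + b * n.dV[j];
            n.V[j] += n.dV[j];
            for (int i = 0; i < IN; ++i) {
                n.dW[j][i] = eta * e * X[m][i] + b * n.dW[j][i];
                n.W[j][i] += n.dW[j][i];
            }
        }
    }
    return totalError(n);
}

// Decision rule from main.cpp: output > 0.5 means Af (1), otherwise Apf (0).
inline int classify(const Net& n, double antenna, double wing) {
    const double p[IN] = {antenna, wing};
    double h[HID];
    return forward(n, p, h) > 0.5 ? 1 : 0;
}

}  // namespace sketch
```

Calling `trainEpoch` repeatedly until the returned error falls below a target, or until a maximum epoch count is reached, mirrors the do/while loop in main.cpp. `classify(net, antenna, wing)` then takes the place of the interactive prompt.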

File trainin.txt:

```
1.24 1.27
1.36 1.74
1.38 1.64
1.38 1.82
1.38 1.90
1.40 1.70
1.48 1.82
1.54 1.82
1.56 2.08
1.14 1.82
1.18 1.96
1.20 1.86
1.26 2.00
1.28 2.00
1.30 1.96
```

File trainout.txt:

```
1
1
1
1
1
1
1
1
1
0
0
0
0
0
0
```

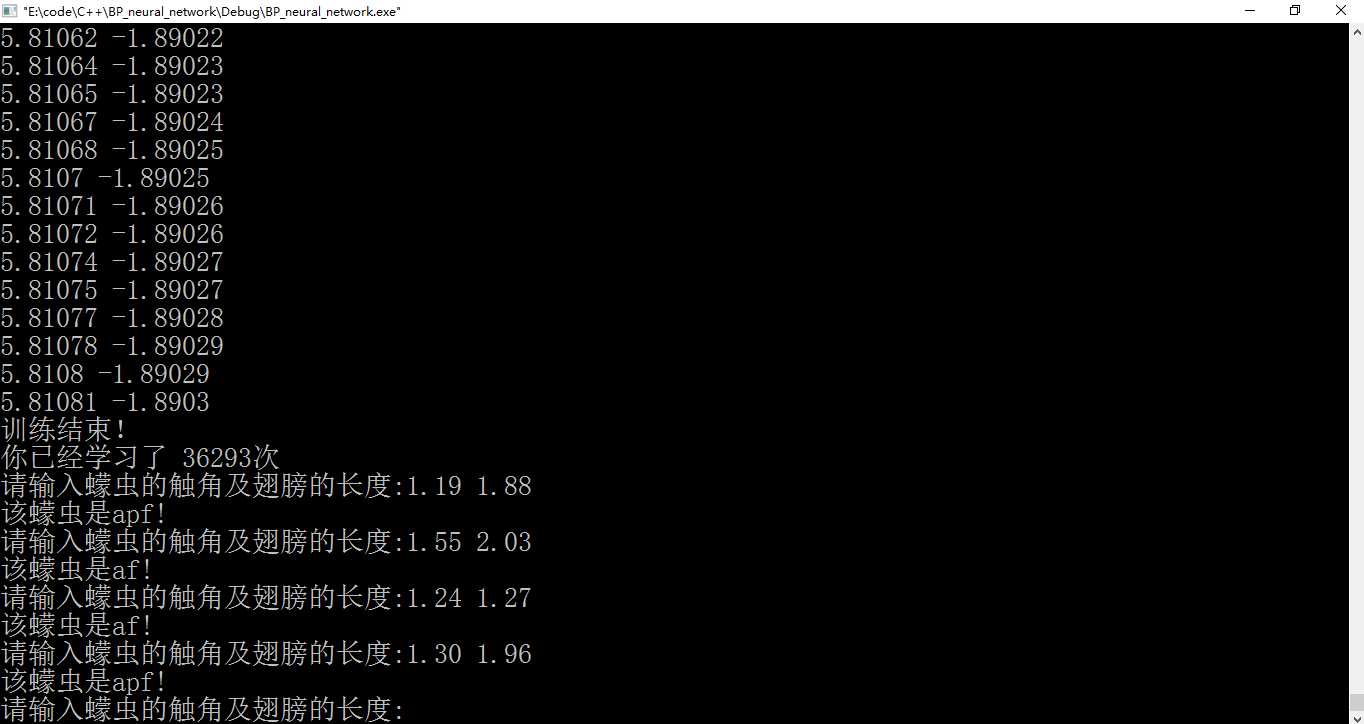

Figure 1: Input data

Figure 2: Result of testing the algorithm's "learning"

The problem statement uses both "螨虫" (mite, pronounced mǎn) and "蠓虫" (midge, pronounced měng); the program uses "蠓虫" (midge).

Course project for "Programming and Algorithm Training": "Implementation of a BP Neural Network" (encapsulated in a C++ class)


Original post: https://www.cnblogs.com/25th-engineer/p/10352454.html