
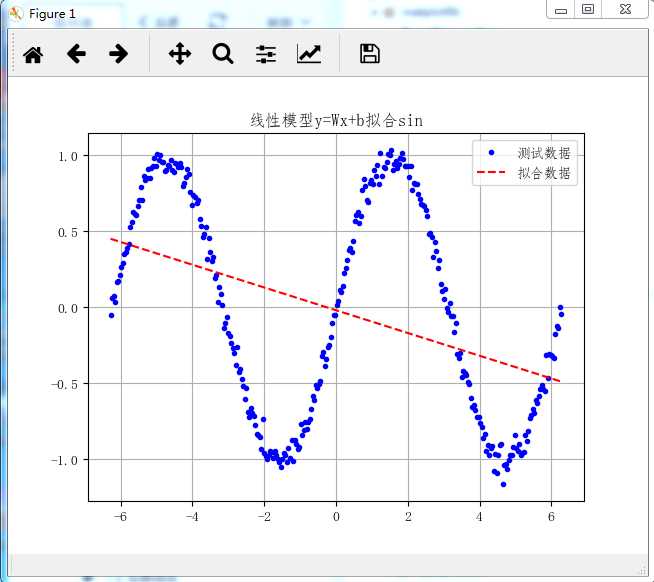

    # Linear regression: fit sin with the linear model y = Wx + b
    # Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
    import numpy as np
    import matplotlib.pyplot as plt
    import tensorflow as tf

    # Data and labels: 300 points of sin(x) on [-2*pi, 2*pi] plus Gaussian noise
    x_data = np.linspace(-2*np.pi, 2*np.pi, 300)
    noise = np.random.normal(-0.01, 0.05, x_data.shape)
    y_label = np.sin(x_data) + noise
    plt.rcParams['font.sans-serif'] = ['FangSong']  # only needed when labels contain Chinese text
    plt.rcParams['axes.unicode_minus'] = False      # render the minus sign correctly with that font
    plt.title('Fitting sin with the linear model y=Wx+b')
    plt.grid(True)
    plt.plot(x_data, y_label, 'b.', label='sample data')

    # Static graph definition
    mg = tf.Graph()
    with mg.as_default():
        # Graph inputs
        X = tf.placeholder("float")
        Y = tf.placeholder("float")

        # Trainable parameters W and b
        W = tf.Variable(np.random.randn(), name="weight")
        b = tf.Variable(np.random.randn(), name="bias")

        # Linear model y = Wx + b
        pred = tf.add(tf.multiply(X, W), b)

        # Loss: sum of squared errors divided by (n - 1), in the style of a sample variance
        cost = tf.reduce_sum(tf.pow(pred-Y, 2)) / (len(x_data)-1)

        # Optimize with gradient descent
        optimizer = tf.train.GradientDescentOptimizer(0.01).minimize(cost)

        # Initialize graph variables
        init = tf.group(tf.global_variables_initializer(),
                        tf.local_variables_initializer())

    with tf.Session(graph=mg) as sess:
        sess.run(init)
        for epoch in range(500):
            # One gradient step per sample
            for (x, y) in zip(x_data, y_label):
                sess.run(optimizer, feed_dict={X: x, Y: y})
            # Report the cost over the full data set every 10 epochs
            if (epoch+1) % 10 == 0:
                c = sess.run(cost, feed_dict={X: x_data, Y: y_label})
                print('epoch={:} cost={:0.6f} W={:0.6f} b={:0.6f}'.format(epoch+1, c, sess.run(W), sess.run(b)))
        training_cost = sess.run(cost, feed_dict={X: x_data, Y: y_label})
        print('Training result cost={:0.6f} W={:0.6f} b={:0.6f}'.format(training_cost, sess.run(W), sess.run(b)))
        plt.plot(x_data, sess.run(W) * x_data + sess.run(b), 'r--', label='fitted line')
        plt.legend()
        plt.show()
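As a sanity check on the values gradient descent reports, the same line can be fitted in closed form with NumPy alone. This is a minimal sketch and not part of the original post. It assumes the same data recipe as the script above, and it adds a fixed seed and the names `rng`, `W_ls`, `b_ls`, and `cost_ls`, none of which appear in the original. Because x is symmetric about zero, the least-squares slope should be small and negative (about -3/(4π²) ≈ -0.076 in the continuous limit) and the intercept should sit near the noise mean of -0.01.

```python
import numpy as np

# Assumption: a fixed seed for reproducibility; the original script is unseeded.
rng = np.random.default_rng(0)

# Same data recipe as the script above.
x_data = np.linspace(-2 * np.pi, 2 * np.pi, 300)
noise = rng.normal(-0.01, 0.05, x_data.shape)
y_label = np.sin(x_data) + noise

# Closed-form least-squares line; for deg=1, np.polyfit returns [slope, intercept].
W_ls, b_ls = np.polyfit(x_data, y_label, 1)

# Same cost definition as the TensorFlow graph: summed squared error over (n - 1).
cost_ls = np.sum((W_ls * x_data + b_ls - y_label) ** 2) / (len(x_data) - 1)

print('least squares: cost={:0.6f} W={:0.6f} b={:0.6f}'.format(cost_ls, W_ls, b_ls))
```

If the `W` and `b` printed by the training loop are far from these values, the loop has probably not converged yet. Each per-sample step feeds one point into a cost that is still divided by `len(x_data)-1`, so the effective step size is much smaller than the nominal 0.01.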


Original post: https://www.cnblogs.com/ace007/p/10360547.html