
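Early versions of ProxySQL required two separately built instances for redundancy if you wanted high availability. The two instances could not share data, however: anything configured on the primary instance had to be configured again on the standby, which made administration awkward. Since version 1.4.2, ProxySQL supports native clustering, so instances can exchange some configuration data with each other, which greatly simplifies management and maintenance.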

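ProxySQL is a decentralized proxy, and the recommended topology places it close to the application servers. ProxySQL can easily scale to hundreds of nodes because its configuration can be changed at runtime and takes effect immediately. This means the instances of a ProxySQL deployment can be coordinated and reconfigured with configuration management tools such as Ansible/Chef/Puppet/Salt, or with service discovery software such as Etcd/Consul/ZooKeeper. These features make a ProxySQL cluster highly customizable. Even so, this way of managing nodes has some drawbacks:

- It requires and depends on external software (the configuration management software);

- As a consequence, this management approach is not natively supported;

- The time a change takes to reach all nodes is unpredictable;

- It does not address the problems caused by network partitions;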

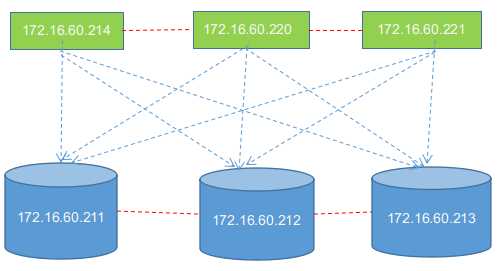

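For these reasons, ProxySQL 1.4.x started to support native clustering. A cluster can be built in several ways, for example 1+1+1 or (1+1)+1. The (1+1)+1 approach is the simpler one: start two nodes as a cluster first, then let other nodes join as needed.

ProxySQL Cluster + MGR high-availability cluster deployment record

For MGR mode (GTID mode works the same way), this walkthrough uses the simpler (1+1)+1 approach: two ProxySQL nodes are started as a cluster first, and other nodes join afterwards as needed.

I. Environment preparation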

172.16.60.211 MGR-node1 (master1)

172.16.60.212 MGR-node2 (master2)

172.16.60.213 MGR-node3 (master3)

172.16.60.214 ProxySQL-node1 [started initially]

172.16.60.220 ProxySQL-node2 [started initially]

172.16.60.221 ProxySQL-node3 [joins later]

[root@MGR-node1 ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

To keep the experiment simple, disable the firewall on all nodes

[root@MGR-node1 ~]# systemctl stop firewalld

[root@MGR-node1 ~]# firewall-cmd --state

not running

[root@MGR-node1 ~]# cat /etc/sysconfig/selinux |grep "SELINUX=disabled"

SELINUX=disabled

[root@MGR-node1 ~]# setenforce 0

setenforce: SELinux is disabled

[root@MGR-node1 ~]# getenforce

Disabled

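One key point needs special attention: every MySQL node must have its host name set, and every member must be reachable by host name!

The host names therefore have to be bound in /etc/hosts on each node. Otherwise, joining nodes to the replication group later fails and the member stays in the RECOVERING state.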

[root@MGR-node1 ~]# cat /etc/hosts

........

172.16.60.211 MGR-node1

172.16.60.212 MGR-node2

172.16.60.213 MGR-node3
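
The host-name bindings above have to exist on every MGR node. As a rough sketch, they could be added idempotently with a small shell function. The helper name `add_host_entry` and the `HOSTS_FILE` variable are assumptions made for this illustration and are not part of the original setup; point `HOSTS_FILE` at /etc/hosts only when running as root on a real node.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already bound.
# HOSTS_FILE defaults to a scratch file so the sketch can be tried safely.
HOSTS_FILE="${HOSTS_FILE:-./hosts.test}"

add_host_entry() {
    ip="$1"
    name="$2"
    touch "$HOSTS_FILE"
    # Look for the host name as a whole field on any non-comment line.
    if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$HOSTS_FILE"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
}

# The three MGR members used in this walkthrough.
add_host_entry 172.16.60.211 MGR-node1
add_host_entry 172.16.60.212 MGR-node2
add_host_entry 172.16.60.213 MGR-node3
```

Running the script a second time leaves the file unchanged, which makes it safe to repeat on every node.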

II. Install MySQL 5.7 on the three MGR nodes

Install MySQL 5.7 with yum on all three MySQL nodes. Reference: https://www.cnblogs.com/kevingrace/p/8340690.html

Install the MySQL yum repository

[root@MGR-node1 ~]# yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

Install MySQL 5.7

[root@MGR-node1 ~]# yum install -y mysql-community-server

Start the MySQL server and enable it at boot

[root@MGR-node1 ~]# systemctl start mysqld.service

[root@MGR-node1 ~]# systemctl enable mysqld.service

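Set the login password

Starting with 5.7, MySQL no longer allows logging in with an empty password after a fresh install. For better security, a random password is generated for the administrator's first login.

This password is recorded in /var/log/mysqld.log and can be displayed with the following command:

[root@MGR-node1 ~]# cat /var/log/mysqld.log|grep 'A temporary password'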

2019-01-11T05:53:17.824073Z 1 [Note] A temporary password is generated for root@localhost: TaN.k:*Qw2xs

Log in to mysql with the password shown above (TaN.k:*Qw2xs) and reset it to 123456

[root@MGR-node1 ~]# mysql -p #enter the temporary password: TaN.k:*Qw2xs

.............

mysql> set global validate_password_policy=0;

Query OK, 0 rows affected (0.00 sec)

mysql> set global validate_password_length=1;

Query OK, 0 rows affected (0.00 sec)

mysql> set password=password("123456");

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

Check the mysql version

[root@MGR-node1 ~]# mysql -p123456

........

mysql> select version();

+-----------+

| version() |

+-----------+

| 5.7.24 |

+-----------+

1 row in set (0.00 sec)

=====================================================================

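Note

After a default MySQL 5.7 installation as above, executing statements may fail with:

ERROR 1819 (HY000): Your password does not satisfy the current policy requirements

This error is governed by the MySQL password policy variable validate_password_policy, which accepts the three values 0 (LOW), 1 (MEDIUM), and 2 (STRONG).

Workaround: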

set global validate_password_policy=0;

set global validate_password_length=1;

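III. Deploy the MGR group replication environment (multi-primary mode)

Reference: https://www.cnblogs.com/kevingrace/p/10260685.html

Because other tests were run on these machines earlier, the MySQL environment on all three nodes has to be wiped first: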

# systemctl stop mysqld

# rm -rf /var/lib/mysql

# systemctl start mysqld

Then reset the password

# cat /var/log/mysqld.log|grep 'A temporary password'

# mysql -p #enter the newly generated temporary password

mysql> set global validate_password_policy=0;

mysql> set global validate_password_length=1;

mysql> set password=password("123456");

mysql> flush privileges;

=======================================================

1) Operations on MGR-node1

[root@MGR-node1 ~]# mysql -p123456

.........

mysql> select uuid();

+--------------------------------------+

| uuid() |

+--------------------------------------+

| ae09faae-34bb-11e9-9f91-005056ac6820 |

+--------------------------------------+

1 row in set (0.00 sec)

[root@MGR-node1 ~]# cp /etc/my.cnf /etc/my.cnf.bak

[root@MGR-node1 ~]# >/etc/my.cnf

[root@MGR-node1 ~]# vim /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

#GTID:

server_id = 1

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

#binlog

log_bin = mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

#relay log

skip_slave_start = 1

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="5db40c3c-180c-11e9-afbf-005056ac6820"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "172.16.60.211:24901"

loose-group_replication_group_seeds= "172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_single_primary_mode=off

loose-group_replication_enforce_update_everywhere_checks=on

loose-group_replication_ip_whitelist="172.16.60.0/24,127.0.0.1/8"
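
In this walkthrough the my.cnf files of the three MGR nodes differ only in `server_id` and `loose-group_replication_local_address`; everything else is identical. A minimal sketch of generating the node-specific lines, assuming a hypothetical helper named `node_specific_cnf` (not part of the original setup) and the IPs and port 24901 used here:

```shell
#!/bin/sh
# Hypothetical helper: print the my.cnf lines that differ per MGR node.
# $1 = node number (1..3), which is also used as server_id.
SEEDS="172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

node_specific_cnf() {
    n="$1"
    # Pick the n-th address from the comma-separated seed list.
    addr=$(printf '%s\n' "$SEEDS" | cut -d, -f"$n")
    printf 'server_id = %s\n' "$n"
    printf 'loose-group_replication_local_address= "%s"\n' "$addr"
    printf 'loose-group_replication_group_seeds= "%s"\n' "$SEEDS"
}

# Print the lines for MGR-node2.
node_specific_cnf 2
```

Deriving the local address from the seed list keeps the two settings from drifting apart when the file is copied from node to node.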

Restart the mysql service

[root@MGR-node1 ~]# systemctl restart mysqld

Log in to mysql and perform the required settings

[root@MGR-node1 ~]# mysql -p123456

............

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_slave@'%' IDENTIFIED BY 'slave@123';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> reset master;

Query OK, 0 rows affected (0.19 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER='rpl_slave', MASTER_PASSWORD='slave@123' FOR CHANNEL 'group_replication_recovery';

Query OK, 0 rows affected, 2 warnings (0.33 sec)

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so';

Query OK, 0 rows affected (0.03 sec)

mysql> SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

...............

...............

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

46 rows in set (0.00 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (2.34 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Query OK, 0 rows affected (0.00 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 42ca8591-34bb-11e9-8296-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

1 row in set (0.00 sec)

Make sure the MEMBER_STATE of the group_replication_applier row above is "ONLINE"!
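
The ONLINE check can also be scripted rather than read off the table by eye. The following is only a sketch: the helper name `all_members_online` is an assumption for this illustration, and it expects tab-separated `MEMBER_HOST` / `MEMBER_STATE` rows such as those produced by the mysql client in batch mode.

```shell
#!/bin/sh
# Hypothetical helper: read "MEMBER_HOST<TAB>MEMBER_STATE" rows on stdin and
# succeed only if at least one row exists and every member is ONLINE.
all_members_online() {
    awk -F '\t' '
        { rows++ }
        $2 != "ONLINE" { bad++; print "not ONLINE: " $1 " (" $2 ")" }
        END { exit (rows == 0 || bad > 0) }
    '
}

# Sample rows for illustration. On a real node the input would come from:
#   mysql -p -N -B -e "SELECT MEMBER_HOST, MEMBER_STATE FROM performance_schema.replication_group_members"
printf 'MGR-node1\tONLINE\nMGR-node2\tRECOVERING\n' | all_members_online || echo "group is not healthy yet"
```

A check like this is useful after each START GROUP_REPLICATION, since a member that stays in RECOVERING usually points back to the host-name binding problem described earlier.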

Create a test database

mysql> CREATE DATABASE kevin CHARACTER SET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.03 sec)

mysql> use kevin;

Database changed

mysql> create table if not exists haha (id int(10) PRIMARY KEY AUTO_INCREMENT,name varchar(50) NOT NULL);

Query OK, 0 rows affected (0.24 sec)

mysql> insert into kevin.haha values(1,"wangshibo"),(2,"guohuihui"),(3,"yangyang"),(4,"shikui");

Query OK, 4 rows affected (0.07 sec)

Records: 4 Duplicates: 0 Warnings: 0

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

=====================================================================

2) Operations on MGR-node2

[root@MGR-node2 ~]# cp /etc/my.cnf /etc/my.cnf.bak

[root@MGR-node2 ~]# >/etc/my.cnf

[root@MGR-node2 ~]# vim /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

#GTID:

server_id = 2

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

#binlog

log_bin = mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

#relay log

skip_slave_start = 1

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="5db40c3c-180c-11e9-afbf-005056ac6820"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "172.16.60.212:24901"

loose-group_replication_group_seeds= "172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_single_primary_mode=off

loose-group_replication_enforce_update_everywhere_checks=on

loose-group_replication_ip_whitelist="172.16.60.0/24,127.0.0.1/8"

Restart the mysql service

[root@MGR-node2 ~]# systemctl restart mysqld

Log in to mysql and perform the required settings

[root@MGR-node2 ~]# mysql -p123456

.........

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_slave@'%' IDENTIFIED BY 'slave@123';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.00 sec)

mysql> reset master;

Query OK, 0 rows affected (0.17 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER='rpl_slave', MASTER_PASSWORD='slave@123' FOR CHANNEL 'group_replication_recovery';

Query OK, 0 rows affected, 2 warnings (0.21 sec)

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so';

Query OK, 0 rows affected (0.20 sec)

mysql> SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

.............

.............

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

46 rows in set (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (6.25 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 4281f7b7-34bb-11e9-8949-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 42ca8591-34bb-11e9-8296-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

2 rows in set (0.00 sec)

Check the data: what was added on MGR-node1 has already been replicated to this node

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| kevin |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

=====================================================================

3) Operations on MGR-node3

[root@MGR-node3 ~]# cp /etc/my.cnf /etc/my.cnf.bak

[root@MGR-node3 ~]# >/etc/my.cnf

[root@MGR-node3 ~]# vim /etc/my.cnf

[mysqld]

datadir = /var/lib/mysql

socket = /var/lib/mysql/mysql.sock

symbolic-links = 0

log-error = /var/log/mysqld.log

pid-file = /var/run/mysqld/mysqld.pid

#GTID:

server_id = 3

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

#binlog

log_bin = mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

#relay log

skip_slave_start = 1

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="5db40c3c-180c-11e9-afbf-005056ac6820"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "172.16.60.213:24901"

loose-group_replication_group_seeds= "172.16.60.211:24901,172.16.60.212:24901,172.16.60.213:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_single_primary_mode=off

loose-group_replication_enforce_update_everywhere_checks=on

loose-group_replication_ip_whitelist="172.16.60.0/24,127.0.0.1/8"

Restart the mysql service

[root@MGR-node3 ~]# systemctl restart mysqld

Log in to mysql and perform the required settings

[root@MGR-node3 ~]# mysql -p123456

..........

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO rpl_slave@'%' IDENTIFIED BY 'slave@123';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> reset master;

Query OK, 0 rows affected (0.10 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.00 sec)

mysql> CHANGE MASTER TO MASTER_USER='rpl_slave', MASTER_PASSWORD='slave@123' FOR CHANNEL 'group_replication_recovery';

Query OK, 0 rows affected, 2 warnings (0.27 sec)

mysql> INSTALL PLUGIN group_replication SONAME 'group_replication.so';

Query OK, 0 rows affected (0.04 sec)

mysql> SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

.............

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

46 rows in set (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (4.54 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 4281f7b7-34bb-11e9-8949-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 42ca8591-34bb-11e9-8296-005056ac6820 | MGR-node1 | 3306 | ONLINE |

| group_replication_applier | 456216bd-34bb-11e9-bbd1-005056880888 | MGR-node3 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

Check the data: what was added on the other nodes has already been replicated to this node

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| kevin |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 3 | yangyang |

| 4 | shikui |

+----+-----------+

4 rows in set (0.00 sec)

=====================================================================

4) Group replication data synchronization test

Run the following on any node

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

| group_replication_applier | 2658b203-1565-11e9-9f8b-005056880888 | MGR-node3 | 3306 | ONLINE |

| group_replication_applier | 2c1efc46-1565-11e9-ab8e-00505688047c | MGR-node2 | 3306 | ONLINE |

| group_replication_applier | 317e2aad-1565-11e9-9c2e-005056ac6820 | MGR-node1 | 3306 | ONLINE |

+---------------------------+--------------------------------------+-------------+-------------+--------------+

3 rows in set (0.00 sec)

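As shown above, the GTID-based group replication environment has been deployed successfully on the three nodes MGR-node1, MGR-node2, and MGR-node3.

Data updated on any one of the three nodes is now replicated to the databases on the other two.

1) Update data in the database on MGR-node1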

mysql> delete from kevin.haha where id>2;

Query OK, 2 rows affected (0.14 sec)

Then check the database on MGR-node2 and MGR-node3: the updated data has already been replicated!

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

+----+-----------+

2 rows in set (0.00 sec)

2) Update data in the database on MGR-node2

mysql> insert into kevin.haha values(11,"beijing"),(12,"shanghai"),(13,"anhui");

Query OK, 3 rows affected (0.06 sec)

Records: 3 Duplicates: 0 Warnings: 0

Then check the database on MGR-node1 and MGR-node3: the updated data has already been replicated!

mysql> select * from kevin.haha;

+----+-----------+

| id | name |

+----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 11 | beijing |

| 12 | shanghai |

| 13 | anhui |

+----+-----------+

5 rows in set (0.00 sec)

3) Update data in the database on MGR-node3

mysql> update kevin.haha set id=100 where name="anhui";

Query OK, 1 row affected (0.16 sec)

Rows matched: 1 Changed: 1 Warnings: 0

mysql> delete from kevin.haha where id=12;

Query OK, 1 row affected (0.22 sec)

Then check the database on MGR-node1 and MGR-node2: the updated data has already been replicated!

mysql> select * from kevin.haha;

+-----+-----------+

| id | name |

+-----+-----------+

| 1 | wangshibo |

| 2 | guohuihui |

| 11 | beijing |

| 100 | anhui |

+-----+-----------+

4 rows in set (0.00 sec)

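IV. ProxySQL installation, read/write splitting configuration, and cluster deployment

1) Install the mysql client on all three ProxySQL nodes, for connecting locally to the ProxySQL admin interface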

[root@ProxySQL-node1 ~]# vim /etc/yum.repos.d/mariadb.repo

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/10.3.5/centos6-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1

Install the mysql client

[root@ProxySQL-node1 ~]# yum install -y MariaDB-client

============================================================================

If you hit this error:

Error: MariaDB-compat conflicts with 1:mariadb-libs-5.5.60-1.el7_5.x86_64

You could try using --skip-broken to work around the problem

You could try running: rpm -Va --nofiles --nodigest

Workaround:

[root@ProxySQL-node1 ~]# rpm -qa|grep mariadb

mariadb-libs-5.5.60-1.el7_5.x86_64

[root@ProxySQL-node1 ~]# rpm -e mariadb-libs-5.5.60-1.el7_5.x86_64 --nodeps

[root@ProxySQL-node1 ~]# yum install -y MariaDB-client

2) Install ProxySQL on all three ProxySQL nodes

Download location for the proxysql rpm package: https://pan.baidu.com/s/1S1_b5DKVCpZSOUNmtCXrrg

Extraction code: 5t1c

[root@ProxySQL-node ~]# yum install -y perl-DBI perl-DBD-MySQL

[root@ProxySQL-node ~]# rpm -ivh proxysql-1.4.8-1-centos7.x86_64.rpm --force

Start proxysql

[root@ProxySQL-node ~]# /etc/init.d/proxysql start

Starting ProxySQL: DONE!

[root@ProxySQL-node ~]# ss -lntup|grep proxy

tcp LISTEN 0 128 *:6080 *:* users:(("proxysql",pid=29931,fd=11))

tcp LISTEN 0 128 *:6032 *:* users:(("proxysql",pid=29931,fd=28))

tcp LISTEN 0 128 *:6033 *:* users:(("proxysql",pid=29931,fd=27))

tcp LISTEN 0 128 *:6033 *:* users:(("proxysql",pid=29931,fd=26))

tcp LISTEN 0 128 *:6033 *:* users:(("proxysql",pid=29931,fd=25))

tcp LISTEN 0 128 *:6033 *:* users:(("proxysql",pid=29931,fd=24))

[root@ProxySQL-node ~]# mysql -uadmin -padmin -h127.0.0.1 -P6032

............

............

MySQL [(none)]> show databases;

+-----+---------------+-------------------------------------+

| seq | name | file |

+-----+---------------+-------------------------------------+

| 0 | main | |

| 2 | disk | /var/lib/proxysql/proxysql.db |

| 3 | stats | |

| 4 | monitor | |

| 5 | stats_history | /var/lib/proxysql/proxysql_stats.db |

+-----+---------------+-------------------------------------+

5 rows in set (0.000 sec)

Next, initialize ProxySQL by deleting all of its previous data

MySQL [(none)]> delete from scheduler ;

Query OK, 0 rows affected (0.000 sec)

MySQL [(none)]> delete from mysql_servers;

Query OK, 3 rows affected (0.000 sec)

MySQL [(none)]> delete from mysql_users;

Query OK, 1 row affected (0.000 sec)

MySQL [(none)]> delete from mysql_query_rules;

Query OK, 0 rows affected (0.000 sec)

MySQL [(none)]> delete from mysql_group_replication_hostgroups ;

Query OK, 1 row affected (0.000 sec)

MySQL [(none)]> LOAD MYSQL VARIABLES TO RUNTIME;

Query OK, 0 rows affected (0.000 sec)

MySQL [(none)]> SAVE MYSQL VARIABLES TO DISK;

Query OK, 94 rows affected (0.175 sec)

MySQL [(none)]> LOAD MYSQL SERVERS TO RUNTIME;

Query OK, 0 rows affected (0.003 sec)

MySQL [(none)]> SAVE MYSQL SERVERS TO DISK;

Query OK, 0 rows affected (0.140 sec)

MySQL [(none)]> LOAD MYSQL USERS TO RUNTIME;

Query OK, 0 rows affected (0.000 sec)

MySQL [(none)]> SAVE MYSQL USERS TO DISK;

Query OK, 0 rows affected (0.050 sec)

MySQL [(none)]> LOAD SCHEDULER TO RUNTIME;

Query OK, 0 rows affected (0.000 sec)

MySQL [(none)]> SAVE SCHEDULER TO DISK;

Query OK, 0 rows affected (0.096 sec)

MySQL [(none)]> LOAD MYSQL QUERY RULES TO RUNTIME;

Query OK, 0 rows affected (0.000 sec)

MySQL [(none)]> SAVE MYSQL QUERY RULES TO DISK;

Query OK, 0 rows affected (0.156 sec)

MySQL [(none)]>
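
The transcript above repeats one pattern per configuration module: changes made through the admin interface are loaded into the runtime layer and then saved to disk. As a rough sketch, the statement pairs could be generated instead of typed by hand. The helper name `apply_and_persist_sql` is an assumption for this illustration; the module names are the ones used in the transcript.

```shell
#!/bin/sh
# Hypothetical helper: print the LOAD ... TO RUNTIME / SAVE ... TO DISK pair
# for each ProxySQL configuration module reset in the transcript above.
apply_and_persist_sql() {
    for module in "MYSQL VARIABLES" "MYSQL SERVERS" "MYSQL USERS" "SCHEDULER" "MYSQL QUERY RULES"; do
        printf 'LOAD %s TO RUNTIME;\n' "$module"
        printf 'SAVE %s TO DISK;\n' "$module"
    done
}

apply_and_persist_sql
# On a ProxySQL node the output could be piped into the admin interface, e.g.:
#   apply_and_persist_sql | mysql -uadmin -padmin -h127.0.0.1 -P6032
```

Since the same cleanup has to be repeated on each of the three ProxySQL nodes, generating the statements avoids skipping a module on one of them.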

3) Configure the ProxySQL Cluster environment (three proxysql nodes)

Source: ProxySQL Cluster configuration explained and high-availability cluster deployment record (final part)

Original article: https://www.cnblogs.com/kevingrace/p/10411457.html