
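Code copied from this page may be broken; see the Gitee repository for the original files: https://gitee.com/love-docker/k8s/tree/master/v1.11/monitor/%E5%91%8A%E8%AD%A6/

1) Modify the Prometheus ConfigMap to configure the alert rules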

rule_files:
- /etc/prometheus/rules.yml
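The CPU rule in `rules.yml` is built on `irate`, which looks only at the last two samples inside the `[5m]` window and divides their difference by the time between them; multiplying by 100 turns CPU-seconds per second into a percentage of one core. The Python sketch below reproduces that arithmetic for a single series. It assumes the samples have already been selected as `(unix_seconds, value)` pairs, which Prometheus does itself, and it ignores the `sum by(name)` aggregation; it is an illustration, not Prometheus's implementation.

```python
# Sketch of the arithmetic behind irate() and the "* 100 > 50" CPU rule.
# Assumption: `samples` are (unix_seconds, counter_value) pairs for ONE series,
# already selected from the [5m] window; the real rule also sums per name.

CPU_THRESHOLD_PERCENT = 50
MEMORY_THRESHOLD_BYTES = 1024 * 1024 * 100  # "100M" in the rules = 100 MiB


def irate(samples):
    """Per-second rate computed from the last two samples only."""
    if len(samples) < 2:
        return None  # irate() yields no value with fewer than two samples
    (t_prev, v_prev), (t_last, v_last) = samples[-2], samples[-1]
    # A counter that decreased was reset; like irate(), use the new value as the delta.
    delta = v_last - v_prev if v_last >= v_prev else v_last
    return delta / (t_last - t_prev)


def cpu_alert(samples):
    """True when irate(container_cpu_usage_seconds_total) * 100 exceeds the threshold."""
    rate = irate(samples)
    return rate is not None and rate * 100 > CPU_THRESHOLD_PERCENT


if __name__ == "__main__":
    # 9 CPU-seconds used during 15 s of wall time: 0.6 cores, i.e. 60 %.
    samples = [(0, 100.0), (15, 109.0)]
    print(irate(samples) * 100, cpu_alert(samples), MEMORY_THRESHOLD_BYTES)
```

Because `irate` uses only the last two points, the `[5m]` range mainly guarantees that two samples exist; with a 15s scrape interval the rate reflects roughly the last 15 seconds.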

rules.yml: |+
  groups:
  - name: noah_pod.rules
    rules:
    - alert: Pod_all_cpu_usage
      expr: (sum by(name)(irate(container_cpu_usage_seconds_total{image!=""}[5m]))*100) > 50
      for: 10s
      labels:
        severity: critical
        service: pods
      annotations:
        description: Container {{ $labels.name }} CPU usage is above 50% (current value is {{ $value }})
        summary: Dev CPU load alert
    - alert: Pod_all_memory_usage
      expr: avg by(name)(container_memory_usage_bytes{name!=""}) > 1024*1024*100
      for: 10s
      labels:
        severity: critical
      annotations:
        description: Container {{ $labels.name }} memory usage is above 100M (current value is {{ $value }})
        summary: Dev memory load alert
    - alert: Pod_all_network_receive_usage
      expr: sum by(name)(irate(container_network_receive_bytes_total{image!=""}[5m])) > 1024*1024*50
      for: 10m
      labels:
        severity: critical
      annotations:
        description: Container {{ $labels.name }} network receive rate is above 50M/s (current value is {{ $value }})
        summary: network_receive load alert

2) Specify the Alertmanager address

alerting:
  alertmanagers:
  - static_configs:
    - targets: ["alertmanager:9093"]

3) The complete ConfigMap configuration is as follows

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    rule_files:
    - /etc/prometheus/rules.yml
    alerting:
      alertmanagers:
      - static_configs:
        - targets: ["alertmanager:9093"]
        #- targets: ["192.168.1.51:9093"]

    scrape_configs:
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics

    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

rules.yml: |+

groups:

- name: noah_pod.rules

rules:

- alert: Pod_all_cpu_usage

expr: (sum by(name)(irate(container_cpu_usage_seconds_total{image!=""}[5m]))*100) > 50

for: 10s

labels:

severity: critical

service: pods

annotations:

description: 容器 {{ $labels.name }} CPU 资源利用率大于 50% , (current value is {{ $value }})

summary: Dev CPU 负载告警

- alert: Pod_all_memory_usage

expr: sort_desc(avg by(name)(irate(container_memory_usage_bytes{name!=""}[5m]))*100) > 1024*1024*100

for: 10s

labels:

severity: critical

annotations:

description: 容器 {{ $labels.name }} Memory 资源利用率大于 100M , (current value is {{ $value }})

summary: Dev Memory 负载告警

- alert: Pod_all_network_receive_usage

expr: sum (container_memory_working_set_bytes{image!=""}) by (pod_name) > 1024*1024*100

for: 10m

labels:

severity: critical

annotations:

description: 容器 {{ $labels.name }} network_receive 资源利用率大于 50M , (current value is {{ $value }})

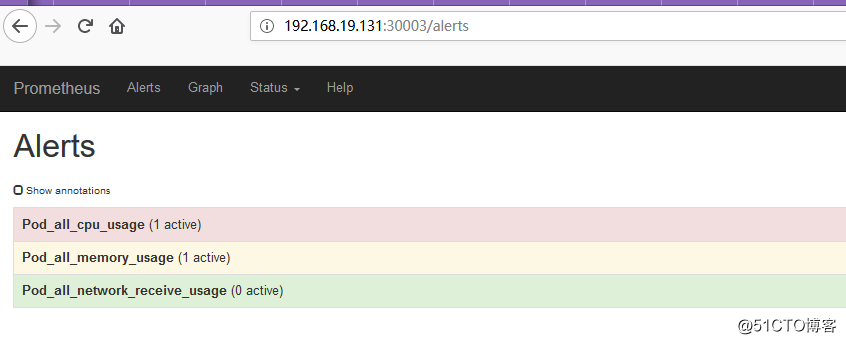

summary: network_receive 负载告警4)登录prometheus后台验证
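Besides the web UI, rule state can be checked over Prometheus's HTTP API. The sketch below is a minimal example that counts firing alerts per alerting rule. It assumes a Prometheus release that serves `GET /api/v1/rules` (older 2.x releases do not) and a hypothetical address `http://localhost:9090`, reachable for example through `kubectl port-forward`.

```python
# Sketch: count firing alerts per alerting rule via Prometheus's HTTP API.
# Assumptions: PROM_URL is a hypothetical address (e.g. after
# `kubectl -n kube-system port-forward <prometheus-pod> 9090`), and the
# running Prometheus release serves GET /api/v1/rules.
import json
import urllib.request

PROM_URL = "http://localhost:9090"


def fetch_rules(base_url=PROM_URL, timeout=5):
    """Download and decode the /api/v1/rules response."""
    with urllib.request.urlopen(f"{base_url}/api/v1/rules", timeout=timeout) as resp:
        return json.load(resp)


def firing_counts(payload):
    """Map each alerting rule name to the number of its alerts in state 'firing'."""
    counts = {}
    for group in payload.get("data", {}).get("groups", []):
        for rule in group.get("rules", []):
            if rule.get("type") != "alerting":
                continue  # skip recording rules
            alerts = rule.get("alerts", [])
            counts[rule["name"]] = sum(1 for a in alerts if a.get("state") == "firing")
    return counts
```

Once the address is reachable, `print(firing_counts(fetch_rules()))` should list `Pod_all_cpu_usage` and the other rules from `noah_pod.rules`. Alerts still inside their `for:` window show up as `pending` and are not counted.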

#(2) Create the Alertmanager component, which sends the alerts

1) Create the alert template file

# cat alertmanager-templates.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: alertmanager-templates
  namespace: kube-system
data:
  wechat.tmpl: |
    {{ define "wechat.default.message" }}
    {{ range .Alerts }}
    ========start==========
    Alert source: prometheus_alert
    Severity: {{ .Labels.severity }}
    Alert type: {{ .Labels.alertname }}
    Affected host: {{ .Labels.instance }}
    Summary: {{ .Annotations.summary }}
    Details: {{ .Annotations.description }}
    Fired at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
    ========end==========
    {{ end }}
    {{ end }}

2) Create the configuration file. Note that a WeChat Work (Enterprise WeChat) account and application must be registered in advance.

# cat configmap.yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: alertmanager
  namespace: kube-system
data:
  config.yml: |-
    global:
      resolve_timeout: 5m
    templates:
    - '/etc/alertmanager-templates/wechat.tmpl'
    route:
      group_by: ['alertname']
      group_wait: 10s
      group_interval: 10s
      repeat_interval: 1h
      receiver: 'wechat'
    receivers:
    - name: 'wechat'
      wechat_configs:
      - corp_id: ''      # WeChat Work corp ID
        to_party: ''     # ID of the department that receives the alerts
        agent_id: ''     # AgentId of the WeChat Work application
        api_secret: ''   # Secret of that application
        send_resolved: true

3) Create the Deployment

# cat deployment.yaml
apiVersion: apps/v1  # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: alertmanager
  template:
    metadata:
      name: alertmanager
      labels:
        app: alertmanager
    spec:
      containers:
      - name: alertmanager
        image: registry.cn-hangzhou.aliyuncs.com/wangfang-k8s/prometheus-alertmanager:v0.15.2
        args:
        - '--config.file=/etc/alertmanager/config.yml'
        - '--storage.path=/alertmanager'
        ports:
        - name: alertmanager
          containerPort: 9093
        volumeMounts:
        - name: config-volume
          mountPath: /etc/alertmanager
        - name: templates-volume
          mountPath: /etc/alertmanager-templates
        - name: alertmanager
          mountPath: /alertmanager
      serviceAccountName: prometheus
      volumes:
      - name: config-volume
        configMap:
          name: alertmanager
      - name: templates-volume
        configMap:
          name: alertmanager-templates
      - name: alertmanager
        emptyDir: {}

4) Create the Service

# cat service.yaml

apiVersion: v1

kind: Service

metadata:

annotations:

prometheus.io/scrape: ‘true‘

prometheus.io/path: ‘/metrics‘

labels:

name: alertmanager

name: alertmanager

namespace: kube-system

spec:

selector:

app: alertmanager

type: NodePort

ports:

- name: alertmanager

protocol: TCP

port: 9093

targetPort: 9093

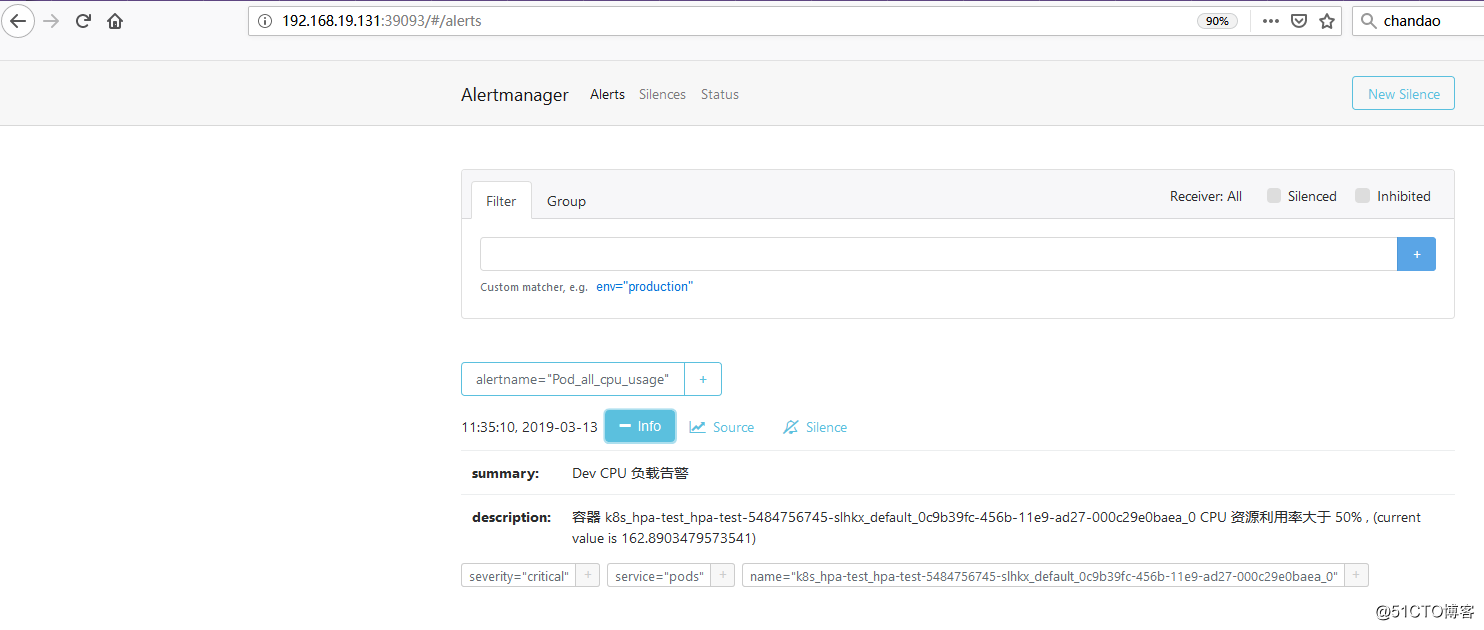

nodePort: 390935)登录到altermanger后台

Alertmanager has received the alerts sent by Prometheus.

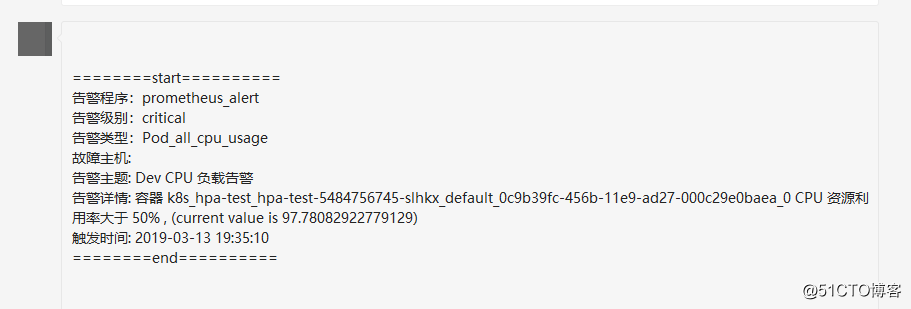
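The same list is available from Alertmanager's HTTP API. The v0.15 image used here serves the v1 API at `GET /api/v1/alerts` (later releases moved to `/api/v2`). The sketch below groups the received alerts by `alertname`. It assumes the v1 response wraps the alert list in a `data` field. `NODE_IP` is a placeholder for any node's address and 39093 is the NodePort from the Service.

```python
# Sketch: summarize the alerts Alertmanager currently holds.
# Assumptions: NODE_IP is a placeholder for any node's address, 39093 is the
# NodePort from the Service, and the v1 API returns
# {"status": "success", "data": [alert, ...]}.
import json
import urllib.request
from collections import Counter

ALERTMANAGER_URL = "http://NODE_IP:39093"  # hypothetical placeholder


def fetch_alerts(base_url=ALERTMANAGER_URL, timeout=5):
    """Download and decode the GET /api/v1/alerts response."""
    with urllib.request.urlopen(f"{base_url}/api/v1/alerts", timeout=timeout) as resp:
        return json.load(resp)


def count_by_alertname(payload):
    """Count alerts per alertname label; alerts without one fall under '<none>'."""
    return Counter(
        alert.get("labels", {}).get("alertname", "<none>")
        for alert in payload.get("data", [])
    )
```

After replacing the placeholder, `count_by_alertname(fetch_alerts()).most_common()` lists the busiest alerts first.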

6) The WeChat Work account received the alert messages
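The `wechat` receiver first obtains an access token with `corp_id` and `api_secret`. It then posts a text message for `agent_id`/`to_party` to the WeChat Work `message/send` endpoint, and the message body comes from the `wechat.default.message` template. The Python sketch below approximates that body for a list of alerts. `render_alert` imitates the layout of `wechat.tmpl` with plain string formatting and is not Go's template engine. `build_send_payload` assembles a text-message request body using the WeChat Work message API field names. The alert and IDs in the demo are hypothetical.

```python
# Sketch only: approximates what Alertmanager's wechat receiver sends.
# render_alert imitates wechat.tmpl's layout; it is not Go's text/template.
import json
from datetime import datetime, timezone


def render_alert(alert):
    """Format one alert like a single iteration of {{ range .Alerts }}."""
    labels = alert.get("labels", {})
    annotations = alert.get("annotations", {})
    # Go's .Format "2006-01-02 15:04:05" corresponds to this strftime pattern.
    started = alert["startsAt"].strftime("%Y-%m-%d %H:%M:%S")
    return "\n".join([
        "========start==========",
        "Alert source: prometheus_alert",
        f"Severity: {labels.get('severity', '')}",
        f"Alert type: {labels.get('alertname', '')}",
        f"Affected host: {labels.get('instance', '')}",
        f"Summary: {annotations.get('summary', '')}",
        f"Details: {annotations.get('description', '')}",
        f"Fired at: {started}",
        "========end==========",
    ])


def build_send_payload(alerts, agent_id, to_party):
    """Request body for a WeChat Work message/send text message."""
    return {
        "toparty": to_party,
        "agentid": agent_id,
        "msgtype": "text",
        "text": {"content": "\n".join(render_alert(a) for a in alerts)},
        "safe": 0,
    }


if __name__ == "__main__":
    # Hypothetical alert and IDs, for illustration only.
    alert = {
        "labels": {"severity": "critical", "alertname": "Pod_all_cpu_usage", "instance": "node1"},
        "annotations": {"summary": "Dev CPU load alert", "description": "CPU usage is above 50%"},
        "startsAt": datetime(2019, 3, 1, 10, 0, 0, tzinfo=timezone.utc),
    }
    print(json.dumps(build_send_payload([alert], agent_id="1000002", to_party="2"), indent=2))
```

Go's `.Format` prints the time in the time value's own location, so in the real messages the "Fired at" value typically appears in UTC unless the template converts it.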

7) Alert demo

Create a pod and keep sending it requests from a load generator so that its CPU usage climbs:

kubectl run hpa-test --image=registry.cn-hangzhou.aliyuncs.com/wangfang-k8s/hpa-example:latest --requests=cpu=200m --expose --port=80

kubectl run -i --tty load-generator --image=busybox /bin/sh

Inside the busybox shell, run:

# while true; do wget -q -O- http://hpa-test.default.svc.cluster.local; done

Reference: https://blog.csdn.net/ywq935/article/details/80818982


Original article: https://blog.51cto.com/1000682/2362597