标签:标记 inpu size 一起 origin while else sys write

FMM和BMM的编程实现,其实两个算法思路都挺简单,一个是从前取最大词长度的小分句,查找字典是否有该词,若无则分句去掉最后面一个字,再次查找,直至分句变成单词或者在字典中找到,并将其去除,然后重复上述步骤。BMM则是从后取分句,字典中不存在则分句最前去掉一个字,也是重复类似的步骤。

readCorpus.py

import sys

output = {}

with open('语料库.txt', mode='r', encoding='UTF-8') as f:

for line in f.readlines():

if line is not None:

# 去除每行的换行符

t_line = line.strip('\n')

# 按空格分开每个词

words = t_line.split(' ')

for word in words:

# 按/分开标记和词

t_word = word.split('/')

# 左方括号去除

tf_word = t_word[0].split('[')

if len(tf_word) == 2:

f_word = tf_word[1]

else:

f_word = t_word[0]

# 若在输出字典中,则value+1

if f_word in output.keys():

output[f_word] = output[f_word]+1

# 不在输出字典中则新建

else:

output[f_word] = 1

big_word1 = t_line.split('[')

for i in range(1, len(big_word1)):

big_word2 = big_word1[i].split(']')[0]

words = big_word2.split(' ')

big_word = ""

for word in words:

# 按/分开标记和词

t_word = word.split('/')

big_word = big_word + t_word[0]

# 若在输出字典中,则value+1

if big_word in output.keys():

output[big_word] = output[big_word]+1

# 不在输出字典中则新建

else:

output[big_word] = 1

f.close()

with open('output.txt', mode='w', encoding='UTF-8') as f:

while output:

minNum = sys.maxsize

minName = ""

for key, values in output.items():

if values < minNum:

minNum = values

minName = key

f.write(minName+": "+str(minNum)+"\n")

del output[minName]

f.close()

BMM.py

MAX_WORD = 19

word_list = []

ans_word = []

with open('output.txt', mode='r', encoding='UTF-8')as f:

for line in f.readlines():

if line is not None:

word = line.split(':')

word_list.append(word[0])

f.close()

#num = input("输入句子个数:")

#for i in range(int(num)):

while True:

ans_word = []

try:

origin_sentence = input("输入:\n")

while len(origin_sentence) != 0:

len_word = MAX_WORD

while len_word > 0:

# 从后读取最大词长度的数据,若该数据在字典中,则存入数组,并将其去除

if origin_sentence[-len_word:] in word_list:

ans_word.append(origin_sentence[-len_word:])

len_sentence = len(origin_sentence)

origin_sentence = origin_sentence[0:len_sentence-len_word]

break

# 不在词典中,则从后取词长度-1

else:

len_word = len_word - 1

# 单词直接存入数组

if len_word == 0:

if origin_sentence[-1:] != ' ':

ans_word.append(origin_sentence[-1:])

len_sentence = len(origin_sentence)

origin_sentence = origin_sentence[0:len_sentence - 1]

for j in range(len(ans_word)-1, -1, -1):

print(ans_word[j] + '/', end='')

print('\n')

except (KeyboardInterrupt, EOFError):

break

FMM.py

MAX_WORD = 19

word_list = []

with open('output.txt', mode='r', encoding='UTF-8')as f:

for line in f.readlines():

if line is not None:

word = line.split(':')

word_list.append(word[0])

f.close()

#num = input("输入句子个数:")

#for i in range(int(num)):

while True:

try:

origin_sentence = input("输入:\n")

while len(origin_sentence) != 0:

len_word = MAX_WORD

while len_word > 0:

# 读取前最大词长度数据,在数组中则输出,并将其去除

if origin_sentence[0:len_word] in word_list:

print(origin_sentence[0:len_word]+'/', end='')

origin_sentence = origin_sentence[len_word:]

break

# 不在字典中,则读取长度-1

else:

len_word = len_word - 1

# 为0则表示为单词,输出

if len_word == 0:

if origin_sentence[0] != ' ':

print(origin_sentence[0]+'/', end='')

origin_sentence = origin_sentence[1:]

print('\n')

except (KeyboardInterrupt, EOFError):

break

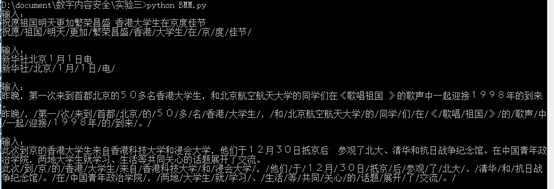

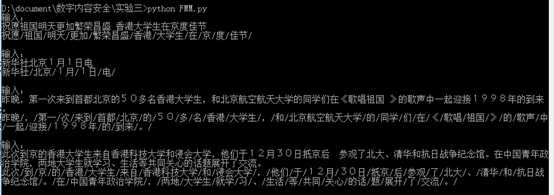

效果图

我们可以观察到含大粒度分词的情况将香港科技大学,北京航空航天大学等表意能力强的词分在了一起而不是拆开,更符合分词要求。

标签:标记 inpu size 一起 origin while else sys write

原文地址:https://www.cnblogs.com/FZfangzheng/p/10952070.html