Tags: improvement, GTX 1080, deep learning

Training for 3 epochs on a MacBook Pro's i5 CPU took the following times:

Epoch 1/3

- 737s - loss: 0.1415 - val_loss: 0.0874

Epoch 2/3

- 608s - loss: 0.0807 - val_loss: 0.0577

Epoch 3/3

- 518s - loss: 0.0636 - val_loss: 0.0499

On a GPU (the Kaggle environment), the same 3 epochs took:

Epoch 1/3

- 40s - loss: 0.1544 - val_loss: 0.0956

Epoch 2/3

- 38s - loss: 0.0871 - val_loss: 0.0665

Epoch 3/3

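- 38s - loss: 0.0690 - val_loss: 0.0478

Comparing GPU and CPU, the per-epoch time differs by more than an order of magnitude: about 38 to 40 s per epoch on the GPU versus 518 to 737 s on the CPU, i.e. roughly 14 to 18 times faster.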
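To make the "orders of magnitude" comparison concrete, here is a small sketch that computes the per-epoch CPU/GPU speedup from the times in the two 3-epoch logs above. The numbers are copied by hand from the logs; the function name `speedups` is just illustrative.

```python
import math

# Per-epoch wall-clock times in seconds, copied from the two 3-epoch logs above
cpu_times = [737, 608, 518]  # MacBook Pro i5 CPU
gpu_times = [40, 38, 38]     # GPU run


def speedups(slow, fast):
    """Return the per-epoch ratio slow/fast for two runs of equal length."""
    return [s / f for s, f in zip(slow, fast)]


for epoch, ratio in enumerate(speedups(cpu_times, gpu_times), start=1):
    # log10 of the ratio says how many orders of magnitude apart the two times are
    print(f"epoch {epoch}: {ratio:.1f}x faster ({math.log10(ratio):.2f} orders of magnitude)")

overall = sum(cpu_times) / sum(gpu_times)
print(f"3 epochs: {sum(cpu_times)} s vs {sum(gpu_times)} s, {overall:.1f}x overall")
```

Taking log10 of the ratio is what "orders of magnitude" means here. A ratio of 10 is one order and 100 is two. These runs land at roughly 1.1 to 1.3.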

On the local GTX 1080, the same 3 epochs took:

Epoch 1/3

- 47s - loss: 0.1349 - val_loss: 0.0890

Epoch 2/3

- 45s - loss: 0.0787 - val_loss: 0.0670

Epoch 3/3

- 43s - loss: 0.0625 - val_loss: 0.0466

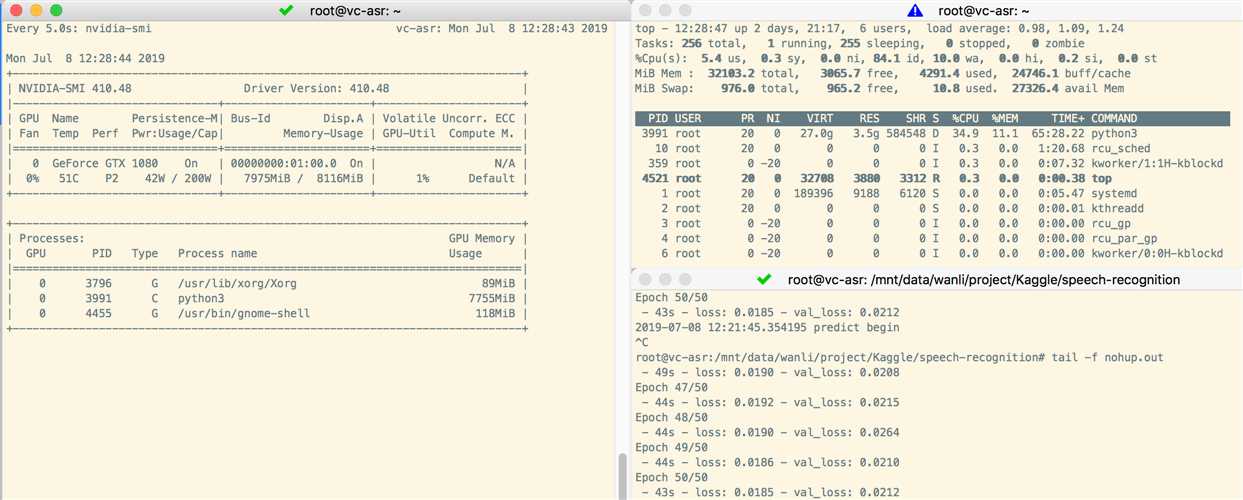

On the entry-level GTX 1080 card in my local development environment, the training time is close to that of the Kaggle environment.
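A quick way to put a number on "close" is to compare average epoch times. This sketch assumes, as the surrounding text suggests, that the 40/38/38 s log is from the Kaggle GPU and the 47/45/43 s log is from the local GTX 1080.

```python
from statistics import mean

# Per-epoch times in seconds, copied from the two GPU logs above.
# Assumption: 40/38/38 s is the Kaggle GPU run, 47/45/43 s is the local GTX 1080 run.
kaggle_times = [40, 38, 38]
gtx1080_times = [47, 45, 43]

kaggle_avg = mean(kaggle_times)
gtx1080_avg = mean(gtx1080_times)
# How much slower the local card is per epoch, relative to Kaggle, in percent
slowdown_pct = (gtx1080_avg / kaggle_avg - 1) * 100

print(f"Kaggle avg: {kaggle_avg:.1f} s/epoch")
print(f"GTX 1080 avg: {gtx1080_avg:.1f} s/epoch")
print(f"GTX 1080 is {slowdown_pct:.0f}% slower per epoch")
```

With these numbers, the local card comes out about 16% slower per epoch (45 s versus about 38.7 s). That is the same order of magnitude, and far from the CPU-to-GPU gap.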

Logged training times for a few early and later epochs of a 50-epoch run:

Epoch 1/50

- 52s - loss: 0.1253 - val_loss: 0.0795

Epoch 2/50

- 48s - loss: 0.0738 - val_loss: 0.0565

Epoch 3/50

- 48s - loss: 0.0616 - val_loss: 0.0477

Epoch 4/50

- 49s - loss: 0.0534 - val_loss: 0.0378

Epoch 5/50

- 49s - loss: 0.0484 - val_loss: 0.0375

####################

Epoch 19/50

- 50s - loss: 0.0270 - val_loss: 0.0249

Epoch 20/50

- 50s - loss: 0.0257 - val_loss: 0.0241

Epoch 21/50

- 48s - loss: 0.0256 - val_loss: 0.0255

Epoch 22/50

- 50s - loss: 0.0247 - val_loss: 0.0255

Epoch 23/50

- 48s - loss: 0.0246 - val_loss: 0.0219

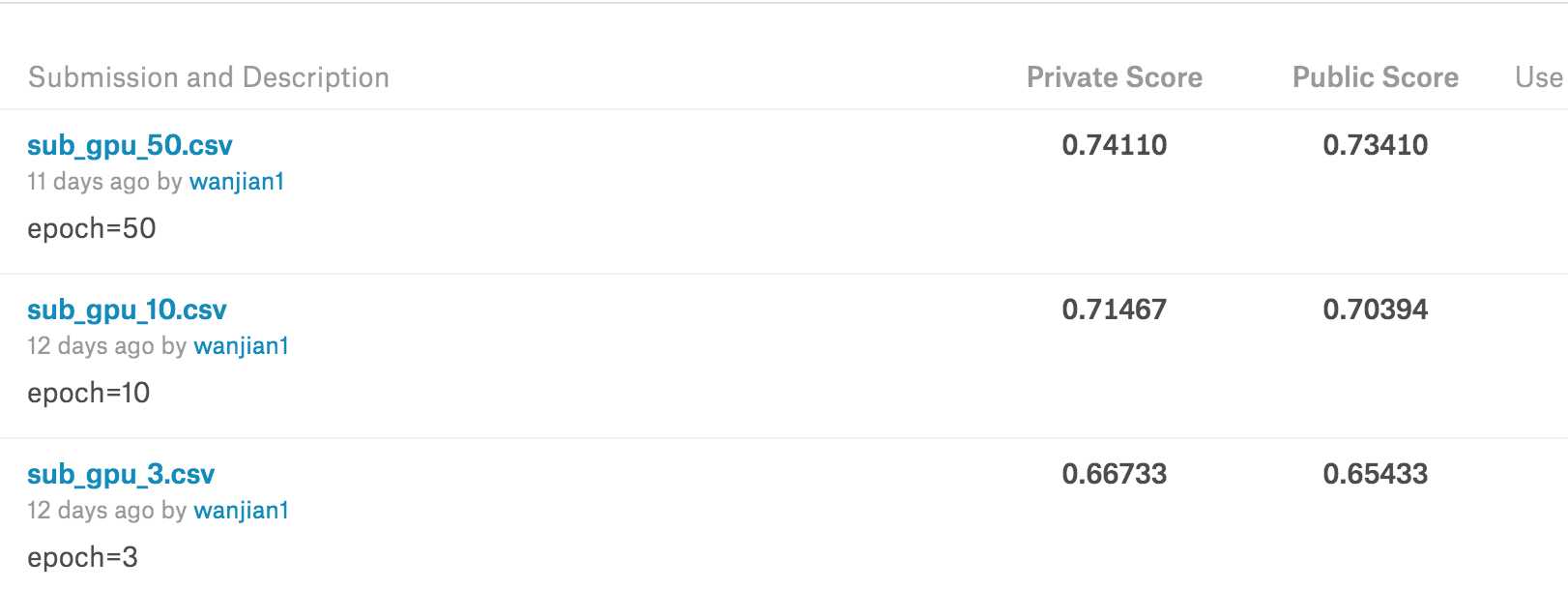
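Every per-epoch line in these logs follows the same `- <time>s - loss: <x> - val_loss: <y>` pattern, so the logs can be parsed to pick the epoch with the lowest validation loss or to average the epoch time. The following is a minimal sketch. The regexes assume exactly the line format shown in this post, and `parse_log` is a hypothetical helper, not part of any library. It also copes with a metrics line that has the next "Epoch" header fused onto it, as in the CPU log above.

```python
import re

# Matches lines like "- 48s - loss: 0.0246 - val_loss: 0.0219"
LINE_RE = re.compile(r"-\s*(\d+)s\s*-\s*loss:\s*([\d.]+)\s*-\s*val_loss:\s*([\d.]+)")
EPOCH_RE = re.compile(r"Epoch\s+(\d+)/(\d+)")


def parse_log(text):
    """Return (epoch, seconds, loss, val_loss) tuples from a training log."""
    rows, epoch = [], None
    for line in text.splitlines():
        # Read metrics first, so a fused "...val_loss: 0.0499Epoch 1/3" line
        # is credited to the current epoch before the header advances it.
        m = LINE_RE.search(line)
        if m and epoch is not None:
            rows.append((epoch, int(m.group(1)), float(m.group(2)), float(m.group(3))))
        m = EPOCH_RE.search(line)
        if m:
            epoch = int(m.group(1))
    return rows


# Excerpt copied from the 50-epoch log above (epochs 19 to 23)
SAMPLE = """
Epoch 19/50
- 50s - loss: 0.0270 - val_loss: 0.0249
Epoch 20/50
- 50s - loss: 0.0257 - val_loss: 0.0241
Epoch 21/50
- 48s - loss: 0.0256 - val_loss: 0.0255
Epoch 22/50
- 50s - loss: 0.0247 - val_loss: 0.0255
Epoch 23/50
- 48s - loss: 0.0246 - val_loss: 0.0219
"""

rows = parse_log(SAMPLE)
best = min(rows, key=lambda r: r[3])  # row with the lowest validation loss
avg_time = sum(r[1] for r in rows) / len(rows)
print(f"best epoch: {best[0]} (val_loss={best[3]})")
print(f"mean epoch time: {avg_time:.1f} s")
```

On this excerpt, the parser picks epoch 23 (val_loss 0.0219) and averages 49.2 s per epoch. The parsed rows also make it easy to see that val_loss stalled at 0.0255 for epochs 21 and 22 before dropping again.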

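The 50 epochs took a little over an hour, and the accuracy on Kaggle rose from 0.66 to 0.74. Next, I plan to tune other hyperparameters to push the accuracy further.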


Original article: https://www.cnblogs.com/wanli002/p/11211616.html