
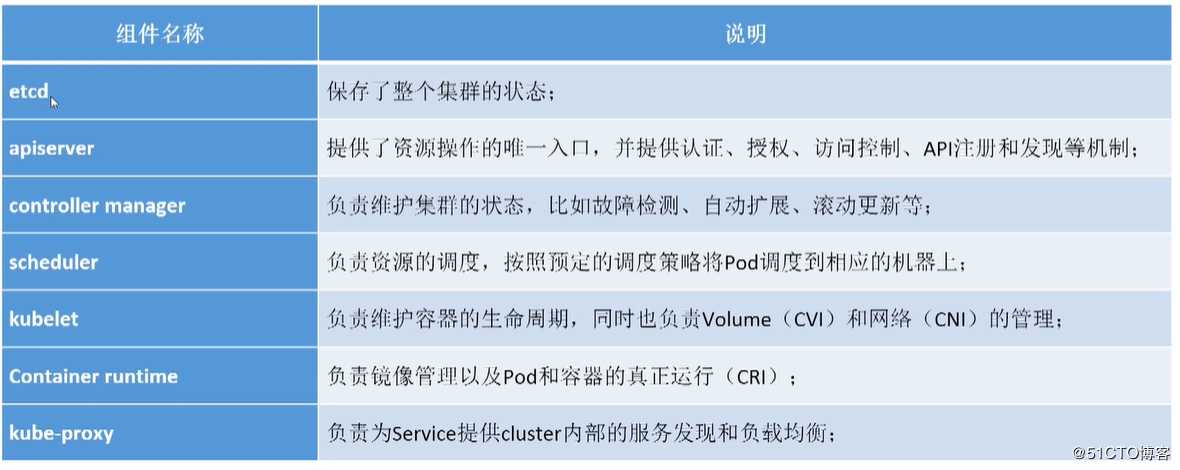

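1. Kubernetes introduction

1. Self-healing

2. Service discovery and load balancing

3. Automated deployment and rollback

4. Elastic scaling

1. Makes it easier to upgrade services and add new features

2. Supports better performance scaling

3. k8s elastic scaling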

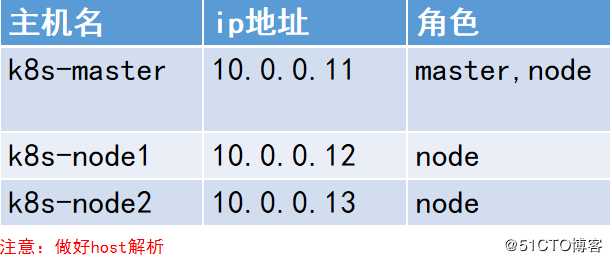

CentOS 7.4 is recommended; 7.5 has some problems.

[root@k8s-node1 ~]# cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

[root@k8s-node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.11 k8s-master

10.0.0.12 k8s-node1

10.0.0.13 k8s-node2
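The same three host entries are needed on every machine. A minimal sketch, assuming a hypothetical helper named `add_host` (not part of any tool used here), that appends an entry only when the hostname is not already present:

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already appears in it.
# Usage: add_host FILE IP NAME
add_host() {
    hosts_file=$1
    ip=$2
    name=$3
    # -F: literal match, -w: whole word, -q: quiet
    if ! grep -qwF -- "$name" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Example (run as root on each machine):
# add_host /etc/hosts 10.0.0.11 k8s-master
# add_host /etc/hosts 10.0.0.12 k8s-node1
# add_host /etc/hosts 10.0.0.13 k8s-node2
```

Running the helper twice with the same name leaves the file unchanged, so it is safe to repeat on a machine that is already configured.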

[root@k8s-master ~]# systemctl stop firewalld

[root@k8s-master ~]# systemctl disable firewalld

[root@k8s-master ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
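The file above shows the end state. One way to reach it without an editor is sketched below; `disable_selinux_in` is a hypothetical helper name. The config change only applies after a reboot, while `setenforce 0` switches a running system to permissive mode in the meantime.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file.
# The anchored pattern leaves the SELINUXTYPE= line and the comments untouched.
disable_selinux_in() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Example (as root):
# disable_selinux_in /etc/selinux/config
# setenforce 0   # permissive until the next reboot
```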

[root@k8s-master ~]# systemctl stop NetworkManager

[root@k8s-master ~]# systemctl disable NetworkManager

[root@k8s-master ~]# systemctl stop postfix

[root@k8s-master ~]# systemctl disable postfix

Removed symlink /etc/systemd/system/multi-user.target.wants/postfix.service.

[root@k8s-master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master ~]# yum install -y net-tools vim lrzsz wget tree screen lsof tcpdump

Install:

[root@k8s-master ~]# yum install etcd -y

[root@k8s-master ~]# vim /etc/etcd/etcd.conf

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"
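The two edits above can also be applied without opening an editor. A minimal sketch, assuming a hypothetical helper `set_conf_key` and assuming each key already exists in the file (possibly commented out):

```shell
# Hypothetical helper: set KEY="VALUE" in a shell-style config file,
# whether or not the existing line is commented out with a leading '#'.
# Usage: set_conf_key FILE KEY VALUE   (VALUE must not contain '|' or '&')
set_conf_key() {
    file=$1
    key=$2
    value=$3
    sed -i "s|^#\{0,1\}${key}=.*|${key}=\"${value}\"|" "$file"
}

# Example (as root on the master):
# set_conf_key /etc/etcd/etcd.conf ETCD_LISTEN_CLIENT_URLS http://0.0.0.0:2379
# set_conf_key /etc/etcd/etcd.conf ETCD_ADVERTISE_CLIENT_URLS http://10.0.0.11:2379
```

Matching on the key name rather than the line number keeps the edit valid if the packaged file layout differs from the one shown here.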

[root@k8s-master ~]# systemctl start etcd.service

[root@k8s-master ~]# systemctl enable etcd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

Test:

[root@k8s-master ~]# etcdctl set testdir/testkey0 0

0

[root@k8s-master ~]# etcdctl get testdir/testkey0

0

[root@k8s-master ~]# etcdctl -C http://10.0.0.11:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://10.0.0.11:2379

cluster is healthy

[root@k8s-master ~]#

# Port 2379 serves client requests; k8s writes its data to etcd through this port

# Port 2380 is used for data synchronization between etcd cluster members
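To see which addresses etcd is actually bound to, the `netstat -antlp` output can be filtered. A small sketch, assuming a hypothetical helper `etcd_listeners` that reads that output on standard input:

```shell
# Hypothetical helper: print the local address of every LISTEN socket owned by etcd.
# Expects `netstat -antlp` output on stdin, where column 6 is the state
# and column 7 is PID/program name.
etcd_listeners() {
    awk '$6 == "LISTEN" && $7 ~ /\/etcd$/ { print $4 }'
}

# Example (as root, so that program names are shown):
# netstat -antlp | etcd_listeners
```

In the transcript that follows, the client port 2379 is bound on all interfaces while the peer port 2380 is bound to 127.0.0.1 only, which is sufficient for a single-member etcd.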

[root@k8s-master ~]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:6379 0.0.0.0:* LISTEN 1416/redis-server 1

tcp 0 0 127.0.0.1:2380 0.0.0.0:* LISTEN 1771/etcd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1085/sshd

tcp 0 0 127.0.0.1:9002 0.0.0.0:* LISTEN 1449/python

tcp 0 0 0.0.0.0:3306 0.0.0.0:* LISTEN 1372/mysqld

tcp 0 0 127.0.0.1:54748 127.0.0.1:2379 ESTABLISHED 1771/etcd

tcp 0 0 10.0.0.11:33926 10.0.0.11:2379 TIME_WAIT -

tcp 0 0 10.0.0.11:33924 10.0.0.11:2379 TIME_WAIT -

tcp 0 0 10.0.0.11:22 10.0.0.1:3116 ESTABLISHED 1480/sshd: root@pts

tcp 0 52 10.0.0.11:22 10.0.0.1:4765 ESTABLISHED 1698/sshd: root@pts

tcp6 0 0 :::2379 :::* LISTEN 1771/etcd

tcp6 0 0 :::80 :::* LISTEN 1428/httpd

tcp6 0 0 :::22 :::* LISTEN 1085/sshd

tcp6 0 0 127.0.0.1:2379 127.0.0.1:54748 ESTABLISHED 1771/etcd

[root@k8s-master ~]# yum install kubernetes-master.x86_64 -y

# apiserver configuration: whichever server runs the apiserver is the master node

# The settings below are the ones that were changed

[root@k8s-master ~]# vim /etc/kubernetes/apiserver

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379"

# controller-manager, scheduler, and proxy share one configuration file

[root@k8s-master ~]# vim /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.0.0.11:8080"

[root@k8s-master ~]# systemctl start kube-apiserver.service

[root@k8s-master ~]# systemctl start kube-controller-manager.service

[root@k8s-master ~]# systemctl start kube-scheduler.service

[root@k8s-master ~]# systemctl enable kube-apiserver.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@k8s-master ~]# systemctl enable kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s-master ~]# systemctl enable kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@k8s-master ~]#

#1. Install the node package on every node

yum install kubernetes-node.x86_64 -y

#2. Edit the configuration file on node1 and node2; the master is already configured and needs no change

[root@k8s-node1 ~]# vim /etc/kubernetes/config

[root@k8s-node2 ~]# vim /etc/kubernetes/config

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.0.0.11:8080"

#3. Edit the kubelet configuration file on the master

[root@k8s-master ~]# cat /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=10.0.0.11"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=k8s-master"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

[root@k8s-master ~]#

[root@k8s-master ~]# systemctl start kubelet.service

[root@k8s-master ~]# systemctl start kube-proxy.service

[root@k8s-master ~]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-master ~]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-master ~]#

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

k8s-master Ready 1m

#4. node1

[root@k8s-node1 ~]# cat /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=10.0.0.12"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=k8s-node1"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

[root@k8s-node1 ~]# systemctl start kubelet.service

[root@k8s-node1 ~]# systemctl start kube-proxy.service

[root@k8s-node1 ~]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-node1 ~]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-node1 ~]#

#5. node2

[root@k8s-node2 ~]# cat /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=10.0.0.13"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=k8s-node2"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

[root@k8s-node2 ~]# systemctl start kubelet.service

[root@k8s-node2 ~]# systemctl start kube-proxy.service

[root@k8s-node2 ~]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-node2 ~]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

#6. Check from the master node

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

k8s-master Ready 8m

k8s-node1 Ready 2m

k8s-node2 Ready 3m

[root@k8s-master ~]#
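Nodes can take a short while to register after kubelet starts. A minimal sketch, assuming a hypothetical helper `ready_nodes`, that counts the nodes reported as `Ready` so a script can wait for all three:

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready",
# reading `kubectl get nodes` output on stdin (the header line is skipped).
ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Example: block until all three machines have registered.
# until [ "$(kubectl get nodes | ready_nodes)" -eq 3 ]; do sleep 2; done
```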

#1. Install flannel on all nodes

yum install flannel -y

#2. Edit the configuration file on the master

[root@k8s-master ~]# sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

[root@k8s-master ~]# cat /etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.11:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass

#FLANNEL_OPTIONS=""

#2.1 On the master, set the IP address range for containers

[root@k8s-master ~]# etcdctl mk /atomic.io/network/config '{ "Network": "172.16.0.0/16" }'

{ "Network": "172.16.0.0/16" }

#2.2 Restart the services on the master node

[root@k8s-master ~]# systemctl start flanneld.service

[root@k8s-master ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@k8s-master ~]#

[root@k8s-master ~]# service docker restart

Redirecting to /bin/systemctl restart docker.service

[root@k8s-master ~]# systemctl restart kube-apiserver.service

[root@k8s-master ~]# systemctl restart kube-controller-manager.service

[root@k8s-master ~]# systemctl restart kube-scheduler.service

[root@k8s-master ~]#

#2.3 Check the docker subnet on the master

[root@k8s-master ~]# ifconfig

# This interface is in the 172.16 network

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.16.93.1 netmask 255.255.255.0 broadcast 0.0.0.0

ether 02:42:35:9d:71:e8 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker_gwbridge: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

ether 02:42:e8:6b:f7:fe txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3 bytes 144 (144.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.11 netmask 255.255.255.0 broadcast 10.0.0.255

inet6 fe80::20c:29ff:fee1:5b21 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:e1:5b:21 txqueuelen 1000 (Ethernet)

RX packets 70391 bytes 88495191 (84.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 36010 bytes 5584671 (5.3 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.16.1.11 netmask 255.255.255.0 broadcast 172.16.1.255

inet6 fe80::20c:29ff:fee1:5b2b prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:e1:5b:2b txqueuelen 1000 (Ethernet)

RX packets 42 bytes 2520 (2.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 12 bytes 868 (868.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# This interface is in the 172.16 network

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 172.16.93.0 netmask 255.255.0.0 destination 172.16.93.0

inet6 fe80::32f2:2a0e:a07e:990d prefixlen 64 scopeid 0x20<link>

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3 bytes 144 (144.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 90828 bytes 43054764 (41.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 90828 bytes 43054764 (41.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@k8s-master ~]#

#3. Node machines:

[root@k8s-node1 ~]# sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

[root@k8s-node1 ~]# systemctl start flanneld.service

[root@k8s-node1 ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@k8s-node1 ~]# systemctl restart docker.service

[root@k8s-node1 ~]# systemctl restart kubelet.service

[root@k8s-node1 ~]# systemctl restart kube-proxy.service

[root@k8s-node1 ~]#

[root@k8s-node2 ~]# sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

[root@k8s-node2 ~]# systemctl start flanneld.service

[root@k8s-node2 ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@k8s-node2 ~]# systemctl restart docker.service

[root@k8s-node2 ~]# systemctl restart kube-proxy.service

[root@k8s-node2 ~]# systemctl restart kubelet.service

[root@k8s-node2 ~]#

#4. Test connectivity between containers on different hosts

#4.1 On all nodes

[root@k8s-master ~]# docker pull busybox

[root@k8s-node1 ~]# docker pull busybox

[root@k8s-node2 ~]# docker pull busybox

Use Xshell's "send text to all sessions" feature to run the following on all nodes at once

[root@k8s-master ~]# docker run -it busybox

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:10:5D:02

inet addr:172.16.93.2 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fe80::42:acff:fe10:5d02/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1472 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1296 (1.2 KiB) TX bytes:648 (648.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

#4.2 Ping between containers on different nodes

/ #

/ # ping 172.16.79.2

PING 172.16.79.2 (172.16.79.2): 56 data bytes

^C

--- 172.16.79.2 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

/ # ping 172.16.48.2

PING 172.16.48.2 (172.16.48.2): 56 data bytes

^C

--- 172.16.48.2 ping statistics ---

11 packets transmitted, 0 packets received, 100% packet loss

/ #

#4.3 Fix for containers being unable to ping each other:

The cause is that Docker 1.13 sets the iptables FORWARD chain policy to DROP

[root@k8s-master ~]# iptables -P FORWARD ACCEPT

[root@k8s-node1 ~]# iptables -P FORWARD ACCEPT

[root@k8s-node2 ~]# iptables -P FORWARD ACCEPT

# Edit the unit file so the change persists; add the line on all three servers

vim /usr/lib/systemd/system/docker.service

Environment=PATH=/usr/libexec/docker:/usr/bin:/usr/sbin

# The line to add

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

# When done, reload systemd

systemctl daemon-reload
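Files under `/usr/lib/systemd/system` belong to the package and may be overwritten by an update. An alternative sketch, which is not what the steps above do, places the same `ExecStartPost` line in a systemd drop-in instead. The helper name `write_forward_dropin` and the file name `forward-accept.conf` are assumptions chosen for illustration.

```shell
# Hypothetical helper: write a systemd drop-in that resets the FORWARD policy
# each time docker starts. DIR is normally /etc/systemd/system/docker.service.d
write_forward_dropin() {
    dropin_dir=$1
    mkdir -p "$dropin_dir"
    cat > "$dropin_dir/forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}

# Example (as root on each server):
# write_forward_dropin /etc/systemd/system/docker.service.d
# systemctl daemon-reload
```

The drop-in is merged with the packaged unit, so the original `docker.service` file stays unmodified.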

#4.4 Try again

--- 172.16.48.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.828/0.888/0.949 ms

/ # ping 172.16.79.2

PING 172.16.79.2 (172.16.79.2): 56 data bytes

64 bytes from 172.16.79.2: seq=0 ttl=60 time=0.877 ms

^C

--- 172.16.79.2 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.877/0.877/0.877 ms

/ #

#1. Write the YAML file

vi nginx_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: nginx:1.13
      ports:
        - containerPort: 80

#2. Create the pod

#2.1 Error 1

[root@k8s-master k8s]# kubectl create -f nginx_pod.yaml

Error from server (ServerTimeout): error when creating "nginx_pod.yaml": No API token found for service account "default", retry after the token is automatically created and added to the service account

Fix: remove ServiceAccount from the admission controllers

[root@k8s-master k8s]# vim /etc/kubernetes/apiserver

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

systemctl restart kube-apiserver.service
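The same edit can be scripted. A minimal sketch, assuming a hypothetical helper `drop_admission_plugin` and assuming the file contains a single `KUBE_ADMISSION_CONTROL=` line with a comma-separated plugin list:

```shell
# Hypothetical helper: remove one plugin from the comma-separated list on the
# KUBE_ADMISSION_CONTROL= line, whether it is first, in the middle, or last.
# Usage: drop_admission_plugin FILE PLUGIN
drop_admission_plugin() {
    file=$1
    plugin=$2
    sed -i "/^KUBE_ADMISSION_CONTROL=/{s/=${plugin},/=/;s/,${plugin}\([,\"]\)/\1/;}" "$file"
}

# Example (as root on the master):
# drop_admission_plugin /etc/kubernetes/apiserver ServiceAccount
# systemctl restart kube-apiserver.service
```

Dropping ServiceAccount is a shortcut that suits a lab cluster like this one: pods then start without an API token. Clusters that need tokens would instead configure a service-account signing key on the apiserver and controller-manager.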

#2.2 Create the pod again

[root@k8s-master k8s]# kubectl create -f nginx_pod.yaml

pod "nginx" created

[root@k8s-master k8s]#

#2.3 Check the created pod

[root@k8s-master k8s]#

[root@k8s-master k8s]# kubectl get nodes

NAME STATUS AGE

k8s-master Ready 1h

k8s-node1 Ready 1h

k8s-node2 Ready 1h

[root@k8s-master k8s]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

[root@k8s-master k8s]#

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 55s

[root@k8s-master k8s]# kubectl get pod nginx

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 2m

[root@k8s-master k8s]#

# The pod stays in the ContainerCreating state

#2.4 Inspect the pod's error messages (error 2)

[root@k8s-master k8s]# kubectl describe pod nginx

Name: nginx

Namespace: default

Node: k8s-node2/10.0.0.13

Start Time: Wed, 28 Aug 2019 15:43:09 +0800

Labels: app=web

Status: Pending

IP:

Controllers: <none>

Containers:

nginx:

Container ID:

Image: nginx:1.13

Image ID:

Port: 80/TCP

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready False

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

3m 3m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to k8s-node2

2m 15s 10 {kubelet k8s-node2} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

3m 3s 5 {kubelet k8s-node2} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

[root@k8s-master k8s]#

#2.5 Fix

The output above shows that the pod was scheduled to node2

Search for the pod-infrastructure image and pick the second result

[root@k8s-node2 ~]# docker search pod-infrastructure

INDEX NAME DESCRIPTION STARS OFFICIAL AUTOMATED

docker.io docker.io/neurons/pod-infrastructure k8s pod 基础容器镜像 2

docker.io docker.io/tianyebj/pod-infrastructure registry.access.redhat.com/rhel7/pod-infra... 2

docker.io docker.io/w564791/pod-infrastructure latest 1

[root@k8s-node2 ~]# vim /etc/kubernetes/kubelet

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=docker.io/tianyebj/pod-infrastructure:latest"

[root@k8s-node2 ~]# systemctl restart kubelet.service

#2.6 Wait a moment and check again

You can also look in the temporary image download directory to see whether a pull is in progress

[root@k8s-master k8s]# ls /var/lib/docker/tmp/

# In my case the pull already succeeded. If it fails, pull the image on a machine with Internet access, then use docker save and docker load to import it
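The save-and-load route can be sketched as follows. The helper names `export_image` and `import_image` and the tarball path in the example are assumptions for illustration; copying the tarball between machines (for example with `scp`) is left out.

```shell
# Hypothetical helpers around `docker save` and `docker load`.

# export_image IMAGE TARFILE: run on a machine that can reach the registry.
export_image() {
    docker pull "$1" && docker save -o "$2" "$1"
}

# import_image TARFILE: run on the isolated node after copying the tarball over.
import_image() {
    docker load -i "$1"
}

# Example:
# export_image docker.io/tianyebj/pod-infrastructure:latest /tmp/pod-infra.tar
# import_image /tmp/pod-infra.tar
```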

[root@k8s-master k8s]# kubectl describe pod nginx

Name: nginx

Namespace: default

Node: k8s-node2/10.0.0.13

Start Time: Wed, 28 Aug 2019 15:43:09 +0800

Labels: app=web

Status: Running

IP: 172.16.48.2

Controllers: <none>

Containers:

nginx:

Container ID: docker://fcdfa71abdb58c10a40b81b3546545253e13b5aafe63d458008da4d8d96b0623

Image: nginx:1.13

Image ID: docker-pullable://docker.io/nginx@sha256:b1d09e9718890e6ebbbd2bc319ef1611559e30ce1b6f56b2e3b479d9da51dc35

Port: 80/TCP

State: Running

Started: Wed, 28 Aug 2019 15:56:36 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

16m 16m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to k8s-node2

16m 10m 6 {kubelet k8s-node2} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

15m 5m 45 {kubelet k8s-node2} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

3m 3m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Pulling pulling image "nginx:1.13"

3m 2m 2 {kubelet k8s-node2} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

2m 2m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Pulled Successfully pulled image "nginx:1.13"

2m 2m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Created Created container with docker id fcdfa71abdb5; Security:[seccomp=unconfined]

2m 2m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Started Started container with docker id fcdfa71abdb5

[root@k8s-master k8s]#

#2.7 On node2, check the pulled image docker.io/tianyebj/pod-infrastructure

[root@k8s-node2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.63/library/busybox latest db8ee88ad75f 5 weeks ago 1.22 MB

docker.io/busybox latest db8ee88ad75f 5 weeks ago 1.22 MB

docker.io/nginx 1.13 ae513a47849c 16 months ago 109 MB

docker.io/tianyebj/pod-infrastructure latest 34d3450d733b 2 years ago 205 MB

docker.io/progrium/consul latest 09ea64205e55 4 years ago 69.4 MB

[root@k8s-node2 ~]#

#3. Delete the pod

[root@k8s-master k8s]# kubectl delete pod nginx

pod "nginx" deleted

[root@k8s-master k8s]# kubectl get pods

No resources found.

[root@k8s-master k8s]#

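#4. Create a private registry to save traffic and bandwidth

In production, Harbor can be used as the private registry

#4.1 Pull the registry image on the master node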

[root@k8s-master k8s]# docker pull registry

Using default tag: latest

Trying to pull repository docker.io/library/registry ...

latest: Pulling from docker.io/library/registry

Digest: sha256:8004747f1e8cd820a148fb7499d71a76d45ff66bac6a29129bfdbfdc0154d146

Status: Image is up to date for docker.io/registry:latest

#4.2 Start the private registry on the master node

[root@k8s-master k8s]# docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

c60f03647fa50c1f515b90b92ea3aa54ba8c6fe46c5ae166bd88a9154db64568

[root@k8s-master k8s]#

[root@k8s-master k8s]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c60f03647fa5 registry "/entrypoint.sh /e..." About a minute ago Up About a minute 0.0.0.0:5000->5000/tcp registry

50ad15d96e3b busybox "sh" About an hour ago Exited (0) 55 minutes ago quizzical_murdock

[root@k8s-master k8s]#

#4.2 On node2, push the pod-infrastructure image that was just pulled to the private registry

[root@k8s-node2 ~]# docker tag docker.io/tianyebj/pod-infrastructure 10.0.0.11:5000/pod-infrastructure:latest

[root@k8s-node2 ~]# docker push 10.0.0.11:5000/pod-infrastructure:latest

The push refers to a repository [10.0.0.11:5000/pod-infrastructure

Get https://10.0.0.11:5000/v1/_ping: http: server gave HTTP response to HTTPS client

# Configure the registry mirror and trust the private registry

[root@k8s-node1 ~]# cat /etc/sysconfig/docker

# /etc/sysconfig/docker

# Modify these options if you want to change the way the docker daemon runs

#OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false'

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.11:5000'

[root@k8s-node1 ~]# systemctl restart docker.service

[root@k8s-node2 ~]# docker push 10.0.0.11:5000/pod-infrastructure:ltest

The push refers to a repository [10.0.0.11:5000/pod-infrastructure]

ba3d4cbbb261: Pushed

0a081b45cb84: Pushed

df9d2808b9a9: Pushed

ltest: digest: sha256:a378b2d7a92231ffb07fdd9dbd2a52c3c439f19c8d675a0d8d9ab74950b15a1b size: 948

[root@k8s-node2 ~]#

# The nginx image is needed too, so push it to the private registry as well

[root@k8s-node2 ~]# docker tag docker.io/nginx:1.13 10.0.0.11:5000/nginx:1.13

[root@k8s-node2 ~]# docker push 10.0.0.11:5000/nginx:1.13

The push refers to a repository [10.0.0.11:5000/nginx]

7ab428981537: Pushed

82b81d779f83: Pushed

d626a8ad97a1: Pushed

1.13: digest: sha256:e4f0474a75c510f40b37b6b7dc2516241ffa8bde5a442bde3d372c9519c84d90 size: 948
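To confirm that both pushes reached the registry, its HTTP API can be queried. A minimal sketch, assuming a hypothetical helper `registry_catalog` and a registry served over plain HTTP as configured here; the `/v2/_catalog` endpoint returns a JSON document with a `repositories` array.

```shell
# Hypothetical helper: list the repositories held by a Docker Registry v2
# instance served over plain HTTP. Usage: registry_catalog HOST:PORT
registry_catalog() {
    curl -s "http://$1/v2/_catalog"
}

# Example:
# registry_catalog 10.0.0.11:5000
# Tags of one repository:
# curl -s http://10.0.0.11:5000/v2/nginx/tags/list
```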

#4.3 Configure all nodes

# Configure the registry mirror and trust the private registry

[root@k8s-node1 ~]# cat /etc/sysconfig/docker

# /etc/sysconfig/docker

# Modify these options if you want to change the way the docker daemon runs

#OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false'

OPTIONS='--selinux-enabled --log-driver=journald --signature-verification=false --registry-mirror=https://registry.docker-cn.com --insecure-registry=10.0.0.11:5000'

[root@k8s-node1 ~]# systemctl restart docker.service

# Configure kubelet to pull pod-infrastructure from the private registry

[root@k8s-node1 ~]# cat /etc/kubernetes/kubelet

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/pod-infrastructure:latest"

[root@k8s-node1 ~]# systemctl restart kubelet.service

#4.4 Check the pod status on the master node; it now succeeds

[root@k8s-master k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 35m

[root@k8s-master k8s]#

[root@k8s-master k8s]# kubectl describe pod nginx

Name: nginx

Namespace: default

Node: k8s-node1/10.0.0.12

Start Time: Wed, 28 Aug 2019 16:14:30 +0800

Labels: app=web

Status: Running

IP: 172.16.79.2

Controllers: <none>

Containers:

nginx:

Container ID: docker://13374ea686595b25b07bb1b2a5228c58bd818455c42e2344bcb200990a94ec69

Image: nginx:1.13

Image ID: docker-pullable://docker.io/nginx@sha256:b1d09e9718890e6ebbbd2bc319ef1611559e30ce1b6f56b2e3b479d9da51dc35

Port: 80/TCP

State: Running

Started: Wed, 28 Aug 2019 16:44:13 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

30m 30m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to k8s-node1

30m 20m 7 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

30m 17m 56 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

16m 16m 3 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

16m 15m 3 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

15m 12m 5 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "Error: image pod-infrastructure:latest not found"

14m 9m 22 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"10.0.0.11:5000/pod-infrastructure:latest\""

2m 2m 1 {kubelet k8s-node1} spec.containers{nginx} Warning Failed Failed to pull image "nginx:1.13": net/http: request canceled

2m 2m 1 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "nginx" with ErrImagePull: "net/http: request canceled"

2m 2m 1 {kubelet k8s-node1} spec.containers{nginx} Normal BackOff Back-off pulling image "nginx:1.13"

2m 2m 1 {kubelet k8s-node1} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "nginx" with ImagePullBackOff: "Back-off pulling image \"nginx:1.13\""

9m 2m 2 {kubelet k8s-node1} spec.containers{nginx} Normal Pulling pulling image "nginx:1.13"

9m 1m 2 {kubelet k8s-node1} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

1m 1m 1 {kubelet k8s-node1} spec.containers{nginx} Normal Pulled Successfully pulled image "nginx:1.13"

1m 1m 1 {kubelet k8s-node1} spec.containers{nginx} Normal Created Created container with docker id 13374ea68659; Security:[seccomp=unconfined]

1m 1m 1 {kubelet k8s-node1} spec.containers{nginx} Normal Started Started container with docker id 13374ea68659


#5. Create a pod from the private registry

[root@k8s-master k8s]# cat nginx_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80

[root@k8s-master k8s]#

# The pod is created very quickly

[root@k8s-master k8s]# kubectl create -f nginx_pod.yaml

pod "test" created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 45m

test 1/1 Running 0 15s

[root@k8s-master k8s]# kubectl describe pod test

Name: test

Namespace: default

Node: k8s-node2/10.0.0.13

Start Time: Wed, 28 Aug 2019 16:59:30 +0800

Labels: app=web

Status: Running

IP: 172.16.48.2

Controllers: <none>

Containers:

nginx:

Container ID: docker://964ab40f1fdf91755e7510d5bf497b50181957e5d4eba51ff04a05899d5fbf11

Image: 10.0.0.11:5000/nginx:1.13

Image ID: docker-pullable://10.0.0.11:5000/nginx@sha256:e4f0474a75c510f40b37b6b7dc2516241ffa8bde5a442bde3d372c9519c84d90

Port: 80/TCP

State: Running

Started: Wed, 28 Aug 2019 16:59:30 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

2m 2m 1 {default-scheduler } Normal Scheduled Successfully assigned test to k8s-node2

2m 2m 2 {kubelet k8s-node2} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

2m 2m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Pulled Container image "10.0.0.11:5000/nginx:1.13" already present on machine

2m 2m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Created Created container with docker id 964ab40f1fdf; Security:[seccomp=unconfined]

2m 2m 1 {kubelet k8s-node2} spec.containers{nginx} Normal Started Started container with docker id 964ab40f1fdf

[root@k8s-master k8s]#

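Creating one pod resource in k8s makes docker start two containers: the business container (nginx) and the base pod (infrastructure) container.

#1. View pod information

The output shows each pod's IP and the node it runs on.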

[root@k8s-master k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 0 58m 172.16.79.2 k8s-node1

test 1/1 Running 0 13m 172.16.48.2 k8s-node2

[root@k8s-master k8s]#

#2. Access the pod's IP

[root@k8s-master k8s]# curl -I 172.16.79.2

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Wed, 28 Aug 2019 09:13:37 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

[root@k8s-master k8s]#

#3. View the containers on node1 and node2

[root@k8s-node1 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

13374ea68659 nginx:1.13 "nginx -g 'daemon ..." 31 minutes ago Up 31 minutes k8s_nginx.78d00b5_nginx_default_dc2f032c-c96b-11e9-b75c-000c29e15b21_35d7190a

5289d7fdf594 10.0.0.11:5000/pod-infrastructure:latest "/pod" 39 minutes ago Up 39 minutes k8s_POD.177f01b0_nginx_default_dc2f032c-c96b-11e9-b75c-000c29e15b21_ee1e6ad2

[root@k8s-node2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

964ab40f1fdf 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 15 minutes ago Up 15 minutes k8s_nginx.91390390_test_default_259f45ce-c972-11e9-b75c-000c29e15b21_7e2518c4

ad6f45f152f8 10.0.0.11:5000/pod-infrastructure:latest "/pod" 15 minutes ago Up 15 minutes k8s_POD.177f01b0_test_default_259f45ce-c972-11e9-b75c-000c29e15b21_a7cfa831

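#4. Check the IPs

# The pod (infrastructure) container has an IP; the nginx container has none.

# The nginx container's network mode is "container", so it shares the pod container's IP address.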

[root@k8s-node1 ~]# docker inspect 5289d7fdf594|grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "172.16.79.2",

"IPAddress": "172.16.79.2",

[root@k8s-node1 ~]# docker inspect 13374ea68659|grep -i network

"NetworkMode": "container:5289d7fdf594594340f4d82d58f6e9173880c11247862d402f853ee936bbcd6a",

"NetworkSettings": {

"Networks": {}

[root@k8s-node1 ~]#
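
To make the relationship explicit, here is a minimal Python sketch that reads a NetworkMode value like the one printed above and reports which container owns the network namespace. The function name network_owner is hypothetical; the input string is copied from the docker inspect output in this step.

```python
from typing import Optional


def network_owner(network_mode: str) -> Optional[str]:
    """Return the ID of the container whose network is shared, if any.

    Docker reports "container:<id>" when a container joins another
    container's network namespace. Any other mode (for example
    "default" or "host") means no peer container's network is borrowed.
    """
    prefix = "container:"
    if network_mode.startswith(prefix):
        return network_mode[len(prefix):]
    return None


# Value copied from the `docker inspect 13374ea68659` output above.
nginx_mode = (
    "container:"
    "5289d7fdf594594340f4d82d58f6e9173880c11247862d402f853ee936bbcd6a"
)

owner = network_owner(nginx_mode)
# The first 12 characters are the short ID shown by `docker ps`.
print(owner[:12] if owner else "no shared network")
```

The short ID it prints should be the pod-infrastructure container listed by docker ps on node1, which is why only that container reports an IPAddress.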

kubectl describe pod test

kubectl create -f nginx_pod.yaml

kubectl get pods

kubectl delete pod test2

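kubectl delete pod test2 --force --grace-period=0

#1. Write the pod.yaml file

imagePullPolicy: IfNotPresent means that when the image already exists on the node, it is used as-is and is not pulled from or checked against the registry (Docker Hub here), which saves time.

This pod defines two containers.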

[root@k8s-master k8s]# cat nginx_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test3
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
    - name: busybox
      image: docker.io/busybox:latest
      imagePullPolicy: IfNotPresent
      command: ["sleep","3600"]
      ports:
        - containerPort: 80

[root@k8s-master k8s]#
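
The pull decision can be sketched in a few lines of Python. This is a simplified model, not kubelet code: the function names default_pull_policy and should_pull are hypothetical, and image references that use a digest are not treated specially. It also shows why the busybox container above sets the policy explicitly: with a :latest tag the default would be Always.

```python
def default_pull_policy(image: str) -> str:
    """Policy Kubernetes applies when imagePullPolicy is omitted."""
    # Look only at the last path component so that a registry port
    # such as "10.0.0.11:5000" is not mistaken for a tag.
    last = image.rsplit("/", 1)[-1]
    tag = last.split(":", 1)[1] if ":" in last else "latest"
    return "Always" if tag == "latest" else "IfNotPresent"


def should_pull(policy: str, present_locally: bool) -> bool:
    """Decide whether the image is pulled before the container starts."""
    if policy == "Always":
        return True
    if policy == "IfNotPresent":
        return not present_locally
    if policy == "Never":
        return False
    raise ValueError(f"unknown imagePullPolicy: {policy}")


for image in ("docker.io/busybox:latest", "10.0.0.11:5000/nginx:1.13"):
    print(image, "->", default_pull_policy(image))

# busybox with the explicit policy from the manifest, image already on the node
print(should_pull("IfNotPresent", present_locally=True))
```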

#2. Create the pod

[root@k8s-master k8s]# kubectl create -f nginx_pod.yaml

pod "test3" created

#3. The pod is ready quickly

[root@k8s-master k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 1h

test 1/1 Running 0 42m

test2 2/2 Running 0 4m

test3 2/2 Running 0 21s

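#4. Three new containers have been created: one pod (infrastructure) container and two business containers; the business containers share the pod container's network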

[root@k8s-node2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6b3a5f49a2ef docker.io/busybox:latest "sleep 3600" 5 minutes ago Up 5 minutes k8s_busybox.268a09f9_test3_default_fa1e1433-c977-11e9-b75c-000c29e15b21_65079326

5c123da070d9 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 5 minutes ago Up 5 minutes k8s_nginx.91390390_test3_default_fa1e1433-c977-11e9-b75c-000c29e15b21_2af6be9b

3b0017bbfdbb 10.0.0.11:5000/pod-infrastructure:latest "/pod" 5 minutes ago Up 5 minutes k8s_POD.1597245b_test3_default_fa1e1433-c977-11e9-b75c-000c29e15b21_f2c362e9

964ab40f1fdf 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 47 minutes ago Up 47 minutes k8s_nginx.91390390_test_default_259f45ce-c972-11e9-b75c-000c29e15b21_7e2518c4

ad6f45f152f8 10.0.0.11:5000/pod-infrastructure:latest "/pod" 47 minutes ago Up 47 minutes k8s_POD.177f01b0_test_default_259f45ce-c972-11e9-b75c-000c29e15b21_a7cfa831

599b05a0a368 busybox "sh" 2 hours ago Exited (0) 2 hours ago gracious_brattain

4ace81ed1bf0 progrium/consul "/bin/start -serve..." 8 hours ago Exited (1) 7 hours ago consul

[root@k8s-node2 ~]#

#5. Delete a pod

[root@k8s-master k8s]# kubectl delete pod test2

pod "test2" deleted

If the pod cannot be deleted, force the deletion

[root@k8s-master k8s]# kubectl delete pod test2 --force --grace-period=0

[root@k8s-master k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 1h

test 1/1 Running 0 52m

test3 2/2 Running 0 10m

#6. View the help information

[root@k8s-master k8s]# kubectl explain pod.spec.containers

RESOURCE: containers <[]Object>

DESCRIPTION:

List of containers belonging to the pod. Containers cannot currently be

added or removed. There must be at least one container in a Pod. Cannot be

updated. More info: http://kubernetes.io/docs/user-guide/containers

A single application container that you want to run within a pod.

FIELDS:

image <string>

Docker image name. More info: http://kubernetes.io/docs/user-guide/images

name <string> -required-

Name of the container specified as a DNS_LABEL. Each container in a pod

must have a unique name (DNS_LABEL). Cannot be updated.

readinessProbe <Object>

Periodic probe of container service readiness. Container will be removed

from service endpoints if the probe fails. Cannot be updated. More info:

http://kubernetes.io/docs/user-guide/pod-states#container-probes

stdin <boolean>

Whether this container should allocate a buffer for stdin in the container

runtime. If this is not set, reads from stdin in the container will always

result in EOF. Default is false.

args <[]string>

Arguments to the entrypoint. The docker image's CMD is used if this is not

provided. Variable references $(VAR_NAME) are expanded using the container's

environment. If a variable cannot be resolved, the reference in the input

string will be unchanged. The $(VAR_NAME) syntax can be escaped with a

double $$, ie: $$(VAR_NAME). Escaped references will never be expanded,

regardless of whether the variable exists or not. Cannot be updated. More

info:

http://kubernetes.io/docs/user-guide/containers#containers-and-commands

env <[]Object>

List of environment variables to set in the container. Cannot be updated.

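Once an application is hosted on Kubernetes, Kubernetes has to keep it running continuously. That is the job of the RC (ReplicationController): it ensures that the specified number of pods is running in Kubernetes at all times.

On top of that, the RC provides more advanced features such as rolling upgrades and upgrade rollback.

A rolling upgrade is a smooth, gradual way to upgrade: pods are replaced step by step so that the system as a whole stays stable, and problems can be found and corrected early in the upgrade before their impact spreads. The rolling upgrade command in Kubernetes is: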

$ kubectl rolling-update myweb -f nginx-rc2.yaml --update-period=10s

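When the upgrade starts, Kubernetes first creates the V2 RC from the supplied definition file, then every 10s (--update-period=10s) increases the number of V2 pod replicas and decreases the number of V1 pod replicas. When the upgrade completes, the V1 RC is deleted and the V2 RC is kept, which completes the rolling upgrade.

If an error makes the upgrade exit midway, you can choose to continue it: Kubernetes determines the state at the point of interruption and resumes from there. You can also roll back with the following command: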

$ kubectl rolling-update myweb myweb2 --update-period=10s --rollback

#1. Edit nginx-rc.yaml

vi nginx-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 2
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
        - name: myweb
          image: 10.0.0.11:5000/nginx:1.13
          ports:
            - containerPort: 80

#2. Create the RC

[root@k8s-master rc]# kubectl create -f nginx-rc.yaml

replicationcontroller "myweb" created

#3. Check the RC: it has started two pods

[root@k8s-master rc]# kubectl get rc

NAME DESIRED CURRENT READY AGE

myweb 2 2 2 16s

[root@k8s-master rc]#

[root@k8s-master rc]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-5wnfl 1/1 Running 0 1m

myweb-qs10q 1/1 Running 0 1m

#4. Delete one pod: a replacement is started immediately

[root@k8s-master rc]# kubectl delete pod myweb-5wnfl

pod "myweb-5wnfl" deleted

[root@k8s-master rc]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-qs10q 1/1 Running 0 2m

myweb-xmphk 0/1 ErrImagePull 0 3s

[root@k8s-master rc]#
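
The behaviour in step 4 comes down to comparing the desired replica count with the pods that currently exist. Below is a minimal Python sketch of that comparison; it is a simplified model with a hypothetical function name (reconcile), not the controller's actual code.

```python
from typing import List, Tuple


def reconcile(desired: int, existing: List[str]) -> Tuple[str, int]:
    """Return the action needed to get from `existing` pods to `desired`."""
    diff = desired - len(existing)
    if diff > 0:
        return ("create", diff)   # too few pods: start replacements
    if diff < 0:
        return ("delete", -diff)  # too many pods: remove the surplus
    return ("none", 0)            # already at the desired count


# After `kubectl delete pod myweb-5wnfl` only one pod is left,
# while nginx-rc.yaml asks for replicas: 2.
print(reconcile(2, ["myweb-qs10q"]))
```

With one pod left and two desired, the result is a request to create one pod, which corresponds to myweb-xmphk appearing in the listing above.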

#5. How the RC is tied to its pods: labels

View the pod's labels: Labels: app=myweb

[root@k8s-master rc]# kubectl describe pod myweb-xmphk

Name: myweb-xmphk

Namespace: default

Node: k8s-node1/10.0.0.12

Start Time: Wed, 28 Aug 2019 18:44:40 +0800

Labels: app=myweb

View the RC's label selector: app=myweb

[root@k8s-master rc]# kubectl get rc -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

myweb 2 2 1 4m myweb 10.0.0.11:5000/nginx:1.13 app=myweb

[root@k8s-master rc]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/myweb 2 2 1 5m myweb 10.0.0.11:5000/nginx:1.13 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 4h <none>

NAME READY STATUS RESTARTS AGE IP NODE

po/myweb-qs10q 1/1 Running 0 5m 172.16.93.2 k8s-master

po/myweb-xmphk 0/1 ImagePullBackOff 0 2m 172.16.79.2 k8s-node1

[root@k8s-master rc]#

#6. Create a new pod and change its label to myweb; the RC then automatically deletes one existing pod labeled myweb so that the count stays at 2

## 6.1 Create a new pod

[root@k8s-master k8s]# kubectl create -f nginx_pod.yaml

pod "test3" created

## 6.2 Check

[root@k8s-master k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-qs10q 1/1 Running 0 11m

myweb-xmphk 0/1 ImagePullBackOff 0 8m

test3 2/2 Running 0 11s

## 6.3 Edit

[root@k8s-master k8s]# kubectl edit pod test3

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: 2019-08-28T10:53:28Z
  labels:
    app: myweb   ## change this value to myweb

pod "test3" edited

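## 6.4 Check again: the RC deleted myweb-xmphk, the younger of its two original pods (it was also the one that was not Ready)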

[root@k8s-master k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-qs10q 1/1 Running 0 13m

test3 2/2 Running 0 1m

[root@k8s-master k8s]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-qs10q 1/1 Running 0 13m

test3 2/2 Running 0 1m

[root@k8s-master k8s]#
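
Step 6 works because the RC counts every pod whose labels match its selector, no matter who created the pod. The Python sketch below models that with data from the listings above. The function names are hypothetical, and the rule "remove pods that are not Ready first" is an assumption of this sketch that happens to agree with the result shown in 6.4.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based selector: every key/value pair must be present."""
    return all(labels.get(key) == value for key, value in selector.items())


def excess_pods(selector: Dict[str, str], replicas: int,
                pods: List[dict]) -> List[str]:
    """Names of the pods to remove so that only `replicas` remain."""
    owned = [pod for pod in pods if matches(selector, pod["labels"])]
    surplus = len(owned) - replicas
    if surplus <= 0:
        return []
    # Assumption of this sketch: pods that are not Ready go first
    # (False sorts before True; the sort is stable otherwise).
    owned.sort(key=lambda pod: pod["ready"])
    return [pod["name"] for pod in owned[:surplus]]


selector = {"app": "myweb"}
pods = [
    {"name": "myweb-qs10q", "labels": {"app": "myweb"}, "ready": True},
    {"name": "myweb-xmphk", "labels": {"app": "myweb"}, "ready": False},
    {"name": "test3", "labels": {"app": "web"}, "ready": True},
]

print(excess_pods(selector, 2, pods))   # before the edit: nothing to remove

pods[2]["labels"]["app"] = "myweb"      # the change made with kubectl edit
print(excess_pods(selector, 2, pods))   # now one pod is surplus
```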

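#7. Rolling upgrade of an RC

##7.1 Prepare nginx-rc2.yaml (the sed command renames myweb to myweb2; the image tag is also changed to 1.15, as the file below shows)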

[root@k8s-master rc]# cp nginx-rc.yaml nginx-rc2.yaml

[root@k8s-master rc]# sed -i s#myweb#myweb2#g nginx-rc2.yaml

[root@k8s-master rc]# cat nginx-rc2.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb2
spec:
  replicas: 2
  selector:
    app: myweb2
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
        - name: myweb2
          image: 10.0.0.11:5000/nginx:1.15
          ports:
            - containerPort: 80

[root@k8s-master rc]#

##7.2 Push the nginx:1.15 image to the private registry

[root@k8s-master rc]# docker pull nginx:1.15

Trying to pull repository docker.io/library/nginx ...

1.15: Pulling from docker.io/library/nginx

Digest: sha256:23b4dcdf0d34d4a129755fc6f52e1c6e23bb34ea011b315d87e193033bcd1b68

Status: Downloaded newer image for docker.io/nginx:1.15

[root@k8s-master rc]#

[root@k8s-master rc]# docker tag docker.io/nginx:1.15 10.0.0.11:5000/nginx:1.15

[root@k8s-master rc]# docker push 10.0.0.11:5000/nginx:1.15

The push refers to a repository [10.0.0.11:5000/nginx]

332fa54c5886: Pushed

6ba094226eea: Pushed

6270adb5794c: Pushed

1.15: digest: sha256:e770165fef9e36b990882a4083d8ccf5e29e469a8609bb6b2e3b47d9510e2c8d size: 948

##7.3 Start the rolling upgrade

Upgrade myweb using nginx-rc2.yaml

[root@k8s-master rc]# kubectl rolling-update myweb -f nginx-rc2.yaml --update-period=10s

Created myweb2

Scaling up myweb2 from 0 to 2, scaling down myweb from 2 to 0 (keep 2 pods available, don't exceed 3 pods)

Scaling myweb2 up to 1

Scaling myweb down to 1

Scaling myweb2 up to 2

Scaling myweb down to 0

Update succeeded. Deleting myweb

replicationcontroller "myweb" rolling updated to "myweb2"

[root@k8s-master rc]#
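
The order of the "Scaling ..." lines follows from the two limits printed in the log: keep 2 pods available and do not exceed 3 pods. The Python sketch below replays that logic under simplifying assumptions: every new pod becomes Ready immediately, the update period is ignored, and the surge of one extra pod is inferred from the log rather than taken from kubectl's source. The function name rolling_update_steps is hypothetical.

```python
from typing import List


def rolling_update_steps(old: str, new: str, replicas: int,
                         max_surge: int = 1) -> List[str]:
    """Replay the scale-up / scale-down sequence of a rolling update."""
    min_available = replicas           # "keep N pods available"
    max_total = replicas + max_surge   # "don't exceed N+surge pods"
    old_count, new_count = replicas, 0
    steps: List[str] = []

    while new_count < replicas or old_count > 0:
        progressed = False

        # Grow the new RC as far as the total-pod limit allows.
        room = max_total - (old_count + new_count)
        grow = min(room, replicas - new_count)
        if grow > 0:
            new_count += grow
            steps.append(f"Scaling {new} up to {new_count}")
            progressed = True

        # Shrink the old RC without dropping below the availability floor.
        spare = (old_count + new_count) - min_available
        shrink = min(spare, old_count)
        if shrink > 0:
            old_count -= shrink
            steps.append(f"Scaling {old} down to {old_count}")
            progressed = True

        if not progressed:  # no room to move (for example max_surge=0)
            break

    return steps


for line in rolling_update_steps("myweb", "myweb2", replicas=2):
    print(line)
```

For two replicas the sketch yields the same four scaling lines as the kubectl output above.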

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb2-kbsjh 1/1 Running 0 27s

myweb2-tl7fv 1/1 Running 0 37s

test3 2/2 Terminating 0 21m

[root@k8s-master ~]#

##7.4 Roll back

Roll myweb2 back using nginx-rc.yaml

[root@k8s-master rc]# kubectl rolling-update myweb2 -f nginx-rc.yaml --update-period=10s

Created myweb

Scaling up myweb from 0 to 2, scaling down myweb2 from 2 to 0 (keep 2 pods available, don't exceed 3 pods)

Scaling myweb up to 1

Scaling myweb2 down to 1

Scaling myweb up to 2

Scaling myweb2 down to 0

Update succeeded. Deleting myweb2

replicationcontroller "myweb2" rolling updated to "myweb"

[root@k8s-master rc]#

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-p40zr 1/1 Running 0 49s

myweb-zrnb4 1/1 Running 0 59s

[root@k8s-master ~]#

##7.5 The upgrade is interrupted midway

# The upgrade from myweb to myweb2 is interrupted

[root@k8s-master rc]# kubectl rolling-update myweb -f nginx-rc2.yaml --update-period=30s

Created myweb2

Scaling up myweb2 from 0 to 2, scaling down myweb from 2 to 0 (keep 2 pods available, don't exceed 3 pods)

Scaling myweb2 up to 1

^C

[root@k8s-master rc]#

# At this point there are two myweb pods and one myweb2 pod

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-p40zr 1/1 Running 0 3m

myweb-zrnb4 1/1 Running 0 3m

myweb2-lm7f2 1/1 Running 0 12s

[root@k8s-master ~]#

# Roll back to the original myweb; myweb2 is scaled from 1 to 0

[root@k8s-master ~]# kubectl rolling-update myweb myweb2 --update-period=10s --rollback

Setting "myweb" replicas to 2

Continuing update with existing controller myweb.

Scaling up myweb from 2 to 2, scaling down myweb2 from 1 to 0 (keep 2 pods available, don't exceed 3 pods)

Scaling myweb2 down to 0

Update succeeded. Deleting myweb2

replicationcontroller "myweb" rolling updated to "myweb2"

[root@k8s-master ~]#

[root@k8s-master rc]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-p40zr 1/1 Running 0 5m

myweb-zrnb4 1/1 Running 0 5m

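[root@k8s-master rc]#

#1. service

Pods are dynamic: if a pod dies, the replication controller automatically creates a new one to keep the number of pods fixed.

As a result pod IPs keep changing, so external access to the services inside the containers cannot rely on docker port mapping.

A service provides a fixed IP, the cluster IP, and traffic goes through that fixed IP.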

#2. Three kinds of IP

#2.1 The node IP is the IP of the host machine

#2.2 The cluster IP, for example 10.254.0.1

[root@k8s-master rc]# kubectl get all

NAME DESIRED CURRENT READY AGE

rc/myweb 2 2 2 23m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 <none> 443/TCP 5h

NAME READY STATUS RESTARTS AGE

po/myweb-p40zr 1/1 Running 0 23m

po/myweb-zrnb4 1/1 Running 0 23m

[root@k8s-master rc]#

#2.3 The pod IP is the IP of the pod

[root@k8s-master rc]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/myweb 2 2 2 28m myweb 10.0.0.11:5000/nginx:1.13 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 <none> 443/TCP 5h <none>

NAME READY STATUS RESTARTS AGE IP NODE

po/myweb-p40zr 1/1 Running 0 28m 172.16.79.3 k8s-node1

po/myweb-zrnb4 1/1 Running 0 28m 172.16.48.2 k8s-node2

[root@k8s-master rc]#

#3. Create the service

vi nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 30000
      targetPort: 80
  selector:
    app: myweb

[root@k8s-master svc]# kubectl create -f nginx-svc.yaml

service "myweb" created

[root@k8s-master svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb

Type: NodePort

IP: 10.254.6.89

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 172.16.48.2:80,172.16.79.3:80

Session Affinity: None

No events.

[root@k8s-master svc]#

[root@k8s-master svc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

myweb-p40zr 1/1 Running 0 50m 172.16.79.3 k8s-node1

myweb-zrnb4 1/1 Running 0 50m 172.16.48.2 k8s-node2

[root@k8s-master svc]#

#4. Access: all of the following addresses work

This access is provided by the kube-proxy service

http://10.0.0.11:30000/

http://10.0.0.12:30000/

http://10.0.0.13:30000/

#5. Automatic service discovery

[root@k8s-master svc]# kubectl scale rc myweb --replicas=3

replicationcontroller "myweb" scaled

[root@k8s-master svc]# kubctl get pod -o wide

-bash: kubctl: command not found

[root@k8s-master svc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

myweb-p40zr 1/1 Running 0 51m 172.16.79.3 k8s-node1

myweb-q5wrj 1/1 Running 0 16s 172.16.93.2 k8s-master

myweb-zrnb4 1/1 Running 0 51m 172.16.48.2 k8s-node2

[root@k8s-master svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb

Type: NodePort

IP: 10.254.6.89

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 172.16.48.2:80,172.16.79.3:80,172.16.93.2:80

Session Affinity: None

No events.

[root@k8s-master svc]#
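
The Endpoints line grows from two to three entries because the service keeps selecting every pod that carries app=myweb. A small Python sketch of that selection follows, using the pod IPs from the listing above. The function name service_endpoints is hypothetical, and filtering on a ready flag is a simplification of how endpoints are populated.

```python
from typing import Dict, List


def service_endpoints(selector: Dict[str, str], target_port: int,
                      pods: List[dict]) -> List[str]:
    """List ip:port for every Ready pod whose labels match the selector."""
    selected = [
        pod for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]
    return sorted(f"{pod['ip']}:{target_port}" for pod in selected)


pods = [
    {"name": "myweb-p40zr", "ip": "172.16.79.3",
     "labels": {"app": "myweb"}, "ready": True},
    {"name": "myweb-zrnb4", "ip": "172.16.48.2",
     "labels": {"app": "myweb"}, "ready": True},
]
print(service_endpoints({"app": "myweb"}, 80, pods))

# kubectl scale rc myweb --replicas=3 adds a third matching pod.
pods.append({"name": "myweb-q5wrj", "ip": "172.16.93.2",
             "labels": {"app": "myweb"}, "ready": True})
print(service_endpoints({"app": "myweb"}, 80, pods))
```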

[root@k8s-master svc]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 5h

myweb 10.254.6.89 <nodes> 80:30000/TCP 9m

#6. Service load balancing

[root@k8s-master svc]# kubectl get pods

NAME READY STATUS RESTARTS AGE

myweb-p40zr 1/1 Running 0 1h

myweb-q5wrj 1/1 Running 0 11m

myweb-zrnb4 1/1 Running 0 1h

[root@k8s-master svc]# echo pod1>index.html

[root@k8s-master svc]# kubectl cp index.html myweb-p40zr:/usr/share/nginx/html/index.html

/etc/hosts must contain hostname entries for the nodes; otherwise the command fails with an error like the one below

[root@k8s-master svc]# kubectl cp index.html myweb-q5wrj:/usr/share/nginx/html/index.html

Error from server: error dialing backend: dial tcp: lookup k8s-master on 223.5.5.5:53: no such host

[root@k8s-master svc]# echo pod2>index.html

[root@k8s-master svc]# kubectl cp index.html myweb-q5wrj:/usr/share/nginx/html/index.html

[root@k8s-master svc]# echo pod3>index.html

[root@k8s-master svc]# kubectl cp index.html myweb-zrnb4:/usr/share/nginx/html/index.html

[root@k8s-master svc]#

Keep sending requests for a while; the responses come from different pods

[root@k8s-master svc]# curl 10.0.0.12:30000

pod1

[root@k8s-master svc]# curl 10.0.0.12:30000

pod3

[root@k8s-master svc]# curl 10.0.0.12:30000

pod1

[root@k8s-master svc]# curl 10.0.0.12:30000

pod3

[root@k8s-master svc]# curl 10.0.0.12:30000

pod1

[root@k8s-master svc]# curl 10.0.0.12:30000

pod2

[root@k8s-master svc]#
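
The curl results above do not follow a strict rotation. The transcript does not say which kube-proxy mode is in use, so the Python sketch below simply assumes a uniform random pick per connection (which is how kube-proxy's iptables mode selects a backend) and counts how requests would spread over the three endpoints. The names pick_backend and hits are hypothetical.

```python
import random
from collections import Counter
from typing import List


def pick_backend(endpoints: List[str], rng: random.Random) -> str:
    """Choose one endpoint uniformly at random for a new connection."""
    return rng.choice(endpoints)


endpoints = ["172.16.48.2:80", "172.16.79.3:80", "172.16.93.2:80"]
rng = random.Random(0)  # fixed seed so the run is repeatable

hits = Counter(pick_backend(endpoints, rng) for _ in range(3000))
for endpoint in endpoints:
    print(endpoint, hits[endpoint])
```

Over a handful of requests the split can look uneven, as in the six curl calls above; over many requests each pod receives roughly a third.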

#7. The default nodePort range is 30000-32767

##7.1 Change the default port range

[root@k8s-master svc]# vim /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=10000-60000"

[root@k8s-master svc]# systemctl restart kube-apiserver.service

# Where the startup arguments are applied

[root@k8s-master svc]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/apiserver

User=kube

ExecStart=/usr/bin/kube-apiserver $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_ETCD_SERVERS $KUBE_API_ADDRESS $KUBE_API_PORT $KUBELET_PORT $KUBE_ALLOW_PRIV $KUBE_SERVICE_ADDRESSES $KUBE_ADMISSION_CONTROL $KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@k8s-master svc]#

##7.2 Try changing the port to 10000

[root@k8s-master svc]# cat nginx-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 10000
      targetPort: 80
  selector:
    app: myweb

[root@k8s-master svc]# kubectl apply -f nginx-svc.yaml

service "myweb" configured

[root@k8s-master svc]#

[root@k8s-master svc]# kubectl describe svc myweb

Name: myweb

Namespace: default

Labels: <none>

Selector: app=myweb

Type: NodePort

IP: 10.254.6.89

Port: <unset> 80/TCP

NodePort: <unset> 10000/TCP

Endpoints: 172.16.48.2:80,172.16.79.3:80,172.16.93.2:80

Session Affinity: None

No events.

[root@k8s-master svc]#

[root@k8s-master svc]# curl 10.0.0.12:10000

pod2

[root@k8s-master svc]# curl 10.0.0.12:10000

pod3

[root@k8s-master svc]# curl 10.0.0.12:10000

pod1

[root@k8s-master svc]#
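
Before picking a nodePort it can help to check it against the configured range. The Python sketch below parses a value in the same low-high form as --service-node-port-range and tests port 10000 against the default range and against the range set in 7.1. The function name parse_port_range is hypothetical.

```python
def parse_port_range(value: str) -> range:
    """Turn "low-high" into a range that includes both bounds."""
    low_text, high_text = value.split("-")
    low, high = int(low_text), int(high_text)
    if not 0 < low <= high <= 65535:
        raise ValueError(f"invalid port range: {value}")
    return range(low, high + 1)


default_range = parse_port_range("30000-32767")
custom_range = parse_port_range("10000-60000")

print(10000 in default_range)  # False: outside the default range
print(10000 in custom_range)   # True: allowed after the change in 7.1
```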

##7.3 Where the cluster IP address range is specified

[root@k8s-master svc]# cat /etc/kubernetes/apiserver

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
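
As a quick check, the cluster IPs seen earlier should fall inside this range while pod IPs do not. A minimal Python sketch using the standard ipaddress module follows; the helper name in_service_range is hypothetical and the addresses are taken from the outputs above.

```python
import ipaddress


def in_service_range(ip: str, cidr: str = "10.254.0.0/16") -> bool:
    """Report whether `ip` belongs to the service cluster IP range."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(cidr)


# kubernetes service, myweb service, and one pod IP for comparison
for ip in ("10.254.0.1", "10.254.6.89", "172.16.79.3"):
    print(ip, in_service_range(ip))
```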


Original article: https://blog.51cto.com/10983441/2433436